Introduction

- Course Name: Scalable Data Science and Distributed Machine Learning

- Course Acronym: ScaDaMaLe or sds-3.x.

The course is given in several modules.

Expected Reference Readings

Note that you need to be logged into your library with access to these publishers:

- https://learning.oreilly.com/library/view/high-performance-spark/9781491943199/

- https://learning.oreilly.com/library/view/spark-the-definitive/9781491912201/

- https://learning.oreilly.com/library/view/learning-spark-2nd/9781492050032/

- Introduction to Algorithms, Third Edition, Thomas H. Cormen, Charles E. Leiserson, Ronald L. Rivest, and Clifford Stein from

- Reading Materials Provided

Course Sponsors

The course builds on contents developed since 2016 with support from New Zealand's Data Industry. The 2017-2019 versions were academically sponsored by Uppsala University's Inter-Faculty Course grant, Department of Mathematics and The Centre for Interdisciplinary Mathematics and industrially sponsored by databricks, AWS and Swedish data industry via Combient AB, SEB and Combient Mix AB. The 2021-2023 versions were/are academically sponsored by WASP Graduate School and Centre for Interdisciplinary Mathematics, and industrially sponsored by databricks and AWS via databricks University Alliance and Combient Mix AB via industrial mentorships and internships.

Course Instructor

I, Raazesh Sainudiin or Raaz, will be an instructor for the course.

I have

- more than 16 years of academic research experience in applied mathematics and statistics and

- over 8 years of experience in the data industry.

I currently (2022) have an effective joint appointment as:

- Associate Professor of Mathematics with specialisation in Data Science at Department of Mathematics, Uppsala University, Uppsala, Sweden and

- Researching,Developing and Enabling Chair in Mathematical Data Engineering Sciences at Combient Mix AB, Stockholm, Sweden

Quick links on Raaz's background:

What is the Data Science Process

The Data Science Process in one picture

Data Science Process under the Algorithms-Machines-Peoples-Planet Framework

Note that the Data Product that is typically a desired outcome of the Data Science Process can be anything that has commercial value (to help make a livig, colloquially speaking), including a software product, hardware product, personalized medicine for a specific individual, or pasture-raised chicken based on intensive data collection from field experiments in regenerative agriculture, among others.

It is extremely important to be aware of the underlying actual costs and benefits in any Data Science Process under the Algorithms-Machines-Peoples-Planet Framework. We will see this in the sequel at a high level.

What is scalable data science and distributed machine learning?

Scalability merely refers to the ability of the data science process to scale to massive datasets (popularly known as big data).

For this we need distributed fault-tolerant computing typically over large clusters of commodity computers -- the core infrastructure in a public cloud today.

Distributed Machine Learning allows the models in the data science process to be scalably trained and extract value from big data.

What is Data Science?

It is increasingly accepted that Data Science

is an inter-disciplinary field that uses scientific methods, processes, algorithms and systems to extract knowledge and insights from many structural and unstructured data. Data science is related to data mining, machine learning and big data.

Data science is a "concept to unify statistics, data analysis and their related methods" in order to "understand and analyze actual phenomena" with data. It uses techniques and theories drawn from many fields within the context of mathematics, statistics, computer science, domain knowledge and information science. Turing award winner Jim Gray imagined data science as a "fourth paradigm" of science (empirical, theoretical, computational and now data-driven) and asserted that "everything about science is changing because of the impact of information technology" and the data deluge.

Now, let us look at two industrially-informed academic papers that influence the above quote on what is Data Science, but with a view towards the contents and syllabus of this course.

key insights in the above paper

- Data Science is the study of the generalizabile extraction of knowledge from data.

- A common epistemic requirement in assessing whether new knowledge is actionable for decision making is its predictive power, not just its ability to explain the past.

- A data scientist requires an integrated skill set spanning

- mathematics,

- machine learning,

- artificial intelligence,

- statistics,

- databases, and

- optimization,

- along with a deep understanding of the craft of problem formulation to engineer effective solutions.

key insights in the above paper

- ML is concerned with the building of computers that improve automatically through experience

- ML lies at the intersection of computer science and statistics and at the core of artificial intelligence and data science

- Recent progress in ML is due to:

- development of new algorithms and theory

- ongoing explosion in the availability of online data

- availability of low-cost computation (through clusters of commodity hardware in the cloud )

- The adoption of data science and ML methods is leading to more evidence-based decision-making across:

- life sciences (neuroscience, genomics, agriculture, etc. )

- manufacturing

- robotics (autonomous vehicle)

- vision, speech processing, natural language processing

- education

- financial modeling

- policing

- marketing

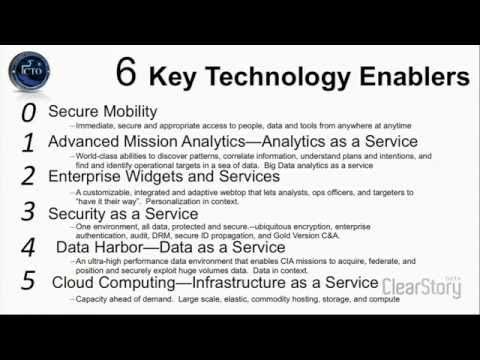

US CIA's CTO Gus Hunt's View on Big Data and Data Science

This is recommended viewing for historical insights into Big Data and Data Science skills - thanks to Snowden's Permanent Record for this pointer. At least watch for a few minutes from about 23 minutes to see what Gus Hunt, the then CTO of the American CIA, thinks about the combination of skills needed for Data Science. Watch the whole talk by Gus Hunt titled CIA's Chief Tech Officer on Big Data: We Try to Collect Everything and Hang Onto It Forever if you have 20 minutes or so to get a better framework for this first lecture on the data science process.

Apache Spark actually grew out of Obama era Big Data straetigic grants to UC Berkeley's AMP Lab (the academic origins of Apark and databricks).

The Gus Hunt's distinction proposed here between enumeration versus modeling is mathematically fundamental. The latter is within the realm of classical probabilistic learning theory (eg. Probabilistic Theory of Pattern Recognition, Devroye, Luc; Györfi, László; Lugosi, Gábor, 1996), including, Deep Learning, Reinforcement Learning, etc.), while the former is partly frameable within a wider mathematical setting known as predicting individual sequences (eg. Prediction, Learning and Games, Cesa-Bianchi, N., & Lugosi, G., 2006).

But what is Data Engineering (including Machine Learning Engineering and Operations) and how does it relate to Data Science?

Data Engineering

There are several views on what a data engineer is supposed to do:

Some views are rather narrow and emphasise division of labour between data engineers and data scientists:

- https://www.oreilly.com/ideas/data-engineering-a-quick-and-simple-definition

- Let's check out what skills a data engineer is expected to have according to the link above.

"Ian Buss, principal solutions architect at Cloudera, notes that data scientists focus on finding new insights from a data set, while data engineers are concerned with the production readiness of that data and all that comes with it: formats, scaling, resilience, security, and more."

What skills do data engineers need? Those “10-30 different big data technologies” Anderson references in “Data engineers vs. data scientists” can fall under numerous areas, such as file formats, > ingestion engines, stream processing, batch processing, batch SQL, data storage, cluster management, transaction databases, web frameworks, data visualizations, and machine learning. And that’s just the tip of the iceberg.

Buss says data engineers should have the following skills and knowledge:

- They need to know Linux and they should be comfortable using the command line.

- They should have experience programming in at least Python or Scala/Java.

- They need to know SQL.

- They need some understanding of distributed systems in general and how they are different from traditional storage and processing systems.

- They need a deep understanding of the ecosystem, including ingestion (e.g. Kafka, Kinesis), processing frameworks (e.g. Spark, Flink) and storage engines (e.g. S3, HDFS, HBase, Kudu). They should know the strengths and weaknesses of each tool and what it's best used for.

- They need to know how to access and process data.

Let's dive deeper into such highly compartmentalised views of data engineers and data scientists and the so-called "machine learning engineers" according the following view:

- https://www.oreilly.com/ideas/data-engineers-vs-data-scientists

Industry will keep evolving, so expect new titles in the future to get new types of jobs done.

The Data Engineering Scientist as "The Middle Way"

Here are some basic axioms that should be self-evident.

- Yes, there are differences in skillsets across humans

- some humans will be better and have inclinations for engineering and others for pure mathematics by nature and nurture

- one human cannot easily be a master of everything needed for innovating a new data-based product or service (very very rarely though this happens)

- Skills can be gained by any human who wants to learn to the extent s/he is able to expend time, energy, etc.

For the Scalable Data Engineering Science Process: towards Production-Ready and Productisable Prototyping we need to allow each data engineer to be more of a data scientist and each data scientist to be more of a data engineer, up to each individual's comfort zones in technical and mathematical/conceptual and time-availability planes, but with some minimal expectations of mutual appreciation.

This course is designed to help you take the first minimal steps towards such a data engineering science process.

In the sequel it will become apparent why a team of data engineering scientists with skills across the conventional (2022) spectrum of data engineer versus data scientist is crucial for Production-Ready and Productisable Prototyping, whose outputs include standard AI products today.

A Brief Tour of Data Science

History of Data Analysis and Where Does "Big Data" Come From?

- A Brief History and Timeline of Data Analysis and Big Data

- https://en.wikipedia.org/wiki/Big_data

- https://whatis.techtarget.com/feature/A-history-and-timeline-of-big-data

- Where does Data Come From?

- Some of the sources of big data.

- online click-streams (a lot of it is recorded but a tiny amount is analyzed):

- record every click

- every ad you view

- every billing event,

- every transaction, every network message, and every fault.

- User-generated content (on web and mobile devices):

- every post that you make on Facebook

- every picture sent on Instagram

- every review you write for Yelp or TripAdvisor

- every tweet you send on Twitter

- every video that you post to YouTube.

- Science (for scientific computing):

- data from various repositories for natural language processing:

- Wikipedia,

- the Library of Congress,

- twitter firehose and google ngrams and digital archives,

- data from scientific instruments/sensors/computers:

- the Large Hadron Collider (more data in a year than all the other data sources combined!)

- genome sequencing data (sequencing cost is dropping much faster than Moore's Law!)

- output of high-performance computers (super-computers) for data fusion, estimation/prediction and exploratory data analysis

- data from various repositories for natural language processing:

- Graphs are also an interesting source of big data (network science).

- social networks (collaborations, followers, fb-friends or other relationships),

- telecommunication networks,

- computer networks,

- road networks

- machine logs:

- by servers around the internet (hundreds of millions of machines out there!)

- internet of things.

- online click-streams (a lot of it is recorded but a tiny amount is analyzed):

Data Science with Cloud Computing and What's Hard about it?

- See Cloud Computing to understand the work-horse for analysing big data at data centers

Cloud computing is the on-demand availability of computer system resources, especially data storage (cloud storage) and computing power, without direct active management by the user. Large clouds often have functions distributed over multiple locations, each location being a data center. Cloud computing relies on sharing of resources to achieve coherence and economies of scale, typically using a "pay-as-you-go" model which can help in reducing capital expenses but may also lead to unexpected operating expenses for unaware users.

-

In fact, if you are logged into

https://*.databricks.com/*you are computing in the cloud! So the computations are actually running in an instance of the hardware available at a data center like the following: -

Here is a data center used by CERN in 2010.

-

What's hard about scalable data science in the cloud?

- To analyse datasets that are big, say more than a few TBs, we need to split the data and put it in several computers that are networked - a typical cloud

- However, as the number of computer nodes in such a network increases, the probability of hardware failure or fault (say the hard-disk or memory or CPU or switch breaking down) also increases and can happen while the computation is being performed

- Therefore for scalable data science, i.e., data science that can scale with the size of the input data by adding more computer nodes, we need fault-tolerant computing and storage framework at the software level to ensure the computations finish even if there are hardware faults.

Here is a recommended light reading on What is "Big Data" -- Understanding the History (18 minutes): - https://towardsdatascience.com/what-is-big-data-understanding-the-history-32078f3b53ce

What should you be able to do at the end of this course?

By following these online interactions in the form of lab/lectures, asking questions, engaging in discussions, doing HOMEWORK assignments and completing the group project, you should be able to:

- Understand the principles of fault-tolerant scalable computing in Spark

- in-memory and generic DAG extensions of Map-reduce

- resilient distributed datasets for fault-tolerance

- skills to process today's big data using state-of-the art techniques in Apache Spark 3.0, in terms of:

- hands-on coding with realistic datasets

- an intuitive understanding of the ideas behind the technology and methods

- pointers to academic papers in the literature, technical blogs and video streams for you to futher your theoretical understanding.

- More concretely, you will be able to:

- Extract, Transform, Load, Interact, Explore and Analyze Data

- Build Scalable Machine Learning Pipelines (or help build them) using Distributed Algorithms and Optimization

- How to keep up?

- This is a fast-changing world.

- Recent videos around Apache Spark are archived here (these videos are a great way to learn the latest happenings in industrial R&D today!):

- What is mathematically stable in the world of 'big data'?

- There is a growing body of work on the analysis of parallel and distributed algorithms, the work-horse of big data and AI.

- We will see the core of this in the theoretical material from Reza Zadeh's course on Distributed Algorithms and Optimisation.

Data Science Process under the Algorithms-Machines-Peoples-Planet Framework

Preparatory perusal at some distance, without necessarily associating oneself with any particular "philosophy", in order to intuitively understand the Data Science Process under the Algorithms-Machines-Peoples-Planet (AMPP) Framework, as formal Decision Problems and Decision Procedures (including any AI/ML algorithm) for consideration before Action using Mathematical Decision Theory.

- Kate Crawford and Vladan Joler, “Anatomy of an AI System: The Amazon Echo As An Anatomical Map of Human Labor, Data and Planetary Resources,” AI Now Institute and Share Lab, (September 7, 2018)

- Browse anatomyof.ai.

- View full-scale map as PDF

- Read Map + Essay as PDF in A3 format (expected time is about 1.5 hours for thorough comprehension)

- MANIFESTO ON THE FUTURE OF SEEDS. Produced by The International Commission on the Future of Food and Agriculture, 36 pages. (2006). Disseminated as PDF from http://lamastex.org/JOE/ManifestoOnFutureOfSeeds*2006.pdf.

- The Joint Operating Environment (JOE) limited to the perspective of The United States:

- Read at least page 3 on "About this Study" https://www.jcs.mil/Portals/36/Documents/Doctrine/concepts/joe*2008.pdf

- Do a deeper dive if interested at https://www.jcs.mil/Doctrine/Joint-Concepts/JOE/ following through Joint Operating Environment, 2010 and Joint Operating Environment 2035, 14 July 2016

- Read at least page 3 on "About this Study" https://www.jcs.mil/Portals/36/Documents/Doctrine/concepts/joe*2008.pdf

- Know the doctrine of Mutual Assured Destruction (MAD). Peruse: https://en.wikipedia.org/wiki/Mutual*assured*destruction

- Peruse https://www.oneearth.org/our-mission/, and more specifically inform yourself by further perusing

- Energy Transition: https://www.oneearth.org/science/energy/

- Nature Conservation: https://www.oneearth.org/science/nature/

- Regenerative Agriculture: https://www.oneearth.org/science/agriculture/

Interactions

When you are involved in a data science process (to "make a living", say) under the AMPP framework, your Algorithms implemented on Machines can have different effects on Peoples (meaning, any living populations of any species, including different human sub-populations, plants, animals, microbes, etc.) and our Planet (soils, climates, oceans, etc.) as long as the Joint Operating Environment is stable to avoid Mutual Assured Destruction.

Discussion 0

- Is it important to be aware of such different effects when building of a data product in some data science process?

- We will limit the discussions on the concrete matter of "Alexa", AWS's voice assistant.

- what are your thoughts on https://anatomyof.ai/?

- We will limit the discussions on the concrete matter of "Alexa", AWS's voice assistant.

This is a primer for our industrial guest speakers from https://www.trase.earth/ who will talk about supply chains.