Introduction

- Course Name: Scalable Data Science and Distributed Machine Learning

- Course Acronym: ScaDaMaLe or sds-3.x.

The course is given in several modules.

Expected Reference Readings

Note that you need to be logged into your library with access to these publishers:

- https://learning.oreilly.com/library/view/high-performance-spark/9781491943199/

- https://learning.oreilly.com/library/view/spark-the-definitive/9781491912201/

- https://learning.oreilly.com/library/view/learning-spark-2nd/9781492050032/

- Introduction to Algorithms, Third Edition, Thomas H. Cormen, Charles E. Leiserson, Ronald L. Rivest, and Clifford Stein from

- Reading Materials Provided

Course Sponsors

The course builds on contents developed since 2016 with support from New Zealand's Data Industry. The 2017-2019 versions were academically sponsored by Uppsala University's Inter-Faculty Course grant, Department of Mathematics and The Centre for Interdisciplinary Mathematics and industrially sponsored by databricks, AWS and Swedish data industry via Combient AB, SEB and Combient Mix AB. The 2021-2023 versions were/are academically sponsored by WASP Graduate School and Centre for Interdisciplinary Mathematics, and industrially sponsored by databricks and AWS via databricks University Alliance and Combient Mix AB via industrial mentorships and internships.

Course Instructor

I, Raazesh Sainudiin or Raaz, will be an instructor for the course.

I have

- more than 16 years of academic research experience in applied mathematics and statistics and

- over 8 years of experience in the data industry.

I currently (2022) have an effective joint appointment as:

- Associate Professor of Mathematics with specialisation in Data Science at Department of Mathematics, Uppsala University, Uppsala, Sweden and

- Researching,Developing and Enabling Chair in Mathematical Data Engineering Sciences at Combient Mix AB, Stockholm, Sweden

Quick links on Raaz's background:

What is the Data Science Process

The Data Science Process in one picture

Data Science Process under the Algorithms-Machines-Peoples-Planet Framework

Note that the Data Product that is typically a desired outcome of the Data Science Process can be anything that has commercial value (to help make a livig, colloquially speaking), including a software product, hardware product, personalized medicine for a specific individual, or pasture-raised chicken based on intensive data collection from field experiments in regenerative agriculture, among others.

It is extremely important to be aware of the underlying actual costs and benefits in any Data Science Process under the Algorithms-Machines-Peoples-Planet Framework. We will see this in the sequel at a high level.

What is scalable data science and distributed machine learning?

Scalability merely refers to the ability of the data science process to scale to massive datasets (popularly known as big data).

For this we need distributed fault-tolerant computing typically over large clusters of commodity computers -- the core infrastructure in a public cloud today.

Distributed Machine Learning allows the models in the data science process to be scalably trained and extract value from big data.

What is Data Science?

It is increasingly accepted that Data Science

is an inter-disciplinary field that uses scientific methods, processes, algorithms and systems to extract knowledge and insights from many structural and unstructured data. Data science is related to data mining, machine learning and big data.

Data science is a "concept to unify statistics, data analysis and their related methods" in order to "understand and analyze actual phenomena" with data. It uses techniques and theories drawn from many fields within the context of mathematics, statistics, computer science, domain knowledge and information science. Turing award winner Jim Gray imagined data science as a "fourth paradigm" of science (empirical, theoretical, computational and now data-driven) and asserted that "everything about science is changing because of the impact of information technology" and the data deluge.

Now, let us look at two industrially-informed academic papers that influence the above quote on what is Data Science, but with a view towards the contents and syllabus of this course.

key insights in the above paper

- Data Science is the study of the generalizabile extraction of knowledge from data.

- A common epistemic requirement in assessing whether new knowledge is actionable for decision making is its predictive power, not just its ability to explain the past.

- A data scientist requires an integrated skill set spanning

- mathematics,

- machine learning,

- artificial intelligence,

- statistics,

- databases, and

- optimization,

- along with a deep understanding of the craft of problem formulation to engineer effective solutions.

key insights in the above paper

- ML is concerned with the building of computers that improve automatically through experience

- ML lies at the intersection of computer science and statistics and at the core of artificial intelligence and data science

- Recent progress in ML is due to:

- development of new algorithms and theory

- ongoing explosion in the availability of online data

- availability of low-cost computation (through clusters of commodity hardware in the cloud )

- The adoption of data science and ML methods is leading to more evidence-based decision-making across:

- life sciences (neuroscience, genomics, agriculture, etc. )

- manufacturing

- robotics (autonomous vehicle)

- vision, speech processing, natural language processing

- education

- financial modeling

- policing

- marketing

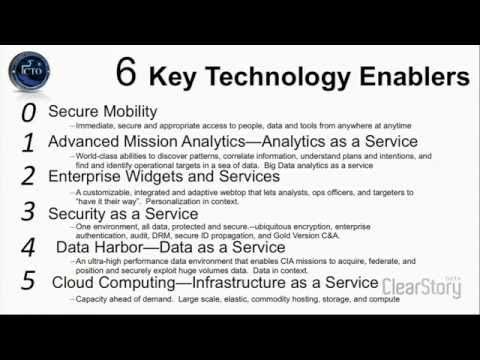

US CIA's CTO Gus Hunt's View on Big Data and Data Science

This is recommended viewing for historical insights into Big Data and Data Science skills - thanks to Snowden's Permanent Record for this pointer. At least watch for a few minutes from about 23 minutes to see what Gus Hunt, the then CTO of the American CIA, thinks about the combination of skills needed for Data Science. Watch the whole talk by Gus Hunt titled CIA's Chief Tech Officer on Big Data: We Try to Collect Everything and Hang Onto It Forever if you have 20 minutes or so to get a better framework for this first lecture on the data science process.

Apache Spark actually grew out of Obama era Big Data straetigic grants to UC Berkeley's AMP Lab (the academic origins of Apark and databricks).

The Gus Hunt's distinction proposed here between enumeration versus modeling is mathematically fundamental. The latter is within the realm of classical probabilistic learning theory (eg. Probabilistic Theory of Pattern Recognition, Devroye, Luc; Györfi, László; Lugosi, Gábor, 1996), including, Deep Learning, Reinforcement Learning, etc.), while the former is partly frameable within a wider mathematical setting known as predicting individual sequences (eg. Prediction, Learning and Games, Cesa-Bianchi, N., & Lugosi, G., 2006).

But what is Data Engineering (including Machine Learning Engineering and Operations) and how does it relate to Data Science?

Data Engineering

There are several views on what a data engineer is supposed to do:

Some views are rather narrow and emphasise division of labour between data engineers and data scientists:

- https://www.oreilly.com/ideas/data-engineering-a-quick-and-simple-definition

- Let's check out what skills a data engineer is expected to have according to the link above.

"Ian Buss, principal solutions architect at Cloudera, notes that data scientists focus on finding new insights from a data set, while data engineers are concerned with the production readiness of that data and all that comes with it: formats, scaling, resilience, security, and more."

What skills do data engineers need? Those “10-30 different big data technologies” Anderson references in “Data engineers vs. data scientists” can fall under numerous areas, such as file formats, > ingestion engines, stream processing, batch processing, batch SQL, data storage, cluster management, transaction databases, web frameworks, data visualizations, and machine learning. And that’s just the tip of the iceberg.

Buss says data engineers should have the following skills and knowledge:

- They need to know Linux and they should be comfortable using the command line.

- They should have experience programming in at least Python or Scala/Java.

- They need to know SQL.

- They need some understanding of distributed systems in general and how they are different from traditional storage and processing systems.

- They need a deep understanding of the ecosystem, including ingestion (e.g. Kafka, Kinesis), processing frameworks (e.g. Spark, Flink) and storage engines (e.g. S3, HDFS, HBase, Kudu). They should know the strengths and weaknesses of each tool and what it's best used for.

- They need to know how to access and process data.

Let's dive deeper into such highly compartmentalised views of data engineers and data scientists and the so-called "machine learning engineers" according the following view:

- https://www.oreilly.com/ideas/data-engineers-vs-data-scientists

Industry will keep evolving, so expect new titles in the future to get new types of jobs done.

The Data Engineering Scientist as "The Middle Way"

Here are some basic axioms that should be self-evident.

- Yes, there are differences in skillsets across humans

- some humans will be better and have inclinations for engineering and others for pure mathematics by nature and nurture

- one human cannot easily be a master of everything needed for innovating a new data-based product or service (very very rarely though this happens)

- Skills can be gained by any human who wants to learn to the extent s/he is able to expend time, energy, etc.

For the Scalable Data Engineering Science Process: towards Production-Ready and Productisable Prototyping we need to allow each data engineer to be more of a data scientist and each data scientist to be more of a data engineer, up to each individual's comfort zones in technical and mathematical/conceptual and time-availability planes, but with some minimal expectations of mutual appreciation.

This course is designed to help you take the first minimal steps towards such a data engineering science process.

In the sequel it will become apparent why a team of data engineering scientists with skills across the conventional (2022) spectrum of data engineer versus data scientist is crucial for Production-Ready and Productisable Prototyping, whose outputs include standard AI products today.

A Brief Tour of Data Science

History of Data Analysis and Where Does "Big Data" Come From?

- A Brief History and Timeline of Data Analysis and Big Data

- https://en.wikipedia.org/wiki/Big_data

- https://whatis.techtarget.com/feature/A-history-and-timeline-of-big-data

- Where does Data Come From?

- Some of the sources of big data.

- online click-streams (a lot of it is recorded but a tiny amount is analyzed):

- record every click

- every ad you view

- every billing event,

- every transaction, every network message, and every fault.

- User-generated content (on web and mobile devices):

- every post that you make on Facebook

- every picture sent on Instagram

- every review you write for Yelp or TripAdvisor

- every tweet you send on Twitter

- every video that you post to YouTube.

- Science (for scientific computing):

- data from various repositories for natural language processing:

- Wikipedia,

- the Library of Congress,

- twitter firehose and google ngrams and digital archives,

- data from scientific instruments/sensors/computers:

- the Large Hadron Collider (more data in a year than all the other data sources combined!)

- genome sequencing data (sequencing cost is dropping much faster than Moore's Law!)

- output of high-performance computers (super-computers) for data fusion, estimation/prediction and exploratory data analysis

- data from various repositories for natural language processing:

- Graphs are also an interesting source of big data (network science).

- social networks (collaborations, followers, fb-friends or other relationships),

- telecommunication networks,

- computer networks,

- road networks

- machine logs:

- by servers around the internet (hundreds of millions of machines out there!)

- internet of things.

- online click-streams (a lot of it is recorded but a tiny amount is analyzed):

Data Science with Cloud Computing and What's Hard about it?

- See Cloud Computing to understand the work-horse for analysing big data at data centers

Cloud computing is the on-demand availability of computer system resources, especially data storage (cloud storage) and computing power, without direct active management by the user. Large clouds often have functions distributed over multiple locations, each location being a data center. Cloud computing relies on sharing of resources to achieve coherence and economies of scale, typically using a "pay-as-you-go" model which can help in reducing capital expenses but may also lead to unexpected operating expenses for unaware users.

-

In fact, if you are logged into

https://*.databricks.com/*you are computing in the cloud! So the computations are actually running in an instance of the hardware available at a data center like the following: -

Here is a data center used by CERN in 2010.

-

What's hard about scalable data science in the cloud?

- To analyse datasets that are big, say more than a few TBs, we need to split the data and put it in several computers that are networked - a typical cloud

- However, as the number of computer nodes in such a network increases, the probability of hardware failure or fault (say the hard-disk or memory or CPU or switch breaking down) also increases and can happen while the computation is being performed

- Therefore for scalable data science, i.e., data science that can scale with the size of the input data by adding more computer nodes, we need fault-tolerant computing and storage framework at the software level to ensure the computations finish even if there are hardware faults.

Here is a recommended light reading on What is "Big Data" -- Understanding the History (18 minutes): - https://towardsdatascience.com/what-is-big-data-understanding-the-history-32078f3b53ce

What should you be able to do at the end of this course?

By following these online interactions in the form of lab/lectures, asking questions, engaging in discussions, doing HOMEWORK assignments and completing the group project, you should be able to:

- Understand the principles of fault-tolerant scalable computing in Spark

- in-memory and generic DAG extensions of Map-reduce

- resilient distributed datasets for fault-tolerance

- skills to process today's big data using state-of-the art techniques in Apache Spark 3.0, in terms of:

- hands-on coding with realistic datasets

- an intuitive understanding of the ideas behind the technology and methods

- pointers to academic papers in the literature, technical blogs and video streams for you to futher your theoretical understanding.

- More concretely, you will be able to:

- Extract, Transform, Load, Interact, Explore and Analyze Data

- Build Scalable Machine Learning Pipelines (or help build them) using Distributed Algorithms and Optimization

- How to keep up?

- This is a fast-changing world.

- Recent videos around Apache Spark are archived here (these videos are a great way to learn the latest happenings in industrial R&D today!):

- What is mathematically stable in the world of 'big data'?

- There is a growing body of work on the analysis of parallel and distributed algorithms, the work-horse of big data and AI.

- We will see the core of this in the theoretical material from Reza Zadeh's course on Distributed Algorithms and Optimisation.

Data Science Process under the Algorithms-Machines-Peoples-Planet Framework

Preparatory perusal at some distance, without necessarily associating oneself with any particular "philosophy", in order to intuitively understand the Data Science Process under the Algorithms-Machines-Peoples-Planet (AMPP) Framework, as formal Decision Problems and Decision Procedures (including any AI/ML algorithm) for consideration before Action using Mathematical Decision Theory.

- Kate Crawford and Vladan Joler, “Anatomy of an AI System: The Amazon Echo As An Anatomical Map of Human Labor, Data and Planetary Resources,” AI Now Institute and Share Lab, (September 7, 2018)

- Browse anatomyof.ai.

- View full-scale map as PDF

- Read Map + Essay as PDF in A3 format (expected time is about 1.5 hours for thorough comprehension)

- MANIFESTO ON THE FUTURE OF SEEDS. Produced by The International Commission on the Future of Food and Agriculture, 36 pages. (2006). Disseminated as PDF from http://lamastex.org/JOE/ManifestoOnFutureOfSeeds*2006.pdf.

- The Joint Operating Environment (JOE) limited to the perspective of The United States:

- Read at least page 3 on "About this Study" https://www.jcs.mil/Portals/36/Documents/Doctrine/concepts/joe*2008.pdf

- Do a deeper dive if interested at https://www.jcs.mil/Doctrine/Joint-Concepts/JOE/ following through Joint Operating Environment, 2010 and Joint Operating Environment 2035, 14 July 2016

- Read at least page 3 on "About this Study" https://www.jcs.mil/Portals/36/Documents/Doctrine/concepts/joe*2008.pdf

- Know the doctrine of Mutual Assured Destruction (MAD). Peruse: https://en.wikipedia.org/wiki/Mutual*assured*destruction

- Peruse https://www.oneearth.org/our-mission/, and more specifically inform yourself by further perusing

- Energy Transition: https://www.oneearth.org/science/energy/

- Nature Conservation: https://www.oneearth.org/science/nature/

- Regenerative Agriculture: https://www.oneearth.org/science/agriculture/

Interactions

When you are involved in a data science process (to "make a living", say) under the AMPP framework, your Algorithms implemented on Machines can have different effects on Peoples (meaning, any living populations of any species, including different human sub-populations, plants, animals, microbes, etc.) and our Planet (soils, climates, oceans, etc.) as long as the Joint Operating Environment is stable to avoid Mutual Assured Destruction.

Discussion 0

- Is it important to be aware of such different effects when building of a data product in some data science process?

- We will limit the discussions on the concrete matter of "Alexa", AWS's voice assistant.

- what are your thoughts on https://anatomyof.ai/?

- We will limit the discussions on the concrete matter of "Alexa", AWS's voice assistant.

This is a primer for our industrial guest speakers from https://www.trase.earth/ who will talk about supply chains.

Why Apache Spark?

- Apache Spark: A Unified Engine for Big Data Processing By Matei Zaharia, Reynold S. Xin, Patrick Wendell, Tathagata Das, Michael Armbrust, Ankur Dave, Xiangrui Meng, Josh Rosen, Shivaram Venkataraman, Michael J. Franklin, Ali Ghodsi, Joseph Gonzalez, Scott Shenker, Ion Stoica Communications of the ACM, Vol. 59 No. 11, Pages 56-65 10.1145/2934664

Right-click the above image-link, open in a new tab and watch the video (4 minutes) or read about it in the Communications of the ACM in the frame below or from the link above.

Key Insights from Apache Spark: A Unified Engine for Big Data Processing

- A simple programming model can capture streaming, batch, and interactive workloads and enable new applications that combine them.

- Apache Spark applications range from finance to scientific data processing and combine libraries for SQL, machine learning, and graphs.

- In six years, Apache Spark has grown to 1,000 contributors and thousands of deployments.

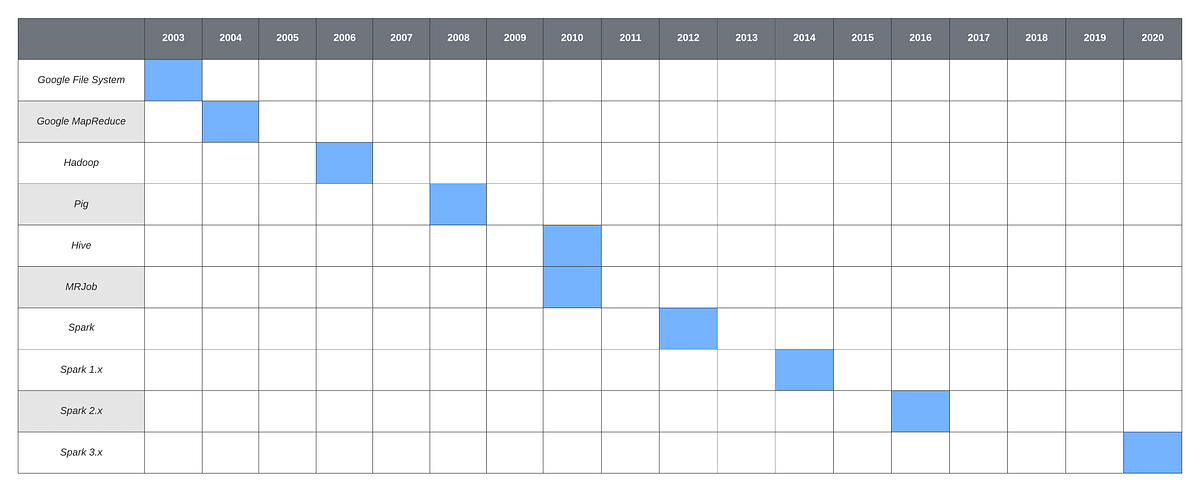

Spark 3.0 is the latest version now (20200918) and it should be seen as the latest step in the evolution of tools in the big data ecosystem as summarized in https://towardsdatascience.com/what-is-big-data-understanding-the-history-32078f3b53ce:

Alternatives to Apache Spark

There are several alternatives to Apache Spark, but none of them have the penetration and community of Spark as of 2021.

For real-time streaming operations Apache Flink is competitive. See Apache Flink vs Spark – Will one overtake the other? for a July 2021 comparison. Most scalable data science and engineering problems faced by several major industries in Sweden today are routinely solved using tools in the ecosystem around Apache Spark. Therefore, we will focus on Apache Spark here which still holds the world record for 10TB or 10,000 GB sort by Alibaba cloud in 06/17/2020.

Several alternatives to Apache Spark exist. See the following for verious commercial options: - https://sourceforge.net/software/product/Apache-Spark/alternatives

Read the following for a comparison of three popular frameworks in 2022 for distributed computing:

Here, we will focus on Apache Spark as it is still a popular framework for distributed computing with a rich ecosystem around it.

The big data problem

Hardware, distributing work, handling failed and slow machines

Let us recall and appreciate the following:

- The Big Data Problem

- Many routine problems today involve dealing with "big data", operationally, this is a dataset that is larger than a few TBs and thus won't fit into a single commodity computer like a powerful desktop or laptop computer.

- Hardware for Big Data

- The best single commodity computer can not handle big data as it has limited hard-disk and memory

- Thus, we need to break the data up into lots of commodity computers that are networked together via cables to communicate instructions and data between them - this can be thought of as a cloud

- How to distribute work across a cluster of commodity machines?

- We need a software-level framework for this.

- How to deal with failures or slow machines?

- We also need a software-level framework for this.

Key Papers

- Key Historical Milestones

- 1956-1979: Stanford, MIT, CMU, and other universities develop set/list operations in LISP, Prolog, and other languages for parallel processing

- 2004: READ: Google's MapReduce: Simplified Data Processing on Large Clusters, by Jeffrey Dean and Sanjay Ghemawat

- 2006: Yahoo!'s Apache Hadoop, originating from the Yahoo!’s Nutch Project, Doug Cutting - wikipedia

- 2009: Cloud computing with Amazon Web Services Elastic MapReduce, a Hadoop version modified for Amazon Elastic Cloud Computing (EC2) and Amazon Simple Storage System (S3), including support for Apache Hive and Pig.

- 2010: READ: The Hadoop Distributed File System, by Konstantin Shvachko, Hairong Kuang, Sanjay Radia, and Robert Chansler. IEEE MSST

- Apache Spark Core Papers

- 2012: READ: Resilient Distributed Datasets: A Fault-Tolerant Abstraction for In-Memory Cluster Computing, Matei Zaharia, Mosharaf Chowdhury, Tathagata Das, Ankur Dave, Justin Ma, Murphy McCauley, Michael J. Franklin, Scott Shenker and Ion Stoica. NSDI

- 2016: Apache Spark: A Unified Engine for Big Data Processing By Matei Zaharia, Reynold S. Xin, Patrick Wendell, Tathagata Das, Michael Armbrust, Ankur Dave, Xiangrui Meng, Josh Rosen, Shivaram Venkataraman, Michael J. Franklin, Ali Ghodsi, Joseph Gonzalez, Scott Shenker, Ion Stoica , Communications of the ACM, Vol. 59 No. 11, Pages 56-65, 10.1145/2934664

- A lot has happened since 2016 to improve efficiency of Spark and embed more into the big data ecosystem

- More research papers on Spark are available from here and we will refer to them in the sequel as needed:

MapReduce and Apache Spark.

MapReduce as we will see shortly in action is a framework for distributed fault-tolerant computing over a fault-tolerant distributed file-system, such as Google File System or open-source Hadoop for storage.

- Unfortunately, Map Reduce is bounded by Disk I/O and can be slow

- especially when doing a sequence of MapReduce operations requirinr multiple Disk I/O operations

- Apache Spark can use Memory instead of Disk to speed-up MapReduce Operations

- Spark Versus MapReduce - the speed-up is orders of magnitude faster

- SUMMARY

- Spark uses memory instead of disk alone and is thus fater than Hadoop MapReduce

- Spark's resilience abstraction is by RDD (resilient distributed dataset)

- RDDs can be recovered upon failures from their lineage graphs, the recipes to make them starting from raw data

- Spark supports a lot more than MapReduce, including streaming, interactive in-memory querying, etc.

- Spark demonstrated an unprecedented sort of 1 petabyte (1,000 terabytes) worth of data in 234 minutes running on 190 Amazon EC2 instances (in 2015).

- Spark expertise corresponds to the highest Median Salary in the US (~ 150K)

Login to databricks

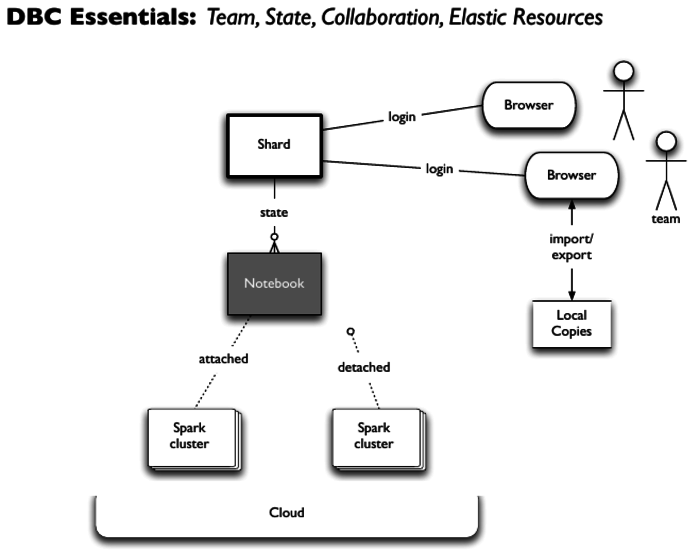

We will use databricks community edition and later on the databricks project shard granted for this course under the databricks university alliance with cloud computing grants from databricks for waived DBU units and AWS.

Please go here for a relaxed and detailed-enough tour (later):

databricks community edition

First obtain a free Obtain a databricks community edition account at https://community.cloud.databricks.com by following these instructions: https://youtu.be/FH2KDhaFkZg.

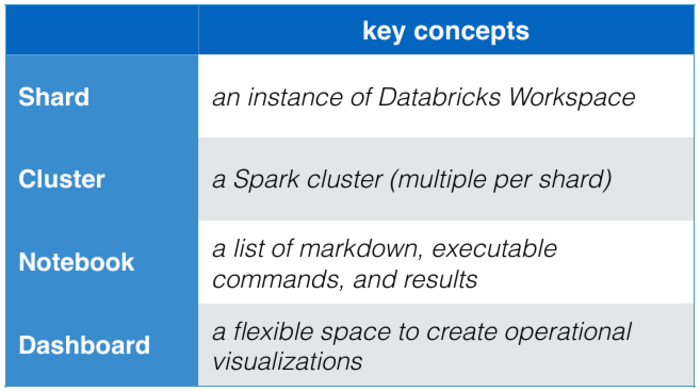

Let's get an overview of the databricks managed cloud for processing big data with Apache Spark.

DBC Essentials: Team, State, Collaboration, Elastic Resources in one picture

You Should All Have databricks community edition account by now! and have successfully logged in to it.

Import Course Content Now!

Two Steps:

- Create a folder named

scalable-data-sciencein yourWorkspace(NO Typos due to hard-coding of paths in the sequel!)

- Import the following

.dbcarchives from the following URL intoWorkspace/scalable-data-sciencefolder you just created:- https://github.com/lamastex/scalable-data-science/raw/master/dbcArchives/latest/

- start with the first file for now and import more as needed:

Open-Source Computing Environment

We will mainly use docker for local development on our own infrastructure, such as a laptop.

docker

You can install and learn docker on your laptop; https://docs.docker.com/get-started/.

In a Terminal, after installing docker, type:

$ docker run --rm -it lamastex/dockerdev:latest

The above docker container has already downloaded the needed sources for a very basic Spark developer environment using this Dockerfile which builds on this spark3x.Dockerfile. Having the source for a dockerfile is helpful when one needs to adapt it for the future.

You can test your docker installation by launching the spark-shell inside the docker container, compute 1+1 and :quit from spark-shell and exit from docker.

root@7715366c86a4:~# spark-shell

22/08/18 12:24:26 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Spark context Web UI available at http://7715366c86a4:4040

Spark context available as 'sc' (master = local[*], app id = local-1660825477089).

Spark session available as 'spark'.

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_\ version 3.1.2

/_/

Using Scala version 2.12.10 (OpenJDK 64-Bit Server VM, Java 1.8.0_312)

Type in expressions to have them evaluated.

Type :help for more information.

scala> 1+1

res0: Int = 2

scala> :quit

root@c6ced3348c97:~# exit

zeppelin

You may also use the open source project https://zeppelin.apache.org/ through the provided zeppelin spark docker container lamastex/zeppelin-spark after successfully installing docker in your laptop.

Several companies use databricks notebooks but several others use zeppelin or jupyter or other notebook servers. Therefore, it is good to be familiar with a couple notebook servers and formats.

Please go here for a relaxed and detailed-enough tour (later):

Multi-lingual Notebooks

Write Spark code for processing your data in notebooks.

Note that there are several open-sourced notebook servers including:

For comparison between pairs of notebook servers see:

- https://datasciencenotebook.org/compare/zeppelin/databricks

- https://datasciencenotebook.org/compare/jupyter/databricks

Here, we are mainly focused on using databricks notebooks due to its effeciently managed engineering layers over AWS (or Azure or GPC public clouds).

This allows us to focus on the concepts and models and get to implement codes with ease.

NOTE: By now you should have already opened this notebook and attached it to a cluster:

- that you started in the Community Edition of databricks

- or that you started in the zeppelin spark docker container similar to lamastex/zeppelin-spark

Databricks Notebook

Next we delve into the mechanics of working with databricks notebooks.

- But many of the details also apply to other notebook environments such as zeppelin with minor differences.

Some Zeppelin caveats

- Since many companies use other notebook servers we will make remarks on minor differences between databricks and zeppelin notebook formats, in case you want to try zeppelin server on your laptop or your own infrastructure.

- Note that one can run Jupyter within Zeppelin. We use Zeppelin as opposed to Jupyter here because Zeppelin comes with a much broader collection of default interpreters for today's big data ecosystem and we wanted to only have one other open-source notebook server to complement learning in the currently (2022) closed-source notebook server of databricks.

Notebooks can be written in Python, Scala, R, or SQL.

- This is a Scala notebook - which is indicated next to the title above by

(Scala). - One can choose the default language of the notebook when it is created.

Creating a new Notebook

- Select Create > Notebook.

- Enter the name of the notebook, the language (Python, Scala, R or SQL) for the notebook, and a cluster to run it on.

- From Left Menu you can go to 'Workspace' and then Click the tiangle on the right side of a folder to open the folder menu and create notebook in the folder.

Cloning a Notebook

- You can clone a notebook to create a copy of it, for example if you want to edit or run an Example notebook like this one.

- Click File > Clone in the notebook context bar above.

- Enter a new name and location for your notebook. If Access Control is enabled, you can only clone to folders that you have Manage permissions on.

Clone Or Import This Notebook

-

From the File menu at the top left of this notebook, choose Clone or click Import Notebook on the top right. This will allow you to interactively execute code cells as you proceed through the notebook.

-

Enter a name and a desired location for your cloned notebook (i.e. Perhaps clone to your own user directory or the "Shared" directory.)

-

Navigate to the location you selected (e.g. click Menu > Workspace >

Your cloned location)

Attach the Notebook to a cluster

- A Cluster is a group of machines which can run commands in cells.

- Check the upper left corner of your notebook to see if it is Attached or Detached.

- If Detached, click on the right arrow and select a cluster to attach your notebook to.

- If there is no running cluster, create one.

Deep-dive into databricks notebooks

Let's take a deeper dive into a databricks notebook next.

Cells are units that make up notebooks

Cells each have a type - including scala, python, sql, R, markdown, filesystem, and shell.

- While cells default to the type of the Notebook, other cell types are supported as well.

- This cell is in markdown and is used for documentation. Markdown is a simple text formatting syntax.

Beware of minor differences across notebook formats

- Different notebook formats use different tags for the same language interpreter. But they are quite easy to remember.

- For example in zeppelin

%sparkand%pysparkare used instead of databricks'%scalaand%pythonto denote spark and pyspark cells. - However

%mdand%share used for markdown and shell in both notebook formats. ***

- For example in zeppelin

Create and Edit a New Markdown Cell in this Notebook

NOTE: You will be writing your group project report as databricks notebooks, therefore it is important to use markdown effectively.

-

When you mouse between cells, a + sign will pop up in the center that you can click on to create a new cell.

-

Type

%md Hello, world!into your new cell (%mdindicates the cell is markdown). -

Click out of the cell or double-click to see the cell contents update.

Running a cell in your notebook.

- Press Shift+Enter when in the cell to run it and proceed to the next cell.

- The cells contents should update.

- NOTE: Cells are not automatically run each time you open it.

- Instead, Previous results from running a cell are saved and displayed.

- Alternately, press Ctrl+Enter when in a cell to run it, but not proceed to the next cell.

You Try Now! Just double-click the cell below, modify the text following %md and press Ctrl+Enter to evaluate it and see it's mark-down'd output.

> %md Hello, world!

Hello, world!

Markdown Cell Tips

- To change a non-markdown cell to markdown, add

%mdto very start of the cell. - After updating the contents of a markdown cell, click out of the cell to update the formatted contents of a markdown cell.

- To edit an existing markdown cell, doubleclick the cell.

Learn more about markdown:

Note that there are flavours or minor variants and enhancements of markdown, including those specific to databricks, github, pandoc, etc.

It will be future-proof to remain in the syntactic zone of pure markdown (at the intersection of various flavours) as much as possible and go with pandoc-compatible style if choices are necessary. ***

Run a Scala Cell

- Run the following scala cell.

- Note: There is no need for any special indicator (such as

%md) necessary to create a Scala cell in a Scala notebook. - You know it is a scala notebook because of the

(Scala)appended to the name of this notebook. - Make sure the cell contents updates before moving on.

- Press Shift+Enter when in the cell to run it and proceed to the next cell.

- The cells contents should update.

- Alternately, press Ctrl+Enter when in a cell to run it, but not proceed to the next cell.

- characters following

//are comments in scala. - RECALL: In zeppelin

%sparkis used instead of%scala. ***

1+1

res0: Int = 2

println(System.currentTimeMillis) // press Ctrl+Enter to evaluate println that prints its argument as a line

1664271875320

1+1

res2: Int = 2

Spark is written in Scala, but ...

For this reason Scala will be the primary language for this course is Scala.

However, let us use the best language for the job! as each cell can be written in a specific language in the same notebook. Such multi-lingual notebooks are the norm in any realistic data science process today!

The beginning of each cells has a language type if it is not the default language of the notebook. Such cell-specific language types include the following with the prefix %:

-

%scalafor Spark Scala,%sparkin zeppelin

-

%pyfor PySpark,%pysparkin zeppelin

-

%rfor R, -

%sqlfor SQL, -

%shfor BASH SHELL and -

%mdfor markdown. -

While cells default to the language type of the Notebook (scala, python, r or sql), other cell types are supported as well in a cell-specific manner.

-

For example, Python Notebooks can contain python, sql, markdown, and even scala cells. This lets you write notebooks that do use multiple languages.

-

This cell is in markdown as it begins with

%mdand is used for documentation purposes.

Thus, all language-typed cells can be created in any notebook, regardless of the the default language of the notebook itself, provided interpreter support exists for the languages.

Cross-language cells can be used to mix commands from other languages.

Examples:

print("For example, this is a scala notebook, but we can use %py to run python commands inline.")

// you can be explicit about the language even if the notebook's default language is the same

println("We can access Scala like this.")

We can access Scala like this.

Command line cells can be used to work with local files on the Spark driver node. * Start a cell with %sh to run a command line command

# This is a command line cell. Commands you write here will be executed as if they were run on the command line.

# For example, in this cell we access the help pages for the bash shell.

ls

whoami

Notebooks can be run from other notebooks using %run

This is commonly used to import functions you defined in other notebooks.

- Syntax in databricks:

%run /full/path/to/notebook - Syntax in zeppelin:

z.runNote("id")

Displaying in notebooks

Notebooks are great for displaying data in the input cell immediately in the result or output cell. This is often an effective web-based REPL environment to prototype for the data science process.

To display a dataframe or tabular data named myDataFrame:

- Notebook-agnostic syntax in Spark for textual display:

myDataFrame.show() - Syntax in databricks:

display(myDataFrame) - Syntax in zeppelin:

z.show(myDataFrame)

One can also display more sophisticated outputs involving HTML and D3 using display_HTML in databricks or %html in zeppelin. See respective documentation for details. Zeppelin is extremely flexible via helium when it comes to creating interactive visualisations of results from a data science process pipeline.

Further Pointers

Here are some useful links to bookmark as you will need to use them for Reference.

These links provide a relaxed and detailed-enough tour (that you may need to take later):

- databricks

- zeppelin (if you want an open-source notebook server deployed on your own computing infrastructure)

Load Datasets into Databricks

Simply, hit run Runa all cells in this Notebook to load all the core datasets we will be using in the first couple of modules in this course.

It can take about 2-3 minutes once your cluster starts.

Note This notebook should be skipped in docker-compose zeppelin instance of the course.

wget https://github.com/lamastex/sds-datasets/raw/master/datasets-sds.zip

ls -al *

unzip datasets-sds.zip

pwd

rm -r dbfs:///datasets/sds/

res0: Boolean = true

mkdirs dbfs:///datasets/sds/

res1: Boolean = true

cp -r file:///databricks/driver/datasets-sds/ dbfs:///datasets/sds/

res2: Boolean = true

ls /datasets/sds/

| path | name | size | modificationTime |

|---|---|---|---|

| dbfs:/datasets/sds/Rdatasets/ | Rdatasets/ | 0.0 | 1.664296004294e12 |

| dbfs:/datasets/sds/cs100/ | cs100/ | 0.0 | 1.664296004295e12 |

| dbfs:/datasets/sds/flights/ | flights/ | 0.0 | 1.664296004295e12 |

| dbfs:/datasets/sds/mnist-digits/ | mnist-digits/ | 0.0 | 1.664296004295e12 |

| dbfs:/datasets/sds/people/ | people/ | 0.0 | 1.664296004295e12 |

| dbfs:/datasets/sds/people.json/ | people.json/ | 0.0 | 1.664296004295e12 |

| dbfs:/datasets/sds/power-plant/ | power-plant/ | 0.0 | 1.664296004295e12 |

| dbfs:/datasets/sds/social_media_usage.csv/ | social_media_usage.csv/ | 0.0 | 1.664296004295e12 |

| dbfs:/datasets/sds/songs/ | songs/ | 0.0 | 1.664296004295e12 |

| dbfs:/datasets/sds/souall.txt.gz | souall.txt.gz | 3576868.0 | 1.664296002e12 |

| dbfs:/datasets/sds/spark-examples/ | spark-examples/ | 0.0 | 1.664296004295e12 |

| dbfs:/datasets/sds/weather/ | weather/ | 0.0 | 1.664296004295e12 |

| dbfs:/datasets/sds/wikipedia-datasets/ | wikipedia-datasets/ | 0.0 | 1.664296004295e12 |

sc.textFile("/datasets/sds/souall.txt.gz").count // should be 22258

res4: Long = 22258

Now you have loaded the core datasets for the course.

Scala Crash Course

Here we take a minimalist approach to learning just enough Scala, the language that Apache Spark is written in, to be able to use Spark effectively.

In the sequel we can learn more Scala concepts as they arise. This learning can be done by chasing the pointers in this crash course for a detailed deeper dive on your own time.

There are two basic ways in which we can learn Scala:

1. Learn Scala in a notebook environment

For convenience we use databricks Scala notebooks like this one here.

You can learn Scala locally on your own computer using Scala REPL (and Spark using Spark-Shell).

2. Learn Scala in your own computer

The most easy way to get Scala locally is through sbt, the Scala Build Tool. You can also use an IDE that integrates sbt.

See: https://docs.scala-lang.org/getting-started/index.html to set up Scala in your own computer.

Software Engineering NOTE: You can use docker pull lamastex/dockerdev:spark3x and use the docker container for local development based on minimal pointers here: https://github.com/lamastex/dockerDev. Being able to modularise and reuse codes modularly requires the building of libraries which inturn requires building them locally with tools such as sbt or mvn for instance. You can also set-up modern IDEs like https://code.visualstudio.com/ or https://www.jetbrains.com/idea/, etc. for this.

Scala Resources

You will not be learning scala systematically and thoroughly in this course. You will learn to use Scala by doing various Spark jobs.

If you are interested in learning scala properly, then there are various resources, including:

- scala-lang.org is the core Scala resource. Bookmark the following three links:

- tour-of-scala - Bite-sized introductions to core language features.

- we will go through the tour in a hurry now as some Scala familiarity is needed immediately.

- scala-book - An online book introducing the main language features

- you are expected to use this resource to figure out Scala as needed.

- scala-cheatsheet - A handy cheatsheet covering the basics of Scala syntax.

- visual-scala-reference - This guide collects some of the most common functions of the Scala Programming Language and explain them conceptual and graphically in a simple way.

- tour-of-scala - Bite-sized introductions to core language features.

- Online Resources, including:

- Books

The main sources for the following content are (you are encouraged to read them for more background):

- Martin Oderski's Scala by example

- Scala crash course by Holden Karau

- Darren's brief introduction to scala and breeze for statistical computing

What is Scala?

"Scala smoothly integrates object-oriented and functional programming. It is designed to express common programming patterns in a concise, elegant, and type-safe way." by Matrin Odersky.

- High-level language for the Java Virtual Machine (JVM)

- Object oriented + functional programming

- Statically typed

- Comparable in speed to Java

- Type inference saves us from having to write explicit types most of the time Interoperates with Java

- Can use any Java class (inherit from, etc.)

- Can be called from Java code

See a quick tour here:

Why Scala?

- Spark was originally written in Scala, which allows concise function syntax and interactive use

- Spark APIs for other languages include:

- Java API for standalone use

- Python API added to reach a wider user community of programmes

- R API added more recently to reach a wider community of data analyststs

- Unfortunately, Python and R APIs are generally behind Spark's native Scala (for eg. GraphX is only available in Scala currently and datasets are only available in Scala as of 20200918).

- See Darren Wilkinson's 11 reasons for scala as a platform for statistical computing and data science. It is embedded in-place below for your convenience.

Scala Versus Python for Apache Spark

Read: https://www.projectpro.io/article/scala-vs-python-for-apache-spark/213 for an interesting overview.

Learn Scala in Notebook Environment

Run a Scala Cell

- Run the following scala cell.

- Note: There is no need for any special indicator (such as

%md) necessary to create a Scala cell in a Scala notebook. - You know it is a scala notebook because of the

(Scala)appended to the name of this notebook. - Make sure the cell contents updates before moving on.

- Press Shift+Enter when in the cell to run it and proceed to the next cell.

- The cells contents should update.

- Alternately, press Ctrl+Enter when in a cell to run it, but not proceed to the next cell.

- characters following

//are comments in scala. ***

1+1

res0: Int = 2

println(System.currentTimeMillis) // press Ctrl+Enter to evaluate println that prints its argument as a line

1664271977227

Let's get our hands dirty in Scala

We will go through the following programming concepts and tasks by building on https://docs.scala-lang.org/tour/basics.html.

- Scala Types

- Expressions and Printing

- Naming and Assignments

- Functions and Methods in Scala

- Classes and Case Classes

- Methods and Tab-completion

- Objects and Traits

- Collections in Scala and Type Hierarchy

- Functional Programming and MapReduce

- Lazy Evaluations and Recursions

Remark: You need to take a computer science course (from CourseEra, for example) to properly learn Scala. Here, we will learn to use Scala by example to accomplish our data science tasks at hand. You can learn more Scala as needed from various sources pointed out above in Scala Resources.

Scala Types

In Scala, all values have a type, including numerical values and functions. The diagram below illustrates a subset of the type hierarchy.

For now, notice some common types we will be usinf including Int, String, Double, Unit, Boolean, List, etc. For more details see https://docs.scala-lang.org/tour/unified-types.html. We will return to this at the end of the notebook after seeing a brief tour of Scala now.

Expressions

Expressions are computable statements such as the 1+1 we have seen before.

1+1

res2: Int = 2

We can print the output of a computed or evaluated expressions as a line using println:

println(1+1) // printing 2

2

println("hej hej!") // printing a string

hej hej!

Naming and Assignments

value and variable as val and var

You can name the results of expressions using keywords val and var.

Let us assign the integer value 5 to x as follows:

val x : Int = 5 // <Ctrl+Enter> to declare a value x to be integer 5.

x: Int = 5

x is a named result and it is a value since we used the keyword val when naming it.

Scala is statically typed, but it uses built-in type inference machinery to automatically figure out that x is an integer or Int type as follows. Let's declare a value x to be Int 5 next without explictly using Int.

val x = 5 // <Ctrl+Enter> to declare a value x as Int 5 (type automatically inferred)

x: Int = 5

Let's declare x as a Double or double-precision floating-point type using decimal such as 5.0 (a digit has to follow the decimal point!)

val x = 5.0 // <Ctrl+Enter> to declare a value x as Double 5

x: Double = 5.0

Alternatively, we can assign x as a Double explicitly. Note that the decimal point is not needed in this case due to explicit typing as Double.

val x : Double = 5 // <Ctrl+Enter> to declare a value x as Double 5 (type automatically inferred)

x: Double = 5.0

Next note that labels need to be declared on first use. We have declared x to be a val which is short for value. This makes x immutable (cannot be changed).

Thus, x cannot be just re-assigned, as the following code illustrates in the resulting error: ... error: reassignment to val.

//x = 10 // uncomment and <Ctrl+Enter> to try to reassign val x to 10

Scala allows declaration of mutable variables as well using var, as follows:

var y = 2 // <Shift+Enter> to declare a variable y to be integer 2 and go to next cell

y: Int = 2

y = 3 // <Shift+Enter> to change the value of y to 3

y: Int = 3

y = y+1 // adds 1 to y

y: Int = 4

y += 2 // adds 2 to y

println(y) // the var y is 6 now

6

Blocks

Just combine expressions by surrounding them with { and } called a block.

println({

val x = 1+1

x+2 // expression in last line is returned for the block

})// prints 4

4

println({ val x=22; x+2})

24

Functions

Functions are expressions that have parameters. A function takes arguments as input and returns expressions as output.

A function can be nameless or anonymous and simply return an output from a given input. For example, the following annymous function returns the square of the input integer.

(x: Int) => x*x

res10: Int => Int = $Lambda$5453/989605864@15857286

On the left of => is a list of parameters with name and type. On the right is an expression involving the parameters.

You can also name functions:

val multiplyByItself = (x: Int) => x*x

multiplyByItself: Int => Int = $Lambda$5454/1749476144@41de911a

println(multiplyByItself(10))

100

A function can have no parameters:

val howManyAmI = () => 1

howManyAmI: () => Int = $Lambda$5457/1325610144@72b8fd99

println(howManyAmI()) // 1

1

A function can have more than one parameter:

val multiplyTheseTwoIntegers = (a: Int, b: Int) => a*b

multiplyTheseTwoIntegers: (Int, Int) => Int = $Lambda$5458/1327542156@7adcf443

println(multiplyTheseTwoIntegers(2,4)) // 8

8

Methods

Methods are very similar to functions, but a few key differences exist.

Methods use the def keyword followed by a name, parameter list(s), a return type, and a body.

def square(x: Int): Int = x*x // <Shitf+Enter> to define a function named square

square: (x: Int)Int

Note that the return type Int is specified after the parameter list and a :.

square(5) // <Shitf+Enter> to call this function on argument 5

res14: Int = 25

val y = 3 // <Shitf+Enter> make val y as Int 3

y: Int = 3

square(y) // <Shitf+Enter> to call the function on val y of the right argument type Int

res15: Int = 9

val x = 5.0 // let x be Double 5.0

x: Double = 5.0

//square(x) // <Shift+Enter> to call the function on val x of type Double will give type mismatch error

def square(x: Int): Int = { // <Shitf+Enter> to declare function in a block

val answer = x*x

answer // the last line of the function block is returned

}

square: (x: Int)Int

square(5000) // <Shift+Enter> to call the function

res17: Int = 25000000

// <Shift+Enter> to define function with input and output type as String

def announceAndEmit(text: String): String =

{

println(text)

text // the last line of the function block is returned

}

announceAndEmit: (text: String)String

Scala has a return keyword but it is rarely used as the expression in the last line of the multi-line block is the method's return value.

// <Ctrl+Enter> to call function which prints as line and returns as String

announceAndEmit("roger roger")

roger roger

res18: String = roger roger

A method can have output expressions involving multiple parameter lists:

def multiplyAndTranslate(x: Int, y: Int)(translateBy: Int): Int = (x * y) + translateBy

multiplyAndTranslate: (x: Int, y: Int)(translateBy: Int)Int

println(multiplyAndTranslate(2, 3)(4)) // (2*3)+4 = 10

10

A method can have no parameter lists at all:

def time: Long = System.currentTimeMillis

time: Long

println("Current time in milliseconds is " + time)

Current time in milliseconds is 1664271993372

println("Current time in milliseconds is " + time)

Current time in milliseconds is 1664271993681

Classes

The class keyword followed by the name and constructor parameters is used to define a class.

class Box(h: Int, w: Int, d: Int) {

def printVolume(): Unit = println(h*w*d)

}

defined class Box

- The return type of the method

printVolumeisUnit. - When the return type is

Unitit indicates that there is nothing meaningful to return, similar tovoidin Java and C, but with a difference. - Because every Scala expression must have some value, there is actually a singleton value of type

Unit, written()and carrying no information.

We can make an instance of the class with the new keyword.

val my1Cube = new Box(1,1,1)

my1Cube: Box = Box@2f0a334c

And call the method on the instance.

my1Cube.printVolume() // 1

1

Our named instance my1Cube of the Box class is immutable due to val.

You can have mutable instances of the class using var.

var myVaryingCuboid = new Box(1,3,2)

myVaryingCuboid: Box = Box@41dfc388

myVaryingCuboid.printVolume()

6

myVaryingCuboid = new Box(1,1,1)

myVaryingCuboid: Box = Box@3b1898db

myVaryingCuboid.printVolume()

1

See https://docs.scala-lang.org/tour/classes.html for more details as needed.

Case Classes

Scala has a special type of class called a case class that can be defined with the case class keyword.

Unlike classes, whose instances are compared by reference, instances of case classes are immutable by default and compared by value. This makes them useful for defining rows of typed values in Spark.

case class Point(x: Int, y: Int, z: Int)

defined class Point

Case classes can be instantiated without the new keyword.

val point = Point(1, 2, 3)

val anotherPoint = Point(1, 2, 3)

val yetAnotherPoint = Point(2, 2, 2)

point: Point = Point(1,2,3)

anotherPoint: Point = Point(1,2,3)

yetAnotherPoint: Point = Point(2,2,2)

Instances of case classes are compared by value and not by reference.

if (point == anotherPoint) {

println(point + " and " + anotherPoint + " are the same.")

} else {

println(point + " and " + anotherPoint + " are different.")

} // Point(1,2,3) and Point(1,2,3) are the same.

if (point == yetAnotherPoint) {

println(point + " and " + yetAnotherPoint + " are the same.")

} else {

println(point + " and " + yetAnotherPoint + " are different.")

} // Point(1,2,3) and Point(2,2,2) are different.

Point(1,2,3) and Point(1,2,3) are the same.

Point(1,2,3) and Point(2,2,2) are different.

By contrast, instances of classes are compared by reference.

myVaryingCuboid.printVolume() // should be 1 x 1 x 1

1

my1Cube.printVolume() // should be 1 x 1 x 1

1

if (myVaryingCuboid == my1Cube) {

println("myVaryingCuboid and my1Cube are the same.")

} else {

println("myVaryingCuboid and my1Cube are different.")

} // they are compared by reference and are not the same.

myVaryingCuboid and my1Cube are different.

More about case classes here: https://docs.scala-lang.org/tour/case-classes.html.

Methods and Tab-completion

Many methods of a class can be accessed by ..

val s = "hi" // <Ctrl+Enter> to declare val s to String "hi"

s: String = hi

You can place the cursor after . following a declared object and find out the methods available for it as shown in the image below.

You Try doing this next.

//s. // place cursor after the '.' and press Tab to see all available methods for s

For example,

- scroll down to

containsand double-click on it. - This should lead to

s.containsin your cell. - Now add an argument String to see if

scontains the argument, for example, try:s.contains("f")s.contains("")ands.contains("i")

//s // <Shift-Enter> recall the value of String s

s.contains("f") // <Shift-Enter> returns Boolean false since s does not contain the string "f"

res31: Boolean = false

s.contains("") // <Shift-Enter> returns Boolean true since s contains the empty string ""

res32: Boolean = true

s.contains("i") // <Ctrl+Enter> returns Boolean true since s contains the string "i"

res33: Boolean = true

Objects

Objects are single instances of their own definitions using the object keyword. You can think of them as singletons of their own classes.

object IdGenerator {

private var currentId = 0

def make(): Int = {

currentId += 1

currentId

}

}

defined object IdGenerator

You can access an object through its name:

val newId: Int = IdGenerator.make()

val newerId: Int = IdGenerator.make()

newId: Int = 1

newerId: Int = 2

println(newId) // 1

println(newerId) // 2

1

2

For details see https://docs.scala-lang.org/tour/singleton-objects.html

Traits

Traits are abstract data types containing certain fields and methods. They can be defined using the trait keyword.

In Scala inheritance, a class can only extend one other class, but it can extend multiple traits.

trait Greeter {

def greet(name: String): Unit

}

defined trait Greeter

Traits can have default implementations also.

trait Greeter {

def greet(name: String): Unit =

println("Hello, " + name + "!")

}

defined trait Greeter

You can extend traits with the extends keyword and override an implementation with the override keyword:

class DefaultGreeter extends Greeter

class SwedishGreeter extends Greeter {

override def greet(name: String): Unit = {

println("Hej hej, " + name + "!")

}

}

class CustomizableGreeter(prefix: String, postfix: String) extends Greeter {

override def greet(name: String): Unit = {

println(prefix + name + postfix)

}

}

defined class DefaultGreeter

defined class SwedishGreeter

defined class CustomizableGreeter

Instantiate the classes.

val greeter = new DefaultGreeter()

val swedishGreeter = new SwedishGreeter()

val customGreeter = new CustomizableGreeter("How are you, ", "?")

greeter: DefaultGreeter = DefaultGreeter@737bf119

swedishGreeter: SwedishGreeter = SwedishGreeter@6bdaad03

customGreeter: CustomizableGreeter = CustomizableGreeter@4564c74a

Call the greet method in each case.

greeter.greet("Scala developer") // Hello, Scala developer!

swedishGreeter.greet("Scala developer") // Hej hej, Scala developer!

customGreeter.greet("Scala developer") // How are you, Scala developer?

Hello, Scala developer!

Hej hej, Scala developer!

How are you, Scala developer?

A class can also be made to extend multiple traits.

For more details see: https://docs.scala-lang.org/tour/traits.html.

Main Method

The main method is the entry point of a Scala program.

The Java Virtual Machine requires a main method, named main, that takes an array of strings as its only argument.

Using an object, you can define the main method as follows:

object Main {

def main(args: Array[String]): Unit =

println("Hello, Scala developer!")

}

defined object Main

What I try not do while learning a new language?

- I don't immediately try to ask questions like: how can I do this particular variation of some small thing I just learned so I can use patterns I am used to from another language I am hooked-on right now?

- first go through the detailed Scala Tour on your own and then through the 50 odd lessons in the Scala Book

- then return to 1. and ask detailed cross-language comparison questions by diving deep as needed with the source and scala docs as needed (google or duck-duck-go search!).

Scala Crash Course Continued

Recall!

Scala Resources

You will not be learning scala systematically and thoroughly in this course. You will learn to use Scala by doing various Spark jobs.

If you are interested in learning scala properly, then there are various resources, including:

- scala-lang.org is the core Scala resource. Bookmark the following three links:

- tour-of-scala - Bite-sized introductions to core language features.

- we will go through the tour in a hurry now as some Scala familiarity is needed immediately.

- scala-book - An online book introducing the main language features

- you are expected to use this resource to figure out Scala as needed.

- scala-cheatsheet - A handy cheatsheet covering the basics of Scala syntax.

- visual-scala-reference - This guide collects some of the most common functions of the Scala Programming Language and explain them conceptual and graphically in a simple way.

- tour-of-scala - Bite-sized introductions to core language features.

- Online Resources, including:

- Books

The main sources for the following content are (you are encouraged to read them for more background):

Let's continue getting our hands dirty in Scala

We will go through the remaining programming concepts and tasks by building on https://docs.scala-lang.org/tour/basics.html.

- Scala Types

- Expressions and Printing

- Naming and Assignments

- Functions and Methods in Scala

- Classes and Case Classes

- Methods and Tab-completion

- Objects and Traits

- Collections in Scala and Type Hierarchy

- Functional Programming and MapReduce

- Lazy Evaluations and Recursions

Remark: You need to take a computer science course (from CourseEra, for example) to properly learn Scala. Here, we will learn to use Scala by example to accomplish our data science tasks at hand. You can learn more Scala as needed from various sources pointed out above in Scala Resources.

Scala Type Hierarchy

In Scala, all values have a type, including numerical values and functions. The diagram below illustrates a subset of the type hierarchy.

For now, notice some common types we will be usinf including Int, String, Double, Unit, Boolean, List, etc.

Let us take a closer look at Scala Type Hierarchy now here:

Scala Collections

Familiarize yourself with the main Scala collections classes here:

List

Lists are one of the most basic data structures.

There are several other Scala collections and we will introduce them as needed. The other most common ones are Vector, Array and Seq and the ArrayBuffer.

For details on list see: - https://docs.scala-lang.org/overviews/scala-book/list-class.html

// <Ctrl+Enter> to declare (an immutable) val lst as List of Int's 1,2,3

val lst = List(1, 2, 3)

lst: List[Int] = List(1, 2, 3)

Vectors

The Vector class is an indexed, immutable sequence. The “indexed” part of the description means that you can access Vector elements very rapidly by their index value, such as accessing listOfPeople(999999).

In general, except for the difference that Vector is indexed and List is not, the two classes work the same, so we’ll run through these examples quickly.

For details see: - https://docs.scala-lang.org/overviews/scala-book/vector-class.html

val vec = Vector(1,2,3)

vec: scala.collection.immutable.Vector[Int] = Vector(1, 2, 3)

vec(0) // access first element with index 0

res0: Int = 1

Arrays, Sequences and Tuples

See https://www.scala-lang.org/api/current/scala/collection/index.html for docs.

val arr = Array(1,2,3) // <Shift-Enter> to declare an Array

arr: Array[Int] = Array(1, 2, 3)

val seq = Seq(1,2,3) // <Shift-Enter> to declare a Seq

seq: Seq[Int] = List(1, 2, 3)

A tuple is a neat class that gives you a simple way to store heterogeneous (different) items in the same container. We will use tuples for key-value pairs in Spark.

See https://docs.scala-lang.org/overviews/scala-book/tuples.html

val myTuple = ('a',1) // a 2-tuple

myTuple: (Char, Int) = (a,1)

myTuple._1 // accessing the first element of the tuple. NOTE index starts at 1 not 0 for tuples

res1: Char = a

myTuple._2 // accessing the second element of the tuple

res2: Int = 1

Functional Programming and MapReduce

"Functional programming is a style of programming that emphasizes writing applications using only pure functions and immutable values. As Alvin Alexander wrote in Functional Programming, Simplified, rather than using that description, it can be helpful to say that functional programmers have an extremely strong desire to see their code as math — to see the combination of their functions as a series of algebraic equations. In that regard, you could say that functional programmers like to think of themselves as mathematicians. That’s the driving desire that leads them to use only pure functions and immutable values, because that’s what you use in algebra and other forms of math."

See https://docs.scala-lang.org/overviews/scala-book/functional-programming.html for short lessons in functional programming.

We will apply functions for processing elements of a scala collection to quickly demonstrate functional programming.

Five ways of adding 1

The first four use anonymous functions and the last one uses a named method.

- explicit version:

(x: Int) => x + 1

- type-inferred more intuitive version:

x => x + 1

- placeholder syntax (each argument must be used exactly once):

_ + 1

- type-inferred more intuitive version with code-block for larger function body:

x => {

// body is a block of code

val integerToAdd = 1

x + integerToAdd

}

- as methods using

def:

def addOne(x: Int): Int = x + 1

Now, let's do some functional programming over scala collection (List) using some of their methods: map, filter and reduce. In the end we will write our first mapReduce program!

For more details see:

map

See: https://superruzafa.github.io/visual-scala-reference/map/

trait Collection[A] {

def map[B](f: (A) => B): Collection[B]

}

map creates a collection using as elements the results obtained from applying the function f to each element of this collection.

// <Shift+Enter> to map each value x of lst with x+10 to return a new List(11, 12, 13)

lst.map(x => x + 10)

res3: List[Int] = List(11, 12, 13)

// <Shift+Enter> for the same as above using place-holder syntax

lst.map( _ + 10)

res4: List[Int] = List(11, 12, 13)

filter

See: https://superruzafa.github.io/visual-scala-reference/filter/

trait Collection[A] {

def filter(p: (A) => Boolean): Collection[A]

}

filter creates a collection with those elements that satisfy the predicate p and discarding the rest.

// <Shift+Enter> to return a new List(1, 3) after filtering x's from lst if (x % 2 == 1) is true

lst.filter(x => (x % 2 == 1) )

res5: List[Int] = List(1, 3)

// <Shift+Enter> for the same as above using place-holder syntax

lst.filter( _ % 2 == 1 )

res6: List[Int] = List(1, 3)

reduce

See: https://superruzafa.github.io/visual-scala-reference/reduce/

trait Collection[A] {

def reduce(op: (A, A) => A): A

}

reduce applies the binary operator op to pairs of elements in this collection until the final result is calculated.

// <Shift+Enter> to use reduce to add elements of lst two at a time to return Int 6

lst.reduce( (x, y) => x + y )

res7: Int = 6

// <Ctrl+Enter> for the same as above but using place-holder syntax

lst.reduce( _ + _ )

res8: Int = 6

Let's combine map and reduce programs above to find the sum of after 10 has been added to every element of the original List lst as follows:

lst.map(x => x+10)

.reduce((x,y) => x+y) // <Ctrl-Enter> to get Int 36 = sum(1+10,2+10,3+10)

res9: Int = 36

Exercise in Functional Programming

You should spend an hour or so going through the Functional Programming Section of the Scala Book:

Scala lets you write code in an object-oriented programming (OOP) style, a functional programming (FP) style, and even in a hybrid style, using both approaches in combination. This book assumes that you’re coming to Scala from an OOP language like Java, C++, or C#, so outside of covering Scala classes, there aren’t any special sections about OOP in this book. But because the FP style is still relatively new to many developers, we’ll provide a brief introduction to Scala’s support for FP in the next several lessons.

Functional programming is a style of programming that emphasizes writing applications using only pure functions and immutable values. As Alvin Alexander wrote in Functional Programming, Simplified, rather than using that description, it can be helpful to say that functional programmers have an extremely strong desire to see their code as math — to see the combination of their functions as a series of algebraic equations. In that regard, you could say that functional programmers like to think of themselves as mathematicians. That’s the driving desire that leads them to use only pure functions and immutable values, because that’s what you use in algebra and other forms of math.

Functional programming is a large topic, and there’s no simple way to condense the entire topic into this little book, but in the following lessons we’ll give you a taste of FP, and show some of the tools Scala provides for developers to write functional code.

There are lots of methods in Scala Collections. And much more in this scalable language. See for example http://docs.scala-lang.org/cheatsheets/index.html.

Lazy Evaluation

Another powerful programming concept we will need is lazy evaluation -- a form of delayed evaluation. So the value of an expression that is lazily evaluated is only available when it is actually needed.

This is to be contrasted with eager evaluation that we have seen so far -- an expression is immediately evaluated.

val eagerImmutableInt = 1 // eagerly evaluated as 1

eagerImmutableInt: Int = 1

var eagerMutableInt = 2 // eagerly evaluated as 2

eagerMutableInt: Int = 2

Let's demonstrate lazy evaluation using a getTime method and the keyword lazy.

import java.util.Calendar

import java.util.Calendar

lazy val lazyImmutableTime = Calendar.getInstance.getTime // lazily defined and not evaluated immediately

lazyImmutableTime: java.util.Date = <lazy>

val eagerImmutableTime = Calendar.getInstance.getTime // egaerly evaluated immediately

eagerImmutableTime: java.util.Date = Tue Sep 27 09:49:49 UTC 2022

println(lazyImmutableTime) // evaluated when actully needed by println

Tue Sep 27 09:49:49 UTC 2022

println(eagerImmutableTime) // prints what was already evaluated eagerly

Tue Sep 27 09:49:49 UTC 2022

def lazyDefinedInt = 5 // you can also use method to lazily define

lazyDefinedInt: Int

lazyDefinedInt // only evaluated now

res12: Int = 5

See https://www.scala-exercises.org/scalatutorial/lazyevaluation for more details including the following example with StringBuilder.

val builder = new StringBuilder //built-in

builder: StringBuilder =

builder.result()

res13: String = ""

val x = { builder += 'x'; 1 } // eagerly evaluates x as 1 after appending 'x' to builder. NOTE: ';' is used to separate multiple expressions on the same line

x: Int = 1

builder.result()

res14: String = x

x

res15: Int = 1

builder.result() // calling x again should not append x again to builder

res16: String = x

lazy val y = { builder += 'y'; 2 } // lazily evaluate y later when it is called

y: Int = <lazy>

builder.result() // builder should remain unchanged

res17: String = x

def z = { builder += 'z'; 3 } // lazily evaluate z later when the method is called

z: Int

builder.result() // builder should remain unchanged

res18: String = x

What should builder.result() be after the following arithmetic expression involving x,y and z is evaluated?

z + y + x + z + y + x

res19: Int = 12

Lazy Evaluation Exercise - You try Now!

Understand why the output above is what it is!

- Why is

zdifferent in its appearance in the finalbuilderstring when compared toxandyas we evaluated?

z + y + x + z + y + x

// putting it all together

val builder = new StringBuilder

val x = { builder += 'x'; 1 }

lazy val y = { builder += 'y'; 2 }

def z = { builder += 'z'; 3 }

// comment next line after different summands to understand difference between val, lazy val and def

z + y + x + z + y + x

builder.result()

builder: StringBuilder = xzyz

x: Int = 1

y: Int = <lazy>

z: Int

res20: String = xzyz

Why Lazy?

Imagine a more complex expression involving the evaluation of millions of values. Lazy evaluation will allow us to actually compute with big data when it may become impossible to hold all the values in memory. This is exactly what Apache Spark does as we will see.

Recursions

Recursion is a powerful framework when a function calls another function, including itself, until some terminal condition is reached.

Here we want to distinguish between two ways of implementing a recursion using a simple example of factorial.

Recall that for any natural number \(n\), its factorial is denoted and defined as follows:

\[ n! := n \times (n-1) \times (n-2) \times \cdots \times 2 \times 1 \]

which has the following recursive expression:

\[ n! = n*(n-1)! , , \qquad 0! = 1 \]

Let us implement it using two approaches: a naive approach that can run out of memory and another tail-recursive approach that uses constant memory. Read https://www.scala-exercises.org/scalatutorial/tailrecursion for details.

def factorialNaive(n: Int): Int =

if (n == 0) 1 else n * factorialNaive(n - 1)

factorialNaive: (n: Int)Int

factorialNaive(4)

res21: Int = 24

When factorialNaive(4) was evaluated above the following steps were actually done:

factorial(4)

if (4 == 0) 1 else 4 * factorial(4 - 1)

4 * factorial(3)

4 * (3 * factorial(2))

4 * (3 * (2 * factorial(1)))

4 * (3 * (2 * (1 * factorial(0)))

4 * (3 * (2 * (1 * 1)))

24

Notice how we add one more element to our expression at each recursive call. Our expressions becomes bigger and bigger until we end by reducing it to the final value. So the final expression given by a directed acyclic graph (DAG) of the pairwise multiplications given by the right-branching binary tree, whose leaves are input integers and internal nodes are the bianry * operator, can get very large when the input n is large.

Tail recursion is a sophisticated way of implementing certain recursions so that memory requirements can be kept constant, as opposed to naive recursions.

That difference in the rewriting rules actually translates directly to a difference in the actual execution on a computer. In fact, it turns out that if you have a recursive function that calls itself as its last action, then you can reuse the stack frame of that function. This is called tail recursion.

And by applying that trick, a tail recursive function can execute in constant stack space, so it's really just another formulation of an iterative process. We could say a tail recursive function is the functional form of a loop, and it executes just as efficiently as a loop.

Implementation of tail recursion in the Exercise below uses Scala annotation, which is a way to associate meta-information with definitions. In our case, the annotation @tailrec ensures that a method is indeed tail-recursive. See the last link to understand how memory requirements can be kept constant in tail recursions.

We mainly want you to know that tail recursions are an important functional programming concept.

Tail Recursion Exercise - You Try Now

- Uncomment the next three cells

- Replace

???in the next cell with the correct values to make this a tail recursion for factorial.

/*

import scala.annotation.tailrec

// replace ??? with the right values to make this a tail recursion for factorial

def factorialTail(n: Int): Int = {

@tailrec

def iter(x: Int, result: Int): Int =

if ( x == ???) result

else iter(x - 1, result * x)

iter( n, ??? )

}

*/

//factorialTail(3) //shouldBe 6

//factorialTail(4) //shouldBe 24

Functional Programming is a vast subject and we are merely covering the fewest core ideas to get started with Apache Spark asap.

We will return to more concepts as we need them in the sequel.

Introduction to Spark

Spark Essentials: RDDs, Transformations and Actions

- This introductory notebook describes how to get started running Spark (Scala) code in Notebooks.