Introduction to Spark Streaming

Spark Streaming is an extension of the core Spark API that enables scalable, high-throughput, fault-tolerant stream processing of live data streams.

This is a walk-through of excerpts from the following resources:

Overview


Data can be ingested from many sources, such as:

- Kafka

- Flume

- Twitter Streaming and REST APIs

- ZeroMQ

- Amazon Kinesis

- TCP sockets

- etc.

The data can then be processed using complex algorithms expressed with high-level functions like map, reduce, join and window.
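To make the window operation mentioned above concrete, here is a minimal plain-Scala sketch (no Spark involved; WindowSketch, windowedCounts and the batch contents are hypothetical). It assumes a window of 3 batches that slides forward by 1 batch and counts the words inside each complete window, which is roughly what a windowed DStream operation such as reduceByKeyAndWindow computes when the window length and slide are multiples of the batch interval.

```scala
object WindowSketch {
  // Each inner Seq stands for one micro-batch of words (hypothetical data).
  type Batch = Seq[String]

  // Count words across all batches that fall inside one window.
  def countWords(window: Seq[Batch]): Map[String, Int] =
    window.flatten.groupBy(identity).map { case (w, ws) => w -> ws.size }

  // Slide a window of `windowLen` batches forward `slide` batches at a time
  // and count the words in every complete window (partial windows are dropped).
  def windowedCounts(batches: Seq[Batch], windowLen: Int, slide: Int): List[Map[String, Int]] = {
    require(windowLen > 0 && slide > 0, "window length and slide must be positive")
    batches.sliding(windowLen, slide).filter(_.size == windowLen).map(countWords).toList
  }
}
```

In Spark Streaming itself the same idea would be written along the lines of pairs.reduceByKeyAndWindow(_ + _, Seconds(3), Seconds(1)) on a DStream of (word, 1) pairs with a 1-second batch interval.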

Finally, processed data can be pushed out to filesystems, databases, and live dashboards. In fact, you can apply Spark's machine learning and graph processing algorithms to data streams.

Internally, it works as follows:

- Spark Streaming receives live input data streams and divides the data into batches.

- The Spark engine then processes these batches.

- The output is the final stream of results, also in batches.
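The batching step can be illustrated without Spark. The sketch below is a toy under stated assumptions (Event, toBatches and processBatches are hypothetical names): it buckets timestamped events into fixed batch intervals and then processes each batch independently. Unlike Spark Streaming, which produces a (possibly empty) batch for every interval, it simply skips intervals that received no events, and it assumes non-negative timestamps.

```scala
object MicroBatchSketch {
  // A hypothetical event: arrival time in milliseconds plus a payload.
  final case class Event(timeMs: Long, payload: String)

  // Assign each event to the interval [k * intervalMs, (k + 1) * intervalMs)
  // and return the non-empty batches ordered by their start time.
  def toBatches(events: Seq[Event], intervalMs: Long): Seq[(Long, Seq[String])] = {
    require(intervalMs > 0, "batch interval must be positive")
    events
      .groupBy(e => (e.timeMs / intervalMs) * intervalMs) // assumes timeMs >= 0
      .toSeq
      .sortBy(_._1)
      .map { case (start, es) => start -> es.sortBy(_.timeMs).map(_.payload) }
  }

  // "Process" each batch on its own, here simply by counting its events.
  def processBatches(batches: Seq[(Long, Seq[String])]): Seq[(Long, Int)] =
    batches.map { case (start, payloads) => start -> payloads.size }
}
```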

Discretized Streams (DStreams)

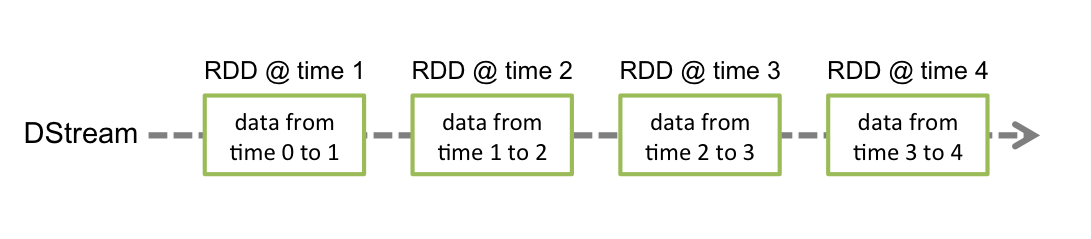

Discretized Stream, or DStream, is the basic abstraction provided by Spark Streaming. It represents a continuous stream of data, either the input data stream received from a source (e.g., Kafka, Flume, or Kinesis) or the processed data stream generated by transforming the input stream. Internally, a DStream is represented by a continuous series of RDDs, Spark's abstraction of an immutable, distributed dataset (see the Spark Programming Guide for more details). Each RDD in a DStream contains data from a certain interval, as shown in the figure.
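A rough mental model of a DStream, written in plain Scala: an ordered series of (batch time, batch) pairs, where each batch stands in for one RDD. ToyDStream is hypothetical and is not Spark's API; it only shows that transforming a DStream means applying the same function to every batch, so the derived stream keeps the same batch times.

```scala
object ToyDStreamSketch {
  // Hypothetical toy: each (time, Vector) pair plays the role of one RDD.
  final case class ToyDStream[T](batches: Vector[(Long, Vector[T])]) {
    // Apply a batch-level function to every batch (in the spirit of DStream.transform).
    def transform[U](f: Vector[T] => Vector[U]): ToyDStream[U] =
      ToyDStream(batches.map { case (time, batch) => time -> f(batch) })

    // Element-wise operations are just transform applied batch by batch.
    def map[U](f: T => U): ToyDStream[U] = transform[U](_.map(f))
    def filter(p: T => Boolean): ToyDStream[T] = transform[T](_.filter(p))
  }
}
```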

This guide shows you how to start writing Spark Streaming programs with DStreams. You can write Spark Streaming programs in Scala, Java or Python (the Python API was introduced in Spark 1.2), all of which are presented in this guide.

Here, we will focus on Spark Streaming in Scala.

Spark Streaming is a near-real-time, micro-batch stream processing engine, as opposed to other real-time stream processing frameworks like Apache Storm. In Spark Streaming, "near-real-time" typically means latencies on the order of seconds rather than milliseconds.
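A back-of-the-envelope model helps explain the "order of seconds" figure. Assuming no scheduling delay, a record that arrives just after a batch boundary waits a full batch interval before its batch is formed, and then waits for that batch to be processed. The object and method names below are hypothetical; the stability check reflects the usual guidance that each batch should be processed within the batch interval, otherwise batches queue up.

```scala
object LatencySketch {
  // Rough upper bound (ms) between a record arriving and its batch's results
  // being available, ignoring scheduling and queuing delays.
  def worstCaseLatencyMs(batchIntervalMs: Long, processingMs: Long): Long =
    batchIntervalMs + processingMs

  // A micro-batch job only keeps up if each batch finishes within the interval.
  def isStable(batchIntervalMs: Long, processingMs: Long): Boolean =
    processingMs <= batchIntervalMs
}
```

With the 1-second batches used in the example below, even a quickly processed batch puts worst-case latency above one second under this model.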

Three Quick Examples

Before we go into the details of how to write your own Spark Streaming program, let us take a quick look at what a simple Spark Streaming program looks like.

We will pick the first two examples from the Databricks notebooks below.

Spark Streaming Hello World Examples

These are adapted from several publicly available Databricks notebooks:

- Streaming Word Count (Scala)

- Tweet Collector for Capturing Live Tweets

- Twitter Hashtag Count (Scala)

Other examples we won't try here:

- Kinesis Word Count (Scala)

- Kafka Word Count (Scala)

- FileStream Word Count (Python)

- etc.

Streaming Word Count

This is a hello-world example of Spark Streaming that counts words in 1-second batches of streaming data.

It uses an in-memory string generator as a dummy source for streaming data.

Setting up a streaming source

Configurations

Configurations that control the streaming app in the notebook:

// === Configuration to control the flow of the application ===
val stopActiveContext = true
// "true"  = stop any StreamingContext that is already running;
// "false" = don't stop it and let it run undisturbed, but then your latest code may not be used

// === Configurations for Spark Streaming ===
val batchIntervalSeconds = 1
val eventsPerSecond = 1000 // For the dummy source

// Verify that the attached Spark cluster is 2.0.0+
// (createOrReplaceTempView, used in creatingFunc below, was added in Spark 2.0).
// Parse the major version instead of stripping the dots, which throws on versions like "2.4.0-SNAPSHOT".
val sparkMajorVersion = sc.version.split("\\.").head.takeWhile(_.isDigit).toInt
require(sparkMajorVersion >= 2, "Spark 2.0.0+ is required to run this notebook. Please attach it to a Spark 2.0.0+ cluster.")

Imports

Import all the necessary libraries. If you see any errors here, make sure that the necessary libraries are attached to the cluster.

import org.apache.spark._

import org.apache.spark.storage._

import org.apache.spark.streaming._


Setup: Define the function that sets up the StreamingContext

In this section we will do two things:

- Define a custom receiver as the dummy source (no need to understand this in detail). This custom receiver emits lines that end with a random number between 0 and 9 and read:

I am a dummy source 2
I am a dummy source 8
...

- Define the function that creates and sets up the StreamingContext.
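For reference, the line format above can be reproduced in plain Scala without a receiver. This is a hypothetical helper (dummyLines), not part of the notebook; it takes a seeded Random so that the output is reproducible, whereas the receiver below uses the shared scala.util.Random object.

```scala
import scala.util.Random

object DummyLinesSketch {
  // Produce `n` lines in the same format as the DummySource receiver:
  // "I am a dummy source " followed by a random digit 0-9.
  def dummyLines(n: Int, rng: Random): Seq[String] =
    Seq.fill(n)("I am a dummy source " + rng.nextInt(10))
}
```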

// This is the dummy source implemented as a custom receiver. No need to fully understand this.
import scala.util.Random
import org.apache.spark.streaming.receiver._

class DummySource(ratePerSec: Int) extends Receiver[String](StorageLevel.MEMORY_AND_DISK_2) {

  def onStart(): Unit = {
    // Start the thread that generates the dummy data
    new Thread("Dummy Source") {
      override def run(): Unit = { receive() }
    }.start()
  }

  def onStop(): Unit = {
    // There is nothing much to do, as the thread calling receive()
    // is designed to stop by itself once isStopped() returns true
  }

  /** Generate dummy lines, at most about ratePerSec per second, until the receiver is stopped */
  private def receive(): Unit = {
    while (!isStopped()) {
      store("I am a dummy source " + Random.nextInt(10))
      Thread.sleep((1000.toDouble / ratePerSec).toInt)
    }
  }
}
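The sleep in receive() only caps the rate: it waits (1000.toDouble / ratePerSec).toInt milliseconds between lines, so integer truncation, the time spent in store(), and Thread.sleep overshooting can all make the actual rate lower than ratePerSec. This likely explains why the batches printed further down contain roughly 800 to 900 events rather than 1000. The hypothetical helpers below just evaluate that expression.

```scala
object DummySourceRateSketch {
  // Same expression as in DummySource.receive(): milliseconds slept between lines.
  def sleepMillis(ratePerSec: Int): Int = (1000.toDouble / ratePerSec).toInt

  // Upper bound on lines per second implied by the sleep alone.
  // None means the sleep truncates to 0 ms, i.e. no throttling at all.
  def nominalMaxRate(ratePerSec: Int): Option[Double] = {
    val s = sleepMillis(ratePerSec)
    if (s == 0) None else Some(1000.0 / s)
  }
}
```

For example, ratePerSec = 300 gives a 3 ms sleep and therefore at most about 333 lines per second, while any rate above 1000 disables throttling.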


Transforming and Acting on the DStream of lines

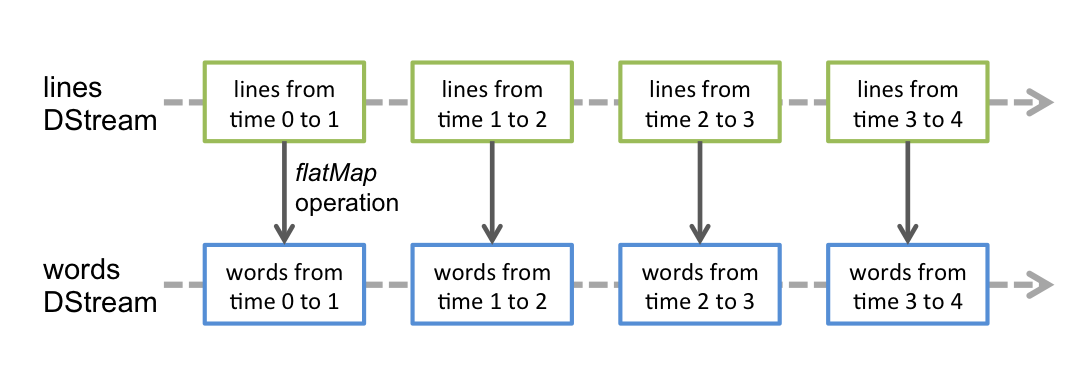

Any operation applied to a DStream translates to operations on the underlying RDDs. To convert a stream of lines to words, the flatMap operation is applied to each RDD in the lines DStream (named stream in the code below) to generate the RDDs of the wordStream DStream, as shown in the figure.
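The per-batch behaviour of flatMap can be mimicked on plain Scala collections. In this hypothetical sketch each inner Seq plays the role of one RDD of lines; the splitting function is applied to every line of every batch, and batch boundaries are preserved, as with stream.flatMap { _.split(" ") }.

```scala
object FlatMapPerBatchSketch {
  // Each element of the outer Seq models one RDD (one batch) of lines.
  type LineBatches = Seq[Seq[String]]

  // Split every line of every batch into words, keeping one output batch per input batch.
  def toWordBatches(lines: LineBatches): Seq[Seq[String]] =
    lines.map(batch => batch.flatMap(_.split(" ")))
}
```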

These underlying RDD transformations are computed by the Spark engine. The DStream operations hide most of these details and provide the developer with a higher-level API for convenience.

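Next, reduceByKey is used to get wordCountStream, which counts the words in wordStream. On a DStream, reduceByKey is applied to each RDD separately, so the counts cover a single batch rather than the whole stream so far.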

Finally, each RDD of wordCountStream is registered as the temporary view batch_word_count, replacing the view from the previous batch.
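The following plain-Scala sketch illustrates the two points above. PerBatchCountSketch and ViewRegistry are hypothetical, with a Map standing in for Spark's catalog of temporary views: word counts are computed separately for each batch, and registering a view under an existing name replaces it, so a query only ever sees the most recent batch.

```scala
object PerBatchCountSketch {
  // Like map(word => (word, 1)).reduceByKey(_ + _) on ONE batch:
  // counts are never accumulated across batches.
  def wordCount(words: Seq[String]): Map[String, Int] =
    words.map(w => (w, 1)).groupBy(_._1).map { case (w, pairs) => w -> pairs.map(_._2).sum }

  // Hypothetical stand-in for createOrReplaceTempView: same name means replace.
  final class ViewRegistry {
    private var views = Map.empty[String, Map[String, Int]]
    def createOrReplace(name: String, data: Map[String, Int]): Unit =
      views += name -> data
    def query(name: String): Option[Map[String, Int]] = views.get(name)
  }
}
```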

Let's look at the following creatingFunc, which creates a new StreamingContext, sets it up for word count on a stream of (nominally) 1000 lines per second, and registers each batch's word counts as a temporary view.


var newContextCreated = false // Flag to detect whether a new context was created or not

// Function to create a new StreamingContext and set it up
def creatingFunc(): StreamingContext = {
  // rdd.toDF below needs the SparkSession implicits (already imported in Databricks notebooks)
  import spark.implicits._

  // Create a StreamingContext - the starting point for a Spark Streaming job
  val ssc = new StreamingContext(sc, Seconds(batchIntervalSeconds))

  // Create a stream that generates (at most about) eventsPerSecond = 1000 lines per second
  val stream = ssc.receiverStream(new DummySource(eventsPerSecond))

  // Split the lines into words, and then count the words in each batch
  val wordStream = stream.flatMap { _.split(" ") }
  val wordCountStream = wordStream.map(word => (word, 1)).reduceByKey(_ + _)

  // Replace the temp view with the latest batch's counts at every batch interval
  wordCountStream.foreachRDD { rdd =>
    rdd.toDF("word", "count").createOrReplaceTempView("batch_word_count")
  }

  // Print the number of events and a sample of them for every batch
  stream.foreachRDD { rdd =>
    println("# events = " + rdd.count())
    println("\t " + rdd.take(10).mkString(", ") + ", ...")
  }

  ssc.remember(Minutes(1)) // To make sure data is not deleted by the time we query it interactively

  println("Creating function called to create new StreamingContext")
  newContextCreated = true
  ssc
}
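ssc.remember(Minutes(1)) asks every DStream in the context to keep the RDDs it generated during the last minute, instead of releasing them as soon as the streaming computation no longer needs them, so that they can still be queried interactively. The hypothetical retained function below models that retention window on (batch time, batch) pairs; the exact boundary handling in Spark may differ.

```scala
object RememberSketch {
  // Keep only batches whose time lies within the last `rememberMs`
  // milliseconds before `nowMs`; older batches become eligible for cleanup.
  def retained[T](batches: Seq[(Long, T)], nowMs: Long, rememberMs: Long): Seq[(Long, T)] =
    batches.filter { case (t, _) => t > nowMs - rememberMs }
}
```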


Start Streaming Job

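First, it is important to stop any existing StreamingContext and then start (or restart) the new one.

Here we use the configuration at the top of the notebook (stopActiveContext) to decide whether to stop any existing StreamingContext and start a new one, or to keep using the one that is already active. Recovering a context from checkpoints would need the variant of StreamingContext.getActiveOrCreate that also takes a checkpoint path, which is not used here.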
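The get-or-create logic used below can be sketched without Spark. In this hypothetical model a context only counts as active after it has been started (mirroring StreamingContext.getActive, which returns a context that has been started and not yet stopped), so getActiveOrCreate calls the creating function only when nothing is active. Ctx, start and stopActive are illustrative names, not Spark's API.

```scala
object GetActiveOrCreateSketch {
  // Hypothetical stand-in for a StreamingContext.
  final class Ctx(val id: Int)

  private var active: Option[Ctx] = None
  private var createdCount = 0

  // Plays the role of creatingFunc: builds a fresh context and counts the calls.
  def creatingFunc(): Ctx = synchronized { createdCount += 1; new Ctx(createdCount) }
  def created: Int = synchronized(createdCount)

  // Return the active context if there is one, otherwise create a new one.
  def getActiveOrCreate(create: () => Ctx): Ctx = synchronized { active.getOrElse(create()) }

  // Starting a context makes it the active one; stopping clears it.
  def start(ctx: Ctx): Unit = synchronized { active = Some(ctx) }
  def stopActive(): Unit = synchronized { active = None }
}
```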

// Stop any existing StreamingContext.
// StreamingContext.getActive (part of Spark's StreamingContext API) returns the
// currently active (started and not yet stopped) context, if there is one.
if (stopActiveContext) {
  StreamingContext.getActive.foreach { _.stop(stopSparkContext = false) }
}

// Get the active streaming context, or create a new one with creatingFunc
val ssc = StreamingContext.getActiveOrCreate(creatingFunc)
if (newContextCreated) {
  println("New context created from currently defined creating function")
} else {
  println("Existing context running or recovered from checkpoint, may not be running currently defined creating function")
}

// Start the streaming context in the background.
ssc.start()

// Block this cell for 5 batch intervals (the timeout is in milliseconds) so that the output
// of the first few batches shows up here; the streaming job keeps running afterwards.
ssc.awaitTerminationOrTimeout(batchIntervalSeconds * 5 * 1000)

Creating function called to create new StreamingContext

New context created from currently defined creating function

# events = 0

, ...

# events = 803

I am a dummy source 6, I am a dummy source 6, I am a dummy source 5, I am a dummy source 3, I am a dummy source 7, I am a dummy source 2, I am a dummy source 8, I am a dummy source 1, I am a dummy source 7, I am a dummy source 5, ...

# events = 879

I am a dummy source 7, I am a dummy source 5, I am a dummy source 3, I am a dummy source 0, I am a dummy source 2, I am a dummy source 3, I am a dummy source 1, I am a dummy source 2, I am a dummy source 7, I am a dummy source 7, ...

# events = 888

I am a dummy source 9, I am a dummy source 0, I am a dummy source 1, I am a dummy source 2, I am a dummy source 7, I am a dummy source 3, I am a dummy source 3, I am a dummy source 0, I am a dummy source 1, I am a dummy source 9, ...

# events = 883

I am a dummy source 6, I am a dummy source 5, I am a dummy source 4, I am a dummy source 6, I am a dummy source 4, I am a dummy source 2, I am a dummy source 3, I am a dummy source 6, I am a dummy source 1, I am a dummy source 6, ...


Interactive Querying

Now let's try querying the table. If you run this command repeatedly, you will see the numbers change.

select * from batch_word_count

| word | count |
|---|---|
| 8 | 107.0 |
| 0 | 100.0 |
| dummy | 890.0 |
| a | 890.0 |
| I | 890.0 |
| 9 | 70.0 |
| 1 | 89.0 |
| 2 | 87.0 |
| source | 890.0 |
| 3 | 91.0 |
| 4 | 79.0 |
| am | 890.0 |
| 5 | 96.0 |
| 6 | 82.0 |
| 7 | 89.0 |
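Because every dummy line contains exactly one each of I, am, a, dummy and source plus a single digit, the counts in one batch satisfy a simple invariant: the five fixed words share the same count (the number of lines), and the digit counts add up to it. The hypothetical check below applies this to the counts from the query result above, which describe a batch of 890 lines.

```scala
object CountInvariantSketch {
  // Counts copied from the query result above.
  val digitCounts: Map[String, Int] = Map(
    "0" -> 100, "1" -> 89, "2" -> 87, "3" -> 91, "4" -> 79,
    "5" -> 96, "6" -> 82, "7" -> 89, "8" -> 107, "9" -> 70)
  val fixedWordCounts: Map[String, Int] = Map(
    "I" -> 890, "am" -> 890, "a" -> 890, "dummy" -> 890, "source" -> 890)

  // All fixed words must share one count, and the digit counts must sum to it.
  def consistent(digits: Map[String, Int], fixed: Map[String, Int]): Boolean = {
    val lineCounts = fixed.values.toSet
    lineCounts.size == 1 && digits.values.sum == lineCounts.head
  }
}
```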

Run the query again to see the table for the current batch.

select * from batch_word_count

| word | count |
|---|---|
| 8 | 109.0 |
| 0 | 77.0 |
| dummy | 888.0 |
| a | 888.0 |
| I | 888.0 |
| 9 | 84.0 |
| 1 | 85.0 |
| 2 | 89.0 |
| source | 888.0 |
| 3 | 86.0 |
| 4 | 91.0 |
| am | 888.0 |
| 5 | 74.0 |
| 6 | 84.0 |
| 7 | 109.0 |

Finally, if you want to stop the StreamingContext, execute the following code:

StreamingContext.getActive.foreach { _.stop(stopSparkContext = false) } // please do this if you are done!

The next two Spark Streaming examples use live tweets.

Dear Researcher,

The main form of assessment for day 01 of module 01 and both days of module 03 is most likely going to be a fairly open-ended project in groups of appropriate sizes (with some structure TBD) that is close to your research interests. The exact details will be given at the end of day 02 of module 03. There will most likely be dedicated Office Hours after module 03 to support you with the project (admin, infrastructure, etc.).

Towards this, as one possibility for a project, I strongly encourage you to apply for a Twitter Developer Account (you need a Twitter user account first). This process can take a couple of weeks. With a Twitter developer account you can run your own experiments on Twitter, which would be an interesting application of streaming.

The instructions are roughly as follows (Twitter will ask different users different questions and the rules keep evolving, so just make it clear that you only want credentials for learning Spark Streaming. Keep it simple.): https://lamastex.github.io/scalable-data-science/sds/basics/instructions/getTwitterDevCreds/

You can still follow the lab/lectures without your own Twitter Developer credentials IF I/we dynamically decide to go through Twitter examples of Streaming (provided at least one of you has applied for the developer credentials and is interested in such experiments), but then you will not be able to do your own experiments live in Twitter as I will be doing mine.

Twitter can be a fun source of interesting projects from uniformly sampled social media interactions in the world (but there are many other projects, especially those coming from your own research questions).

Cheers!

Raaz

Twitter Utility Functions

Here we develop a few notebooks starting with 025_* to help us with Twitter experiments.

Extended TwitterUtils

We extend Spark's TwitterUtils to allow filtering by user IDs, using the follow method, and by strings in the tweet, using the track method, of twitter4j's FilterQuery.
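The filter-versus-sample decision made in onStart of the receiver defined below can be summarized in a few lines of plain Scala. StreamRequest, Sample, Filter and request are hypothetical names used only for illustration; the real receiver builds a twitter4j FilterQuery instead.

```scala
object TwitterFilterSketch {
  // Hypothetical description of what the receiver asks Twitter for.
  sealed trait StreamRequest
  case object Sample extends StreamRequest
  final case class Filter(track: Option[String], follow: Seq[Long]) extends StreamRequest

  // Use a filter only when keyword or user-id filters are given, otherwise the
  // sample stream; keywords are joined with commas into one track string.
  def request(filters: Seq[String], userFilters: Seq[Long]): StreamRequest =
    if (filters.isEmpty && userFilters.isEmpty) Sample
    else Filter(
      track = if (filters.nonEmpty) Some(filters.mkString(",")) else None,
      follow = userFilters)
}
```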

This is part of Project MEP: Meme Evolution Programme and supported by Databricks, AWS and a Swedish VR grant.

The analysis is available in the following Databricks notebook: http://lamastex.org/lmse/mep/src/extendedTwitterUtils.html

Copyright 2016-2020 Ivan Sadikov and Raazesh Sainudiin

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

import twitter4j._

import twitter4j.auth.Authorization

import twitter4j.conf.ConfigurationBuilder

import twitter4j.auth.OAuthAuthorization

import org.apache.spark.streaming._

import org.apache.spark.streaming.dstream._

import org.apache.spark.storage.StorageLevel

import org.apache.spark.streaming.receiver.Receiver


class ExtendedTwitterReceiver(
    twitterAuth: Authorization,
    filters: Seq[String],
    userFilters: Seq[Long],
    storageLevel: StorageLevel
  ) extends Receiver[Status](storageLevel) {

  @volatile private var twitterStream: TwitterStream = _
  @volatile private var stopped = false

  def onStart(): Unit = {
    try {
      val newTwitterStream = new TwitterStreamFactory().getInstance(twitterAuth)
      newTwitterStream.addListener(new StatusListener {
        def onStatus(status: Status): Unit = {
          store(status)
        }
        // The remaining StatusListener callbacks are not needed here and are left as no-ops
        def onDeletionNotice(statusDeletionNotice: StatusDeletionNotice): Unit = {}
        def onTrackLimitationNotice(i: Int): Unit = {}
        def onScrubGeo(l: Long, l1: Long): Unit = {}
        def onStallWarning(stallWarning: StallWarning): Unit = {}
        def onException(e: Exception): Unit = {
          if (!stopped) {
            restart("Error receiving tweets", e)
          }
        }
      })

      // Do filtering only when filters are available: track keywords and/or follow user ids
      if (filters.nonEmpty || userFilters.nonEmpty) {
        val query = new FilterQuery()
        if (filters.nonEmpty) {
          query.track(filters.mkString(","))
        }
        if (userFilters.nonEmpty) {
          query.follow(userFilters: _*)
        }
        newTwitterStream.filter(query)
      } else {
        // Otherwise use the random sample of public tweets
        newTwitterStream.sample()
      }
      setTwitterStream(newTwitterStream)
      println("Twitter receiver started")
      stopped = false
    } catch {
      case e: Exception => restart("Error starting Twitter stream", e)
    }
  }

  def onStop(): Unit = {
    stopped = true
    setTwitterStream(null)
    println("Twitter receiver stopped")
  }

  // Replace the current stream, shutting down the previous one (if any)
  private def setTwitterStream(newTwitterStream: TwitterStream) = synchronized {
    if (twitterStream != null) {
      twitterStream.shutdown()
    }
    twitterStream = newTwitterStream
  }
}


class ExtendedTwitterInputDStream(
    ssc_ : StreamingContext,
    twitterAuth: Option[Authorization],
    filters: Seq[String],
    userFilters: Seq[Long],
    storageLevel: StorageLevel
  ) extends ReceiverInputDStream[Status](ssc_) {

  // Without explicit credentials, fall back to twitter4j's default configuration
  // (such as the twitter4j.oauth.* system properties)
  private def createOAuthAuthorization(): Authorization = {
    new OAuthAuthorization(new ConfigurationBuilder().build())
  }

  private val authorization = twitterAuth.getOrElse(createOAuthAuthorization())

  override def getReceiver(): Receiver[Status] = {
    new ExtendedTwitterReceiver(authorization, filters, userFilters, storageLevel)
  }
}


import twitter4j.Status
import twitter4j.auth.Authorization
import org.apache.spark.storage.StorageLevel
import org.apache.spark.streaming.StreamingContext
import org.apache.spark.streaming.dstream.{ReceiverInputDStream, DStream}

object ExtendedTwitterUtils {
  def createStream(
      ssc: StreamingContext,
      twitterAuth: Option[Authorization],
      filters: Seq[String] = Nil,
      userFilters: Seq[Long] = Nil,
      storageLevel: StorageLevel = StorageLevel.MEMORY_AND_DISK_SER_2
    ): ReceiverInputDStream[Status] = {
    new ExtendedTwitterInputDStream(ssc, twitterAuth, filters, userFilters, storageLevel)
  }
}


println("done running the extendedTwitterUtils2run notebook - ready to stream from twitter")

Tweet Transmission Tree Function

This is part of Project MEP: Meme Evolution Programme and supported by Databricks, AWS and a Swedish VR grant.

Please see the following notebook to understand the rationale for the Tweet Transmission Tree Functions: http://lamastex.org/lmse/mep/src/TweetAnatomyAndTransmissionTree.html
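The TweetType column computed by the functions below depends only on whether the retweeted and quoted status ids are present and whether inReplyToStatusId is -1 (twitter4j uses -1 for tweets that are not replies). Here is a plain-Scala mirror of that rule for experimenting with single tweets. tweetType is a hypothetical helper, a missing (null) retweet or quote id is modeled as None, and a null inReplyToStatusId, which the Spark expression would label Unclassified, is not modeled.

```scala
object TweetTypeSketch {
  // rtId / qtId: retweeted / quoted status ids (None when absent);
  // replyToId: inReplyToStatusId, -1 when the tweet is not a reply.
  def tweetType(rtId: Option[Long], qtId: Option[Long], replyToId: Long): String =
    if (replyToId < -1) "Unclassified" // matches neither === -1 nor > -1
    else (rtId.isDefined, qtId.isDefined, replyToId > -1) match {
      case (false, false, false) => "Original Tweet"
      case (false, false, true)  => "Reply Tweet"
      case (true,  false, false) => "ReTweet"
      case (false, true,  false) => "Quoted Tweet"
      case (true,  true,  false) => "Retweet of Quoted Tweet"
      case (true,  false, true)  => "Retweet of Reply Tweet"
      case (false, true,  true)  => "Reply of Quoted Tweet"
      case (true,  true,  true)  => "Retweet of Quoted Reply Tweet"
    }
}
```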

Copyright 2016-2020 Akinwande Atanda and Raazesh Sainudiin

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

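import org.apache.spark.sql.types.{StructType, StructField, StringType}
import org.apache.spark.sql.functions._
import org.apache.spark.sql.types._
import org.apache.spark.sql.{ColumnName, DataFrame, Row}
// The $"colName" syntax and Dataset.map below rely on the SparkSession implicits
// (already imported in Databricks notebooks)
import spark.implicits._

// Use the legacy (pre-Spark 3.0) datetime parser so that patterns such as
// "MMM dd, yyyy hh:mm:ss a" used below parse as they did in Spark 2.x
spark.sql("set spark.sql.legacy.timeParserPolicy=LEGACY")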

def fromParquetFile2DF(InputDFAsParquetFilePatternString: String): DataFrame = {
  sqlContext.read.parquet(InputDFAsParquetFilePatternString)
}

// Each row holds one tweet as a JSON string; parse them into a DataFrame of tweets
def tweetsJsonStringDF2TweetsDF(tweetsAsJsonStringInputDF: DataFrame): DataFrame = {
  sqlContext
    .read
    .json(tweetsAsJsonStringInputDF.map({case Row(val1: String) => val1}))
}

// Each row holds (tweet id, tweet as JSON string); only the JSON string is parsed
def tweetsIDLong_JsonStringPairDF2TweetsDF(tweetsAsIDLong_JsonStringInputDF: DataFrame): DataFrame = {
  sqlContext
    .read
    .json(tweetsAsIDLong_JsonStringInputDF.map({case Row(val0: Long, val1: String) => val1}))
}

def tweetsDF2TTTDF(tweetsInputDF: DataFrame): DataFrame = {

tweetsInputDF.select(

unix_timestamp($"createdAt", """MMM dd, yyyy hh:mm:ss a""").cast(TimestampType).as("CurrentTweetDate"),

$"id".as("CurrentTwID"),

$"lang".as("lang"),

$"geoLocation.latitude".as("lat"),

$"geoLocation.longitude".as("lon"),

unix_timestamp($"retweetedStatus.createdAt", """MMM dd, yyyy hh:mm:ss a""").cast(TimestampType).as("CreationDateOfOrgTwInRT"),

$"retweetedStatus.id".as("OriginalTwIDinRT"),

unix_timestamp($"quotedStatus.createdAt", """MMM dd, yyyy hh:mm:ss a""").cast(TimestampType).as("CreationDateOfOrgTwInQT"),

$"quotedStatus.id".as("OriginalTwIDinQT"),

$"inReplyToStatusId".as("OriginalTwIDinReply"),

$"user.id".as("CPostUserId"),

unix_timestamp($"user.createdAt", """MMM dd, yyyy hh:mm:ss a""").cast(TimestampType).as("userCreatedAtDate"),

$"retweetedStatus.user.id".as("OPostUserIdinRT"),

$"quotedStatus.user.id".as("OPostUserIdinQT"),

$"inReplyToUserId".as("OPostUserIdinReply"),

$"user.name".as("CPostUserName"),

$"retweetedStatus.user.name".as("OPostUserNameinRT"),

$"quotedStatus.user.name".as("OPostUserNameinQT"),

$"user.screenName".as("CPostUserSN"),

$"retweetedStatus.user.screenName".as("OPostUserSNinRT"),

$"quotedStatus.user.screenName".as("OPostUserSNinQT"),

$"inReplyToScreenName".as("OPostUserSNinReply"),

$"user.favouritesCount",

$"user.followersCount",

$"user.friendsCount",

$"user.isVerified",

$"user.isGeoEnabled",

$"text".as("CurrentTweet"),

$"retweetedStatus.userMentionEntities.id".as("UMentionRTiD"),

$"retweetedStatus.userMentionEntities.screenName".as("UMentionRTsN"),

$"quotedStatus.userMentionEntities.id".as("UMentionQTiD"),

$"quotedStatus.userMentionEntities.screenName".as("UMentionQTsN"),

$"userMentionEntities.id".as("UMentionASiD"),

$"userMentionEntities.screenName".as("UMentionASsN")

).withColumn("TweetType",

when($"OriginalTwIDinRT".isNull && $"OriginalTwIDinQT".isNull && $"OriginalTwIDinReply" === -1,

"Original Tweet")

.when($"OriginalTwIDinRT".isNull && $"OriginalTwIDinQT".isNull && $"OriginalTwIDinReply" > -1,

"Reply Tweet")

.when($"OriginalTwIDinRT".isNotNull && $"OriginalTwIDinQT".isNull && $"OriginalTwIDinReply" === -1,

"ReTweet")

.when($"OriginalTwIDinRT".isNull && $"OriginalTwIDinQT".isNotNull && $"OriginalTwIDinReply" === -1,

"Quoted Tweet")

.when($"OriginalTwIDinRT".isNotNull && $"OriginalTwIDinQT".isNotNull && $"OriginalTwIDinReply" === -1,

"Retweet of Quoted Tweet")

.when($"OriginalTwIDinRT".isNotNull && $"OriginalTwIDinQT".isNull && $"OriginalTwIDinReply" > -1,

"Retweet of Reply Tweet")

.when($"OriginalTwIDinRT".isNull && $"OriginalTwIDinQT".isNotNull && $"OriginalTwIDinReply" > -1,

"Reply of Quoted Tweet")

.when($"OriginalTwIDinRT".isNotNull && $"OriginalTwIDinQT".isNotNull && $"OriginalTwIDinReply" > -1,

"Retweet of Quoted Reply Tweet")

.otherwise("Unclassified"))

.withColumn("MentionType",

when($"UMentionRTid".isNotNull && $"UMentionQTid".isNotNull, "RetweetAndQuotedMention")

.when($"UMentionRTid".isNotNull && $"UMentionQTid".isNull, "RetweetMention")

.when($"UMentionRTid".isNull && $"UMentionQTid".isNotNull, "QuotedMention")

.when($"UMentionRTid".isNull && $"UMentionQTid".isNull, "AuthoredMention")

.otherwise("NoMention"))

.withColumn("Weight", lit(1L))

}

def tweetsDF2TTTDFWithURLsAndHashtags(tweetsInputDF: DataFrame): DataFrame = {

tweetsInputDF.select(

unix_timestamp($"createdAt", """MMM dd, yyyy hh:mm:ss a""").cast(TimestampType).as("CurrentTweetDate"),

$"id".as("CurrentTwID"),

$"lang".as("lang"),

$"geoLocation.latitude".as("lat"),

$"geoLocation.longitude".as("lon"),

unix_timestamp($"retweetedStatus.createdAt", """MMM dd, yyyy hh:mm:ss a""").cast(TimestampType).as("CreationDateOfOrgTwInRT"),

$"retweetedStatus.id".as("OriginalTwIDinRT"),

unix_timestamp($"quotedStatus.createdAt", """MMM dd, yyyy hh:mm:ss a""").cast(TimestampType).as("CreationDateOfOrgTwInQT"),

$"quotedStatus.id".as("OriginalTwIDinQT"),

$"inReplyToStatusId".as("OriginalTwIDinReply"),

$"user.id".as("CPostUserId"),

unix_timestamp($"user.createdAt", """MMM dd, yyyy hh:mm:ss a""").cast(TimestampType).as("userCreatedAtDate"),

$"retweetedStatus.user.id".as("OPostUserIdinRT"),

$"quotedStatus.user.id".as("OPostUserIdinQT"),

$"inReplyToUserId".as("OPostUserIdinReply"),

$"user.name".as("CPostUserName"),

$"retweetedStatus.user.name".as("OPostUserNameinRT"),

$"quotedStatus.user.name".as("OPostUserNameinQT"),

$"user.screenName".as("CPostUserSN"),

$"retweetedStatus.user.screenName".as("OPostUserSNinRT"),

$"quotedStatus.user.screenName".as("OPostUserSNinQT"),

$"inReplyToScreenName".as("OPostUserSNinReply"),

$"user.favouritesCount",

$"user.followersCount",

$"user.friendsCount",

$"user.isVerified",

$"user.isGeoEnabled",

$"text".as("CurrentTweet"),

$"retweetedStatus.userMentionEntities.id".as("UMentionRTiD"),

$"retweetedStatus.userMentionEntities.screenName".as("UMentionRTsN"),

$"quotedStatus.userMentionEntities.id".as("UMentionQTiD"),

$"quotedStatus.userMentionEntities.screenName".as("UMentionQTsN"),

$"userMentionEntities.id".as("UMentionASiD"),

$"userMentionEntities.screenName".as("UMentionASsN"),

$"urlEntities.expandedURL".as("URLs"),

$"hashtagEntities.text".as("hashTags")

).withColumn("TweetType",

when($"OriginalTwIDinRT".isNull && $"OriginalTwIDinQT".isNull && $"OriginalTwIDinReply" === -1,

"Original Tweet")

.when($"OriginalTwIDinRT".isNull && $"OriginalTwIDinQT".isNull && $"OriginalTwIDinReply" > -1,

"Reply Tweet")

.when($"OriginalTwIDinRT".isNotNull && $"OriginalTwIDinQT".isNull && $"OriginalTwIDinReply" === -1,

"ReTweet")

.when($"OriginalTwIDinRT".isNull && $"OriginalTwIDinQT".isNotNull && $"OriginalTwIDinReply" === -1,

"Quoted Tweet")

.when($"OriginalTwIDinRT".isNotNull && $"OriginalTwIDinQT".isNotNull && $"OriginalTwIDinReply" === -1,

"Retweet of Quoted Tweet")

.when($"OriginalTwIDinRT".isNotNull && $"OriginalTwIDinQT".isNull && $"OriginalTwIDinReply" > -1,

"Retweet of Reply Tweet")

.when($"OriginalTwIDinRT".isNull && $"OriginalTwIDinQT".isNotNull && $"OriginalTwIDinReply" > -1,

"Reply of Quoted Tweet")

.when($"OriginalTwIDinRT".isNotNull && $"OriginalTwIDinQT".isNotNull && $"OriginalTwIDinReply" > -1,

"Retweet of Quoted Reply Tweet")

.otherwise("Unclassified"))

.withColumn("MentionType",

when($"UMentionRTid".isNotNull && $"UMentionQTid".isNotNull, "RetweetAndQuotedMention")

.when($"UMentionRTid".isNotNull && $"UMentionQTid".isNull, "RetweetMention")

.when($"UMentionRTid".isNull && $"UMentionQTid".isNotNull, "QuotedMention")

.when($"UMentionRTid".isNull && $"UMentionQTid".isNull, "AuthoredMention")

.otherwise("NoMention"))

.withColumn("Weight", lit(1L))

}

println("""USAGE: val df = tweetsDF2TTTDF(tweetsJsonStringDF2TweetsDF(fromParquetFile2DF("parquetFileName")))

val df = tweetsDF2TTTDF(tweetsIDLong_JsonStringPairDF2TweetsDF(fromParquetFile2DF("parquetFileName")))

""")

// A variant of tweetsDF2TTTDF that does not assume some fields (here geoLocation) are available;
// there are better ways to check for columns - https://stackoverflow.com/questions/35904136/how-do-i-detect-if-a-spark-dataframe-has-a-column

def tweetsDF2TTTDFLightWeight(tweetsInputDF: DataFrame): DataFrame = {

tweetsInputDF.select(

unix_timestamp($"createdAt", """MMM dd, yyyy hh:mm:ss a""").cast(TimestampType).as("CurrentTweetDate"),

$"id".as("CurrentTwID"),

$"lang".as("lang"),

//$"geoLocation.latitude".as("lat"),

//$"geoLocation.longitude".as("lon"),

unix_timestamp($"retweetedStatus.createdAt", """MMM dd, yyyy hh:mm:ss a""").cast(TimestampType).as("CreationDateOfOrgTwInRT"),

$"retweetedStatus.id".as("OriginalTwIDinRT"),

unix_timestamp($"quotedStatus.createdAt", """MMM dd, yyyy hh:mm:ss a""").cast(TimestampType).as("CreationDateOfOrgTwInQT"),

$"quotedStatus.id".as("OriginalTwIDinQT"),

$"inReplyToStatusId".as("OriginalTwIDinReply"),

$"user.id".as("CPostUserId"),

unix_timestamp($"user.createdAt", """MMM dd, yyyy hh:mm:ss a""").cast(TimestampType).as("userCreatedAtDate"),

$"retweetedStatus.user.id".as("OPostUserIdinRT"),

$"quotedStatus.user.id".as("OPostUserIdinQT"),

$"inReplyToUserId".as("OPostUserIdinReply"),

$"user.name".as("CPostUserName"),

$"retweetedStatus.user.name".as("OPostUserNameinRT"),

$"quotedStatus.user.name".as("OPostUserNameinQT"),

$"user.screenName".as("CPostUserSN"),

$"retweetedStatus.user.screenName".as("OPostUserSNinRT"),

$"quotedStatus.user.screenName".as("OPostUserSNinQT"),

$"inReplyToScreenName".as("OPostUserSNinReply"),

$"user.favouritesCount",

$"user.followersCount",

$"user.friendsCount",

$"user.isVerified",

$"user.isGeoEnabled",

$"text".as("CurrentTweet"),

$"retweetedStatus.userMentionEntities.id".as("UMentionRTiD"),

$"retweetedStatus.userMentionEntities.screenName".as("UMentionRTsN"),

$"quotedStatus.userMentionEntities.id".as("UMentionQTiD"),

$"quotedStatus.userMentionEntities.screenName".as("UMentionQTsN"),

$"userMentionEntities.id".as("UMentionASiD"),

$"userMentionEntities.screenName".as("UMentionASsN")

).withColumn("TweetType",

when($"OriginalTwIDinRT".isNull && $"OriginalTwIDinQT".isNull && $"OriginalTwIDinReply" === -1,

"Original Tweet")

.when($"OriginalTwIDinRT".isNull && $"OriginalTwIDinQT".isNull && $"OriginalTwIDinReply" > -1,

"Reply Tweet")

.when($"OriginalTwIDinRT".isNotNull && $"OriginalTwIDinQT".isNull && $"OriginalTwIDinReply" === -1,

"ReTweet")

.when($"OriginalTwIDinRT".isNull && $"OriginalTwIDinQT".isNotNull && $"OriginalTwIDinReply" === -1,

"Quoted Tweet")

.when($"OriginalTwIDinRT".isNotNull && $"OriginalTwIDinQT".isNotNull && $"OriginalTwIDinReply" === -1,

"Retweet of Quoted Tweet")

.when($"OriginalTwIDinRT".isNotNull && $"OriginalTwIDinQT".isNull && $"OriginalTwIDinReply" > -1,

"Retweet of Reply Tweet")

.when($"OriginalTwIDinRT".isNull && $"OriginalTwIDinQT".isNotNull && $"OriginalTwIDinReply" > -1,

"Reply of Quoted Tweet")

.when($"OriginalTwIDinRT".isNotNull && $"OriginalTwIDinQT".isNotNull && $"OriginalTwIDinReply" > -1,

"Retweet of Quoted Reply Tweet")

.otherwise("Unclassified"))

.withColumn("MentionType",

when($"UMentionRTid".isNotNull && $"UMentionQTid".isNotNull, "RetweetAndQuotedMention")

.when($"UMentionRTid".isNotNull && $"UMentionQTid".isNull, "RetweetMention")

.when($"UMentionRTid".isNull && $"UMentionQTid".isNotNull, "QuotedMention")

.when($"UMentionRTid".isNull && $"UMentionQTid".isNull, "AuthoredMention")

.otherwise("NoMention"))

.withColumn("Weight", lit(1L))

}

def tweetsDF2TTTDFWithURLsAndHashtagsLightWeight(tweetsInputDF: DataFrame): DataFrame = {

tweetsInputDF.select(

unix_timestamp($"createdAt", """MMM dd, yyyy hh:mm:ss a""").cast(TimestampType).as("CurrentTweetDate"),

$"id".as("CurrentTwID"),

$"lang".as("lang"),

//$"geoLocation.latitude".as("lat"),

//$"geoLocation.longitude".as("lon"),

unix_timestamp($"retweetedStatus.createdAt", """MMM dd, yyyy hh:mm:ss a""").cast(TimestampType).as("CreationDateOfOrgTwInRT"),

$"retweetedStatus.id".as("OriginalTwIDinRT"),

unix_timestamp($"quotedStatus.createdAt", """MMM dd, yyyy hh:mm:ss a""").cast(TimestampType).as("CreationDateOfOrgTwInQT"),

$"quotedStatus.id".as("OriginalTwIDinQT"),

$"inReplyToStatusId".as("OriginalTwIDinReply"),

$"user.id".as("CPostUserId"),

unix_timestamp($"user.createdAt", """MMM dd, yyyy hh:mm:ss a""").cast(TimestampType).as("userCreatedAtDate"),

$"retweetedStatus.user.id".as("OPostUserIdinRT"),

$"quotedStatus.user.id".as("OPostUserIdinQT"),

$"inReplyToUserId".as("OPostUserIdinReply"),

$"user.name".as("CPostUserName"),

$"retweetedStatus.user.name".as("OPostUserNameinRT"),

$"quotedStatus.user.name".as("OPostUserNameinQT"),

$"user.screenName".as("CPostUserSN"),

$"retweetedStatus.user.screenName".as("OPostUserSNinRT"),

$"quotedStatus.user.screenName".as("OPostUserSNinQT"),

$"inReplyToScreenName".as("OPostUserSNinReply"),

$"user.favouritesCount",

$"user.followersCount",

$"user.friendsCount",

$"user.isVerified",

$"user.isGeoEnabled",

$"text".as("CurrentTweet"),

$"retweetedStatus.userMentionEntities.id".as("UMentionRTiD"),

$"retweetedStatus.userMentionEntities.screenName".as("UMentionRTsN"),

$"quotedStatus.userMentionEntities.id".as("UMentionQTiD"),

$"quotedStatus.userMentionEntities.screenName".as("UMentionQTsN"),

$"userMentionEntities.id".as("UMentionASiD"),

$"userMentionEntities.screenName".as("UMentionASsN"),

$"urlEntities.expandedURL".as("URLs"),

$"hashtagEntities.text".as("hashTags")

).withColumn("TweetType",

when($"OriginalTwIDinRT".isNull && $"OriginalTwIDinQT".isNull && $"OriginalTwIDinReply" === -1,

"Original Tweet")

.when($"OriginalTwIDinRT".isNull && $"OriginalTwIDinQT".isNull && $"OriginalTwIDinReply" > -1,

"Reply Tweet")

.when($"OriginalTwIDinRT".isNotNull && $"OriginalTwIDinQT".isNull && $"OriginalTwIDinReply" === -1,

"ReTweet")

.when($"OriginalTwIDinRT".isNull && $"OriginalTwIDinQT".isNotNull && $"OriginalTwIDinReply" === -1,

"Quoted Tweet")

.when($"OriginalTwIDinRT".isNotNull && $"OriginalTwIDinQT".isNotNull && $"OriginalTwIDinReply" === -1,

"Retweet of Quoted Tweet")

.when($"OriginalTwIDinRT".isNotNull && $"OriginalTwIDinQT".isNull && $"OriginalTwIDinReply" > -1,

"Retweet of Reply Tweet")

.when($"OriginalTwIDinRT".isNull && $"OriginalTwIDinQT".isNotNull && $"OriginalTwIDinReply" > -1,

"Reply of Quoted Tweet")

.when($"OriginalTwIDinRT".isNotNull && $"OriginalTwIDinQT".isNotNull && $"OriginalTwIDinReply" > -1,

"Retweet of Quoted Reply Tweet")

.otherwise("Unclassified"))

.withColumn("MentionType",

when($"UMentionRTiD".isNotNull && $"UMentionQTiD".isNotNull, "RetweetAndQuotedMention")

.when($"UMentionRTiD".isNotNull && $"UMentionQTiD".isNull, "RetweetMention")

.when($"UMentionRTiD".isNull && $"UMentionQTiD".isNotNull, "QuotedMention")

.when($"UMentionRTiD".isNull && $"UMentionQTiD".isNull, "AuthoredMention")

.otherwise("NoMention"))

.withColumn("Weight", lit(1L))

}
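To make the timestamp pattern and the TweetType rules above easier to check outside Spark, here is a minimal plain-Scala sketch (no Spark required). It assumes createdAt strings look like "Nov 20, 2020 10:11:07 AM", i.e. that they match the pattern passed to unix_timestamp with English month names and a two-digit day and hour. It also restates the TweetType decision table, with None standing for a null column and -1 for "not a reply". The object name TweetFieldsSketch is hypothetical; it mirrors the logic for illustration and is not how the notebook computes the columns.

```scala
import java.time.LocalDateTime
import java.time.format.DateTimeFormatter
import java.util.Locale

// Hypothetical helpers that mimic, in plain Scala, two pieces of logic used in
// tweetsDF2TTTDFWithURLsAndHashtagsLightWeight (no Spark needed).
object TweetFieldsSketch {

  // Same pattern string as passed to unix_timestamp above. Locale.US is an
  // assumption: month names and the AM/PM marker are taken to be English.
  private val createdAtFormat =
    DateTimeFormatter.ofPattern("MMM dd, yyyy hh:mm:ss a", Locale.US)

  def parseCreatedAt(s: String): LocalDateTime =
    LocalDateTime.parse(s, createdAtFormat)

  // Decision table of the TweetType column. None stands for a null column;
  // replyToId is -1 for tweets that are not replies (as in the code above).
  def tweetType(retweetId: Option[Long], quotedId: Option[Long], replyToId: Option[Long]): String = {
    val isRT = retweetId.isDefined
    val isQT = quotedId.isDefined
    replyToId match {
      case Some(r) if r == -1L =>
        (isRT, isQT) match {
          case (false, false) => "Original Tweet"
          case (true, false)  => "ReTweet"
          case (false, true)  => "Quoted Tweet"
          case (true, true)   => "Retweet of Quoted Tweet"
        }
      case Some(r) if r > -1L =>
        (isRT, isQT) match {
          case (false, false) => "Reply Tweet"
          case (true, false)  => "Retweet of Reply Tweet"
          case (false, true)  => "Reply of Quoted Tweet"
          case (true, true)   => "Retweet of Quoted Reply Tweet"
        }
      // A null reply id (or any other value) matches none of the when clauses,
      // so it falls through to otherwise("Unclassified").
      case _ => "Unclassified"
    }
  }
}
```

For example, a retweet (non-null OriginalTwIDinRT) of a reply (OriginalTwIDinReply > -1) with no quote is labelled "Retweet of Reply Tweet", while a row whose reply id is null ends up as "Unclassified".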

Extended TwitterUtils with Language

We extend Spark's TwitterUtils to allow filtering by user IDs with the .follow method, by strings in the tweet with the .track method, and by language with the .language method of twitter4j's FilterQuery.

This notebook is mainly kept to show how one can adapt one of the existing notebooks for Twitter experiments to change the design of the experiment itself. Similarly, one can extend further into the twitter4j functions and methods we are wrapping into Scala here.

This is part of Project MEP: Meme Evolution Programme and is supported by the Databricks Academic Partners Program.

The analysis is available in the following Databricks notebook:

- http://lamastex.org/lmse/mep/src/extendedTwitterUtils.html

Copyright 2016 Ivan Sadikov and Raazesh Sainudiin

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

import twitter4j._

import twitter4j.auth.Authorization

import twitter4j.conf.ConfigurationBuilder

import twitter4j.auth.OAuthAuthorization

import org.apache.spark.streaming._

import org.apache.spark.streaming.dstream._

import org.apache.spark.storage.StorageLevel

import org.apache.spark.streaming.receiver.Receiver


class ExtendedTwitterReceiver(

twitterAuth: Authorization,

filters: Seq[String],

userFilters: Seq[Long],

langFilters: Seq[String],

storageLevel: StorageLevel

) extends Receiver[Status](storageLevel) {

@volatile private var twitterStream: TwitterStream = _

@volatile private var stopped = false

def onStart() {

try {

val newTwitterStream = new TwitterStreamFactory().getInstance(twitterAuth)

newTwitterStream.addListener(new StatusListener {

def onStatus(status: Status): Unit = {

store(status)

}

// Unimplemented

def onDeletionNotice(statusDeletionNotice: StatusDeletionNotice) {}

def onTrackLimitationNotice(i: Int) {}

def onScrubGeo(l: Long, l1: Long) {}

def onStallWarning(stallWarning: StallWarning) {}

def onException(e: Exception) {

if (!stopped) {

restart("Error receiving tweets", e)

}

}

})

// do filtering only when filters are available

if (filters.nonEmpty || userFilters.nonEmpty || langFilters.nonEmpty) {

val query = new FilterQuery()

if (filters.nonEmpty) {

query.track(filters.mkString(","))

}

if (userFilters.nonEmpty) {

query.follow(userFilters: _*)

}

if (langFilters.nonEmpty) {

query.language(langFilters.mkString(","))

}

newTwitterStream.filter(query)

} else {

newTwitterStream.sample()

}

setTwitterStream(newTwitterStream)

println("Twitter receiver started")

stopped = false

} catch {

case e: Exception => restart("Error starting Twitter stream", e)

}

}

def onStop() {

stopped = true

setTwitterStream(null)

println("Twitter receiver stopped")

}

private def setTwitterStream(newTwitterStream: TwitterStream) = synchronized {

if (twitterStream != null) {

twitterStream.shutdown()

}

twitterStream = newTwitterStream

}

}
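The branch in onStart that chooses between sample() and filter(query) can be modelled without Spark or twitter4j. The following sketch, with hypothetical names FilterDecisionSketch, Sample and Filter, records which calls the receiver would make for a given combination of filters. With no filters at all it falls back to the sampled stream; otherwise only the non-empty filters are set on the FilterQuery.

```scala
// A hypothetical, Spark-free model of the branch in ExtendedTwitterReceiver.onStart:
// which twitter4j call is made for a given combination of filters.
object FilterDecisionSketch {
  sealed trait StreamCall

  // Corresponds to newTwitterStream.sample()
  case object Sample extends StreamCall

  // Corresponds to newTwitterStream.filter(query) with these settings on the query
  final case class Filter(
      track: Option[String],   // argument of query.track(...), if set
      follow: Seq[Long],       // arguments of query.follow(...)
      language: Option[String] // argument of query.language(...), if set
  ) extends StreamCall

  def decide(filters: Seq[String], userFilters: Seq[Long], langFilters: Seq[String]): StreamCall =
    if (filters.isEmpty && userFilters.isEmpty && langFilters.isEmpty) Sample
    else Filter(
      track = if (filters.nonEmpty) Some(filters.mkString(",")) else None,
      follow = userFilters,
      language = if (langFilters.nonEmpty) Some(langFilters.mkString(",")) else None
    )
}
```

With the real classes, the same combinations are passed through the default arguments of ExtendedTwitterUtils.createStream defined below, for example ExtendedTwitterUtils.createStream(ssc, auth, filters = Seq("spark"), langFilters = Seq("en")).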

defined class ExtendedTwitterReceiver

class ExtendedTwitterInputDStream(

ssc_ : StreamingContext,

twitterAuth: Option[Authorization],

filters: Seq[String],

userFilters: Seq[Long],

langFilters: Seq[String],

storageLevel: StorageLevel

) extends ReceiverInputDStream[Status](ssc_) {

private def createOAuthAuthorization(): Authorization = {

new OAuthAuthorization(new ConfigurationBuilder().build())

}

private val authorization = twitterAuth.getOrElse(createOAuthAuthorization())

override def getReceiver(): Receiver[Status] = {

new ExtendedTwitterReceiver(authorization, filters, userFilters, langFilters, storageLevel)

}

}

defined class ExtendedTwitterInputDStream

import twitter4j.Status

import twitter4j.auth.Authorization

import org.apache.spark.storage.StorageLevel

import org.apache.spark.streaming.StreamingContext

import org.apache.spark.streaming.dstream.{ReceiverInputDStream, DStream}

object ExtendedTwitterUtils {

def createStream(

ssc: StreamingContext,

twitterAuth: Option[Authorization],

filters: Seq[String] = Nil,

userFilters: Seq[Long] = Nil,

langFilters: Seq[String] = Nil,

storageLevel: StorageLevel = StorageLevel.MEMORY_AND_DISK_SER_2

): ReceiverInputDStream[Status] = {

new ExtendedTwitterInputDStream(ssc, twitterAuth, filters, userFilters, langFilters, storageLevel)

}

}


defined object ExtendedTwitterUtils

println("done running the extendedTwitterUtils2run notebook - ready to stream from twitter by filtering on strings, users and language")

done running the extendedTwitterUtils2run notebook - ready to stream from twitter by filtering on strings, users and language

Tweet Streaming Collector

Let us build a system to collect live tweets using Spark streaming.

Here are the main steps in this notebook:

- Let's collect from the public Twitter stream and write to DBFS as JSON strings in a boiler-plate manner to understand the components better.

- Then we will turn the collector into a function and use it.

- Finally we will use some DataFrame-based pipelines to convert the raw tweets into other structured content.

Note that capturing tweets for free from the public streams using Twitter's Streaming API has some caveats. We are supposed to have access to a uniformly random sample of roughly 1% of all tweets across the globe, but exactly what is available in this free sample of the full Twitter social media network (i.e. all status updates on the planet) is not known in terms of sub-sampling strategies such as stratification layers. This is Twitter's proprietary information. However, we are supposed to be able to assume that it is indeed a random sample of roughly 1% of all tweets.
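To make "a uniformly random sample of roughly 1%" concrete, the sketch below draws a Bernoulli sample that keeps each item independently with probability p, and scales a sampled count back up. This is only a model of the assumption stated above; Twitter's actual sampling scheme is not public, and SampleSketch and its methods are hypothetical names.

```scala
import scala.util.Random

// Illustration only: a Bernoulli sample that keeps each item independently
// with probability p. This models the *assumed* uniform ~1% behaviour of the
// public stream; Twitter's actual sampling scheme is not public.
object SampleSketch {
  def bernoulliSample[A](items: Iterator[A], p: Double, seed: Long): Iterator[A] = {
    val rng = new Random(seed)
    items.filter(_ => rng.nextDouble() < p)
  }

  // Under the same assumption, scale a sampled count back up to the full population.
  def estimateTotal(sampledCount: Long, p: Double): Double = sampledCount / p
}
```

Under this model each sampled tweet stands for about 1/p = 100 tweets, so counts from the free stream can at best be scaled up into rough estimates.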

We will run the extendedTwitterUtils notebook from here.

But first, install the following libraries from Maven Central and attach them to this cluster:

- gson with Maven coordinates com.google.code.gson:gson:2.8.6

- twitter4j-examples with Maven coordinates org.twitter4j:twitter4j-examples:4.0.7

%run "./025_a_extendedTwitterUtils2run"

done running the extendedTwitterUtils2run notebook - ready to stream from twitter

Go to the Spark UI and check whether a streaming job is already running. If so, you need to terminate it before starting a new streaming job, since only one streaming job can run at a time on Databricks Community Edition (DB CE).

// this will make sure all streaming jobs in the cluster are stopped

StreamingContext.getActive.foreach { _.stop(stopSparkContext = false) }

Let's create a directory in DBFS for storing tweets in the cluster's distributed file system.

val outputDirectoryRoot = "/datasets/tweetsStreamTmp" // output directory

outputDirectoryRoot: String = /datasets/tweetsStreamTmp

dbutils.fs.mkdirs("/datasets/tweetsStreamTmp")

res2: Boolean = true

//display(dbutils.fs.ls(outputDirectoryRoot))

// to remove a pre-existing directory and start from scratch, evaluate the next line (comment it out to keep previously collected tweets)

dbutils.fs.rm("/datasets/tweetsStreamTmp", true)

res4: Boolean = true

Capture tweets in batches, one batch every slideInterval milliseconds; this is the batch interval passed to the StreamingContext below.

val slideInterval = new Duration(1 * 1000) // 1 * 1000 = 1000 milli-seconds = 1 sec

slideInterval: org.apache.spark.streaming.Duration = 1000 ms

Recall that Discretized Stream or DStream is the basic abstraction provided by Spark Streaming. It represents a continuous stream of data, either the input data stream received from a source, or the processed data stream generated by transforming the input stream. Internally, a DStream is represented by a continuous series of RDDs, which is Spark's abstraction of an immutable, distributed dataset (see the Spark Programming Guide for more details). Each RDD in a DStream contains data from a certain interval, as shown in the DStream figure earlier.
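The following is a simplified, Spark-free model of how a 1-second batch interval splits arriving tweets into the RDDs of a DStream, and how the batch time ends up in the tweets_<time> folder names written later. It assumes batch times are aligned to multiples of the interval and that the batch labelled T holds records that arrived in [T - interval, T); Spark's receiver and block machinery is more involved, and MicroBatchSketch is a hypothetical name.

```scala
// Simplified, Spark-free model of micro-batching (an assumption for illustration):
// the batch labelled T collects records that arrived in [T - interval, T),
// with batch times aligned to multiples of the interval.
object MicroBatchSketch {
  // Batch time (ms) that a record arriving at arrivalMs is assigned to.
  def batchTime(arrivalMs: Long, intervalMs: Long): Long =
    (arrivalMs / intervalMs + 1) * intervalMs

  // Groups arrival timestamps into batches, like the RDDs of a DStream.
  def toBatches(arrivalsMs: Seq[Long], intervalMs: Long): Map[Long, Seq[Long]] =
    arrivalsMs.groupBy(batchTime(_, intervalMs))

  // Folder name in the style used by the collector: root + "/tweets_" + batch time.
  def outputDir(root: String, batchMs: Long): String = root + "/tweets_" + batchMs
}
```

With a 1000 ms interval, a tweet arriving at 1605867067500 would belong to the batch written to /datasets/tweetsStreamTmp/tweets_1605867068000 under this model.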

Let's import Google's JSON library, Gson, next.

import com.google.gson.Gson


Our goal is to take each RDD in the Twitter DStream and write it as JSON strings to a text-file directory in DBFS.

// Create a Spark Streaming Context.

val ssc = new StreamingContext(sc, slideInterval)

ssc: org.apache.spark.streaming.StreamingContext = org.apache.spark.streaming.StreamingContext@7a8fbb86

CAUTION

Extracting knowledge from tweets is "easy" using techniques shown here, but one has to take legal responsibility for the use of this knowledge and conform to the rules and policies linked below.

Remember that the use of Twitter itself comes with various strings attached. Read:

Crucially, the use of the content from twitter by you (as done in this worksheet) comes with some strings. Read:

Enter your own Twitter API Credentials.

- Go to https://apps.twitter.com and look up your Twitter API Credentials, or create an app to create them.

- Get your own Twitter API Credentials: consumerKey, consumerSecret, accessToken and accessTokenSecret, and enter them in the cell below.

Ethical/Legal Aspects

See Background Readings/Viewings in Project MEP:

Tweet Collector

There are several steps to make a streaming twitter collector. We will do them one by one so you learn all the components. In the sequel we will make a function out of the various steps.

1. Twitter Credentials

The first step towards doing your own experiments on Twitter is to enter your Twitter API credentials.

- Go to https://apps.twitter.com and look up your Twitter API Credentials, or create an app to create them.

- Run the code in a cell to enter your own credentials.

// Put your own Twitter developer credentials below in place of XXXX.

// Instead of the '%run ".../secrets/026_secret_MyTwitterOAuthCredentials"' below,

// copy-paste the following code block with your own Twitter credentials replacing XXXX.

import twitter4j.auth.OAuthAuthorization

import twitter4j.conf.ConfigurationBuilder

// These have been regenerated!!! - you need to change them

def myAPIKey = "XXXX" // APIKey

def myAPISecret = "XXXX" // APISecretKey

def myAccessToken = "XXXX" // AccessToken

def myAccessTokenSecret = "XXXX" // AccessTokenSecret

System.setProperty("twitter4j.oauth.consumerKey", myAPIKey)

System.setProperty("twitter4j.oauth.consumerSecret", myAPISecret)

System.setProperty("twitter4j.oauth.accessToken", myAccessToken)

System.setProperty("twitter4j.oauth.accessTokenSecret", myAccessTokenSecret)

println("twitter OAuth Credentials loaded")
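If you prefer not to paste keys into a notebook cell, one option, sketched here under stated assumptions, is to look the four values up from a secret source and set the same twitter4j.oauth.* system properties as above. The environment-variable names below are assumptions, and the lookup function is a parameter, so sys.env.get (or, on Databricks, a function wrapping dbutils.secrets.get with your own scope and key names) could be plugged in. CredentialsSketch is a hypothetical name.

```scala
// Hypothetical alternative to hard-coding keys: look the four values up from a
// source such as environment variables and set the same twitter4j.oauth.*
// system properties used above. The env-variable names are assumptions.
object CredentialsSketch {
  val propertyToEnv: Seq[(String, String)] = Seq(
    "twitter4j.oauth.consumerKey"       -> "TWITTER_API_KEY",
    "twitter4j.oauth.consumerSecret"    -> "TWITTER_API_SECRET",
    "twitter4j.oauth.accessToken"       -> "TWITTER_ACCESS_TOKEN",
    "twitter4j.oauth.accessTokenSecret" -> "TWITTER_ACCESS_TOKEN_SECRET"
  )

  // Returns the names of any missing values; sets the properties only if none are missing.
  def loadInto(lookup: String => Option[String]): Seq[String] = {
    val missing = propertyToEnv.collect { case (_, env) if lookup(env).isEmpty => env }
    if (missing.isEmpty)
      propertyToEnv.foreach { case (prop, env) => System.setProperty(prop, lookup(env).get) }
    missing
  }
}

// In a notebook one might call: CredentialsSketch.loadInto(sys.env.get)
```

Returning the missing names instead of failing halfway keeps the properties either fully set or untouched.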

The cell below will not expose my Twitter API Credentials: myAPIKey, myAPISecret, myAccessToken and myAccessTokenSecret. Use the code above to enter your own credentials in a Scala cell.

%run "Users/raazesh.sainudiin@math.uu.se/scalable-data-science/secrets/026_secret_MyTwitterOAuthCredentials"

// Create a Twitter Stream for the input source.

val auth = Some(new OAuthAuthorization(new ConfigurationBuilder().build()))

val twitterStream = ExtendedTwitterUtils.createStream(ssc, auth)

auth: Some[twitter4j.auth.OAuthAuthorization] = Some(OAuthAuthorization{consumerKey='8uN0N9RTLT1viaR811yyG7xwk', consumerSecret='******************************************', oauthToken=AccessToken{screenName='null', userId=4173723312}})

twitterStream: org.apache.spark.streaming.dstream.ReceiverInputDStream[twitter4j.Status] = ExtendedTwitterInputDStream@7f635e6d

2. Mapping Tweets to JSON

Let's map the tweets into JSON-formatted strings (one tweet per line). We will use Google's Gson library for this.

val twitterStreamJson = twitterStream.map(

x => { val gson = new Gson();

val xJson = gson.toJson(x)

xJson

}

)

twitterStreamJson: org.apache.spark.streaming.dstream.DStream[String] = org.apache.spark.streaming.dstream.MappedDStream@b874a46
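The map above constructs a new Gson object for every tweet. A common alternative is to create one serializer per partition with mapPartitions, e.g. twitterStream.mapPartitions { it => val gson = new Gson(); it.map(gson.toJson(_)) }. The self-contained sketch below only illustrates the difference in the number of instances created; FakeSerializer is a hypothetical stand-in for Gson so that the example runs without any libraries.

```scala
// Hypothetical illustration of creating a serializer per record (as in the map
// above) versus once per partition (mapPartitions style).
// FakeSerializer stands in for com.google.gson.Gson; it only counts instances.
object SerializerReuseSketch {
  var created = 0 // number of serializer instances constructed so far

  final class FakeSerializer {
    created += 1
    def toJson(x: Any): String = "\"" + x.toString + "\""
  }

  // Like twitterStream.map(x => new Gson().toJson(x)): one instance per record.
  def perRecord(part: Iterator[Any]): Iterator[String] =
    part.map(x => new FakeSerializer().toJson(x))

  // Like the mapPartitions variant: one instance per partition.
  def perPartition(part: Iterator[Any]): Iterator[String] = {
    val s = new FakeSerializer()
    part.map(s.toJson)
  }
}
```

Both variants produce the same strings; only the number of serializer objects differs, which matters more as the number of tweets per batch grows.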

twitter OAuth Credentials loaded

import twitter4j.auth.OAuthAuthorization

import twitter4j.conf.ConfigurationBuilder


myAPIKey: String

myAPISecret: String

myAccessToken: String

myAccessTokenSecret: String

outputDirectoryRoot

res8: String = /datasets/tweetsStreamTmp

var numTweetsCollected = 0L // track number of tweets collected

val partitionsEachInterval = 1 // This tells the number of partitions in each RDD of tweets in the DStream.

twitterStreamJson.foreachRDD(

(rdd, time) => { // for each RDD in the DStream

val count = rdd.count()

if (count > 0) {

val outputRDD = rdd.repartition(partitionsEachInterval) // repartition as desired

outputRDD.saveAsTextFile(outputDirectoryRoot + "/tweets_" + time.milliseconds.toString) // save as textfile

numTweetsCollected += count // update with the latest count

}

}

)

numTweetsCollected: Long = 0

partitionsEachInterval: Int = 1
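Stripped of Spark, the foreachRDD logic above is a loop over (batch time, records) pairs: empty batches are skipped, non-empty ones are written to outputDirectoryRoot + "/tweets_" + time, and a counter is incremented. The sketch below models that loop with an in-memory map standing in for DBFS; CollectorSketch and collect are hypothetical names.

```scala
import scala.collection.mutable

// Spark-free sketch of the foreachRDD logic above: each batch (time, records)
// is written to root + "/tweets_" + time only when it is non-empty, and a
// counter is updated. `written` is a hypothetical in-memory stand-in for DBFS.
object CollectorSketch {
  def collect(root: String, batches: Seq[(Long, Seq[String])]): (Long, Map[String, Seq[String]]) = {
    var numCollected = 0L
    val written = mutable.LinkedHashMap.empty[String, Seq[String]]
    for ((timeMs, records) <- batches) {
      val count = records.size.toLong // plays the role of rdd.count()
      if (count > 0) {
        written(root + "/tweets_" + timeMs) = records // plays the role of saveAsTextFile(...)
        numCollected += count
      }
    }
    (numCollected, written.toMap)
  }
}
```

Updating numTweetsCollected works in the real code because the function passed to foreachRDD runs on the driver; only the RDD actions inside it (count and saveAsTextFile) run on the executors. Since both actions evaluate the same RDD, persisting it before counting is one option to avoid computing it twice.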

3. Start the Stream

Nothing has actually happened yet.

Let's start the spark streaming context we have created next.

ssc.start()

Let's look at the Spark UI now and monitor the streaming job in action! Go to Clusters on the left, click on Spark UI and then Streaming.

numTweetsCollected // number of tweets collected so far

res11: Long = 468

Let's check again in a few seconds how many tweets have been collected so far.

numTweetsCollected // number of tweets collected so far

res12: Long = 859

4. Stop the Stream

Note that you could easily fill up disk space!!!

So let's stop the streaming job next.

ssc.stop(stopSparkContext = false) // got to stop soon!!!

Let's make sure that the Streaming UI is not active in the Clusters UI.

StreamingContext.getActive.foreach { _.stop(stopSparkContext = false) } // extra cautious stopping of all active streaming contexts

5. Examine Collected Tweets

Next let's examine what was saved in dbfs.

display(dbutils.fs.ls(outputDirectoryRoot))

| path | name | size |

|---|---|---|

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867067000/ | tweets_1605867067000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867068000/ | tweets_1605867068000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867069000/ | tweets_1605867069000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867070000/ | tweets_1605867070000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867071000/ | tweets_1605867071000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867072000/ | tweets_1605867072000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867073000/ | tweets_1605867073000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867074000/ | tweets_1605867074000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867075000/ | tweets_1605867075000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867076000/ | tweets_1605867076000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867077000/ | tweets_1605867077000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867078000/ | tweets_1605867078000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867079000/ | tweets_1605867079000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867080000/ | tweets_1605867080000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867081000/ | tweets_1605867081000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867082000/ | tweets_1605867082000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867083000/ | tweets_1605867083000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867084000/ | tweets_1605867084000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867085000/ | tweets_1605867085000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867086000/ | tweets_1605867086000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867087000/ | tweets_1605867087000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867088000/ | tweets_1605867088000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867089000/ | tweets_1605867089000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867090000/ | tweets_1605867090000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867091000/ | tweets_1605867091000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867092000/ | tweets_1605867092000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867093000/ | tweets_1605867093000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867094000/ | tweets_1605867094000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867095000/ | tweets_1605867095000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867096000/ | tweets_1605867096000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867097000/ | tweets_1605867097000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867098000/ | tweets_1605867098000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867099000/ | tweets_1605867099000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867100000/ | tweets_1605867100000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867101000/ | tweets_1605867101000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867102000/ | tweets_1605867102000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867103000/ | tweets_1605867103000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867104000/ | tweets_1605867104000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867105000/ | tweets_1605867105000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867106000/ | tweets_1605867106000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867107000/ | tweets_1605867107000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867108000/ | tweets_1605867108000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867109000/ | tweets_1605867109000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867110000/ | tweets_1605867110000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867111000/ | tweets_1605867111000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867112000/ | tweets_1605867112000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867113000/ | tweets_1605867113000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867114000/ | tweets_1605867114000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867115000/ | tweets_1605867115000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867116000/ | tweets_1605867116000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867117000/ | tweets_1605867117000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867118000/ | tweets_1605867118000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867119000/ | tweets_1605867119000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867120000/ | tweets_1605867120000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867121000/ | tweets_1605867121000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867122000/ | tweets_1605867122000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867123000/ | tweets_1605867123000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867124000/ | tweets_1605867124000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867125000/ | tweets_1605867125000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867126000/ | tweets_1605867126000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867127000/ | tweets_1605867127000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867128000/ | tweets_1605867128000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867129000/ | tweets_1605867129000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867130000/ | tweets_1605867130000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867131000/ | tweets_1605867131000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867132000/ | tweets_1605867132000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867133000/ | tweets_1605867133000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867134000/ | tweets_1605867134000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867135000/ | tweets_1605867135000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867136000/ | tweets_1605867136000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867137000/ | tweets_1605867137000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867138000/ | tweets_1605867138000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867139000/ | tweets_1605867139000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867140000/ | tweets_1605867140000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867141000/ | tweets_1605867141000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867142000/ | tweets_1605867142000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867143000/ | tweets_1605867143000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867144000/ | tweets_1605867144000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867145000/ | tweets_1605867145000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867146000/ | tweets_1605867146000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867147000/ | tweets_1605867147000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867148000/ | tweets_1605867148000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867149000/ | tweets_1605867149000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867150000/ | tweets_1605867150000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867151000/ | tweets_1605867151000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867152000/ | tweets_1605867152000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867153000/ | tweets_1605867153000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867154000/ | tweets_1605867154000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867155000/ | tweets_1605867155000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867156000/ | tweets_1605867156000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867157000/ | tweets_1605867157000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867158000/ | tweets_1605867158000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867159000/ | tweets_1605867159000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867160000/ | tweets_1605867160000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867161000/ | tweets_1605867161000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867162000/ | tweets_1605867162000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867163000/ | tweets_1605867163000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867164000/ | tweets_1605867164000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867165000/ | tweets_1605867165000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867166000/ | tweets_1605867166000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867167000/ | tweets_1605867167000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867168000/ | tweets_1605867168000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867169000/ | tweets_1605867169000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867170000/ | tweets_1605867170000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867171000/ | tweets_1605867171000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867172000/ | tweets_1605867172000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867173000/ | tweets_1605867173000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867174000/ | tweets_1605867174000/ | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867175000/ | tweets_1605867175000/ | 0.0 |

val tweetsDir = outputDirectoryRoot+"/tweets_1605867068000/" // use an existing directory; you may have to change the folder name based on the output above!

tweetsDir: String = /datasets/tweetsStreamTmp/tweets_1605867068000/

display(dbutils.fs.ls(tweetsDir))

| path | name | size |

|---|---|---|

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867068000/_SUCCESS | _SUCCESS | 0.0 |

| dbfs:/datasets/tweetsStreamTmp/tweets_1605867068000/part-00000 | part-00000 | 122395.0 |

sc.textFile(tweetsDir+"part-00000").count()

res17: Long = 31
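The listing above gives a rough measure of how much space the collector uses: part-00000 is 122395 bytes and holds 31 lines, i.e. 31 tweets as JSON strings, or roughly 3.9 KB per tweet. The sketch below turns that into a back-of-the-envelope projection; StorageSketch is a hypothetical name, and the tweet rate used in the usage note is hypothetical, not a measurement from this notebook.

```scala
// Back-of-the-envelope storage estimate from the numbers above:
// part-00000 is 122395 bytes and holds 31 tweets (one JSON string per line).
object StorageSketch {
  def bytesPerTweet(fileBytes: Long, lines: Long): Double = fileBytes.toDouble / lines

  // Projected bytes for a hypothetical tweet rate sustained for some number of seconds.
  def projectedBytes(bytesPerTweet: Double, tweetsPerSecond: Double, seconds: Long): Double =
    bytesPerTweet * tweetsPerSecond * seconds
}
```

For instance, at a hypothetical 50 tweets per second, about 3948 bytes per tweet adds up to roughly 0.7 GB per hour, which is why the stream should not be left running.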

val outJson = sqlContext.read.json(tweetsDir+"part-00000")

outJson: org.apache.spark.sql.DataFrame = [contributorsIDs: array<string>, createdAt: string ... 26 more fields]

outJson.printSchema()

root

|-- contributorsIDs: array (nullable = true)

| |-- element: string (containsNull = true)

|-- createdAt: string (nullable = true)

|-- currentUserRetweetId: long (nullable = true)

|-- displayTextRangeEnd: long (nullable = true)

|-- displayTextRangeStart: long (nullable = true)

|-- favoriteCount: long (nullable = true)

|-- hashtagEntities: array (nullable = true)

| |-- element: struct (containsNull = true)

| | |-- end: long (nullable = true)

| | |-- start: long (nullable = true)

| | |-- text: string (nullable = true)

|-- id: long (nullable = true)

|-- inReplyToScreenName: string (nullable = true)

|-- inReplyToStatusId: long (nullable = true)

|-- inReplyToUserId: long (nullable = true)

|-- isFavorited: boolean (nullable = true)

|-- isPossiblySensitive: boolean (nullable = true)

|-- isRetweeted: boolean (nullable = true)

|-- isTruncated: boolean (nullable = true)

|-- lang: string (nullable = true)

|-- mediaEntities: array (nullable = true)

| |-- element: struct (containsNull = true)

| | |-- displayURL: string (nullable = true)

| | |-- end: long (nullable = true)

| | |-- expandedURL: string (nullable = true)

| | |-- id: long (nullable = true)

| | |-- mediaURL: string (nullable = true)

| | |-- mediaURLHttps: string (nullable = true)

| | |-- sizes: struct (nullable = true)

| | | |-- 0: struct (nullable = true)

| | | | |-- height: long (nullable = true)

| | | | |-- resize: long (nullable = true)

| | | | |-- width: long (nullable = true)

| | | |-- 1: struct (nullable = true)

| | | | |-- height: long (nullable = true)

| | | | |-- resize: long (nullable = true)

| | | | |-- width: long (nullable = true)

| | | |-- 2: struct (nullable = true)

| | | | |-- height: long (nullable = true)

| | | | |-- resize: long (nullable = true)

| | | | |-- width: long (nullable = true)

| | | |-- 3: struct (nullable = true)

| | | | |-- height: long (nullable = true)

| | | | |-- resize: long (nullable = true)

| | | | |-- width: long (nullable = true)

| | |-- start: long (nullable = true)

| | |-- type: string (nullable = true)

| | |-- url: string (nullable = true)

| | |-- videoAspectRatioHeight: long (nullable = true)

| | |-- videoAspectRatioWidth: long (nullable = true)

| | |-- videoDurationMillis: long (nullable = true)

| | |-- videoVariants: array (nullable = true)

| | | |-- element: string (containsNull = true)

|-- quotedStatus: struct (nullable = true)

| |-- contributorsIDs: array (nullable = true)

| | |-- element: string (containsNull = true)

| |-- createdAt: string (nullable = true)

| |-- currentUserRetweetId: long (nullable = true)

| |-- displayTextRangeEnd: long (nullable = true)

| |-- displayTextRangeStart: long (nullable = true)

| |-- favoriteCount: long (nullable = true)

| |-- hashtagEntities: array (nullable = true)

| | |-- element: string (containsNull = true)

| |-- id: long (nullable = true)

| |-- inReplyToScreenName: string (nullable = true)

| |-- inReplyToStatusId: long (nullable = true)

| |-- inReplyToUserId: long (nullable = true)

| |-- isFavorited: boolean (nullable = true)

| |-- isPossiblySensitive: boolean (nullable = true)

| |-- isRetweeted: boolean (nullable = true)

| |-- isTruncated: boolean (nullable = true)

| |-- lang: string (nullable = true)

| |-- mediaEntities: array (nullable = true)

| | |-- element: struct (containsNull = true)

| | | |-- displayURL: string (nullable = true)

| | | |-- end: long (nullable = true)

| | | |-- expandedURL: string (nullable = true)

| | | |-- id: long (nullable = true)

| | | |-- mediaURL: string (nullable = true)

| | | |-- mediaURLHttps: string (nullable = true)

| | | |-- sizes: struct (nullable = true)

| | | | |-- 0: struct (nullable = true)

| | | | | |-- height: long (nullable = true)

| | | | | |-- resize: long (nullable = true)

| | | | | |-- width: long (nullable = true)

| | | | |-- 1: struct (nullable = true)

| | | | | |-- height: long (nullable = true)

| | | | | |-- resize: long (nullable = true)

| | | | | |-- width: long (nullable = true)

| | | | |-- 2: struct (nullable = true)

| | | | | |-- height: long (nullable = true)

| | | | | |-- resize: long (nullable = true)

| | | | | |-- width: long (nullable = true)

| | | | |-- 3: struct (nullable = true)

| | | | | |-- height: long (nullable = true)

| | | | | |-- resize: long (nullable = true)

| | | | | |-- width: long (nullable = true)

| | | |-- start: long (nullable = true)

| | | |-- type: string (nullable = true)

| | | |-- url: string (nullable = true)

| | | |-- videoAspectRatioHeight: long (nullable = true)

| | | |-- videoAspectRatioWidth: long (nullable = true)

| | | |-- videoDurationMillis: long (nullable = true)

| | | |-- videoVariants: array (nullable = true)

| | | | |-- element: string (containsNull = true)

| |-- place: struct (nullable = true)

| | |-- boundingBoxCoordinates: array (nullable = true)

| | | |-- element: array (containsNull = true)

| | | | |-- element: struct (containsNull = true)

| | | | | |-- latitude: double (nullable = true)

| | | | | |-- longitude: double (nullable = true)

| | |-- boundingBoxType: string (nullable = true)

| | |-- country: string (nullable = true)

| | |-- countryCode: string (nullable = true)

| | |-- fullName: string (nullable = true)

| | |-- id: string (nullable = true)

| | |-- name: string (nullable = true)

| | |-- placeType: string (nullable = true)

| | |-- url: string (nullable = true)

| |-- quotedStatusId: long (nullable = true)

| |-- retweetCount: long (nullable = true)

| |-- source: string (nullable = true)

| |-- symbolEntities: array (nullable = true)

| | |-- element: string (containsNull = true)

| |-- text: string (nullable = true)

| |-- urlEntities: array (nullable = true)

| | |-- element: string (containsNull = true)

| |-- user: struct (nullable = true)

| | |-- createdAt: string (nullable = true)

| | |-- description: string (nullable = true)

| | |-- descriptionURLEntities: array (nullable = true)

| | | |-- element: string (containsNull = true)

| | |-- favouritesCount: long (nullable = true)

| | |-- followersCount: long (nullable = true)

| | |-- friendsCount: long (nullable = true)

| | |-- id: long (nullable = true)

| | |-- isContributorsEnabled: boolean (nullable = true)

| | |-- isDefaultProfile: boolean (nullable = true)

| | |-- isDefaultProfileImage: boolean (nullable = true)

| | |-- isFollowRequestSent: boolean (nullable = true)

| | |-- isGeoEnabled: boolean (nullable = true)

| | |-- isProtected: boolean (nullable = true)

| | |-- isVerified: boolean (nullable = true)

| | |-- listedCount: long (nullable = true)

| | |-- location: string (nullable = true)

| | |-- name: string (nullable = true)

| | |-- profileBackgroundColor: string (nullable = true)

| | |-- profileBackgroundImageUrl: string (nullable = true)

| | |-- profileBackgroundImageUrlHttps: string (nullable = true)

| | |-- profileBackgroundTiled: boolean (nullable = true)

| | |-- profileBannerImageUrl: string (nullable = true)

| | |-- profileImageUrl: string (nullable = true)

| | |-- profileImageUrlHttps: string (nullable = true)

| | |-- profileLinkColor: string (nullable = true)

| | |-- profileSidebarBorderColor: string (nullable = true)

| | |-- profileSidebarFillColor: string (nullable = true)

| | |-- profileTextColor: string (nullable = true)

| | |-- profileUseBackgroundImage: boolean (nullable = true)

| | |-- screenName: string (nullable = true)

| | |-- showAllInlineMedia: boolean (nullable = true)

| | |-- statusesCount: long (nullable = true)

| | |-- translator: boolean (nullable = true)

| | |-- url: string (nullable = true)

| | |-- utcOffset: long (nullable = true)

| |-- userMentionEntities: array (nullable = true)

| | |-- element: struct (containsNull = true)

| | | |-- end: long (nullable = true)

| | | |-- id: long (nullable = true)

| | | |-- name: string (nullable = true)

| | | |-- screenName: string (nullable = true)

| | | |-- start: long (nullable = true)

|-- quotedStatusId: long (nullable = true)

|-- quotedStatusPermalink: struct (nullable = true)

| |-- displayURL: string (nullable = true)

| |-- end: long (nullable = true)

| |-- expandedURL: string (nullable = true)

| |-- start: long (nullable = true)

| |-- url: string (nullable = true)

|-- retweetCount: long (nullable = true)

|-- retweetedStatus: struct (nullable = true)

| |-- contributorsIDs: array (nullable = true)

| | |-- element: string (containsNull = true)

| |-- createdAt: string (nullable = true)

| |-- currentUserRetweetId: long (nullable = true)

| |-- displayTextRangeEnd: long (nullable = true)

| |-- displayTextRangeStart: long (nullable = true)

| |-- favoriteCount: long (nullable = true)

| |-- hashtagEntities: array (nullable = true)

| | |-- element: struct (containsNull = true)

| | | |-- end: long (nullable = true)

| | | |-- start: long (nullable = true)

| | | |-- text: string (nullable = true)

| |-- id: long (nullable = true)

| |-- inReplyToScreenName: string (nullable = true)

| |-- inReplyToStatusId: long (nullable = true)

| |-- inReplyToUserId: long (nullable = true)

| |-- isFavorited: boolean (nullable = true)

| |-- isPossiblySensitive: boolean (nullable = true)

| |-- isRetweeted: boolean (nullable = true)

| |-- isTruncated: boolean (nullable = true)

| |-- lang: string (nullable = true)

| |-- mediaEntities: array (nullable = true)

| | |-- element: struct (containsNull = true)

| | | |-- displayURL: string (nullable = true)

| | | |-- end: long (nullable = true)

| | | |-- expandedURL: string (nullable = true)

| | | |-- id: long (nullable = true)

| | | |-- mediaURL: string (nullable = true)

| | | |-- mediaURLHttps: string (nullable = true)

| | | |-- sizes: struct (nullable = true)

| | | | |-- 0: struct (nullable = true)

| | | | | |-- height: long (nullable = true)

| | | | | |-- resize: long (nullable = true)

| | | | | |-- width: long (nullable = true)

| | | | |-- 1: struct (nullable = true)

| | | | | |-- height: long (nullable = true)

| | | | | |-- resize: long (nullable = true)

| | | | | |-- width: long (nullable = true)

| | | | |-- 2: struct (nullable = true)

| | | | | |-- height: long (nullable = true)

| | | | | |-- resize: long (nullable = true)

| | | | | |-- width: long (nullable = true)

| | | | |-- 3: struct (nullable = true)

| | | | | |-- height: long (nullable = true)

| | | | | |-- resize: long (nullable = true)

| | | | | |-- width: long (nullable = true)

| | | |-- start: long (nullable = true)

| | | |-- type: string (nullable = true)

| | | |-- url: string (nullable = true)

| | | |-- videoAspectRatioHeight: long (nullable = true)

| | | |-- videoAspectRatioWidth: long (nullable = true)

| | | |-- videoDurationMillis: long (nullable = true)

| | | |-- videoVariants: array (nullable = true)

| | | | |-- element: string (containsNull = true)

| |-- quotedStatusId: long (nullable = true)

| |-- retweetCount: long (nullable = true)

| |-- source: string (nullable = true)

| |-- symbolEntities: array (nullable = true)

| | |-- element: string (containsNull = true)

| |-- text: string (nullable = true)

| |-- urlEntities: array (nullable = true)

| | |-- element: struct (containsNull = true)

| | | |-- displayURL: string (nullable = true)

| | | |-- end: long (nullable = true)

| | | |-- expandedURL: string (nullable = true)

| | | |-- start: long (nullable = true)

| | | |-- url: string (nullable = true)

| |-- user: struct (nullable = true)

| | |-- createdAt: string (nullable = true)

| | |-- description: string (nullable = true)

| | |-- descriptionURLEntities: array (nullable = true)

| | | |-- element: string (containsNull = true)

| | |-- favouritesCount: long (nullable = true)

| | |-- followersCount: long (nullable = true)

| | |-- friendsCount: long (nullable = true)

| | |-- id: long (nullable = true)

| | |-- isContributorsEnabled: boolean (nullable = true)

| | |-- isDefaultProfile: boolean (nullable = true)

| | |-- isDefaultProfileImage: boolean (nullable = true)

| | |-- isFollowRequestSent: boolean (nullable = true)

| | |-- isGeoEnabled: boolean (nullable = true)

| | |-- isProtected: boolean (nullable = true)

| | |-- isVerified: boolean (nullable = true)

| | |-- listedCount: long (nullable = true)

| | |-- location: string (nullable = true)

| | |-- name: string (nullable = true)

| | |-- profileBackgroundColor: string (nullable = true)

| | |-- profileBackgroundImageUrl: string (nullable = true)

| | |-- profileBackgroundImageUrlHttps: string (nullable = true)

| | |-- profileBackgroundTiled: boolean (nullable = true)

| | |-- profileBannerImageUrl: string (nullable = true)

| | |-- profileImageUrl: string (nullable = true)

| | |-- profileImageUrlHttps: string (nullable = true)

| | |-- profileLinkColor: string (nullable = true)

| | |-- profileSidebarBorderColor: string (nullable = true)

| | |-- profileSidebarFillColor: string (nullable = true)

| | |-- profileTextColor: string (nullable = true)

| | |-- profileUseBackgroundImage: boolean (nullable = true)

| | |-- screenName: string (nullable = true)

| | |-- showAllInlineMedia: boolean (nullable = true)

| | |-- statusesCount: long (nullable = true)

| | |-- translator: boolean (nullable = true)

| | |-- url: string (nullable = true)

| | |-- utcOffset: long (nullable = true)

| |-- userMentionEntities: array (nullable = true)

| | |-- element: struct (containsNull = true)

| | | |-- end: long (nullable = true)

| | | |-- id: long (nullable = true)

| | | |-- name: string (nullable = true)

| | | |-- screenName: string (nullable = true)

| | | |-- start: long (nullable = true)

|-- source: string (nullable = true)

|-- symbolEntities: array (nullable = true)

| |-- element: string (containsNull = true)

|-- text: string (nullable = true)

|-- urlEntities: array (nullable = true)

| |-- element: struct (containsNull = true)

| | |-- displayURL: string (nullable = true)

| | |-- end: long (nullable = true)

| | |-- expandedURL: string (nullable = true)

| | |-- start: long (nullable = true)

| | |-- url: string (nullable = true)

|-- user: struct (nullable = true)

| |-- createdAt: string (nullable = true)

| |-- description: string (nullable = true)

| |-- descriptionURLEntities: array (nullable = true)