Overview

Structured Streaming is a scalable and fault-tolerant stream processing engine built on the Spark SQL engine. You can express your streaming computation the same way you would express a batch computation on static data. The Spark SQL engine will take care of running it incrementally and continuously and updating the final result as streaming data continues to arrive. You can use the Dataset/DataFrame API in Scala, Java, Python or R to express streaming aggregations, event-time windows, stream-to-batch joins, etc. The computation is executed on the same optimized Spark SQL engine. Finally, the system ensures end-to-end exactly-once fault-tolerance guarantees through checkpointing and Write Ahead Logs. In short, Structured Streaming provides fast, scalable, fault-tolerant, end-to-end exactly-once stream processing without the user having to reason about streaming.

In this guide, we are going to walk you through the programming model and the APIs. First, let’s start with a simple example - a streaming word count.

Programming Model

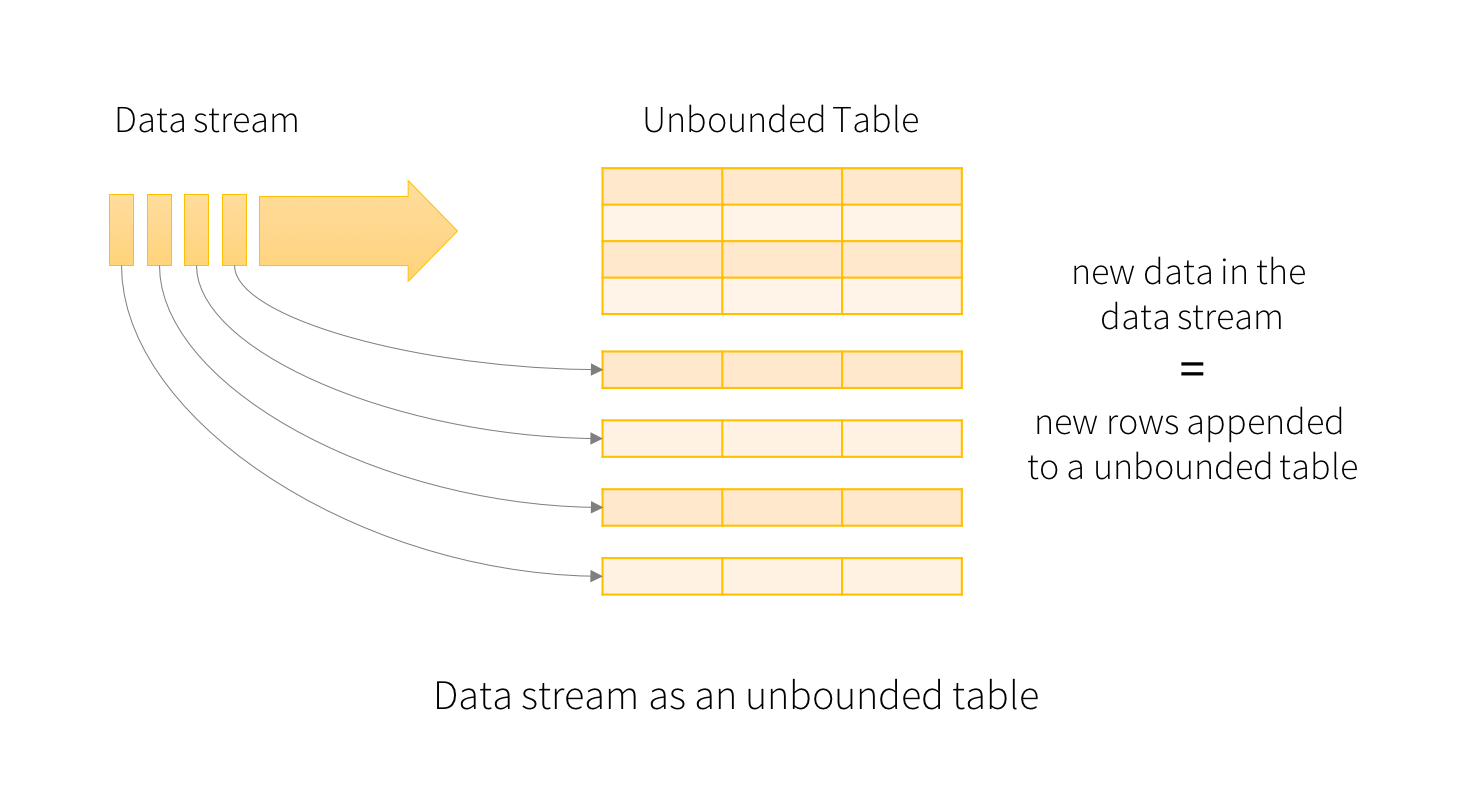

The key idea in Structured Streaming is to treat a live data stream as a table that is being continuously appended. This leads to a new stream processing model that is very similar to a batch processing model. You express your streaming computation as a standard batch-like query, as you would on a static table, and Spark runs it as an incremental query on the unbounded input table. Let’s understand this model in more detail.

Basic Concepts

Consider the input data stream as the “Input Table”. Every data item that is arriving on the stream is like a new row being appended to the Input Table.

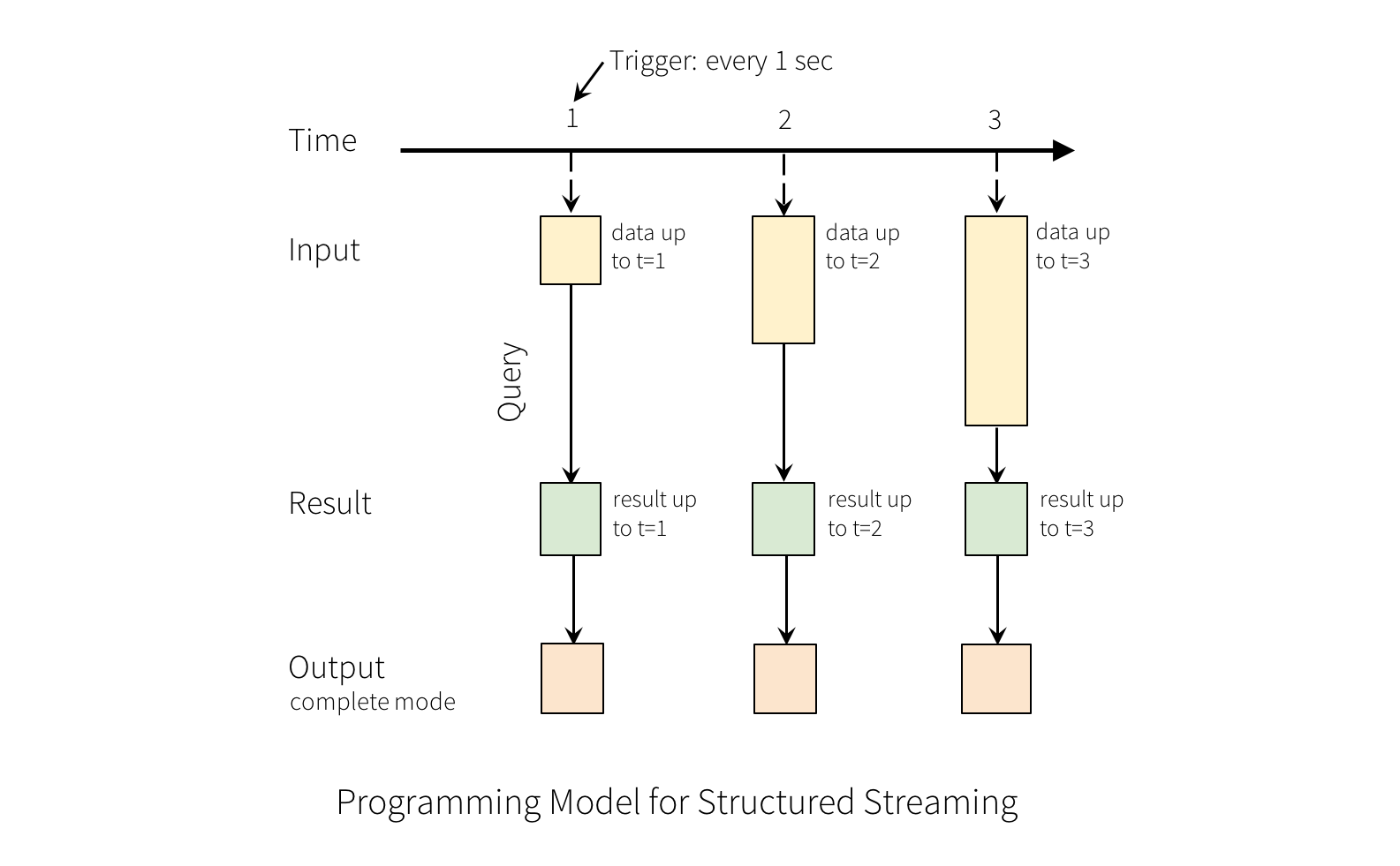

A query on the input will generate the “Result Table”. Every trigger interval (say, every 1 second), new rows get appended to the Input Table, which eventually updates the Result Table. Whenever the result table gets updated, we would want to write the changed result rows to an external sink.

The “Output” is defined as what gets written out to the external storage. The output can be defined in one of the following modes:

- Complete Mode - The entire updated Result Table will be written to the external storage. It is up to the storage connector to decide how to handle writing of the entire table.
- Append Mode - Only the new rows appended to the Result Table since the last trigger will be written to the external storage. This is applicable only to queries where existing rows in the Result Table are not expected to change.
- Update Mode - Only the rows that were updated in the Result Table since the last trigger will be written to the external storage (available since Spark 2.1.1). This differs from Complete Mode in that only the rows that have changed since the last trigger are output. If the query doesn’t contain aggregations, it is equivalent to Append Mode.
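To make the difference between the three modes concrete, here is a minimal sketch in plain Scala that models a Result Table as an ordinary collection and computes what each mode would emit after a trigger. It does not use Spark: the object `OutputModesSketch` and its helper names are hypothetical, chosen only for illustration. Append Mode is modelled on a non-aggregating result, since it only applies when existing rows never change.

```scala
// Plain-Scala model of the three output modes; all names are illustrative, not Spark APIs.
object OutputModesSketch {
  // The Result Table of a running word count: word -> count.
  type ResultTable = Map[String, Long]

  // Complete Mode: emit the entire updated Result Table.
  def completeOutput(current: ResultTable): ResultTable = current

  // Update Mode: emit only rows that are new or whose count changed since the last trigger.
  def updateOutput(previous: ResultTable, current: ResultTable): ResultTable =
    current.filter { case (word, count) => !previous.get(word).contains(count) }

  // Append Mode: emit only the rows added since the last trigger. Modelled on a
  // non-aggregating result (a growing sequence of rows), where old rows never change.
  def appendOutput(previousRows: Vector[String], currentRows: Vector[String]): Vector[String] =
    currentRows.drop(previousRows.length)

  def main(args: Array[String]): Unit = {
    val afterTrigger1: ResultTable = Map("cat" -> 1L, "pig" -> 1L)
    val afterTrigger2: ResultTable = Map("cat" -> 2L, "pig" -> 1L, "dog" -> 1L)

    println(completeOutput(afterTrigger2))              // all three rows
    println(updateOutput(afterTrigger1, afterTrigger2)) // only the cat and dog rows
    println(appendOutput(Vector("cat pig"), Vector("cat pig", "cat dog"))) // only the new row
  }
}
```

In a notebook, the sketch can be run with `OutputModesSketch.main(Array.empty)`. In the second trigger the `pig` row is unchanged, so Update Mode leaves it out while Complete Mode still writes it.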

Note that each mode is applicable only to certain types of queries; this is discussed in detail in the section on output modes. To illustrate the use of this model, let’s understand it in the context of the Quick Example below.

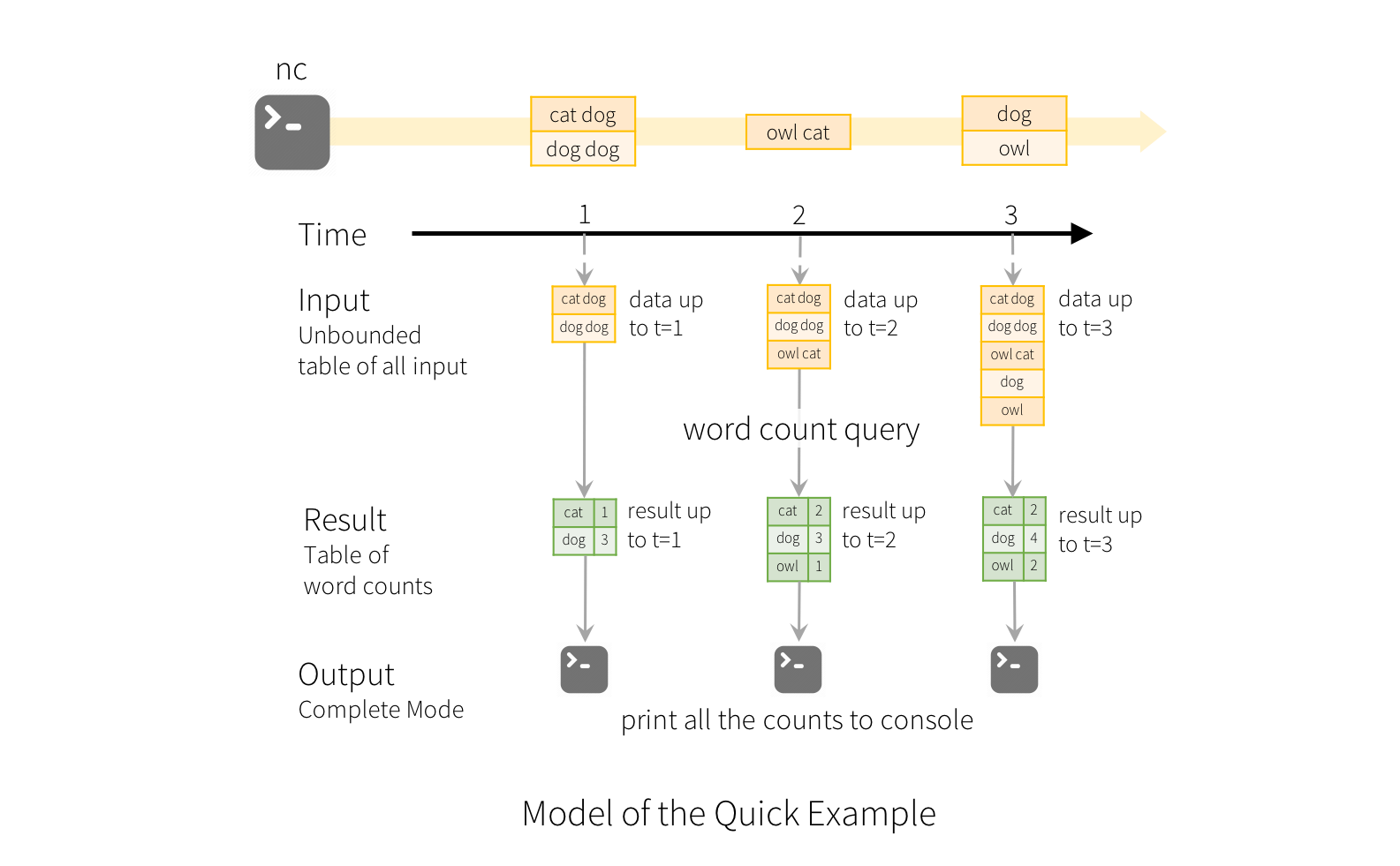

The first streamingLines DataFrame is the input table, and the final wordCounts DataFrame is the result table. Note that the query on the streamingLines DataFrame to generate wordCounts is exactly the same as it would be on a static DataFrame. However, when this query is started, Spark will continuously check for new data from the directory. If there is new data, Spark will run an “incremental” query that combines the previous running counts with the new data to compute updated counts, as shown in the figure above.
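The idea of an incremental query can be sketched without Spark. The plain-Scala example below is an illustration under stated assumptions, not how Spark implements it: it folds each new batch of lines into the previous running counts and checks that the result matches a batch count over all the data seen so far. The object and function names are hypothetical; the line format (`timestamp; word word`) follows the sample files used in this notebook.

```scala
// Plain-Scala illustration of an incremental word count; names are hypothetical.
object IncrementalWordCountSketch {
  // Extract the words that follow the ";" separator, e.g. "…; cat pig" -> Seq("cat", "pig").
  def wordsOf(line: String): Seq[String] =
    line.split(";", 2) match {
      case Array(_, rest) => rest.trim.split("\\s+").filter(_.nonEmpty).toSeq
      case _              => Seq.empty
    }

  // Batch query: count every word in a static collection of lines.
  def batchCount(lines: Seq[String]): Map[String, Long] =
    lines.flatMap(wordsOf).groupBy(identity).map { case (w, ws) => w -> ws.size.toLong }

  // Incremental step: combine the previous running counts with the counts of the new lines only.
  def incrementalStep(running: Map[String, Long], newLines: Seq[String]): Map[String, Long] =
    batchCount(newLines).foldLeft(running) { case (acc, (w, n)) =>
      acc.updated(w, acc.getOrElse(w, 0L) + n)
    }

  def main(args: Array[String]): Unit = {
    val trigger1 = Seq("2020-11-20 13:20:16+00:00; cat pig")
    val trigger2 = Seq("2020-11-20 13:22:07+00:00; cat dog")

    val afterTrigger1 = incrementalStep(Map.empty, trigger1)
    val afterTrigger2 = incrementalStep(afterTrigger1, trigger2)

    println(afterTrigger2)
    // The incremental result agrees with a batch query over all lines seen so far.
    println(afterTrigger2 == batchCount(trigger1 ++ trigger2))
  }
}
```

Only the new lines are scanned at each step, while the state carried between triggers is the running count table.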

This model is significantly different from many other stream processing engines. Many streaming systems require the user to maintain running aggregations themselves, and thus to reason about fault tolerance and data consistency (at-least-once, at-most-once, or exactly-once). In this model, Spark is responsible for updating the Result Table when there is new data, thus relieving the users from reasoning about it. As an example, let’s see how this model handles event-time based processing and late-arriving data.

Quick Example

Let’s say you want to maintain a running word count of text data received from a file writer that is writing files into a directory datasets/streamingFiles in the distributed file system. Let’s see how you can express this using Structured Streaming.
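As a preview, the whole pipeline might look roughly like the sketch below. It assumes a notebook or spark-shell where a Spark session is already active, that the directory `/datasets/streamingFiles` exists, and that each line has the form `timestamp; word word` as in the sample files. The `console` sink and the intermediate names `words` and `query` are choices made for illustration only.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.{col, explode, split, trim}

// Assumption: an active session exists (notebook or spark-shell); getOrCreate() reuses it.
val spark = SparkSession.builder().getOrCreate()

// Input table: one row per line of text, in a single string column named "value".
val streamingLines = spark.readStream
  .format("text")
  .option("maxFilesPerTrigger", 1) // consider at most one new file per trigger
  .load("/datasets/streamingFiles")

// Drop the timestamp before ";" and split the remainder into one word per row.
val words = streamingLines
  .select(trim(split(col("value"), ";").getItem(1)).as("animals"))
  .select(explode(split(col("animals"), " ")).as("word"))

// Result table: running count per word, written exactly as a batch query would be.
val wordCounts = words.groupBy("word").count()

// Start the query; Complete Mode rewrites the full result table on every trigger.
val query = wordCounts.writeStream
  .outputMode("complete")
  .format("console")
  .start()

// query.awaitTermination() // block until the query stops
// query.stop()             // stop the query when done
```

Complete Mode is used here because the query is an aggregation whose existing rows change as new files arrive.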

Let’s walk through the example step-by-step and understand how it works.

First we need to start a file writing job in the companion notebook 037a_AnimalNamesStructStreamingFiles and then return here.

```scala
display(dbutils.fs.ls("/datasets/streamingFiles"))
```

| path | name | size |

|---|---|---|

| dbfs:/datasets/streamingFiles/20_10.log | 20_10.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_12.log | 20_12.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_14.log | 20_14.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_16.log | 20_16.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_18.log | 20_18.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_20.log | 20_20.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_22.log | 20_22.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_24.log | 20_24.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_26.log | 20_26.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_28.log | 20_28.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_30.log | 20_30.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_32.log | 20_32.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_34.log | 20_34.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_36.log | 20_36.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_38.log | 20_38.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_40.log | 20_40.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_42.log | 20_42.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_44.log | 20_44.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_46.log | 20_46.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_47.log | 20_47.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_48.log | 20_48.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_49.log | 20_49.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_50.log | 20_50.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_51.log | 20_51.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_52.log | 20_52.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_53.log | 20_53.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_54.log | 20_54.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_55.log | 20_55.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_56.log | 20_56.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_57.log | 20_57.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_58.log | 20_58.log | 35.0 |

| dbfs:/datasets/streamingFiles/20_59.log | 20_59.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_00.log | 21_00.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_01.log | 21_01.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_02.log | 21_02.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_03.log | 21_03.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_04.log | 21_04.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_05.log | 21_05.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_06.log | 21_06.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_07.log | 21_07.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_08.log | 21_08.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_09.log | 21_09.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_10.log | 21_10.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_11.log | 21_11.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_12.log | 21_12.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_13.log | 21_13.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_14.log | 21_14.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_15.log | 21_15.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_16.log | 21_16.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_17.log | 21_17.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_18.log | 21_18.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_19.log | 21_19.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_20.log | 21_20.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_21.log | 21_21.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_22.log | 21_22.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_23.log | 21_23.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_24.log | 21_24.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_25.log | 21_25.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_26.log | 21_26.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_27.log | 21_27.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_28.log | 21_28.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_29.log | 21_29.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_30.log | 21_30.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_31.log | 21_31.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_32.log | 21_32.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_33.log | 21_33.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_34.log | 21_34.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_35.log | 21_35.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_36.log | 21_36.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_37.log | 21_37.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_38.log | 21_38.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_39.log | 21_39.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_40.log | 21_40.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_41.log | 21_41.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_42.log | 21_42.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_43.log | 21_43.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_44.log | 21_44.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_45.log | 21_45.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_46.log | 21_46.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_47.log | 21_47.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_48.log | 21_48.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_49.log | 21_49.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_50.log | 21_50.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_51.log | 21_51.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_52.log | 21_52.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_53.log | 21_53.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_54.log | 21_54.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_55.log | 21_55.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_56.log | 21_56.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_57.log | 21_57.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_58.log | 21_58.log | 35.0 |

| dbfs:/datasets/streamingFiles/21_59.log | 21_59.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_00.log | 22_00.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_01.log | 22_01.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_02.log | 22_02.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_03.log | 22_03.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_04.log | 22_04.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_05.log | 22_05.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_06.log | 22_06.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_07.log | 22_07.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_08.log | 22_08.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_09.log | 22_09.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_10.log | 22_10.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_11.log | 22_11.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_12.log | 22_12.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_13.log | 22_13.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_14.log | 22_14.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_15.log | 22_15.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_16.log | 22_16.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_17.log | 22_17.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_18.log | 22_18.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_19.log | 22_19.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_20.log | 22_20.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_21.log | 22_21.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_22.log | 22_22.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_23.log | 22_23.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_24.log | 22_24.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_25.log | 22_25.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_26.log | 22_26.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_27.log | 22_27.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_28.log | 22_28.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_29.log | 22_29.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_30.log | 22_30.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_31.log | 22_31.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_32.log | 22_32.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_33.log | 22_33.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_34.log | 22_34.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_35.log | 22_35.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_36.log | 22_36.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_37.log | 22_37.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_38.log | 22_38.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_39.log | 22_39.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_40.log | 22_40.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_41.log | 22_41.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_42.log | 22_42.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_43.log | 22_43.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_44.log | 22_44.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_45.log | 22_45.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_46.log | 22_46.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_47.log | 22_47.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_48.log | 22_48.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_49.log | 22_49.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_50.log | 22_50.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_51.log | 22_51.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_52.log | 22_52.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_53.log | 22_53.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_54.log | 22_54.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_55.log | 22_55.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_56.log | 22_56.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_57.log | 22_57.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_58.log | 22_58.log | 35.0 |

| dbfs:/datasets/streamingFiles/22_59.log | 22_59.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_00.log | 23_00.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_01.log | 23_01.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_02.log | 23_02.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_03.log | 23_03.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_04.log | 23_04.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_05.log | 23_05.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_06.log | 23_06.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_07.log | 23_07.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_08.log | 23_08.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_09.log | 23_09.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_10.log | 23_10.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_11.log | 23_11.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_12.log | 23_12.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_13.log | 23_13.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_14.log | 23_14.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_15.log | 23_15.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_16.log | 23_16.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_17.log | 23_17.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_18.log | 23_18.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_19.log | 23_19.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_20.log | 23_20.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_21.log | 23_21.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_22.log | 23_22.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_23.log | 23_23.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_24.log | 23_24.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_25.log | 23_25.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_26.log | 23_26.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_27.log | 23_27.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_28.log | 23_28.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_29.log | 23_29.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_30.log | 23_30.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_31.log | 23_31.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_32.log | 23_32.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_33.log | 23_33.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_34.log | 23_34.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_35.log | 23_35.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_36.log | 23_36.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_37.log | 23_37.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_38.log | 23_38.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_39.log | 23_39.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_40.log | 23_40.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_41.log | 23_41.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_42.log | 23_42.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_43.log | 23_43.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_44.log | 23_44.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_45.log | 23_45.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_46.log | 23_46.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_47.log | 23_47.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_48.log | 23_48.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_49.log | 23_49.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_50.log | 23_50.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_51.log | 23_51.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_52.log | 23_52.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_53.log | 23_53.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_54.log | 23_54.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_55.log | 23_55.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_56.log | 23_56.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_57.log | 23_57.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_58.log | 23_58.log | 35.0 |

| dbfs:/datasets/streamingFiles/23_59.log | 23_59.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_00.log | 24_00.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_01.log | 24_01.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_02.log | 24_02.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_03.log | 24_03.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_04.log | 24_04.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_05.log | 24_05.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_06.log | 24_06.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_07.log | 24_07.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_08.log | 24_08.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_09.log | 24_09.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_10.log | 24_10.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_11.log | 24_11.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_12.log | 24_12.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_13.log | 24_13.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_14.log | 24_14.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_15.log | 24_15.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_16.log | 24_16.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_17.log | 24_17.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_18.log | 24_18.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_19.log | 24_19.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_20.log | 24_20.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_21.log | 24_21.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_22.log | 24_22.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_23.log | 24_23.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_24.log | 24_24.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_25.log | 24_25.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_27.log | 24_27.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_29.log | 24_29.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_31.log | 24_31.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_33.log | 24_33.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_35.log | 24_35.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_37.log | 24_37.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_39.log | 24_39.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_41.log | 24_41.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_43.log | 24_43.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_45.log | 24_45.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_47.log | 24_47.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_49.log | 24_49.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_50.log | 24_50.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_51.log | 24_51.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_53.log | 24_53.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_55.log | 24_55.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_57.log | 24_57.log | 35.0 |

| dbfs:/datasets/streamingFiles/24_59.log | 24_59.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_01.log | 25_01.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_03.log | 25_03.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_05.log | 25_05.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_07.log | 25_07.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_09.log | 25_09.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_11.log | 25_11.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_13.log | 25_13.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_15.log | 25_15.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_17.log | 25_17.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_19.log | 25_19.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_21.log | 25_21.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_23.log | 25_23.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_25.log | 25_25.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_27.log | 25_27.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_29.log | 25_29.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_31.log | 25_31.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_33.log | 25_33.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_35.log | 25_35.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_37.log | 25_37.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_39.log | 25_39.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_41.log | 25_41.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_43.log | 25_43.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_45.log | 25_45.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_47.log | 25_47.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_49.log | 25_49.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_51.log | 25_51.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_53.log | 25_53.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_55.log | 25_55.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_57.log | 25_57.log | 35.0 |

| dbfs:/datasets/streamingFiles/25_59.log | 25_59.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_01.log | 26_01.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_03.log | 26_03.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_05.log | 26_05.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_07.log | 26_07.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_09.log | 26_09.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_11.log | 26_11.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_13.log | 26_13.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_15.log | 26_15.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_17.log | 26_17.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_19.log | 26_19.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_21.log | 26_21.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_23.log | 26_23.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_25.log | 26_25.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_27.log | 26_27.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_29.log | 26_29.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_31.log | 26_31.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_33.log | 26_33.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_35.log | 26_35.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_37.log | 26_37.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_39.log | 26_39.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_41.log | 26_41.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_43.log | 26_43.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_45.log | 26_45.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_47.log | 26_47.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_49.log | 26_49.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_51.log | 26_51.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_53.log | 26_53.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_55.log | 26_55.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_57.log | 26_57.log | 35.0 |

| dbfs:/datasets/streamingFiles/26_59.log | 26_59.log | 35.0 |

| dbfs:/datasets/streamingFiles/27_01.log | 27_01.log | 35.0 |

| dbfs:/datasets/streamingFiles/27_03.log | 27_03.log | 35.0 |

| dbfs:/datasets/streamingFiles/27_05.log | 27_05.log | 35.0 |

| dbfs:/datasets/streamingFiles/27_07.log | 27_07.log | 35.0 |

| dbfs:/datasets/streamingFiles/27_09.log | 27_09.log | 35.0 |

| dbfs:/datasets/streamingFiles/27_11.log | 27_11.log | 35.0 |

| dbfs:/datasets/streamingFiles/27_13.log | 27_13.log | 35.0 |

| dbfs:/datasets/streamingFiles/27_15.log | 27_15.log | 35.0 |

| dbfs:/datasets/streamingFiles/27_17.log | 27_17.log | 35.0 |

| dbfs:/datasets/streamingFiles/27_19.log | 27_19.log | 35.0 |

| dbfs:/datasets/streamingFiles/27_21.log | 27_21.log | 35.0 |

| dbfs:/datasets/streamingFiles/27_23.log | 27_23.log | 35.0 |

| dbfs:/datasets/streamingFiles/27_25.log | 27_25.log | 35.0 |

| dbfs:/datasets/streamingFiles/27_27.log | 27_27.log | 35.0 |

| dbfs:/datasets/streamingFiles/27_29.log | 27_29.log | 35.0 |

| dbfs:/datasets/streamingFiles/27_31.log | 27_31.log | 35.0 |

| dbfs:/datasets/streamingFiles/27_33.log | 27_33.log | 35.0 |

| dbfs:/datasets/streamingFiles/27_35.log | 27_35.log | 35.0 |

| dbfs:/datasets/streamingFiles/27_37.log | 27_37.log | 35.0 |

| dbfs:/datasets/streamingFiles/27_39.log | 27_39.log | 35.0 |

| dbfs:/datasets/streamingFiles/27_41.log | 27_41.log | 35.0 |

| dbfs:/datasets/streamingFiles/27_43.log | 27_43.log | 35.0 |

| dbfs:/datasets/streamingFiles/27_45.log | 27_45.log | 35.0 |

| dbfs:/datasets/streamingFiles/27_47.log | 27_47.log | 35.0 |

| dbfs:/datasets/streamingFiles/27_50.log | 27_50.log | 35.0 |

| dbfs:/datasets/streamingFiles/27_52.log | 27_52.log | 35.0 |

| dbfs:/datasets/streamingFiles/27_54.log | 27_54.log | 35.0 |

| dbfs:/datasets/streamingFiles/27_56.log | 27_56.log | 35.0 |

| dbfs:/datasets/streamingFiles/27_58.log | 27_58.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_00.log | 28_00.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_02.log | 28_02.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_04.log | 28_04.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_06.log | 28_06.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_08.log | 28_08.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_10.log | 28_10.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_12.log | 28_12.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_14.log | 28_14.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_16.log | 28_16.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_18.log | 28_18.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_20.log | 28_20.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_22.log | 28_22.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_24.log | 28_24.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_26.log | 28_26.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_28.log | 28_28.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_30.log | 28_30.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_32.log | 28_32.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_34.log | 28_34.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_36.log | 28_36.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_38.log | 28_38.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_40.log | 28_40.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_42.log | 28_42.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_44.log | 28_44.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_46.log | 28_46.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_48.log | 28_48.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_50.log | 28_50.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_52.log | 28_52.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_54.log | 28_54.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_56.log | 28_56.log | 35.0 |

| dbfs:/datasets/streamingFiles/28_58.log | 28_58.log | 35.0 |

| dbfs:/datasets/streamingFiles/29_00.log | 29_00.log | 35.0 |

| dbfs:/datasets/streamingFiles/29_02.log | 29_02.log | 35.0 |

| dbfs:/datasets/streamingFiles/29_04.log | 29_04.log | 35.0 |

| dbfs:/datasets/streamingFiles/29_06.log | 29_06.log | 35.0 |

| dbfs:/datasets/streamingFiles/29_08.log | 29_08.log | 35.0 |

| dbfs:/datasets/streamingFiles/29_10.log | 29_10.log | 35.0 |

| dbfs:/datasets/streamingFiles/29_12.log | 29_12.log | 35.0 |

| dbfs:/datasets/streamingFiles/29_14.log | 29_14.log | 35.0 |

| dbfs:/datasets/streamingFiles/29_16.log | 29_16.log | 35.0 |

| dbfs:/datasets/streamingFiles/29_28.log | 29_28.log | 35.0 |

```scala
dbutils.fs.head("/datasets/streamingFiles/20_16.log")
```

```
res1: String =
"2020-11-20 13:20:16+00:00; cat pig
"
```

Next, let’s create a streaming DataFrame that represents text data received from the directory, and transform the DataFrame to calculate word counts.

```scala
import org.apache.spark.sql.types._ // only needed for the optional custom schema below

// Create DataFrame representing the stream of input lines from files in distributed file store
//val textFileSchema = new StructType().add("line", "string") // for a custom schema

val streamingLines = spark
  .readStream
  //.schema(textFileSchema) // using default -> makes a column of String named value
  .option("maxFilesPerTrigger", 1) // maximum number of new files to be considered in every trigger (default: no max)
  .format("text")
  .load("/datasets/streamingFiles")
```

```
import org.apache.spark.sql.types._
streamingLines: org.apache.spark.sql.DataFrame = [value: string]
```

This streamingLines DataFrame represents an unbounded table containing the streaming text data. The table contains one column of strings named “value”, and each line in the streaming text data becomes a row in the table. Note that it is not receiving any data yet: we have only set up the transformation and have not started it.
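One way to confirm that the DataFrame is only a description of a stream, and that nothing has been read yet, is to inspect it without starting a query. The sketch below assumes an active Spark session (notebook or spark-shell) and that `/datasets/streamingFiles` exists; the name `lines` is used only to avoid clashing with the DataFrame defined above.

```scala
import org.apache.spark.sql.SparkSession

// Assumption: an active session exists (notebook or spark-shell); getOrCreate() reuses it.
val spark = SparkSession.builder().getOrCreate()

// Defining the stream is lazy: no files are read until a query is started.
val lines = spark.readStream
  .format("text")
  .option("maxFilesPerTrigger", 1)
  .load("/datasets/streamingFiles")

println(lines.isStreaming) // true: the DataFrame is backed by a streaming source
lines.printSchema()        // a single string column named "value"
```

Both calls inspect only the logical plan, so they return immediately regardless of how many files are in the directory.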

```scala
display(streamingLines) // display will show you the contents of the DF
```

| value |

|---|

| 2020-11-20 13:26:26+00:00; bat owl |

| 2020-11-20 13:28:23+00:00; owl cat |

| 2020-11-20 13:29:31+00:00; dog rat |

| 2020-11-20 13:30:19+00:00; cat dog |

| 2020-11-20 13:28:01+00:00; bat cat |

| 2020-11-20 13:30:11+00:00; cat rat |

| 2020-11-20 13:22:07+00:00; cat dog |

| 2020-11-20 13:22:48+00:00; bat rat |

| 2020-11-20 13:27:22+00:00; owl bat |

| 2020-11-20 13:29:15+00:00; dog cat |

| 2020-11-20 13:29:47+00:00; dog cat |

| 2020-11-20 13:30:09+00:00; cat owl |

| 2020-11-20 13:21:45+00:00; bat rat |

| 2020-11-20 13:22:30+00:00; rat pig |

| 2020-11-20 13:22:42+00:00; bat cat |

| 2020-11-20 13:24:50+00:00; dog bat |

| 2020-11-20 13:25:14+00:00; bat rat |

| 2020-11-20 13:21:25+00:00; dog owl |

| 2020-11-20 13:22:17+00:00; rat owl |

| 2020-11-20 13:27:57+00:00; cat pig |

| 2020-11-20 13:28:19+00:00; pig bat |

| 2020-11-20 13:29:13+00:00; cat pig |

| 2020-11-20 13:30:07+00:00; pig dog |

| 2020-11-20 13:26:04+00:00; dog bat |

| 2020-11-20 13:26:58+00:00; rat bat |

| 2020-11-20 13:29:49+00:00; dog pig |

| 2020-11-20 13:24:22+00:00; cat owl |

| 2020-11-20 13:29:35+00:00; dog owl |

| 2020-11-20 13:30:01+00:00; owl rat |

| 2020-11-20 13:22:32+00:00; dog rat |

| 2020-11-20 13:24:12+00:00; bat dog |

| 2020-11-20 13:25:26+00:00; owl dog |

| 2020-11-20 13:28:13+00:00; owl dog |

| 2020-11-20 13:23:12+00:00; pig owl |

| 2020-11-20 13:24:00+00:00; owl dog |

| 2020-11-20 13:25:22+00:00; bat pig |

| 2020-11-20 13:22:34+00:00; bat dog |

| 2020-11-20 13:25:36+00:00; owl bat |

| 2020-11-20 13:23:04+00:00; owl bat |

| 2020-11-20 13:22:54+00:00; cat dog |

| 2020-11-20 13:23:30+00:00; owl dog |

| 2020-11-20 13:24:58+00:00; pig bat |

| 2020-11-20 13:22:01+00:00; dog rat |

| 2020-11-20 13:23:16+00:00; pig cat |

| 2020-11-20 13:20:53+00:00; pig cat |

| 2020-11-20 13:21:21+00:00; pig owl |

| 2020-11-20 13:24:30+00:00; owl dog |

| 2020-11-20 13:29:07+00:00; rat pig |

| 2020-11-20 13:21:39+00:00; rat cat |

| 2020-11-20 13:27:59+00:00; bat rat |

| 2020-11-20 13:26:42+00:00; bat pig |

| 2020-11-20 13:29:09+00:00; pig cat |

| 2020-11-20 13:25:08+00:00; rat bat |

| 2020-11-20 13:22:58+00:00; cat pig |

| 2020-11-20 13:29:23+00:00; rat pig |

| 2020-11-20 13:21:27+00:00; cat dog |

| 2020-11-20 13:22:50+00:00; rat cat |

| 2020-11-20 13:25:42+00:00; bat owl |

| 2020-11-20 13:28:21+00:00; cat rat |

| 2020-11-20 13:22:52+00:00; dog rat |

| 2020-11-20 13:22:15+00:00; pig owl |

| 2020-11-20 13:22:46+00:00; pig rat |

| 2020-11-20 13:27:32+00:00; pig cat |

| 2020-11-20 13:21:13+00:00; owl cat |

| 2020-11-20 13:20:49+00:00; owl dog |

| 2020-11-20 13:21:37+00:00; pig bat |

| 2020-11-20 13:24:56+00:00; owl bat |

| 2020-11-20 13:25:10+00:00; bat dog |

| 2020-11-20 13:21:07+00:00; dog pig |

| 2020-11-20 13:26:36+00:00; dog bat |

| 2020-11-20 13:27:24+00:00; pig rat |

| 2020-11-20 13:26:24+00:00; pig cat |

| 2020-11-20 13:21:55+00:00; owl pig |

| 2020-11-20 13:20:55+00:00; rat pig |

| 2020-11-20 13:22:26+00:00; bat dog |

| 2020-11-20 13:22:25+00:00; owl dog |

| 2020-11-20 13:24:38+00:00; bat rat |

| 2020-11-20 13:21:19+00:00; pig cat |

| 2020-11-20 13:23:52+00:00; bat pig |

| 2020-11-20 13:21:03+00:00; cat rat |

| 2020-11-20 13:20:57+00:00; bat dog |

| 2020-11-20 13:22:09+00:00; bat rat |

| 2020-11-20 13:21:05+00:00; owl bat |

| 2020-11-20 13:21:23+00:00; dog pig |

| 2020-11-20 13:21:11+00:00; cat owl |

| 2020-11-20 13:31:29+00:00; owl dog |

| 2020-11-20 13:31:31+00:00; dog owl |

| 2020-11-20 13:31:41+00:00; dog owl |

| 2020-11-20 13:28:35+00:00; rat pig |

| 2020-11-20 13:28:09+00:00; rat dog |

| 2020-11-20 13:28:55+00:00; pig rat |

| 2020-11-20 13:29:51+00:00; owl pig |

| 2020-11-20 13:27:10+00:00; pig cat |

| 2020-11-20 13:27:36+00:00; pig owl |

| 2020-11-20 13:31:03+00:00; owl pig |

| 2020-11-20 13:25:46+00:00; rat bat |

| 2020-11-20 13:28:17+00:00; rat cat |

| 2020-11-20 13:28:53+00:00; bat pig |

| 2020-11-20 13:21:47+00:00; owl rat |

| 2020-11-20 13:27:16+00:00; cat bat |

| 2020-11-20 13:21:49+00:00; cat bat |

| 2020-11-20 13:23:44+00:00; cat bat |

| 2020-11-20 13:22:05+00:00; owl cat |

| 2020-11-20 13:26:28+00:00; dog pig |

| 2020-11-20 13:24:46+00:00; cat owl |

| 2020-11-20 13:30:27+00:00; cat dog |

| 2020-11-20 13:27:53+00:00; owl pig |

| 2020-11-20 13:23:10+00:00; cat pig |

| 2020-11-20 13:24:16+00:00; pig rat |

| 2020-11-20 13:23:22+00:00; pig rat |

| 2020-11-20 13:23:34+00:00; pig rat |

| 2020-11-20 13:24:42+00:00; pig owl |

| 2020-11-20 13:22:28+00:00; pig dog |

| 2020-11-20 13:20:47+00:00; bat owl |

| 2020-11-20 13:25:18+00:00; pig bat |

| 2020-11-20 13:25:28+00:00; pig rat |

| 2020-11-20 13:23:56+00:00; owl pig |

| 2020-11-20 13:25:30+00:00; bat cat |

| 2020-11-20 13:32:23+00:00; rat pig |

| 2020-11-20 13:32:37+00:00; rat owl |

| 2020-11-20 13:32:29+00:00; cat dog |

| 2020-11-20 13:32:41+00:00; cat rat |

| 2020-11-20 13:33:02+00:00; rat dog |

| 2020-11-20 13:33:00+00:00; dog owl |

| 2020-11-20 13:31:59+00:00; pig cat |

| 2020-11-20 13:21:09+00:00; cat owl |

| 2020-11-20 13:24:24+00:00; pig cat |

| 2020-11-20 13:23:46+00:00; bat owl |

| 2020-11-20 13:31:43+00:00; dog rat |

| 2020-11-20 13:21:53+00:00; owl dog |

| 2020-11-20 13:32:27+00:00; pig bat |

| 2020-11-20 13:22:38+00:00; cat pig |

| 2020-11-20 13:20:59+00:00; rat dog |

| 2020-11-20 13:22:19+00:00; bat cat |

| 2020-11-20 13:31:15+00:00; bat owl |

| 2020-11-20 13:22:23+00:00; owl pig |

| 2020-11-20 13:32:25+00:00; rat bat |

| 2020-11-20 13:22:44+00:00; cat rat |

| 2020-11-20 13:32:57+00:00; cat dog |

| 2020-11-20 13:25:40+00:00; pig rat |

| 2020-11-20 13:30:45+00:00; pig bat |

| 2020-11-20 13:28:39+00:00; owl cat |

| 2020-11-20 13:30:47+00:00; pig owl |

| 2020-11-20 13:29:17+00:00; bat dog |

| 2020-11-20 13:26:12+00:00; cat owl |

| 2020-11-20 13:21:15+00:00; rat pig |

| 2020-11-20 13:22:11+00:00; pig rat |

| 2020-11-20 13:22:40+00:00; pig owl |

| 2020-11-20 13:21:01+00:00; owl pig |

| 2020-11-20 13:25:50+00:00; dog pig |

| 2020-11-20 13:23:06+00:00; dog rat |

| 2020-11-20 13:26:38+00:00; pig owl |

| 2020-11-20 13:23:14+00:00; cat pig |

| 2020-11-20 13:22:36+00:00; owl cat |

| 2020-11-20 13:22:56+00:00; dog rat |

| 2020-11-20 13:27:38+00:00; dog rat |

| 2020-11-20 13:21:57+00:00; cat rat |

| 2020-11-20 13:26:30+00:00; owl bat |

| 2020-11-20 13:26:06+00:00; rat bat |

| 2020-11-20 13:21:17+00:00; bat cat |

| 2020-11-20 13:21:29+00:00; pig cat |

| 2020-11-20 13:26:40+00:00; dog owl |

| 2020-11-20 13:27:42+00:00; cat owl |

| 2020-11-20 13:29:25+00:00; pig owl |

| 2020-11-20 13:21:35+00:00; pig owl |

| 2020-11-20 13:23:32+00:00; bat owl |

| 2020-11-20 13:23:40+00:00; rat bat |

| 2020-11-20 13:26:16+00:00; rat pig |

| 2020-11-20 13:28:29+00:00; owl pig |

| 2020-11-20 13:27:51+00:00; cat rat |

| 2020-11-20 13:30:13+00:00; owl pig |

| 2020-11-20 13:25:04+00:00; bat cat |

| 2020-11-20 13:24:08+00:00; rat bat |

| 2020-11-20 13:31:27+00:00; dog bat |

| 2020-11-20 13:26:56+00:00; pig bat |

| 2020-11-20 13:33:40+00:00; owl cat |

| 2020-11-20 13:33:48+00:00; owl bat |

| 2020-11-20 13:34:14+00:00; dog bat |

| 2020-11-20 13:33:30+00:00; bat pig |

| 2020-11-20 13:33:38+00:00; owl pig |

| 2020-11-20 13:33:24+00:00; cat owl |

| 2020-11-20 13:34:08+00:00; bat owl |

| 2020-11-20 13:33:10+00:00; dog pig |

| 2020-11-20 13:33:32+00:00; bat cat |

| 2020-11-20 13:33:44+00:00; bat rat |

| 2020-11-20 13:30:03+00:00; bat rat |

| 2020-11-20 13:30:31+00:00; cat owl |

| 2020-11-20 13:32:33+00:00; cat pig |

| 2020-11-20 13:24:40+00:00; pig cat |

| 2020-11-20 13:26:22+00:00; owl bat |

| 2020-11-20 13:28:05+00:00; cat bat |

| 2020-11-20 13:31:11+00:00; owl cat |

| 2020-11-20 13:23:58+00:00; owl bat |

| 2020-11-20 13:25:06+00:00; cat pig |

| 2020-11-20 13:31:23+00:00; rat cat |

| 2020-11-20 13:23:48+00:00; dog rat |

| 2020-11-20 13:26:52+00:00; owl bat |

| 2020-11-20 13:23:36+00:00; owl rat |

| 2020-11-20 13:21:51+00:00; rat pig |

| 2020-11-20 13:21:43+00:00; bat owl |

| 2020-11-20 13:32:49+00:00; owl bat |

| 2020-11-20 13:23:20+00:00; rat pig |

| 2020-11-20 13:30:53+00:00; bat owl |

| 2020-11-20 13:26:46+00:00; bat cat |

| 2020-11-20 13:21:31+00:00; cat bat |

| 2020-11-20 13:28:43+00:00; rat pig |

| 2020-11-20 13:30:29+00:00; owl cat |

| 2020-11-20 13:33:22+00:00; dog pig |

| 2020-11-20 13:31:21+00:00; bat owl |

| 2020-11-20 13:32:39+00:00; pig dog |

| 2020-11-20 13:21:41+00:00; pig dog |

| 2020-11-20 13:26:10+00:00; bat rat |

| 2020-11-20 13:27:00+00:00; pig owl |

| 2020-11-20 13:22:03+00:00; cat dog |

| 2020-11-20 13:22:21+00:00; dog pig |

| 2020-11-20 13:30:05+00:00; bat dog |

| 2020-11-20 13:25:02+00:00; cat bat |

| 2020-11-20 13:24:02+00:00; pig bat |

| 2020-11-20 13:27:18+00:00; cat pig |

| 2020-11-20 13:23:08+00:00; cat bat |

| 2020-11-20 13:28:03+00:00; pig cat |

| 2020-11-20 13:31:05+00:00; dog pig |

| 2020-11-20 13:26:18+00:00; owl rat |

| 2020-11-20 13:23:42+00:00; cat dog |

| 2020-11-20 13:23:24+00:00; bat owl |

| 2020-11-20 13:24:14+00:00; dog rat |

| 2020-11-20 13:25:24+00:00; dog bat |

| 2020-11-20 13:27:40+00:00; dog rat |

| 2020-11-20 13:23:50+00:00; cat pig |

| 2020-11-20 13:27:48+00:00; rat cat |

| 2020-11-20 13:30:25+00:00; dog bat |

| 2020-11-20 13:30:21+00:00; dog bat |

| 2020-11-20 13:30:35+00:00; cat owl |

| 2020-11-20 13:25:38+00:00; owl rat |

| 2020-11-20 13:31:01+00:00; owl pig |

| 2020-11-20 13:21:33+00:00; rat bat |

| 2020-11-20 13:28:59+00:00; owl cat |

| 2020-11-20 13:33:16+00:00; cat owl |

| 2020-11-20 13:35:36+00:00; dog bat |

| 2020-11-20 13:34:36+00:00; rat pig |

| 2020-11-20 13:34:34+00:00; pig dog |

| 2020-11-20 13:35:26+00:00; owl rat |

| 2020-11-20 13:34:58+00:00; pig bat |

| 2020-11-20 13:35:24+00:00; cat bat |

| 2020-11-20 13:35:22+00:00; dog pig |

| 2020-11-20 13:35:44+00:00; dog pig |

| 2020-11-20 13:34:26+00:00; cat rat |

| 2020-11-20 13:35:18+00:00; bat dog |

| 2020-11-20 13:29:43+00:00; owl cat |

| 2020-11-20 13:31:39+00:00; bat rat |

| 2020-11-20 13:25:48+00:00; bat pig |

| 2020-11-20 13:27:08+00:00; cat rat |

| 2020-11-20 13:28:47+00:00; pig bat |

| 2020-11-20 13:29:03+00:00; bat rat |

| 2020-11-20 13:31:55+00:00; bat cat |

| 2020-11-20 13:32:43+00:00; cat dog |

| 2020-11-20 13:35:34+00:00; cat bat |

| 2020-11-20 13:24:52+00:00; cat pig |

| 2020-11-20 13:28:15+00:00; cat rat |

| 2020-11-20 13:30:37+00:00; bat cat |

| 2020-11-20 13:24:10+00:00; owl pig |

| 2020-11-20 13:25:34+00:00; pig rat |

| 2020-11-20 13:31:51+00:00; dog owl |

| 2020-11-20 13:29:39+00:00; rat dog |

| 2020-11-20 13:24:32+00:00; bat owl |

Next, we convert the DataFrame to a Dataset of String using .as[String], so that we can first cut out the timestamp with map and then apply the flatMap operation to split each line into multiple words. The resultant words Dataset contains all the words.

val words = streamingLines.as[String]

.map(line => line.split(";").drop(1)(0)) // this is to simply cut out the timestamp from this stream

.flatMap(_.split(" ")) // flat map by splitting the animal words separated by whitespace

.filter( _ != "") // remove empty words that may be artifacts of opening whitespace

words: org.apache.spark.sql.Dataset[String] = [value: string]
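To see what each step of this pipeline does to a single record, the same three operations can be tried on an ordinary Scala collection. This is only a sketch: `sampleLines` and `sampleWords` are illustrative names, and the two lines are copied from the displayed stream above.

```scala
// Plain-Scala sketch of the per-line logic (no Spark needed).
// `sampleLines` holds two records copied from the displayed stream.
val sampleLines = Seq(
  "2020-11-20 13:26:26+00:00; bat owl",
  "2020-11-20 13:28:23+00:00; owl cat"
)

val sampleWords = sampleLines
  .map(line => line.split(";").drop(1)(0)) // keep only the text after the timestamp
  .flatMap(_.split(" "))                   // split on single spaces
  .filter(_ != "")                         // the leading space produces an empty token

println(sampleWords) // List(bat, owl, owl, cat)
```

The empty-string filter matters because the text after the semicolon starts with a space, so the first token of every split is empty.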

Finally, we define the wordCounts DataFrame by grouping by the unique values in the Dataset and counting them. Note that this is a streaming DataFrame which represents the running word counts of the stream.

// Generate running word count

val wordCounts = words

.groupBy("value").count() // this does the word count

.orderBy($"count".desc) // we are simply sorting by the most frequent words

wordCounts: org.apache.spark.sql.Dataset[org.apache.spark.sql.Row] = [value: string, count: bigint]

We have now set up the query on the streaming data. All that is left is to actually start receiving data and computing the counts. To do this, we set the query up to print the complete set of counts (specified by outputMode("complete")) to the console every time they are updated, and then start the streaming computation using start().

// Start running the query that prints the running counts to the console

val query = wordCounts.writeStream

.outputMode("complete")

.format("console")

.start()

query.awaitTermination() // hit cancel to terminate - killall the bash script in 037a_AnimalNamesStructStreamingFiles

-------------------------------------------

Batch: 0

-------------------------------------------

+-----+-----+

|value|count|

+-----+-----+

| cat| 1|

| owl| 1|

+-----+-----+

-------------------------------------------

Batch: 1

-------------------------------------------

+-----+-----+

|value|count|

+-----+-----+

| cat| 2|

| pig| 1|

| owl| 1|

+-----+-----+

-------------------------------------------

Batch: 2

-------------------------------------------

+-----+-----+

|value|count|

+-----+-----+

| cat| 2|

| pig| 2|

| owl| 1|

| dog| 1|

+-----+-----+

-------------------------------------------

Batch: 3

-------------------------------------------

+-----+-----+

|value|count|

+-----+-----+

| cat| 3|

| dog| 2|

| pig| 2|

| owl| 1|

+-----+-----+

-------------------------------------------

Batch: 4

-------------------------------------------

+-----+-----+

|value|count|

+-----+-----+

| cat| 4|

| pig| 3|

| dog| 2|

| owl| 1|

+-----+-----+

-------------------------------------------

Batch: 5

-------------------------------------------

+-----+-----+

|value|count|

+-----+-----+

| pig| 4|

| cat| 4|

| dog| 2|

| owl| 1|

| rat| 1|

+-----+-----+

-------------------------------------------

Batch: 6

-------------------------------------------

+-----+-----+

|value|count|

+-----+-----+

| pig| 5|

| cat| 4|

| dog| 2|

| owl| 2|

| rat| 1|

+-----+-----+

-------------------------------------------

Batch: 7

-------------------------------------------

+-----+-----+

|value|count|

+-----+-----+

| pig| 5|

| cat| 4|

| owl| 3|

| dog| 2|

| rat| 1|

| bat| 1|

+-----+-----+

-------------------------------------------

Batch: 8

-------------------------------------------

+-----+-----+

|value|count|

+-----+-----+

| pig| 5|

| cat| 4|

| owl| 4|

| dog| 2|

| bat| 2|

| rat| 1|

+-----+-----+

-------------------------------------------

Batch: 9

-------------------------------------------

+-----+-----+

|value|count|

+-----+-----+

| pig| 5|

| owl| 5|

| cat| 4|

| bat| 3|

| dog| 2|

| rat| 1|

+-----+-----+

-------------------------------------------

Batch: 10

-------------------------------------------

+-----+-----+

|value|count|

+-----+-----+

| owl| 5|

| pig| 5|

| bat| 4|

| cat| 4|

| dog| 2|

| rat| 2|

+-----+-----+

After this code is executed, the streaming computation will have started in the background. The query object is a handle to that active streaming query, and we have decided to wait for the termination of the query using awaitTermination() to prevent the process from exiting while the query is active.
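Because the query object is a handle, it can also be used to inspect and stop the query rather than block forever. The following is a minimal sketch: `runFor` is a hypothetical helper (not part of Spark) that relies only on the `status`, `awaitTermination(timeoutMs)` and `stop()` methods of `StreamingQuery`.

```scala
import org.apache.spark.sql.streaming.StreamingQuery

// Hypothetical helper (not part of Spark): let a query run for at most
// `timeoutMs` milliseconds, then stop it if it is still active.
def runFor(query: StreamingQuery, timeoutMs: Long): Unit = {
  println(query.status)                              // current status of the query
  val terminated = query.awaitTermination(timeoutMs) // true if it ended within the timeout
  if (!terminated) query.stop()                      // stop the background execution
}
```

For instance, `runFor(query, 60000L)` could replace the blocking `query.awaitTermination()` call when you only want to watch the counts for about a minute.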

Handling Event-time and Late Data

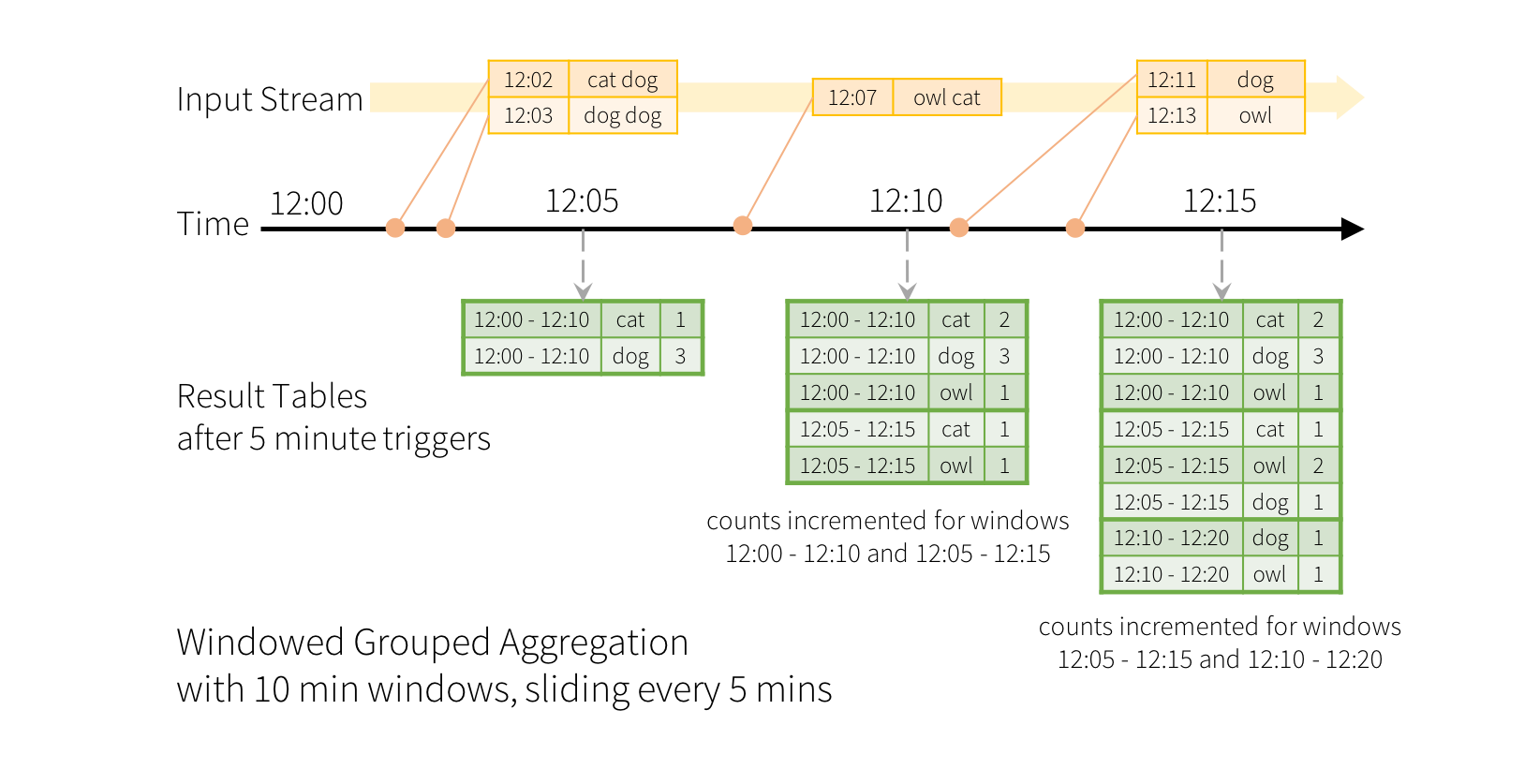

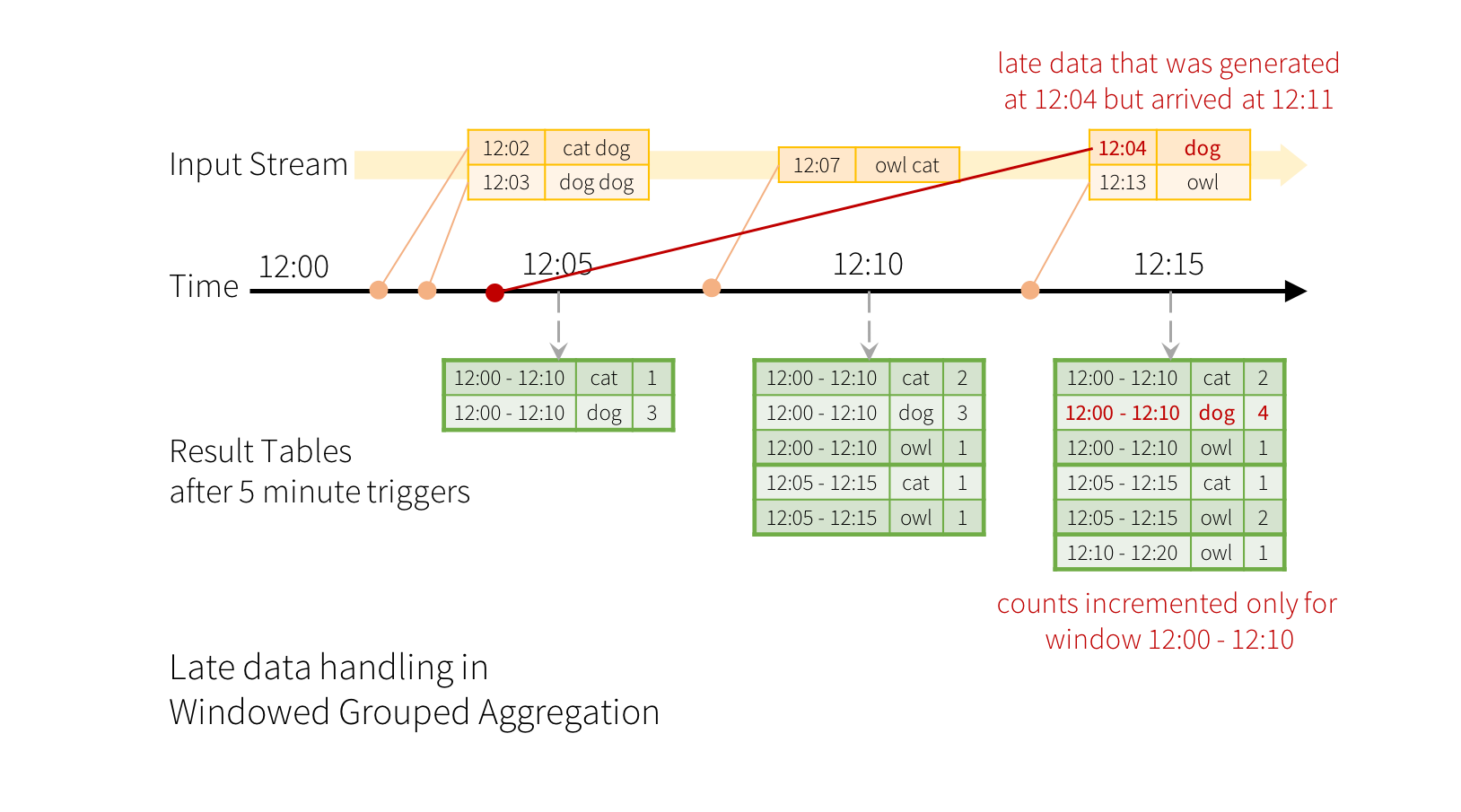

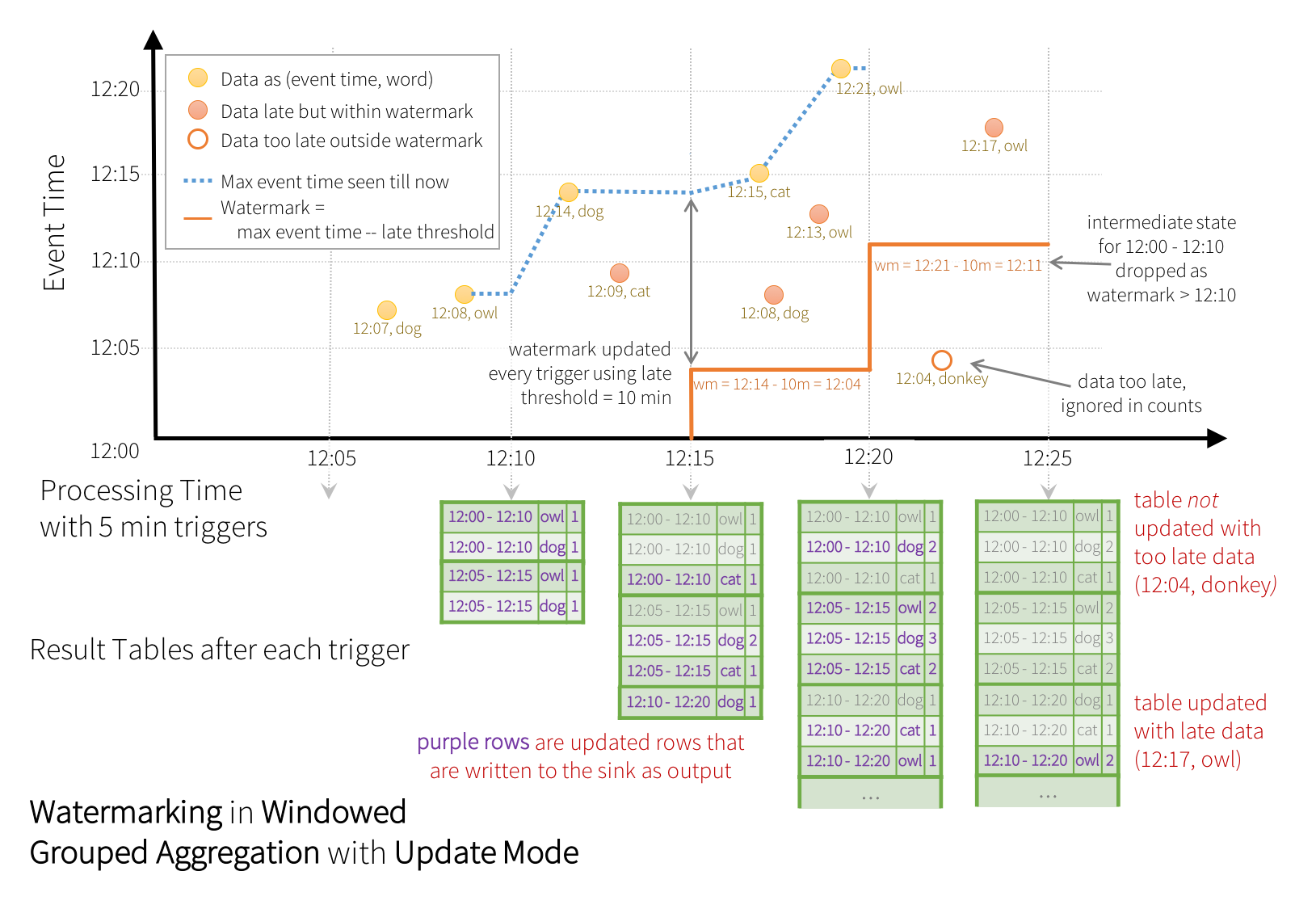

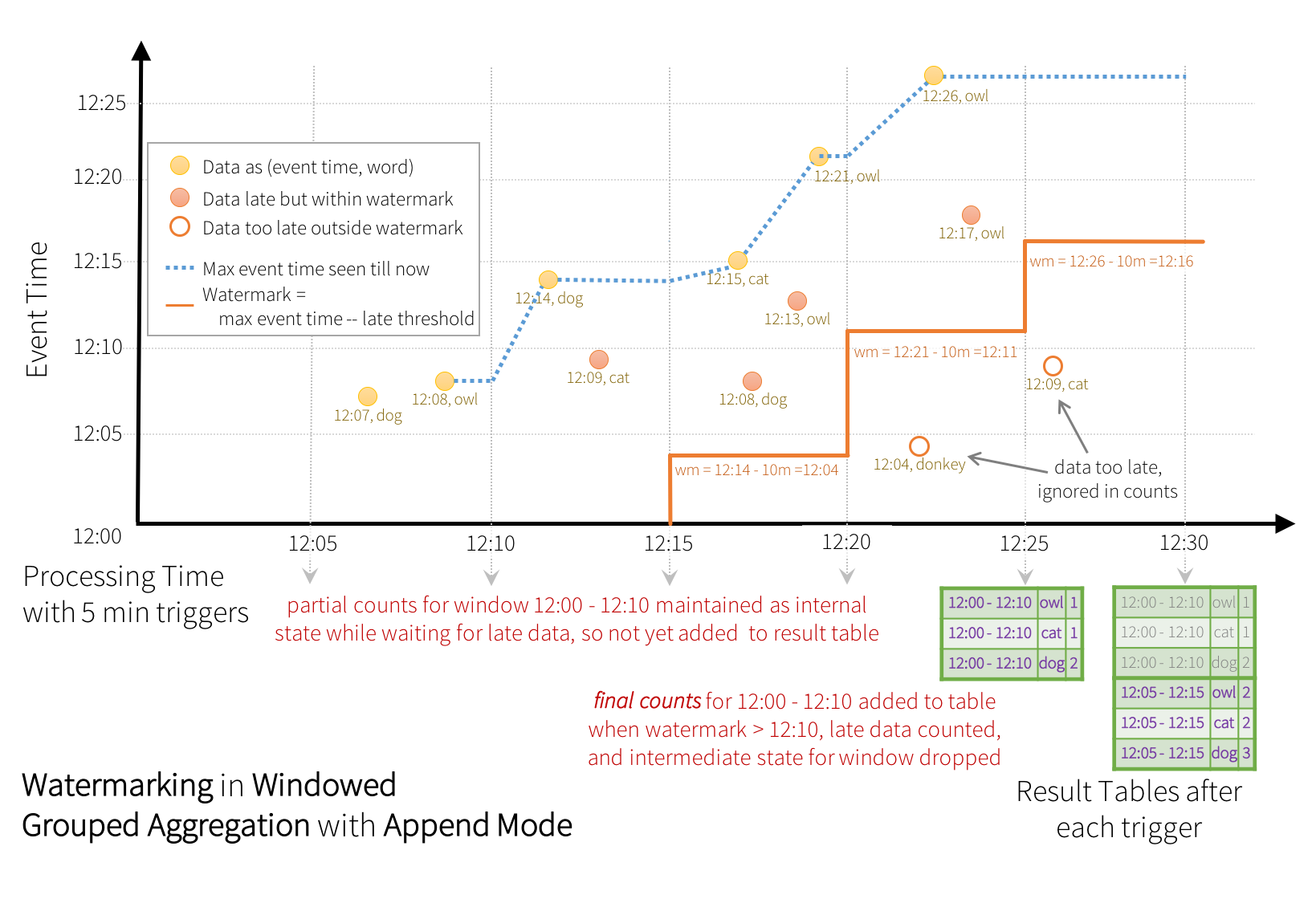

Event-time is the time embedded in the data itself. For many applications, you may want to operate on this event-time. For example, if you want to get the number of events generated by IoT devices every minute, then you probably want to use the time when the data was generated (that is, event-time in the data), rather than the time Spark receives them. This event-time is very naturally expressed in this model – each event from the devices is a row in the table, and event-time is a column value in the row. This allows window-based aggregations (e.g. number of events every minute) to be just a special type of grouping and aggregation on the event-time column – each time window is a group and each row can belong to multiple windows/groups. Therefore, such event-time-window-based aggregation queries can be defined consistently on both a static dataset (e.g. from collected device events logs) as well as on a data stream, making the life of the user much easier.
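As a sketch of this idea, the function below groups rows by a sliding event-time window using Spark's `window` function. The column name "time" and the window sizes are assumptions chosen for illustration. Because the function only takes a DataFrame, it can be applied unchanged to a static or a streaming DataFrame.

```scala
import org.apache.spark.sql.DataFrame
import org.apache.spark.sql.functions.{col, window}

// Count events per 1-minute event-time window, sliding every 30 seconds.
// Assumes `events` has a timestamp column named "time" (illustrative name).
// With a slide shorter than the window, each row falls into two windows.
def countsPerWindow(events: DataFrame): DataFrame =
  events
    .groupBy(window(col("time"), "1 minute", "30 seconds"))
    .count()
```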

Furthermore, this model naturally handles data that has arrived later than expected based on its event-time. Since Spark is updating the Result Table, it has full control over updating old aggregates when there is late data, as well as cleaning up old aggregates to limit the size of intermediate state data. Since Spark 2.1, we have support for watermarking, which allows the user to specify the threshold of late data and allows the engine to clean up old state accordingly. These are explained in more detail in the Window Operations section below.
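A watermark is declared on the event-time column before the aggregation. The sketch below assumes a timestamp column named "time" and a lateness threshold of 10 minutes. Both are illustrative choices, not values taken from this guide.

```scala
import org.apache.spark.sql.DataFrame
import org.apache.spark.sql.functions.{col, window}

// Windowed counts with a watermark on the (assumed) "time" column.
// The "10 minutes" threshold is an illustrative choice: it tells the engine
// how late data may arrive before old window state can be cleaned up.
def countsWithWatermark(events: DataFrame): DataFrame =
  events
    .withWatermark("time", "10 minutes")
    .groupBy(window(col("time"), "1 minute"))
    .count()
```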

Fault Tolerance Semantics

Delivering end-to-end exactly-once semantics was one of the key goals behind the design of Structured Streaming. To achieve that, we have designed the Structured Streaming sources, the sinks and the execution engine to reliably track the exact progress of the processing so that it can handle any kind of failure by restarting and/or reprocessing. Every streaming source is assumed to have offsets (similar to Kafka offsets, or Kinesis sequence numbers) to track the read position in the stream. The engine uses checkpointing and write-ahead logs to record the offset range of the data being processed in each trigger. The streaming sinks are designed to be idempotent for handling reprocessing. Together, using replayable sources and idempotent sinks, Structured Streaming can ensure end-to-end exactly-once semantics under any failure.
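The interplay of replayable sources and idempotent sinks can be illustrated with a toy model in plain Scala. This is not how Spark implements it: `source`, `sink` and `processBatch` are made-up names, and the "offsets" are simply indices into a vector.

```scala
import scala.collection.mutable

// Toy model: a replayable source (addressed by offsets) and an
// idempotent sink (keyed by batch id). All names are illustrative.
val source = Vector("cat", "dog", "owl", "pig")
val sink = mutable.Map.empty[Long, Seq[String]]

// Writing a batch overwrites whatever was stored under the same batch id,
// so reprocessing the same offset range does not duplicate output.
def processBatch(batchId: Long, from: Int, until: Int): Unit =
  sink(batchId) = source.slice(from, until)

processBatch(0L, 0, 2)
processBatch(1L, 2, 4)
processBatch(1L, 2, 4) // simulated retry of batch 1 after a failure

val output = sink.toSeq.sortBy(_._1).flatMap(_._2)
println(output.mkString(", ")) // cat, dog, owl, pig
```

Each record appears exactly once in the output even though batch 1 was processed twice, because the recorded offset range makes the retry read the same data and the sink write is keyed by batch.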

API using Datasets and DataFrames

Since Spark 2.0, DataFrames and Datasets can represent static, bounded data, as well as streaming, unbounded data. Similar to static Datasets/DataFrames, you can use the common entry point SparkSession (Scala/Java/Python/R docs) to create streaming DataFrames/Datasets from streaming sources, and apply the same operations on them as static DataFrames/Datasets. If you are not familiar with Datasets/DataFrames, you are strongly advised to familiarize yourself with them using the DataFrame/Dataset Programming Guide.

Creating streaming DataFrames and streaming Datasets

Streaming DataFrames can be created through the DataStreamReader interface (Scala/Java/Python docs) returned by SparkSession.readStream(). In R, use the read.stream() method. Similar to the read interface for creating a static DataFrame, you can specify the details of the source – data format, schema, options, etc.

Input Sources

In Spark 2.0, there are a few built-in sources.

- File source - Reads files written in a directory as a stream of data. Supported file formats are text, csv, json, parquet. See the docs of the DataStreamReader interface for a more up-to-date list, and supported options for each file format. Note that the files must be atomically placed in the given directory, which in most file systems, can be achieved by file move operations.
- Kafka source - Poll data from Kafka. It’s compatible with Kafka broker versions 0.10.0 or higher. See the Kafka Integration Guide for more details.
- Socket source (for testing) - Reads UTF8 text data from a socket connection. The listening server socket is at the driver. Note that this should be used only for testing as this does not provide end-to-end fault-tolerance guarantees.

Some sources are not fault-tolerant because they do not guarantee that data can be replayed using checkpointed offsets after a failure. See the earlier section on fault-tolerance semantics. Here are the details of all the sources in Spark.

| Source | Options | Fault-tolerant | Notes |
|---|---|---|---|
| File source | `path`: path to the input directory, common to all file formats.<br>`maxFilesPerTrigger`: maximum number of new files to be considered in every trigger (default: no max).<br>`latestFirst`: whether to process the latest new files first, useful when there is a large backlog of files (default: false).<br>`fileNameOnly`: whether to check new files based on only the filename instead of on the full path (default: false). With this set to `true`, the following files would be considered as the same file, because their filenames, "dataset.txt", are the same: "file:///dataset.txt", "s3://a/dataset.txt", "s3n://a/b/dataset.txt", "s3a://a/b/c/dataset.txt". | Yes | |
| Socket Source | `host`: host to connect to, must be specified.<br>`port`: port to connect to, must be specified. | No | |
| Kafka Source | See the Kafka Integration Guide. | Yes | |

See https://spark.apache.org/docs/2.2.0/structured-streaming-programming-guide.html#input-sources.

Schema inference and partition of streaming DataFrames/Datasets

By default, Structured Streaming from file-based sources requires you to specify the schema, rather than rely on Spark to infer it automatically (this is the purpose of the userSchema defined in the mixture example in this guide). This restriction ensures a consistent schema will be used for the streaming query, even in the case of failures. For ad-hoc use cases, you can re-enable schema inference by setting spark.sql.streaming.schemaInference to true.
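For an ad-hoc session, the setting can be changed at runtime through the session configuration. A minimal sketch follows. The helper name `enableSchemaInference` is made up for illustration, and in a notebook you would normally pass the predefined `spark` session.

```scala
import org.apache.spark.sql.SparkSession

// Hypothetical helper: turn on schema inference for file-based streaming
// sources in the given session. Intended for ad-hoc exploration only.
def enableSchemaInference(session: SparkSession): Unit =
  session.conf.set("spark.sql.streaming.schemaInference", "true")
```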

Partition discovery does occur when subdirectories that are named /key=value/ are present and listing will automatically recurse into these directories. If these columns appear in the user provided schema, they will be filled in by Spark based on the path of the file being read. The directories that make up the partitioning scheme must be present when the query starts and must remain static. For example, it is okay to add /data/year=2016/ when /data/year=2015/ was present, but it is invalid to change the partitioning column (i.e. by creating the directory /data/date=2016-04-17/).
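The rule about partitioning columns can be made concrete with a toy parser that extracts key=value segments from a file path. This is only an illustration of the naming scheme, not Spark's partition discovery code. `partitionValues` and the file name part-0.csv are made up for the example.

```scala
// Toy illustration: collect the key=value directory segments of a path.
// `partitionValues` is a hypothetical helper, not a Spark API.
def partitionValues(path: String): Map[String, String] =
  path.split("/")
    .filter(_.contains("="))
    .map { segment =>
      val Array(key, value) = segment.split("=", 2)
      key -> value
    }
    .toMap

val existing = partitionValues("/data/year=2015/part-0.csv")        // Map(year -> 2015)
val sameScheme = partitionValues("/data/year=2016/part-0.csv")      // Map(year -> 2016)
val otherScheme = partitionValues("/data/date=2016-04-17/part-0.csv") // Map(date -> 2016-04-17)

// Adding a new value for the same column keeps the scheme unchanged;
// introducing a different column (date instead of year) changes it.
println(existing.keySet == sameScheme.keySet)  // true
println(existing.keySet == otherScheme.keySet) // false
```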

Operations on streaming DataFrames/Datasets

You can apply all kinds of operations on streaming DataFrames/Datasets – ranging from untyped, SQL-like operations (e.g. select, where, groupBy), to typed RDD-like operations (e.g. map, filter, flatMap). See the SQL programming guide for more details. Let’s take a look at a few example operations that you can use.

Basic Operations - Selection, Projection, Aggregation

Most of the common operations on DataFrame/Dataset are supported for streaming. The few operations that are not supported are discussed later in the Unsupported Operations section.

case class DeviceData(device: String, deviceType: String, signal: Double, time: java.sql.Timestamp) // java.sql.Timestamp replaces the undefined DateTime type

val df: DataFrame = ... // streaming DataFrame with IoT device data with schema { device: string, deviceType: string, signal: double, time: timestamp }

val ds: Dataset[DeviceData] = df.as[DeviceData] // streaming Dataset with IoT device data

// Select the devices which have signal more than 10

df.select("device").where("signal > 10") // using untyped APIs

ds.filter(_.signal > 10).map(_.device) // using typed APIs

// Running count of the number of updates for each device type

df.groupBy("deviceType").count() // using untyped API

// Running average signal for each device type

import org.apache.spark.sql.expressions.scalalang.typed

ds.groupByKey(_.deviceType).agg(typed.avg(_.signal)) // using typed API

A Quick Mixture Example

We will work below with a file stream that simulates random animal names or a simple mixture of two Normal Random Variables.

The two file streams can be generated by running the code in the following two Databricks notebooks on the same cluster:

- 037a_AnimalNamesStructStreamingFiles
- 037b_Mix2NormalsStructStreamingFiles

You should then have a set of directories containing CSV files like the following (the exact names depend on when you start the stream of files).

display(dbutils.fs.ls("/datasets/streamingFilesNormalMixture/"))

| path | name | size |

|---|---|---|

| dbfs:/datasets/streamingFilesNormalMixture/48_11/ | 48_11/ | 0.0 |

| dbfs:/datasets/streamingFilesNormalMixture/48_19/ | 48_19/ | 0.0 |

| dbfs:/datasets/streamingFilesNormalMixture/48_26/ | 48_26/ | 0.0 |

| dbfs:/datasets/streamingFilesNormalMixture/48_36/ | 48_36/ | 0.0 |

| dbfs:/datasets/streamingFilesNormalMixture/48_43/ | 48_43/ | 0.0 |

Static and Streaming DataFrames

Let's check out the files and their contents both via static as well as streaming DataFrames.

This will also cement the fact that structured streaming allows interoperability between static and streaming data and can be useful for debugging.

val peekIn = spark.read.format("csv").load("/datasets/streamingFilesNormalMixture/*/*.csv")

peekIn.count() // total count of all the samples in all the files

peekIn: org.apache.spark.sql.DataFrame = [_c0: string, _c1: string]

res8: Long = 500

peekIn.show(5, false) // let's take a quick peek at what's in the CSV files

+-----------------------+--------------------+

|_c0 |_c1 |

+-----------------------+--------------------+

|2020-11-16 10:48:25.294|0.21791376679544772 |

|2020-11-16 10:48:25.299|0.011291967445604012|

|2020-11-16 10:48:25.304|-0.30293144696154806|

|2020-11-16 10:48:25.309|0.4303254534802833 |

|2020-11-16 10:48:25.314|1.5521304466388752 |

+-----------------------+--------------------+

only showing top 5 rows

// Read all the csv files written atomically from a directory

import org.apache.spark.sql.types._

//make a user-specified schema - this is needed for structured streaming from files

val userSchema = new StructType()

.add("time", "timestamp")

.add("score", "Double")

// a static DF is convenient

val csvStaticDF = spark

.read

.option("sep", ",") // delimiter is ','

.schema(userSchema) // Specify schema of the csv files as pre-defined by user

.csv("/datasets/streamingFilesNormalMixture/*/*.csv") // Equivalent to format("csv").load("/path/to/directory")

// streaming DF

val csvStreamingDF = spark

.readStream

.option("sep", ",") // delimiter is ','

.schema(userSchema) // Specify schema of the csv files as pre-defined by user

.option("MaxFilesPerTrigger", 1) // maximum number of new files to be considered in every trigger (default: no max)

.csv("/datasets/streamingFilesNormalMixture/*/*.csv") // Equivalent to format("csv").load("/path/to/directory")

import org.apache.spark.sql.types._

userSchema: org.apache.spark.sql.types.StructType = StructType(StructField(time,TimestampType,true), StructField(score,DoubleType,true))

csvStaticDF: org.apache.spark.sql.DataFrame = [time: timestamp, score: double]

csvStreamingDF: org.apache.spark.sql.DataFrame = [time: timestamp, score: double]

csvStreamingDF.isStreaming // Returns True for DataFrames that have streaming sources

res12: Boolean = true

csvStreamingDF.printSchema

root

|-- time: timestamp (nullable = true)

|-- score: double (nullable = true)

display(csvStreamingDF) // if you want to see the stream coming at you as csvDF

| time | score |

|---|---|

| 2020-11-16T10:48:11.194+0000 | 0.2576188264990721 |

| 2020-11-16T10:48:11.199+0000 | -0.13149698512045327 |

| 2020-11-16T10:48:11.204+0000 | 1.4139063973267458 |

| 2020-11-16T10:48:11.209+0000 | -2.3833875968513496e-2 |

| 2020-11-16T10:48:11.215+0000 | 0.7274784426774964 |

| 2020-11-16T10:48:11.220+0000 | -1.0658630481235276 |

| 2020-11-16T10:48:11.225+0000 | 0.746959841932221 |

| 2020-11-16T10:48:11.230+0000 | 0.30477096247050206 |

| 2020-11-16T10:48:11.235+0000 | -6.407620682061621e-2 |

| 2020-11-16T10:48:11.241+0000 | 1.8464307210258604 |

| 2020-11-16T10:48:11.246+0000 | 2.0786529531264355 |

| 2020-11-16T10:48:11.251+0000 | 0.685838993990332 |

| 2020-11-16T10:48:11.256+0000 | 2.3056211153362485 |

| 2020-11-16T10:48:11.261+0000 | -0.7435548094085835 |

| 2020-11-16T10:48:11.267+0000 | -0.36946067155650786 |

| 2020-11-16T10:48:11.272+0000 | 1.1178132434092503 |

| 2020-11-16T10:48:11.277+0000 | 1.0672400098827672 |

| 2020-11-16T10:48:11.282+0000 | 2.403799182291664 |

| 2020-11-16T10:48:11.287+0000 | 2.7905949803662926 |

| 2020-11-16T10:48:11.293+0000 | 2.3901047303648846 |

| 2020-11-16T10:48:11.298+0000 | 2.2391322699010967 |

| 2020-11-16T10:48:11.303+0000 | 0.7102559487906945 |

| 2020-11-16T10:48:11.308+0000 | -0.1875570296359037 |

| 2020-11-16T10:48:11.313+0000 | 2.0036998039560725 |

| 2020-11-16T10:48:11.318+0000 | 2.028162246705019 |

| 2020-11-16T10:48:11.324+0000 | -1.1084782237141253 |

| 2020-11-16T10:48:11.329+0000 | 2.7320985336302965 |

| 2020-11-16T10:48:11.334+0000 | 1.7953021498619885 |

| 2020-11-16T10:48:11.339+0000 | 1.3332433299615185 |

| 2020-11-16T10:48:11.344+0000 | 1.2842120504662247 |

| 2020-11-16T10:48:11.349+0000 | 2.0013530061962186 |

| 2020-11-16T10:48:11.355+0000 | 1.2596569236824775 |

| 2020-11-16T10:48:11.360+0000 | 2.46479668588018 |

| 2020-11-16T10:48:11.365+0000 | -0.7015927727061835 |

| 2020-11-16T10:48:11.370+0000 | -0.510611131534981 |

| 2020-11-16T10:48:11.375+0000 | 0.9403812557496112 |

| 2020-11-16T10:48:11.381+0000 | 2.2306482205877427 |

| 2020-11-16T10:48:11.386+0000 | -0.29781070820511246 |

| 2020-11-16T10:48:11.391+0000 | 4.107241990001628 |

| 2020-11-16T10:48:11.396+0000 | 0.7420568724108764 |

| 2020-11-16T10:48:11.401+0000 | 1.4652231673746594 |

| 2020-11-16T10:48:11.407+0000 | 0.8793849318247119 |

| 2020-11-16T10:48:11.412+0000 | 1.7671614106752898 |

| 2020-11-16T10:48:11.417+0000 | 1.1995772213743607 |

| 2020-11-16T10:48:11.422+0000 | 1.1351566745099897 |

| 2020-11-16T10:48:11.427+0000 | 0.16150528245701323 |

| 2020-11-16T10:48:11.432+0000 | 2.459849452657596 |

| 2020-11-16T10:48:11.438+0000 | 1.0796739450956971 |

| 2020-11-16T10:48:11.443+0000 | -1.2079899446434252 |

| 2020-11-16T10:48:11.448+0000 | 0.7019279468450133 |

| 2020-11-16T10:48:11.453+0000 | -2.5906759976580096e-2 |

| 2020-11-16T10:48:11.458+0000 | 1.025799236502406 |

| 2020-11-16T10:48:11.463+0000 | 2.423754193708396 |

| 2020-11-16T10:48:11.469+0000 | 1.0100073192180106 |

| 2020-11-16T10:48:11.474+0000 | 1.2308412912433588 |

| 2020-11-16T10:48:11.479+0000 | 2.2142939785873326 |

| 2020-11-16T10:48:11.484+0000 | 9.639219241219372 |

| 2020-11-16T10:48:11.489+0000 | 0.8964067897832677 |

| 2020-11-16T10:48:11.494+0000 | 2.583753664296168 |

| 2020-11-16T10:48:11.499+0000 | 1.7326439212827238 |

| 2020-11-16T10:48:11.505+0000 | 0.7516388863094139 |

| 2020-11-16T10:48:11.510+0000 | 0.8725633940449549 |

| 2020-11-16T10:48:11.515+0000 | -0.9407676766254014 |

| 2020-11-16T10:48:11.520+0000 | 1.0542712925875175 |

| 2020-11-16T10:48:11.525+0000 | 0.794535189312687 |

| 2020-11-16T10:48:11.530+0000 | 0.5813794557982226 |

| 2020-11-16T10:48:11.536+0000 | 0.4891368786472011 |

| 2020-11-16T10:48:11.541+0000 | 2.3296394918008474 |

| 2020-11-16T10:48:11.546+0000 | 1.425296303524094 |

| 2020-11-16T10:48:11.551+0000 | 1.9276679925454094 |

| 2020-11-16T10:48:11.556+0000 | 0.6178050147872097 |

| 2020-11-16T10:48:11.561+0000 | 1.135269636375052 |

| 2020-11-16T10:48:11.567+0000 | 1.3074367248762568 |

| 2020-11-16T10:48:11.572+0000 | 0.6105659268751382 |

| 2020-11-16T10:48:11.577+0000 | 1.7812955395572572 |

| 2020-11-16T10:48:11.582+0000 | -1.3547368916771827 |

| 2020-11-16T10:48:11.587+0000 | 1.580412775615275 |

| 2020-11-16T10:48:11.592+0000 | 1.5731144914401023 |

| 2020-11-16T10:48:11.597+0000 | -5.725067553082108e-2 |

| 2020-11-16T10:48:11.603+0000 | 0.19580347035995105 |

| 2020-11-16T10:48:11.608+0000 | -2.1501122555202867e-2 |

| 2020-11-16T10:48:11.613+0000 | 1.5783579658949254 |

| 2020-11-16T10:48:11.618+0000 | 1.371796305513024 |

| 2020-11-16T10:48:11.623+0000 | 0.648919899258448 |

| 2020-11-16T10:48:11.628+0000 | -0.7875773550339058 |

| 2020-11-16T10:48:11.633+0000 | 1.3233945353130716 |

| 2020-11-16T10:48:11.639+0000 | 2.5685224032022127 |

| 2020-11-16T10:48:11.644+0000 | 2.7331317575905807 |

| 2020-11-16T10:48:11.649+0000 | 0.2521381731074053 |

| 2020-11-16T10:48:11.654+0000 | 2.2408918489807905 |

| 2020-11-16T10:48:11.659+0000 | 1.4924862197354933 |

| 2020-11-16T10:48:11.664+0000 | 1.194657083531184 |

| 2020-11-16T10:48:11.670+0000 | 0.7067352811215412 |

| 2020-11-16T10:48:11.675+0000 | 2.7701718519244745e-2 |

| 2020-11-16T10:48:11.681+0000 | 0.279797547315617 |

| 2020-11-16T10:48:11.686+0000 | -0.21953266770586133 |

| 2020-11-16T10:48:11.691+0000 | 1.1402931320647434 |

| 2020-11-16T10:48:11.696+0000 | 0.904724947360263 |

| 2020-11-16T10:48:11.702+0000 | 0.6677145203694429 |

| 2020-11-16T10:48:11.707+0000 | 2.019977647420342 |

| 2020-11-16T10:48:18.539+0000 | -0.5190278662580565 |

| 2020-11-16T10:48:18.545+0000 | 1.2549405940975034 |

| 2020-11-16T10:48:18.550+0000 | 2.4267606721380233 |

| 2020-11-16T10:48:18.555+0000 | 0.21858105660909444 |

| 2020-11-16T10:48:18.560+0000 | 1.7701229392924476 |

| 2020-11-16T10:48:18.566+0000 | 8.326770280505069e-2 |

| 2020-11-16T10:48:18.571+0000 | 11.539205812425335 |

| 2020-11-16T10:48:18.576+0000 | 0.612370126029857 |

| 2020-11-16T10:48:18.581+0000 | 1.299073306785623 |

| 2020-11-16T10:48:18.586+0000 | 2.6939073650678083 |

| 2020-11-16T10:48:18.592+0000 | 2.5320627406973344 |

| 2020-11-16T10:48:18.597+0000 | 2.781337457744293e-2 |

| 2020-11-16T10:48:18.602+0000 | 0.3272489908510584 |

| 2020-11-16T10:48:18.607+0000 | -0.9427386544836929 |

| 2020-11-16T10:48:18.613+0000 | 0.9364640268126377 |

| 2020-11-16T10:48:18.618+0000 | 1.919225736153371 |

| 2020-11-16T10:48:18.623+0000 | 0.38826998132506296 |

| 2020-11-16T10:48:18.628+0000 | -0.38655650387475715 |

| 2020-11-16T10:48:18.633+0000 | 1.0433731216978939 |

| 2020-11-16T10:48:18.638+0000 | 1.1500718903613745 |

| 2020-11-16T10:48:18.644+0000 | -0.3661280681150447 |

| 2020-11-16T10:48:18.649+0000 | 0.883444064705467 |

| 2020-11-16T10:48:18.654+0000 | -0.9126173899348853 |

| 2020-11-16T10:48:18.659+0000 | 0.3838114564837034 |

| 2020-11-16T10:48:18.665+0000 | 0.7935189081504388 |

| 2020-11-16T10:48:18.670+0000 | 1.928137393349846 |

| 2020-11-16T10:48:18.675+0000 | 4.7092811957255676e-2 |

| 2020-11-16T10:48:18.680+0000 | 0.4684849965794433 |

| 2020-11-16T10:48:18.685+0000 | 0.6745536358089256 |

| 2020-11-16T10:48:18.691+0000 | 2.100439331925503 |

| 2020-11-16T10:48:18.696+0000 | 1.0053957395581328 |

| 2020-11-16T10:48:18.701+0000 | 1.1651633690031988 |

| 2020-11-16T10:48:18.706+0000 | 1.1620631665685186 |

| 2020-11-16T10:48:18.711+0000 | 0.5686294459758102 |

| 2020-11-16T10:48:18.717+0000 | 5.4695916815372114e-2 |

| 2020-11-16T10:48:18.722+0000 | 0.3673527645506809 |

| 2020-11-16T10:48:18.727+0000 | 1.1825682382920246 |

| 2020-11-16T10:48:18.732+0000 | 2.590900208851957 |

| 2020-11-16T10:48:18.738+0000 | 0.9580677196122074 |

| 2020-11-16T10:48:18.743+0000 | 0.14058634902492095 |

| 2020-11-16T10:48:18.748+0000 | 1.835715236145623 |

| 2020-11-16T10:48:18.753+0000 | 1.0262133311924941 |

| 2020-11-16T10:48:18.758+0000 | 2.3956360313411276 |

| 2020-11-16T10:48:18.763+0000 | -0.42622276533874537 |

| 2020-11-16T10:48:18.769+0000 | 1.532866051791267 |

| 2020-11-16T10:48:18.774+0000 | 0.33837135147986275 |

| 2020-11-16T10:48:18.779+0000 | 0.5993221970260502 |

| 2020-11-16T10:48:18.784+0000 | 0.5268259369536397 |

| 2020-11-16T10:48:18.789+0000 | 0.9338448405595184 |

| 2020-11-16T10:48:18.795+0000 | 1.5020324977316601 |

| 2020-11-16T10:48:18.800+0000 | -0.21633343524824378 |

| 2020-11-16T10:48:18.805+0000 | 0.8387080531274844 |

| 2020-11-16T10:48:18.810+0000 | 1.3278878139665884e-2 |

| 2020-11-16T10:48:18.815+0000 | 1.3291762275434373 |

| 2020-11-16T10:48:18.820+0000 | 0.4837833343304839 |

| 2020-11-16T10:48:18.826+0000 | 0.4918446444728072 |

| 2020-11-16T10:48:18.831+0000 | 1.354678573169704 |

| 2020-11-16T10:48:18.836+0000 | 0.2524216007924791 |

| 2020-11-16T10:48:18.841+0000 | 0.5965026762340784 |

| 2020-11-16T10:48:18.846+0000 | 2.000850130836448 |

| 2020-11-16T10:48:18.851+0000 | 2.217169275505519 |

| 2020-11-16T10:48:18.857+0000 | 0.6876140376775531 |

| 2020-11-16T10:48:18.862+0000 | 1.0508210912529563 |

| 2020-11-16T10:48:18.867+0000 | 1.65676102704454 |

| 2020-11-16T10:48:18.872+0000 | 2.155047641017994 |

| 2020-11-16T10:48:18.877+0000 | 1.0866488363653375 |

| 2020-11-16T10:48:18.882+0000 | 1.0691398773308363 |

| 2020-11-16T10:48:18.888+0000 | 0.6120836384011098 |

| 2020-11-16T10:48:18.893+0000 | 0.24914099314834415 |

| 2020-11-16T10:48:18.898+0000 | 2.8691481936548744 |

| 2020-11-16T10:48:18.903+0000 | 0.7633561289177443 |

| 2020-11-16T10:48:18.908+0000 | 1.4483835248568062 |

| 2020-11-16T10:48:18.913+0000 | 2.6108825545691863 |

| 2020-11-16T10:48:18.918+0000 | 1.2751533422561458 |

| 2020-11-16T10:48:18.924+0000 | 1.0131179898567302 |

| 2020-11-16T10:48:18.929+0000 | 0.46308679994249036 |

| 2020-11-16T10:48:18.935+0000 | 0.7793261962344651 |

| 2020-11-16T10:48:18.940+0000 | 1.1671037114122738 |

| 2020-11-16T10:48:18.945+0000 | 2.143874895015684 |

| 2020-11-16T10:48:18.950+0000 | 1.2344250301306705 |

| 2020-11-16T10:48:18.955+0000 | 1.7402355361851662 |

| 2020-11-16T10:48:18.960+0000 | 1.0396911219696297 |

| 2020-11-16T10:48:18.966+0000 | 1.8089030277370215 |

| 2020-11-16T10:48:18.971+0000 | 2.1235708326267533 |

| 2020-11-16T10:48:18.976+0000 | -0.33938888075466234 |

| 2020-11-16T10:48:18.981+0000 | 1.090463095441436 |

| 2020-11-16T10:48:18.986+0000 | 1.3101016219338661 |

| 2020-11-16T10:48:18.992+0000 | -0.6251493773996968 |

| 2020-11-16T10:48:18.998+0000 | 1.7223308331307168 |

| 2020-11-16T10:48:19.003+0000 | 1.0299845635585438 |

| 2020-11-16T10:48:19.009+0000 | 1.962846046162154 |

| 2020-11-16T10:48:19.014+0000 | -1.8537289273720337e-2 |

| 2020-11-16T10:48:19.019+0000 | 0.7977254725466605 |

| 2020-11-16T10:48:19.024+0000 | -0.21427479370557312 |

| 2020-11-16T10:48:19.029+0000 | -1.6661289018266037 |

| 2020-11-16T10:48:19.034+0000 | 1.144457447997468 |

| 2020-11-16T10:48:19.043+0000 | 0.6503516296653954 |

| 2020-11-16T10:48:19.048+0000 | 6.581335919503728e-2 |

| 2020-11-16T10:48:19.053+0000 | 1.5478749815243467 |

| 2020-11-16T10:48:19.058+0000 | 1.5497411627601851 |

| 2020-11-16T10:48:25.294+0000 | 0.21791376679544772 |

| 2020-11-16T10:48:25.299+0000 | 1.1291967445604012e-2 |

| 2020-11-16T10:48:25.304+0000 | -0.30293144696154806 |

| 2020-11-16T10:48:25.309+0000 | 0.4303254534802833 |

| 2020-11-16T10:48:25.314+0000 | 1.5521304466388752 |

| 2020-11-16T10:48:25.319+0000 | 2.2910302464408394 |

| 2020-11-16T10:48:25.325+0000 | 0.4374695472538803 |

| 2020-11-16T10:48:25.330+0000 | 0.4085186427342812 |

| 2020-11-16T10:48:25.335+0000 | -6.531316403553289e-2 |

| 2020-11-16T10:48:25.340+0000 | 6.39812257122474e-3 |

| 2020-11-16T10:48:25.345+0000 | 0.24840501087934996 |

| 2020-11-16T10:48:25.350+0000 | -1.021974709142702 |

| 2020-11-16T10:48:25.355+0000 | -9.233941622902653e-2 |

| 2020-11-16T10:48:25.361+0000 | 0.41027379764960337 |

| 2020-11-16T10:48:25.366+0000 | 1.864567223228712 |

| 2020-11-16T10:48:25.371+0000 | 1.5393474896194466 |

| 2020-11-16T10:48:25.376+0000 | 1.124907339909468 |

| 2020-11-16T10:48:25.381+0000 | 2.0206475875654997 |

| 2020-11-16T10:48:25.386+0000 | -0.7058862229186389 |

| 2020-11-16T10:48:25.392+0000 | 1.2344926787652002 |

| 2020-11-16T10:48:25.397+0000 | 1.1406194673922239 |

| 2020-11-16T10:48:25.402+0000 | 1.4084552620839659 |

| 2020-11-16T10:48:25.407+0000 | 0.739931161380885 |

| 2020-11-16T10:48:25.412+0000 | 0.29958396894640427 |

| 2020-11-16T10:48:25.417+0000 | -0.9379262816791101 |

| 2020-11-16T10:48:25.422+0000 | 0.8259556704405835 |

| 2020-11-16T10:48:25.428+0000 | -0.3199802616466474 |

| 2020-11-16T10:48:25.433+0000 | 1.9656420693625898 |

| 2020-11-16T10:48:25.438+0000 | 0.8789984776053141 |

| 2020-11-16T10:48:25.443+0000 | 2.4965042040211793 |

| 2020-11-16T10:48:25.448+0000 | 1.714778861431627 |

| 2020-11-16T10:48:25.454+0000 | 0.8669641143187272 |

| 2020-11-16T10:48:25.459+0000 | 1.0757413525008879 |

| 2020-11-16T10:48:25.464+0000 | 1.9658378382249264e-2 |

| 2020-11-16T10:48:25.469+0000 | 0.7165095911306543 |

| 2020-11-16T10:48:25.474+0000 | 1.2251547673860115 |

| 2020-11-16T10:48:25.479+0000 | 1.5869187313570912 |

| 2020-11-16T10:48:25.485+0000 | 0.3928727449886338 |

| 2020-11-16T10:48:25.490+0000 | 1.7722759642539445 |

| 2020-11-16T10:48:25.495+0000 | 1.0350331272239843 |

| 2020-11-16T10:48:25.500+0000 | -1.4234008750858624 |

| 2020-11-16T10:48:25.505+0000 | 0.6054572828043063 |

| 2020-11-16T10:48:25.511+0000 | 0.3024585268617903 |

| 2020-11-16T10:48:25.516+0000 | 2.9432999768948087e-2 |

| 2020-11-16T10:48:25.521+0000 | 0.9382472473173075 |

| 2020-11-16T10:48:25.526+0000 | 2.11287419383702 |

| 2020-11-16T10:48:25.531+0000 | 1.0876022969280528 |

| 2020-11-16T10:48:25.536+0000 | 0.36548993902899596 |

| 2020-11-16T10:48:25.542+0000 | -2.005053653271253 |

| 2020-11-16T10:48:25.547+0000 | 2.0367928918435894 |

| 2020-11-16T10:48:25.552+0000 | 9.261254419611942e-2 |

| 2020-11-16T10:48:25.557+0000 | 2.156248406806113 |

| 2020-11-16T10:48:25.562+0000 | -0.5295405173638772 |

| 2020-11-16T10:48:25.568+0000 | 2.452318995994742 |

| 2020-11-16T10:48:25.573+0000 | 0.8636413385915132 |

| 2020-11-16T10:48:25.578+0000 | 0.31460938814139794 |

| 2020-11-16T10:48:25.583+0000 | -2.0257131370059023e-2 |

| 2020-11-16T10:48:25.588+0000 | 1.3213739526626505 |

| 2020-11-16T10:48:25.593+0000 | 0.9463001869917488 |

| 2020-11-16T10:48:25.599+0000 | 0.986171393681171 |

| 2020-11-16T10:48:25.604+0000 | 0.12492672949874628 |

| 2020-11-16T10:48:25.609+0000 | 0.9908400692267174 |

| 2020-11-16T10:48:25.614+0000 | 1.0695623856543282 |

| 2020-11-16T10:48:25.621+0000 | 1.0221220766637027 |

| 2020-11-16T10:48:25.627+0000 | 2.8492797946693904 |

| 2020-11-16T10:48:25.632+0000 | 1.0609742751901396 |

| 2020-11-16T10:48:25.637+0000 | 1.6409490831011158 |

| 2020-11-16T10:48:25.642+0000 | 1.5427085071446491 |

| 2020-11-16T10:48:25.647+0000 | 1.7312859942989034 |

| 2020-11-16T10:48:25.653+0000 | 1.2947069326850533 |

| 2020-11-16T10:48:25.658+0000 | 0.3756138591369289 |

| 2020-11-16T10:48:25.663+0000 | 1.4349084022701803 |

| 2020-11-16T10:48:25.668+0000 | 0.37649651121290106 |

| 2020-11-16T10:48:25.673+0000 | 0.7071860096564935 |

| 2020-11-16T10:48:25.679+0000 | 1.5065536846394356 |

| 2020-11-16T10:48:25.684+0000 | 0.15009861698305105 |

| 2020-11-16T10:48:25.689+0000 | 3.5084734586888766e-2 |

| 2020-11-16T10:48:25.695+0000 | 1.9474563946729155 |

| 2020-11-16T10:48:25.700+0000 | 9.423175513609095 |

| 2020-11-16T10:48:25.705+0000 | 2.4871634825039015 |

| 2020-11-16T10:48:25.710+0000 | 2.8472676324820685 |

| 2020-11-16T10:48:25.715+0000 | 1.5999488876250578 |

| 2020-11-16T10:48:25.720+0000 | -0.2693864675719999 |

| 2020-11-16T10:48:25.725+0000 | 1.6304414331783441 |

| 2020-11-16T10:48:25.731+0000 | 0.39324529792831353 |

| 2020-11-16T10:48:25.736+0000 | 0.4053253263569069 |

| 2020-11-16T10:48:25.741+0000 | 0.9270234970247857 |

| 2020-11-16T10:48:25.746+0000 | 1.4509585503273819 |

| 2020-11-16T10:48:25.751+0000 | 0.8878267401905819 |

| 2020-11-16T10:48:25.756+0000 | 1.1883024549090635 |

| 2020-11-16T10:48:25.761+0000 | 1.0163155722641077 |

| 2020-11-16T10:48:25.767+0000 | -0.8003099498427713 |

| 2020-11-16T10:48:25.772+0000 | -0.9483216075980454 |

| 2020-11-16T10:48:25.777+0000 | 1.0437451610964232 |

| 2020-11-16T10:48:25.782+0000 | 2.19837214407137 |

| 2020-11-16T10:48:25.787+0000 | 2.070797890483533 |

| 2020-11-16T10:48:25.792+0000 | 1.2067096088561005 |

| 2020-11-16T10:48:25.798+0000 | 0.5043809533024068 |