Christoffer Långström

This section aims to recreate & elaborate on the material presented in the following talk at the 2018 Spark AI summit by Sim Semeonov & Slater Victoroff. Following the advent of the General Data Protection Regulation in the EU, many data scientists are concerned about how this legislation will impact them & what steps they should take in order to protect their data and themselves (as holders of the data) in terms of privacy. Here we focus on two vital aspects of GDPR compliant learning, keeping only pseudonymous identifiers and ensuring that subjects can ask what data is being kept about them.

Watch the video below to see the full talk:

Great Models with Great Privacy: Optimizing ML and AI Under GDPR by Sim Simeonov & Slater Victoroff

Why Do We Need Pseudonymization?

We want to have identifiers for our data, but personal identifiers (name, social numbers) are to sensitive to store. By storing (strong) pseudonyms we satisfy this requirement. However, the GDPR stipulates that we must be able to answer the question "What data do you have on me?" from any individual in the data set (The Right To Know)

What we need:

- Consistent serialization of data

- Assign a unique ID to each subject in the database

- Be able to identify individuals in the set, given their identifiers

- Ensure that adversarial parties may not decode these IDs

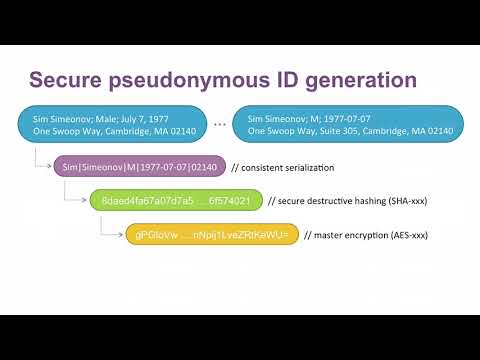

Below is a flowchart showing the process at a high level. Each step is explained later in the guide.

1) Data serialization & Randomization

All data is not formatted equally, and if we wish any processing procedure to be consistent it is important to ensure proper formating. The formating employed here is for a row with fields firstname, lastname, gender, date of birth which is serialized according to the rule: firstname, lastname, gender, dob --> Firstname|Lastname|M/F|YYYY-MM-DD. All of this information will be used in generating the pseudonymous ID, which will help prevent collisions caused by people having the same name, etc.

An important point to be made here is that there should also be some random shuffling of the rows, to decrease further the possibility of adversaries using additional information to divulge sensitive attributes from your table. If the original data set is in alphabetical order, and the output (even thought it has been treated) remains in the same order, we would be vulnerable to attacks if someone compared alphabetical lists of names run through common hash functions.

Note:

It is often necessary to "fuzzy" the data to some extent in the sense that we decrease the level of accuracy by, for instance, reducing a day of birth to year of birth to decrease the power of quasi-identifiers. As mentioned in part 1, quasi-identifiers are fields that do not individually provide sensitive information but can be utilized together to identify a member of the set. This is discussed further in the next section, for now we implement a basic version of distilling a date of birth to a year of birth. Similar approaches would be to only store initials of names, cities instead of adresses, or dropping information all together (such as genders).

import org.apache.spark.sql.functions.rand

// Define the class for pii

case class pii(firstName: String, lastName: String, gender: String, dob: String)

"""

firstName,lastName,gender,dob

Howard,Philips,M,1890-08-20

Edgar,Allan,M,1809-01-19

Arthur,Ignatius,M,1859-05-22

Mary,Wollstonecraft,F,1797-08-30

"""

// Read the data from a csv file, then convert to dataset // /FileStore/tables/demo_pii.txt was uploaded

val IDs = spark.read.option("header", "true").csv("/FileStore/tables/demo_pii.txt").orderBy(rand())

val ids = IDs.as[pii]

ids.show

+---------+--------------+------+----------+

|firstName| lastName|gender| dob|

+---------+--------------+------+----------+

| Mary|Wollstonecraft| F|1797-08-30|

| Edgar| Allan| M|1809-01-19|

| Arthur| Ignatius| M|1859-05-22|

| Howard| Philips| M|1890-08-20|

+---------+--------------+------+----------+

notebook:6: warning: a pure expression does nothing in statement position; you may be omitting necessary parentheses

"""

^

import org.apache.spark.sql.functions.rand

defined class pii

IDs: org.apache.spark.sql.Dataset[org.apache.spark.sql.Row] = [firstName: string, lastName: string ... 2 more fields]

ids: org.apache.spark.sql.Dataset[pii] = [firstName: string, lastName: string ... 2 more fields]

import org.apache.spark.sql.functions.rand

// Define the class for pii

case class pii(firstName: String, lastName: String, gender: String, dob: String)

"""

firstName,lastName,gender,dob

Howard,Philips,M,1890-08-20

Edgar,Allan,M,1809-01-19

Arthur,Ignatius,M,1859-05-22

Mary,Wollstonecraft,F,1797-08-30

"""

// Read the data from a csv file, then convert to dataset // /FileStore/tables/demo_pii.txt was uploaded

val IDs = spark.read.option("header", "true").csv("/FileStore/tables/demo_pii.txt").orderBy(rand())

val ids = IDs.as[pii]

ids.show

+---------+--------------+------+----------+

|firstName| lastName|gender| dob|

+---------+--------------+------+----------+

| Arthur| Ignatius| M|1859-05-22|

| Howard| Philips| M|1890-08-20|

| Edgar| Allan| M|1809-01-19|

| Mary|Wollstonecraft| F|1797-08-30|

+---------+--------------+------+----------+

notebook:6: warning: a pure expression does nothing in statement position; you may be omitting necessary parentheses

"""

^

import org.apache.spark.sql.functions.rand

defined class pii

IDs: org.apache.spark.sql.Dataset[org.apache.spark.sql.Row] = [firstName: string, lastName: string ... 2 more fields]

ids: org.apache.spark.sql.Dataset[pii] = [firstName: string, lastName: string ... 2 more fields]

- Hashing

Hash functions apply mathematical algorithms to map abitrary size inputs to fixed length bit string (referred to as "the hash"), and cryptographic hash functions do so in a one-way fashion, i.e it is not possible to reverse this procedure. This makes them good candidates for generating pseudo-IDs since they cannot be reverse engineered but we may easily hash any given identifier and search for it in the database. However, the procedure is not completely satisfactory in terms of safety. Creating good hash functions is very hard, and so commonly a standard hash function is used such as the SHA series. This means unfortunately that anyone can hash common names, passwords, etc using these standard hash functions and then search for them in our database in what is known as a rainbow attack.

A realistic way of solving this problem is then to encrypt the resulting hashes using a strong encryption algorithm, making them safe for storage by the database holder.

It is important to choose a secure destructive hash function, MD5, SHA-1 and SHA-2 are all vulnerable to length extension attacks, for a concrete example see here.

import java.security.MessageDigest

import java.util.Base64

import org.apache.spark.sql._

import org.apache.spark.sql.functions._

import org.apache.spark.sql.types.{IntegerType}

// Perform SHA-256 hashin

def sha_256(in: String): String = {

val md: MessageDigest = MessageDigest.getInstance("SHA-256") // Instantiate MD with algo SHA-256

new String(Base64.getEncoder.encode(md.digest(in.getBytes)),"UTF-8") // Encode the resulting byte array as a base64 string

}

// Generate UDFs from the above functions (not directly evaluated functions)

// If you wish to call these functions directly then use the names in parenthesis, if you wish to use them in a transform use the udf() names.

val sha__256 = udf(sha_256 _)

import java.security.MessageDigest

import java.util.Base64

import org.apache.spark.sql._

import org.apache.spark.sql.functions._

import org.apache.spark.sql.types.IntegerType

sha_256: (in: String)String

sha__256: org.apache.spark.sql.expressions.UserDefinedFunction = UserDefinedFunction(<function1>,StringType,Some(List(StringType)))

val myStr = "Hello!" // Input is a string

val myHash = sha_256(myStr)

println(myHash) // The basic function takes a strings and outputs a string of bits in base64 ("=" represents padding becuse of the block based hashes)

M00Bb3Vc1txYxTqG4YOIL47BT1L7BTRYh8il7dQsh7c=

myStr: String = Hello!

myHash: String = M00Bb3Vc1txYxTqG4YOIL47BT1L7BTRYh8il7dQsh7c=

// Concantination with a pipe character

val p = lit("|")

// Define our serialization rules as a column

val rules: Column = {

//Use all of the pii for the pseudo ID

concat(upper('firstName), p, upper('lastName), p, upper('gender), p,'dob)

}

p: org.apache.spark.sql.Column = |

rules: org.apache.spark.sql.Column = concat(upper(firstName), |, upper(lastName), |, upper(gender), |, dob)

3) Encryption

Encrypting our hashed IDs via a symmetric key cipher ensures that the data holder can encrypt the hashed identifiers for storage and avoid the hash table attacks discussed above, and can decrypt the values at will to lookup entries. The choice of encryption standard for these implementations is AES-128. When provided with a key (a 128 bit string in our case), the AES algorithm encrypts the data into a sequence of bits, traditionally formated in base 64 binary to text encoding. The key should be pseudorandomly generated, which is a standard functionality in Java implementions.

import javax.crypto.KeyGenerator

import javax.crypto.Cipher

import javax.crypto.spec.SecretKeySpec

var myKey: String = ""

// Perform AES-128 encryption

def encrypt(in: String): String = {

val raw = KeyGenerator.getInstance("AES").generateKey.getEncoded() //Initiates a key generator object of type AES, generates the key, and encodes & returns the key

myKey = new String(Base64.getEncoder.encode(raw),"UTF-8") //String representation of the key for decryption

val skeySpec = new SecretKeySpec(raw, "AES") //Creates a secret key object from our generated key

val cipher = Cipher.getInstance("AES") //Initate a cipher object of type AES

cipher.init(Cipher.ENCRYPT_MODE, skeySpec) // Initialize the cipher with our secret key object, specify encryption

new String(Base64.getEncoder.encode(cipher.doFinal(in.getBytes)),"UTF-8") // Encode the resulting byte array as a base64 string

}

// Perform AES-128 decryption

def decrypt(in: String): String = {

val k = new SecretKeySpec(Base64.getDecoder.decode(myKey.getBytes), "AES") //Decode the key from base 64 representation

val cipher = Cipher.getInstance("AES") //Initate a cipher object of type AES

cipher.init(Cipher.DECRYPT_MODE, k) // Initialize the cipher with our secret key object, specify decryption

new String((cipher.doFinal(Base64.getDecoder.decode(in))),"UTF-8") // Encode the resulting byte array as a base64 string

}

val myEnc = udf(encrypt _)

val myDec = udf(decrypt _)

import javax.crypto.KeyGenerator

import javax.crypto.Cipher

import javax.crypto.spec.SecretKeySpec

myKey: String = ""

encrypt: (in: String)String

decrypt: (in: String)String

myEnc: org.apache.spark.sql.expressions.UserDefinedFunction = UserDefinedFunction(<function1>,StringType,Some(List(StringType)))

myDec: org.apache.spark.sql.expressions.UserDefinedFunction = UserDefinedFunction(<function1>,StringType,Some(List(StringType)))

val secretStr = encrypt(myStr) //Output is a bit array encoded as a string in base64

println("Encrypted: ".concat(secretStr))

println("Decrypted: ".concat(decrypt(secretStr)))

Encrypted: qrg96tS/xhbWAKymqZQd+w==

Decrypted: Hello!

secretStr: String = qrg96tS/xhbWAKymqZQd+w==

4) Define Mappings

Now we are ready to define the mappings that make up our pseudonymization procedure:

val psids = {

//Serialize -> Hash -> Encrypt

myEnc(

sha__256(

rules)

).as(s"pseudoID")

}

psids: org.apache.spark.sql.Column = UDF(UDF(concat(upper(firstName), |, upper(lastName), |, upper(gender), |, dob))) AS `pseudoID`

val quasiIdCols: Seq[Column] = Seq(

//Fuzzify data

'gender,

'dob.substr(1, 4).cast(IntegerType).as("yob")

)

quasiIdCols: Seq[org.apache.spark.sql.Column] = List(gender, CAST(substring(dob, 1, 4) AS INT) AS `yob`)

// Here we define the PII transformation to be applied to our dataset, which outputs a dataframe modified according to our specifications.

def removePII(ds: Dataset[_]): DataFrame =

ds.toDF.select(quasiIdCols ++ Seq(psids): _*)

removePII: (ds: org.apache.spark.sql.Dataset[_])org.apache.spark.sql.DataFrame

5) Pseudonymize the Data

Here we perform the actual transformation, saving the output in a new dataframe. This is the data we should be storing in our databases for future use.

val masterIds = ids.transform(removePII)

display(masterIds)

| gender | yob | pseudoID |

|---|---|---|

| M | 1859.0 | b48AjgbsA4w7SWIhkpBVVVYORZksKJeCF/CF/xIdGkmneOYVr4HlKeh2N5YjE24M |

| M | 1890.0 | 04AG3WaElWdPv7g6thR7KqiPcrDD6uwGzMvNyzkmbwXvw5b5X10bZdliUr4Ts0cx |

| M | 1809.0 | 7sJpA5IjhYN5C+nqDCf5pT602OhTvClenIJ1DX3HeMLkc1JoAuplHNguAK1S8WAG |

| F | 1797.0 | bOc7c9e3bl5tF9EdlEFJmtnmv0HSUC+vEx3fo4v3LS4goGRx47/iQpT8ARrTyvuq |

6) Partner Specific Encoding

In practice it may be desirable to, as the trusted data holder, send out partial data sets to different partners. Here, the main concern is that if we send two partial data sets to two different partners, those two partners may merge their data sets together without our knowledge, and obtain more information than we intended. This issue is discussed further in the next part, where attacks to divulge sensitive information from pseudonymized data sets is handled, but for now we will show how to limit the dangers using partner specific encoding.

// If we do not need partner specific passwords, it is sufficient to simply call the transform twice since the randomized key will be different each time.

val partnerIdsA = ids.transform(removePII)

val partnerIdsB = ids.transform(removePII)

display(partnerIdsB)

| gender | yob | pseudoID |

|---|---|---|

| M | 1859.0 | ZXfGvFpVfvrsqYcWlyKZ/R9OZIgGq7IAeaN4ZUdVEOkwsOCr0iLZkyYpNF9s+ycx |

| M | 1890.0 | npQ386CleB7dL3BM9E513SPzvd9jMFlTtN0rC27m6eFOTwPsDrUGJmZ9L4DgAN58 |

| M | 1809.0 | 1Gyh7ynu2lwz43YfCyiu9jFiONehWZF11qzM1d9g2XWTl8y1VXpn80IJ29jEUHA8 |

| F | 1797.0 | zi0ZYjYUX5g254CRANgeLtwt4zZDz9n9DrQ8h5tsLBrQQXSSr/P1T0zpJxxJHdXD |

display(partnerIdsA)

| gender | yob | pseudoID |

|---|---|---|

| M | 1859.0 | 3XwROq3GyPYJdiO3ZN+OuUowX4jRix6J+NNmkEMuOqTu2k3QssXp9b7Bi0O/LXAZ |

| M | 1890.0 | XwLU5HKLQFGefeq2EX+GwhF9tEyeU/Q6/aKgy8ZMYJ0AD/3aBPyi1/k/NrP8Su1j |

| M | 1809.0 | E3RQ+PodF86W4AOMRcoNL1OoXDB1PmFsyXj+ynlK6rl0XkJFeDz8nfVYAX7+6MHu |

| F | 1797.0 | wbS+eZ4QgpW6SONneBm9+jTPHE2J3bWba/ZwL3+iWZo5K+kElhmFm7DjpFyAmp37 |

The data set above is now pseudonymized, but is still vulnerable to other forms of privacy breaches. For instance, since there is only one female in the set, an adversary who only knows that their target is in the set and is a woman, her information is still subject to attacks. For more information see K-anonymity, see also the original paper