This notebook series was updated from the previous one, sds-2-x-dl, to work with Spark 3.0.1 and Python 3+. The series was updated on 2021-01-18. The table below lists the changes from the previous version as well as the current flaws that need revision.

Thanks to Christian von Koch and William Anzén for their contributions towards making these materials Spark 3.0.1 and Python 3+ compliant.

Table of changes and current flaws

| Notebook | Changes | Current flaws |
|---|---|---|
| 049 | cmd6 & cmd15: Picture missing | |
| 051 | cmd9: Changed syntax with dataframes in Pandas. Changed to df.loc for it to work properly. Also changed all .as_matrix() to .values since as_matrix() will be deprecated in future versions | |
| 053 | cmd4: Python WARNING:tensorflow:From /databricks/python/lib/python3.7/site-packages/tensorflow/python/framework/op_def_library.py:263: colocate_with (from tensorflow.python.framework.ops) is deprecated and will be removed in a future version. Instructions for updating: Colocations handled automatically by placer. `<tf.Variable 'y:0' shape=() dtype=int32_ref>` | |
| 054 | cmd15: Python WARNING:tensorflow:From /databricks/python/lib/python3.7/site-packages/tensorflow/python/framework/op_def_library.py:263: colocate_with (from tensorflow.python.framework.ops) is deprecated and will be removed in a future version. Instructions for updating: Colocations handled automatically by placer. `<tf.Variable 'y:0' shape=() dtype=int32_ref>` | |
| 055 | cmd25: Changed parameter in Dropout layer to rate=1-keep_prob (see comments in code) since keep_prob will be deprecated in future versions. | |
| 057 | cmd9: Changed parameter in Dropout layer to rate=1-keep_prob (see comments in code) since keep_prob will be deprecated in future versions. | cmd4: Python WARNING:tensorflow:From /databricks/python/lib/python3.7/site-packages/tensorflow/python/framework/op_def_library.py:263: colocate_with (from tensorflow.python.framework.ops) is deprecated and will be removed in a future version. Instructions for updating: Colocations handled automatically by placer. `<tf.Variable 'y:0' shape=() dtype=int32_ref>`, WARNING:tensorflow:From /databricks/python/lib/python3.7/site-packages/tensorflow/python/ops/math_ops.py:3066: to_int32 (from tensorflow.python.ops.math_ops) is deprecated and will be removed in a future version. Instructions for updating: Use tf.cast instead. |
| 058 | cmd7 & cmd9: Updated path to cifar-10-batches-py | cmd17: Python WARNING:tensorflow:From /databricks/python/lib/python3.7/site-packages/tensorflow/python/framework/op_def_library.py:263: colocate_with (from tensorflow.python.framework.ops) is deprecated and will be removed in a future version. Instructions for updating: Colocations handled automatically by placer. `<tf.Variable 'y:0' shape=() dtype=int32_ref>`, WARNING:tensorflow:From /databricks/python/lib/python3.7/site-packages/tensorflow/python/ops/math_ops.py:3066: to_int32 (from tensorflow.python.ops.math_ops) is deprecated and will be removed in a future version. Instructions for updating: Use tf.cast instead. |
| 060 | cmd2: Python WARNING:tensorflow:From /databricks/python/lib/python3.7/site-packages/tensorflow/python/framework/op_def_library.py:263: colocate_with (from tensorflow.python.framework.ops) is deprecated and will be removed in a future version. Instructions for updating: Colocations handled automatically by placer. `<tf.Variable 'y:0' shape=() dtype=int32_ref>`, WARNING:tensorflow:From /databricks/python/lib/python3.7/site-packages/tensorflow/python/ops/math_ops.py:3066: to_int32 (from tensorflow.python.ops.math_ops) is deprecated and will be removed in a future version. Instructions for updating: Use tf.cast instead. | |
| 062 | cmd21: Changed parameter in Dropout layer to rate=1-keep_prob (see comments in code) since keep_prob will be deprecated in future versions. | |
| 063 | cmd16-cmd18: Saving directly to a dbfs mount with save_weights() in keras does not work. Workaround: Save first locally on tmp and then move files to dbfs. See https://github.com/h5py/h5py/issues/709 | cmd16: Python WARNING:tensorflow:From /databricks/python/lib/python3.7/site-packages/tensorflow/python/framework/op_def_library.py:263: colocate_with (from tensorflow.python.framework.ops) is deprecated and will be removed in a future version. Instructions for updating: Colocations handled automatically by placer. `<tf.Variable 'y:0' shape=() dtype=int32_ref>`, WARNING:tensorflow:From /databricks/python/lib/python3.7/site-packages/tensorflow/python/ops/math_ops.py:3066: to_int32 (from tensorflow.python.ops.math_ops) is deprecated and will be removed in a future version. Instructions for updating: Use tf.cast instead. |

ScaDaMaLe Course site and book


This is Raaz's update of Siva's whirlwind compression of Google's free DL course on Udacity https://www.youtube.com/watch?v=iDyeK3GvFpo for Adam Breindel's DL modules that will follow.

Deep learning: A Crash Introduction

This notebook provides an introduction to Deep Learning. It is meant to help you descend more fully into these learning resources and references:

- Udacity's course on Deep Learning https://www.udacity.com/course/deep-learning--ud730 by Google engineers: Arpan Chakraborty and Vincent Vanhoucke and their full video playlist:

- Neural networks and deep learning http://neuralnetworksanddeeplearning.com/ by Michael Nielsen

- Deep learning book http://www.deeplearningbook.org/ by Ian Goodfellow, Yoshua Bengio and Aaron Courville

- Deep learning - buzzword for Artificial Neural Networks

- What is it?

- Supervised learning model - Classifier

- Unsupervised model - Anomaly detection (say via auto-encoders)

- Needs lots of data

- Online learning model - backpropagation

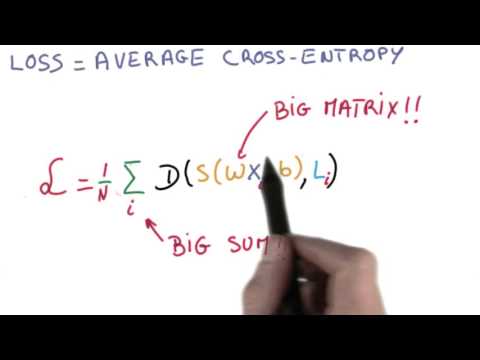

- Optimization - Stochastic gradient descent

- Regularization - L1, L2, Dropout

- Supervised

- Fully connected network

- Convolutional neural network - Eg: For classifying images

- Recurrent neural networks - Eg: For use on text, speech

- Unsupervised

- Autoencoder

A quick recap of logistic regression / linear models

(watch now 46 seconds from 4 to 50):

-- Video Credit: Udacity's deep learning by Arpan Chakraborty and Vincent Vanhoucke

Regression

y = mx + c

Another way to look at a linear model

-- Image Credit: Michael Nielsen

Recap - Gradient descent

(1:54 seconds):

-- Video Credit: Udacity's deep learning by Arpan Chakraborty and Vincent Vanhoucke

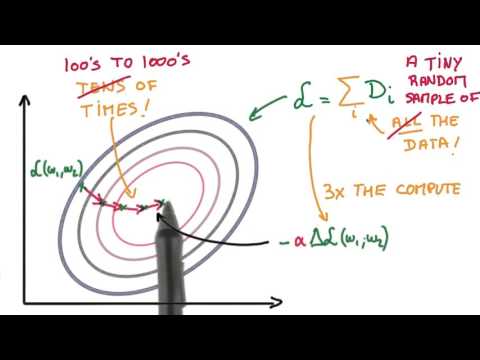
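As a companion to the recap above, here is a minimal sketch of batch gradient descent fitting the line y = mx + c by minimising the mean squared error. The function name `fit_line_gd`, the learning rate, the step count and the synthetic data are illustrative assumptions and do not come from the course videos.

```python
import numpy as np

def fit_line_gd(x, y, learning_rate=0.1, steps=2000):
    """Fit y ~ m*x + c by batch gradient descent on the mean squared error."""
    m, c = 0.0, 0.0
    for _ in range(steps):
        residual = (m * x + c) - y             # prediction error at each point
        grad_m = 2.0 * np.mean(residual * x)   # d(MSE)/dm
        grad_c = 2.0 * np.mean(residual)       # d(MSE)/dc
        m -= learning_rate * grad_m            # step against the gradient
        c -= learning_rate * grad_c
    return m, c

# Synthetic, noise-free data generated from m = 3 and c = 1 (assumed values).
x = np.linspace(0.0, 1.0, 50)
y = 3.0 * x + 1.0
m_hat, c_hat = fit_line_gd(x, y)
print(m_hat, c_hat)
```

Stochastic gradient descent follows the same update rule but estimates the two gradients from a small random subset of the points at each step.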

Recap - Stochastic Gradient descent

(2:25 seconds):

(1:28 seconds):

-- Video Credit: Udacity's deep learning by Arpan Chakraborty and Vincent Vanhoucke

HOGWILD! Parallel SGD without locks http://i.stanford.edu/hazy/papers/hogwild-nips.pdf

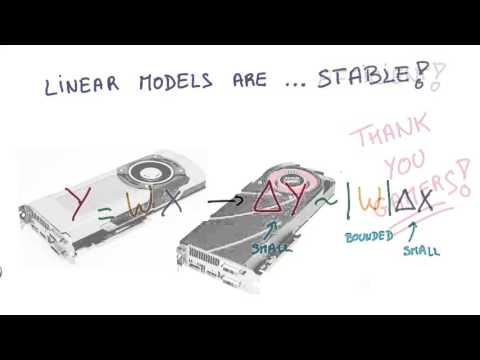

Why deep learning? - Linear model

(24 seconds - 15 to 39):

-- Video Credit: Udacity's deep learning by Arpan Chakraborty and Vincent Vanhoucke

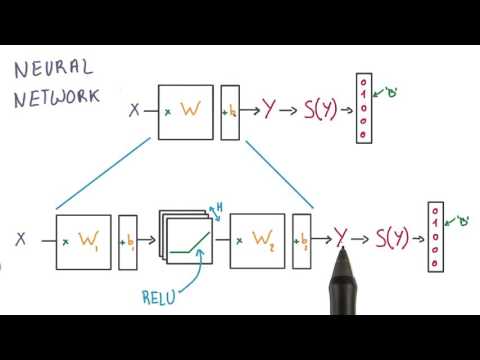

ReLU - Rectified linear unit or Rectifier - max(0, x)

-- Image Credit: Wikipedia
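The rectifier is simple enough to write down directly. The sketch below applies max(0, x) element-wise with NumPy; the function name `relu` and the sample values are illustrative choices.

```python
import numpy as np

def relu(x):
    """Rectified linear unit: element-wise max(0, x)."""
    return np.maximum(0.0, x)

values = np.array([-2.0, -0.5, 0.0, 1.5])
activated = relu(values)   # negative inputs are clipped to zero
print(activated)
```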

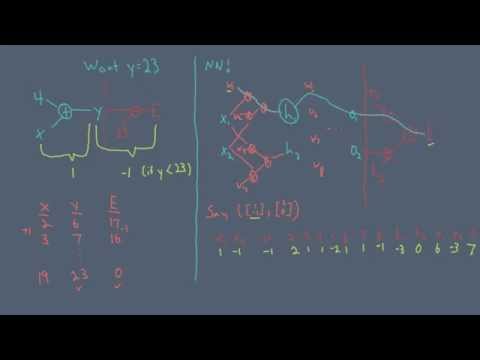

Neural Network

Watch now (45 seconds, 0-45)

-- Video Credit: Udacity's deep learning by Arpan Chakraborty and Vincent Vanhoucke

Is decision tree a linear model? http://datascience.stackexchange.com/questions/6787/is-decision-tree-algorithm-a-linear-or-nonlinear-algorithm

Neural Network

-- Image credit: Wikipedia

Multiple hidden layers

-- Image credit: Michael Nielsen

What does it mean to go deep? What do each of the hidden layers learn?

Watch now (1:13 seconds)

-- Video Credit: Udacity's deep learning by Arpan Chakraborty and Vincent Vanhoucke

Chain rule

\[ (f \circ g)' = (f' \circ g) \cdot g' \]
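A quick numerical sanity check of the chain rule, under the assumption that f = sin and g(x) = x^2 (both chosen here purely for illustration): the analytic derivative f'(g(x)) · g'(x) should agree with a finite-difference estimate of the derivative of the composition.

```python
import math

def f(u):
    return math.sin(u)

def g(x):
    return x ** 2

def chain_rule_derivative(x):
    """(f o g)'(x) = f'(g(x)) * g'(x), with f' = cos and g'(x) = 2x."""
    return math.cos(g(x)) * 2.0 * x

def numerical_derivative(func, x, h=1e-6):
    """Central finite-difference approximation of func'(x)."""
    return (func(x + h) - func(x - h)) / (2.0 * h)

x0 = 0.7
analytic = chain_rule_derivative(x0)
numeric = numerical_derivative(lambda x: f(g(x)), x0)
print(analytic, numeric)
```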

Chain rule in neural networks

Watch later (55 seconds)

-- Video Credit: Udacity's deep learning by Arpan Chakraborty and Vincent Vanhoucke

Backpropagation

To properly understand this you are going to minimally need 20 minutes or so, depending on how rusty your maths is now.

First go through this carefully: https://stats.stackexchange.com/questions/224140/step-by-step-example-of-reverse-mode-automatic-differentiation

Watch later (9:55 seconds)

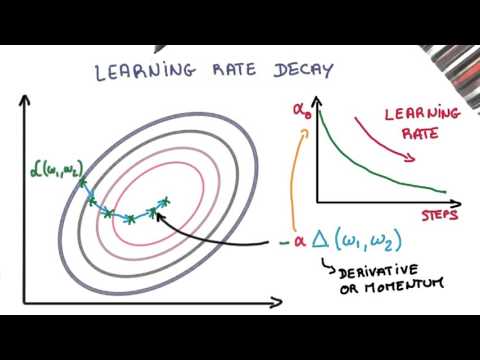

How do you set the learning rate? - Step size in SGD?

There is a lot more... including newer frameworks for automating these knobs using probabilistic programs (but in non-distributed settings as of Dec 2017).

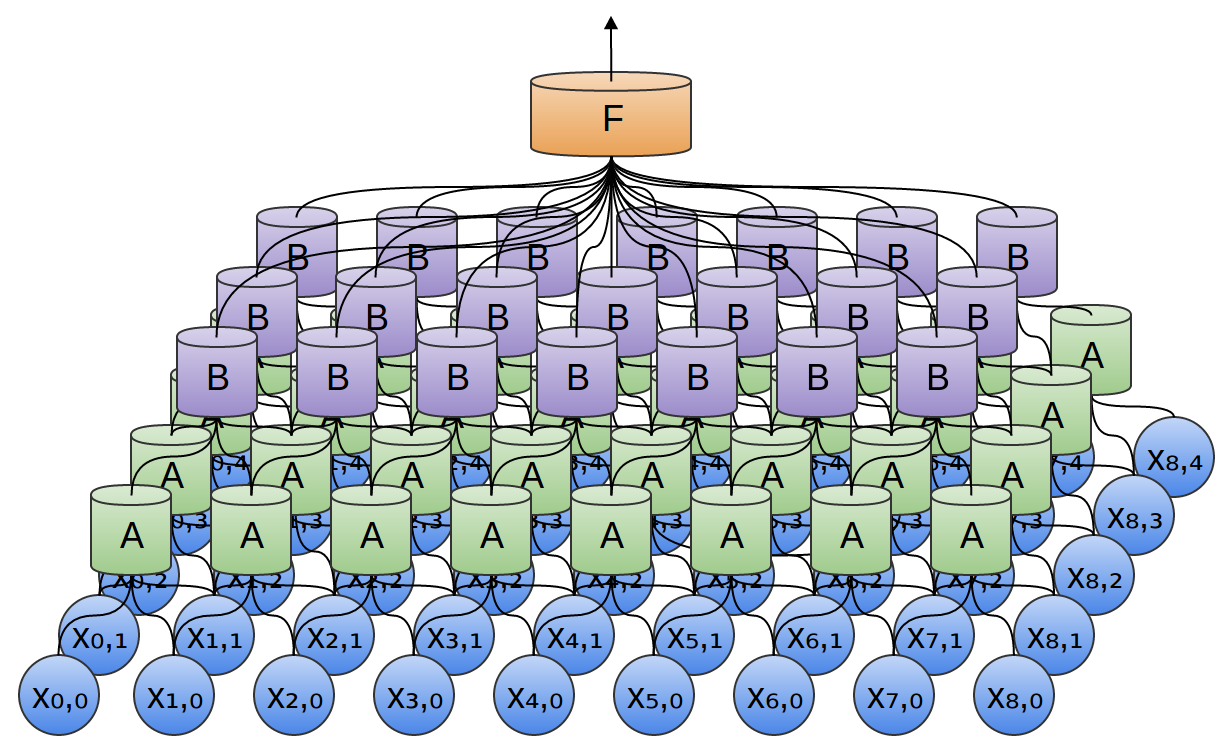

So far we have only seen fully connected neural networks, now let's move into more interesting ones that exploit spatial locality and nearness patterns inherent in certain classes of data, such as image data.

Convolutional Neural Networks

Watch now (3:55): https://www.youtube.com/watch?v=jajksuQW4mc

- Alex Krizhevsky, Ilya Sutskever, Geoffrey E. Hinton - https://papers.nips.cc/paper/4824-imagenet-classification-with-deep-convolutional-neural-networks.pdf

- Convolutional Neural networks blog - http://colah.github.io/posts/2014-07-Conv-Nets-Modular/

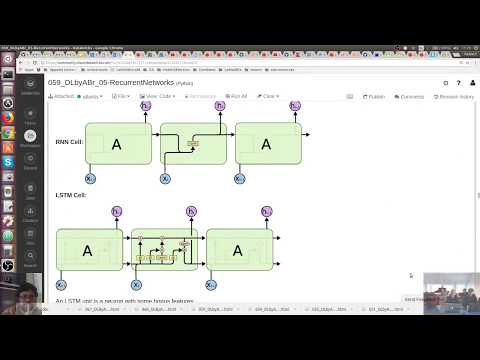

Recurrent neural network

http://colah.github.io/posts/2015-08-Understanding-LSTMs/


http://karpathy.github.io/2015/05/21/rnn-effectiveness/

Watch (3:55)

LSTM - Long short term memory

GRU - Gated recurrent unit

A more recent improvement over CNNs is the capsule network, proposed by Hinton. Check these out if you want to prepare for a future interview question in 2017/2018 or so.


This is a 2019-2021 augmentation and update of Adam Breindel's initial notebooks.


Introduction to Deep Learning

Theory and Practice with TensorFlow and Keras

https://arxiv.org/abs/1508.06576

By the end of this course, this paper and project will be accessible to you!

Schedule

- Intro

- TensorFlow Basics

- Artificial Neural Networks

- Multilayer ("Deep") Feed-Forward Networks

- Training Neural Nets

- Convolutional Networks

- Recurrent Nets, LSTM, GRU

- Generative Networks / Patterns

- Intro to Reinforcement Learning

- Operations in the Real World

Instructor: Adam Breindel

Contact: https://www.linkedin.com/in/adbreind - adbreind@gmail.com

- Almost 20 years building systems for startups and large enterprises

- 10 years teaching front- and back-end technology

Interesting projects...

- My first full-time job in tech involved streaming neural net fraud scoring (debit cards)

- Realtime & offline analytics for banking

- Music synchronization and licensing for networked jukeboxes

Industries

- Finance / Insurance, Travel, Media / Entertainment, Government

Class Goals

- Understand deep learning!

- Acquire an intuition and feeling for how and why and when it works, so you can use it!

- No magic! (or at least very little magic)

- We don't want to have a workshop where we install and demo some magical, fairly complicated thing, and we watch it do something awesome, and handwave, and go home

- That's great for generating excitement, but leaves

- Theoretical mysteries -- what's going on? do I need a Ph.D. in Math or Statistics to do this?

- Practical problems -- I have 10 lines of code but they never run because my tensor is the wrong shape!


- We'll focus on TensorFlow and Keras

- But 95% should be knowledge you can use with other frameworks too: Intel BigDL, Baidu PaddlePaddle, NVIDIA Digits, MXNet, etc.

Deep Learning is About Machines Finding Patterns and Solving Problems

So let's start by diving right in and discussing an interesting problem:

MNIST Digits Dataset

Modified National Institute of Standards and Technology

Called the "Drosophila" of Machine Learning

Likely the most common single dataset out there in deep learning, just complex enough to be interesting and useful for benchmarks.

"If your code works on MNIST, that doesn't mean it will work everywhere; but if it doesn't work on MNIST, it probably won't work anywhere" :)

What is the goal?

Convert an image of a handwritten character into the correct classification (i.e., which character is it?)

This is nearly trivial for a human to do! Most toddlers can do this with near 100% accuracy, even though they may not be able to count beyond 10 or perform addition.

Traditionally this had been quite a hard task for a computer to do. 99% accuracy was not achieved until ~1998. Consistent, easy success at that level did not come until 2003 or so.

Let's describe the specific problem in a little more detail

-

Each image is a 28x28 pixel image

- originally monochrome; smoothed to gray; typically inverted, so that "blank" pixels are black (zeros)

-

So the predictors are 784 (28 * 28 = 784) values, ranging from 0 (black / no ink when inverted) to 255 (white / full ink when inverted)

-

The response -- what we're trying to predict -- is the number that the image represents

- the response is a value from 0 to 9

- since there are a small number of discrete catagories for the responses, this is a classification problem, not a regression problem

- there are 10 classes

-

We have, for each data record, predictors and a response to train against, so this is a supervised learning task

-

The dataset happens to come partitioned into a training set (60,000 records) and a test set (10,000 records)

- We'll "hold out" the test set and not train on it

-

Once our model is trained, we'll use it to predict (or perform inference) on the test records, and see how well our trained model performs on unseen test data

- We might want to further split the training set into a validation set or even several K-fold partitions to evaluate as we go

-

As humans, we'll probably measure our success by using accuracy as a metric: What fraction of the examples are correctly classified by the model?

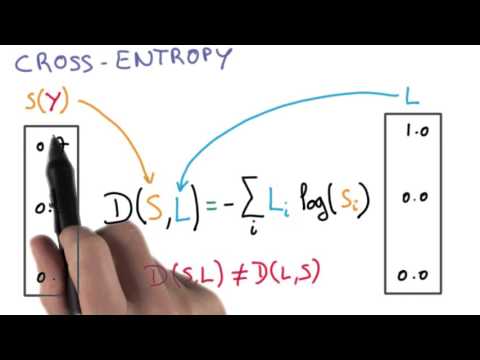

- However, for training, it makes more sense to use cross-entropy to measure, correct, and improve the model. Cross-entropy has the advantage that instead of just counting "right or wrong" answers, it provides a continuous measure of "how wrong (or right)" an answer is. For example, if the correct answer is "1" then the answer "probably a 7, maybe a 1" is wrong, but less wrong than the answer "definitely a 7"

-

Do we need to pre-process the data? Depending on the model we use, we may want to ...

- Scale the values, so that they range from 0 to 1, or so that they measure in standard deviations

- Center the values so that 0 corresponds to the (raw central) value 127.5, or so that 0 corresponds to the mean
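Two points from the list above can be made concrete with a few lines of NumPy. This is a sketch under stated assumptions: the probability vectors are invented to mimic the "probably a 7, maybe a 1" and "definitely a 7" answers, and the three pixel values are arbitrary.

```python
import numpy as np

def cross_entropy(predicted_probs, true_class):
    """Cross-entropy for one example: -log of the probability assigned to the true class."""
    return -np.log(predicted_probs[true_class])

true_digit = 1

# Hypothetical model outputs over the 10 digit classes (each sums to 1).
probably_7_maybe_1 = np.full(10, 0.0125)
probably_7_maybe_1[7] = 0.6
probably_7_maybe_1[1] = 0.3

definitely_7 = np.full(10, 0.001)
definitely_7[7] = 0.991

# Accuracy counts both answers as equally wrong: the top class is 7 in both.
loss_hedged = cross_entropy(probably_7_maybe_1, true_digit)
loss_confident = cross_entropy(definitely_7, true_digit)
print(loss_hedged, loss_confident)   # the hedged answer has the smaller loss

# Pre-processing raw pixel intensities in the range 0..255.
pixels = np.array([0.0, 51.0, 255.0])
scaled = pixels / 255.0       # values now range from 0 to 1
centered = pixels - 127.5     # 0 now corresponds to the raw value 127.5
```

The cross-entropy of the hedged answer is much smaller than that of the confident wrong answer, which is the continuous "how wrong" signal that training can use.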

What might be characteristics of a good solution?

- As always, we need to balance variance (malleability of the model in the face of variation in the sample training data) and bias (strength/inflexibility of assumptions built in to the modeling method)

- We want a model with a good amount of capacity to represent different patterns in the training data (e.g., different handwriting styles) while not overfitting and learning too much about specific training instances

- We'd like a probabilistic model that tells us the most likely classes given the data and assumptions (for example, in the U.S., a one is often written with a vertical stroke, whereas in Germany it's usually written with 2 strokes, closer to a U.S. 7)

Going a little further,

- an ideal modeling approach might perform feature selection on its own deciding which pixels and combinations of pixels are most informative

- in order to be robust to varying data, a good model might learn hierarchical or abstract features like lines, angles, curves and loops that we as humans use to teach, learn, and distinguish Arabic numerals from each other

- it would be nice to add some basic domain knowledge like these features aren't arbitrary slots in a vector, but are parts of a 2-dimensional image where the contents are roughly axis-aligned and translation invariant -- after all, a "7" is still a "7" even if we move it around a bit on the page

Lastly, it would be great to have a framework that is flexible enough to adapt to similar tasks -- say, Greek, Cyrillic, or Chinese handwritten characters, not just digits.

Let's compare some modeling techniques...

Decision Tree

👍 High capacity

👎 Can be hard to generalize; prone to overfit; fragile for this kind of task

👎 Dedicated training algorithm (traditional approach is not directly a gradient-descent optimization problem)

👍 Performs feature selection / PCA implicitly

(Multiclass) Logistic Regression

👎 Low capacity/variance -> High bias

👍 Less overfitting

👎 Less fitting (accuracy)

Kernelized Support Vector Machine (e.g., RBF)

👍 Robust capacity, good bias-variance balance

👎 Expensive to scale in terms of features or instances

👍 Amenable to "online" learning (http://www.isn.ucsd.edu/papers/nips00_inc.pdf)

👍 State of the art for MNIST prior to the widespread use of deep learning!

Deep Learning

It turns out that a model called a convolutional neural network meets all of our goals and can be trained to human-level accuracy on this task in just a few minutes. We will solve MNIST with this sort of model today.

But we will build up to it starting with the simplest neural model.

Mathematical statistical caveat: Note that ML algorithmic performance measures such as 99% or 99.99%, as well as their justification by comparison to "typical" human performance measures from a randomised surveyable population, often make significant mathematical assumptions that may be silently violated. Concrete examples include the size and nature of the training data and their generalizability to live decision problems based on empirical risk minimisation principles like cross-validation. These assumptions are usually harmless and can be time-saving for most problems, like recommending songs in Spotify or shoes in Amazon. It is important to bear in mind that there are problems that should guarantee worst-case scenario avoidance, like accidents with self-driving cars or a global extinction event caused by mathematically ambiguous assumptions in the learning algorithms of, say, near-Earth-asteroid-mining artificially intelligent robots!

Installations (PyPI rules)

- tensorflow==1.13.1 (worked also on 1.3.0)

- keras==2.2.4 (worked also on 2.0.6)

- dist-keras==0.2.1 (worked also on 0.2.0) Python 3.0 compatible. So make sure cluster has Python 3.0


Artificial Neural Network - Perceptron

The field of artificial neural networks started out with an electromechanical binary unit called a perceptron.

The perceptron took a weighted set of input signals and chose an output state (on/off or high/low) based on a threshold.

(raaz) Thus, the perceptron is defined by:

\[ f(1, x_1, x_2, \ldots, x_n \,;\, w_0, w_1, w_2, \ldots, w_n) = \begin{cases} 1 & \text{if} \quad \sum_{i=0}^n w_i x_i > 0 \\ 0 & \text{otherwise} \end{cases} \] where \(x_0 = 1\), and implementable with the following arithmetical and logical unit (ALU) operations in a machine:

- n inputs from one \(n\)-dimensional data point: \((x_1, x_2, \ldots, x_n) \, \in \, \mathbb{R}^n\)

- arithmetic operations

- n+1 multiplications

- n additions

- boolean operations

- one if-then on an inequality

- one output \(o \in \{0,1\}\), i.e., \(o\) belongs to the set containing \(0\) and \(1\)

- n+1 parameters of interest

This is just the dot product of \(n+1\) known inputs and \(n+1\) unknown parameters that can be estimated. Setting this dot product to zero defines a hyperplane that partitions \(\mathbb{R}^{n+1}\), the real Euclidean space, into two parts labelled by the outputs \(0\) and \(1\).
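The definition above translates almost line for line into code. The following is a minimal sketch; the function name `perceptron` and the weights `w_and` (hand-picked so that the unit computes a logical AND of two binary inputs) are illustrative assumptions.

```python
import numpy as np

def perceptron(x, w):
    """Return 1 if w_0*1 + w_1*x_1 + ... + w_n*x_n > 0, and 0 otherwise.

    x holds the n inputs; w holds the n+1 parameters, with w[0] the
    weight on the constant input 1.
    """
    x_with_one = np.concatenate(([1.0], x))   # prepend the constant input
    return 1 if np.dot(w, x_with_one) > 0 else 0

# Hypothetical weights: the hyperplane -1.5 + x_1 + x_2 = 0 separates (1, 1) from the rest.
w_and = np.array([-1.5, 1.0, 1.0])
outputs = [perceptron(np.array([a, b], dtype=float), w_and)
           for a in (0, 1) for b in (0, 1)]
print(outputs)
```

Only the input (1, 1) lies on the positive side of the hyperplane, so it is the only point labelled 1.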

The machine learning problem here is to find estimates of the parameters, \((\hat{w}_0,\hat{w}_1,\hat{w}_2,\ldots, \hat{w}_n) \in \mathbb{R}^{n+1}\), in some statistically meaningful manner for a prediction task, using training data given by, say, \(k\) labelled points for which you know both the input and the output: \[ \left( \left( (1, x_1^{(1)}, x_2^{(1)}, \ldots, x_n^{(1)}), \, o^{(1)} \right), \, \left( (1, x_1^{(2)}, x_2^{(2)}, \ldots, x_n^{(2)}), \, o^{(2)} \right), \, \ldots, \, \left( (1, x_1^{(k)}, x_2^{(k)}, \ldots, x_n^{(k)}), \, o^{(k)} \right) \right) \, \in \, (\mathbb{R}^{n+1} \times \{0,1\})^k \]

Succinctly, we are after a random mapping, denoted below by \(\mapsto_{\rightsquigarrow}\), called the estimator: \[
(\mathbb{R}^{n+1} \times \{0,1\})^k \mapsto_{\rightsquigarrow} \, \left( \, \mathtt{model}( (1,x_1,x_2,\ldots,x_n) \,;\, (\hat{w}_0,\hat{w}_1,\hat{w}_2,\ldots, \hat{w}_n)) : \mathbb{R}^{n+1} \to \{0,1\} \, \right)
\] which takes a random labelled dataset (to understand random here think of two scientists doing independent experiments to get their own training datasets) of size \(k\) and returns a model. These mathematical notions correspond exactly to the estimator and model (which is a transformer) in the language of Apache Spark's Machine Learning Pipelines we have seen before.

We can use this transformer for prediction of unlabelled data where we only observe the input and want to know the output under some reasonable assumptions.

Of course we want to be able to generalize so we don't overfit to the training data using some empirical risk minimisation rule such as cross-validation. Again, we have seen these in Apache Spark for other ML methods like linear regression and decision trees.

If the output isn't right, we can adjust the weights, threshold, or bias (\(w_0\) above, the weight on the constant input \(1\)).

The model was inspired by discoveries about the neurons of animals, so hopes were quite high that it could lead to a sophisticated machine. This model can be extended by adding multiple neurons in parallel. And we can use linear output instead of a threshold if we like for the output.

If we were to do so, the output would look like \({x \cdot w} + w_0\) (this is where the vector multiplication and, eventually, matrix multiplication, comes in)

When we look at the math this way, we see that despite this being an interesting model, it's really just a fancy linear calculation.
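To see why this is "just a fancy linear calculation", the sketch below stacks two layers with linear outputs and checks that they collapse into a single linear map. The layer sizes, the random weights and the names `W1`, `b1`, `W2`, `b2` are arbitrary choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

x = rng.normal(size=(5, 3))                                 # 5 data points with 3 inputs each
W1, b1 = rng.normal(size=(3, 4)), rng.normal(size=4)        # first layer: 4 linear neurons
W2, b2 = rng.normal(size=(4, 1)), rng.normal(size=1)        # second layer: 1 linear output

# Two linear layers applied one after the other ...
two_layer = (x @ W1 + b1) @ W2 + b2

# ... equal one linear layer with combined weights and bias.
W = W1 @ W2
b = b1 @ W2 + b2
one_layer = x @ W + b

print(np.allclose(two_layer, one_layer))
```

However many linear layers are stacked, the result is still a linear function of the inputs, which is why a nonlinearity is needed before depth adds any modelling power.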

And, in fact, the proof that this model -- being linear -- could not solve any problems whose solution was nonlinear ... led to the first of several "AI / neural net winters" when the excitement was quickly replaced by disappointment, and most research was abandoned.

Linear Perceptron

We'll get to the non-linear part, but the linear perceptron model is a great way to warm up and bridge the gap from traditional linear regression to the neural-net flavor.

Let's look at a problem -- the diamonds dataset from R -- and analyze it using two traditional methods in Scikit-Learn, and then we'll start attacking it with neural networks!

import pandas as pd

import numpy as np

from sklearn.model_selection import train_test_split

from sklearn.tree import DecisionTreeRegressor

from sklearn.metrics import mean_squared_error

input_file = "/dbfs/databricks-datasets/Rdatasets/data-001/csv/ggplot2/diamonds.csv"

df = pd.read_csv(input_file, header = 0)

import IPython.display as disp

pd.set_option('display.width', 200)

disp.display(df[:10])

Unnamed: 0 carat cut color clarity ... table price x y z

0 1 0.23 Ideal E SI2 ... 55.0 326 3.95 3.98 2.43

1 2 0.21 Premium E SI1 ... 61.0 326 3.89 3.84 2.31

2 3 0.23 Good E VS1 ... 65.0 327 4.05 4.07 2.31

3 4 0.29 Premium I VS2 ... 58.0 334 4.20 4.23 2.63

4 5 0.31 Good J SI2 ... 58.0 335 4.34 4.35 2.75

5 6 0.24 Very Good J VVS2 ... 57.0 336 3.94 3.96 2.48

6 7 0.24 Very Good I VVS1 ... 57.0 336 3.95 3.98 2.47

7 8 0.26 Very Good H SI1 ... 55.0 337 4.07 4.11 2.53

8 9 0.22 Fair E VS2 ... 61.0 337 3.87 3.78 2.49

9 10 0.23 Very Good H VS1 ... 61.0 338 4.00 4.05 2.39

[10 rows x 11 columns]

df2 = df.drop(df.columns[0], axis=1)

disp.display(df2[:3])

carat cut color clarity depth table price x y z

0 0.23 Ideal E SI2 61.5 55.0 326 3.95 3.98 2.43

1 0.21 Premium E SI1 59.8 61.0 326 3.89 3.84 2.31

2 0.23 Good E VS1 56.9 65.0 327 4.05 4.07 2.31

df3 = pd.get_dummies(df2) # this gives a one-hot encoding of categorical variables

disp.display(df3.iloc[:3, 7:18])

cut_Fair cut_Good cut_Ideal ... color_G color_H color_I

0 0 0 1 ... 0 0 0

1 0 0 0 ... 0 0 0

2 0 1 0 ... 0 0 0

[3 rows x 11 columns]

# pre-process to get y

y = df3.iloc[:,3:4].values.flatten()

y.flatten()

# preprocess and reshape X as a matrix

X = df3.drop(df3.columns[3], axis=1).values

np.shape(X)

# break the dataset into training and test set with a 75% and 25% split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=42)

# Define a decision tree model with max depth 10

dt = DecisionTreeRegressor(random_state=0, max_depth=10)

# fit the decision tree to the training data to get a fitted model

model = dt.fit(X_train, y_train)

# predict y (the price) for the test data from its features X using the fitted model

y_pred = model.predict(X_test)

# print the RMSE performance measure of the fit by comparing the predicted versus the observed values of y

print("RMSE %f" % np.sqrt(mean_squared_error(y_test, y_pred)) )

RMSE 727.042036

from sklearn import linear_model

# Do the same with linear regression and note a worse RMSE

lr = linear_model.LinearRegression()

lr_model = lr.fit(X_train, y_train)  # named lr_model to avoid shadowing the imported linear_model module

y_pred = lr_model.predict(X_test)

print("RMSE %f" % np.sqrt(mean_squared_error(y_test, y_pred)) )

RMSE 1124.086095

Now that we have a baseline, let's build a neural network -- linear at first -- and go further.

Neural Network with Keras

Keras is a High-Level API for Neural Networks and Deep Learning

"Being able to go from idea to result with the least possible delay is key to doing good research."

Maintained by Francois Chollet at Google, it provides

- High level APIs

- Pluggable backends for Theano, TensorFlow, CNTK, MXNet

- CPU/GPU support

- The now-officially-endorsed high-level wrapper for TensorFlow; a version ships in TF

- Model persistence and other niceties

- JavaScript, iOS, etc. deployment

- Interop with further frameworks, like DeepLearning4J, Spark DL Pipelines ...

Well, with all this, why would you ever not use Keras?

As an API/Facade, Keras doesn't directly expose all of the internals you might need for something custom and low-level ... so you might need to implement at a lower level first, and then perhaps wrap it to make it easily usable in Keras.

Mr. Chollet compiles stats (roughly quarterly) on "[t]he state of the deep learning landscape: GitHub activity of major libraries over the past quarter (tickets, forks, and contributors)."

(October 2017: https://twitter.com/fchollet/status/915366704401719296; https://twitter.com/fchollet/status/915626952408436736)

GitHub | Research |

Keras has wide adoption in industry

We'll build a "Dense Feed-Forward Shallow" Network:

(the number of units in the following diagram does not exactly match ours)

Grab a Keras API cheat sheet from https://s3.amazonaws.com/assets.datacamp.com/blogassets/KerasCheatSheetPython.pdf

from keras.models import Sequential

from keras.layers import Dense

# we are going to add layers sequentially one after the other (feed-forward) to our neural network model

model = Sequential()

# the first layer has 30 nodes (or neurons) with input dimension 26 for our diamonds data

# we will use a Normal (Gaussian) distribution to initialise the weights we want to estimate

# our activation function is linear (to mimic linear regression)

model.add(Dense(30, input_dim=26, kernel_initializer='normal', activation='linear'))

# the next layer is for the response y and has only one node

model.add(Dense(1, kernel_initializer='normal', activation='linear'))

# compile the model with other specifications for loss and type of gradient descent optimisation routine

model.compile(loss='mean_squared_error', optimizer='adam', metrics=['mean_squared_error'])

# fit the model to the training data using mini-batch gradient-based optimisation (the Adam optimizer chosen above) with a batch size of 200 and 10% of data held out for validation

history = model.fit(X_train, y_train, epochs=10, batch_size=200, validation_split=0.1)

scores = model.evaluate(X_test, y_test)

print()

print("test set RMSE: %f" % np.sqrt(scores[1]))

Using TensorFlow backend.

WARNING:tensorflow:From /databricks/python/lib/python3.7/site-packages/tensorflow/python/framework/op_def_library.py:263: colocate_with (from tensorflow.python.framework.ops) is deprecated and will be removed in a future version.

Instructions for updating:

Colocations handled automatically by placer.

WARNING:tensorflow:From /databricks/python/lib/python3.7/site-packages/tensorflow/python/ops/math_ops.py:3066: to_int32 (from tensorflow.python.ops.math_ops) is deprecated and will be removed in a future version.

Instructions for updating:

Use tf.cast instead.

Train on 36409 samples, validate on 4046 samples

Epoch 1/10

200/36409 [..............................] - ETA: 30s - loss: 24515974.0000 - mean_squared_error: 24515974.0000

8600/36409 [======>.......................] - ETA: 0s - loss: 30972551.0233 - mean_squared_error: 30972551.0233

16400/36409 [============>.................] - ETA: 0s - loss: 31092082.1707 - mean_squared_error: 31092082.1707

24400/36409 [===================>..........] - ETA: 0s - loss: 30821887.3934 - mean_squared_error: 30821887.3934

33200/36409 [==========================>...] - ETA: 0s - loss: 30772256.5783 - mean_squared_error: 30772256.5783

36409/36409 [==============================] - 0s 12us/step - loss: 30662987.2877 - mean_squared_error: 30662987.2877 - val_loss: 30057002.5457 - val_mean_squared_error: 30057002.5457

Epoch 2/10

200/36409 [..............................] - ETA: 0s - loss: 27102510.0000 - mean_squared_error: 27102510.0000

9000/36409 [======>.......................] - ETA: 0s - loss: 28506919.5556 - mean_squared_error: 28506919.5556

17600/36409 [=============>................] - ETA: 0s - loss: 27940962.7727 - mean_squared_error: 27940962.7727

26200/36409 [====================>.........] - ETA: 0s - loss: 27211811.0076 - mean_squared_error: 27211811.0076

34800/36409 [===========================>..] - ETA: 0s - loss: 26426392.1034 - mean_squared_error: 26426392.1034

36409/36409 [==============================] - 0s 6us/step - loss: 26167957.1320 - mean_squared_error: 26167957.1320 - val_loss: 23785895.3554 - val_mean_squared_error: 23785895.3554

Epoch 3/10

200/36409 [..............................] - ETA: 0s - loss: 17365908.0000 - mean_squared_error: 17365908.0000

7200/36409 [====>.........................] - ETA: 0s - loss: 22444847.5556 - mean_squared_error: 22444847.5556

16000/36409 [============>.................] - ETA: 0s - loss: 21373980.3000 - mean_squared_error: 21373980.3000

24000/36409 [==================>...........] - ETA: 0s - loss: 21005080.0500 - mean_squared_error: 21005080.0500

31800/36409 [=========================>....] - ETA: 0s - loss: 20236407.7170 - mean_squared_error: 20236407.7170

36409/36409 [==============================] - 0s 7us/step - loss: 20020372.1159 - mean_squared_error: 20020372.1159 - val_loss: 18351806.8710 - val_mean_squared_error: 18351806.8710

Epoch 4/10

200/36409 [..............................] - ETA: 0s - loss: 17831442.0000 - mean_squared_error: 17831442.0000

8400/36409 [=====>........................] - ETA: 0s - loss: 17513972.2857 - mean_squared_error: 17513972.2857

17000/36409 [=============>................] - ETA: 0s - loss: 16829699.6941 - mean_squared_error: 16829699.6941

25600/36409 [====================>.........] - ETA: 0s - loss: 16673756.4375 - mean_squared_error: 16673756.4375

33800/36409 [==========================>...] - ETA: 0s - loss: 16443651.8225 - mean_squared_error: 16443651.8225

36409/36409 [==============================] - 0s 6us/step - loss: 16317392.6930 - mean_squared_error: 16317392.6930 - val_loss: 16164358.2887 - val_mean_squared_error: 16164358.2887

Epoch 5/10

200/36409 [..............................] - ETA: 0s - loss: 20413018.0000 - mean_squared_error: 20413018.0000

7400/36409 [=====>........................] - ETA: 0s - loss: 15570987.0000 - mean_squared_error: 15570987.0000

14800/36409 [===========>..................] - ETA: 0s - loss: 15013196.5405 - mean_squared_error: 15013196.5405

23400/36409 [==================>...........] - ETA: 0s - loss: 15246935.8034 - mean_squared_error: 15246935.8034

32000/36409 [=========================>....] - ETA: 0s - loss: 15250803.7375 - mean_squared_error: 15250803.7375

36409/36409 [==============================] - 0s 7us/step - loss: 15255931.8414 - mean_squared_error: 15255931.8414 - val_loss: 15730755.8908 - val_mean_squared_error: 15730755.8908

Epoch 6/10

200/36409 [..............................] - ETA: 0s - loss: 18564152.0000 - mean_squared_error: 18564152.0000

7600/36409 [=====>........................] - ETA: 0s - loss: 15086204.8421 - mean_squared_error: 15086204.8421

16200/36409 [============>.................] - ETA: 0s - loss: 15104538.2593 - mean_squared_error: 15104538.2593

24600/36409 [===================>..........] - ETA: 0s - loss: 15172120.3008 - mean_squared_error: 15172120.3008

32600/36409 [=========================>....] - ETA: 0s - loss: 15123702.1043 - mean_squared_error: 15123702.1043

36409/36409 [==============================] - 0s 7us/step - loss: 15066138.8398 - mean_squared_error: 15066138.8398 - val_loss: 15621212.2521 - val_mean_squared_error: 15621212.2521

Epoch 7/10

200/36409 [..............................] - ETA: 0s - loss: 12937932.0000 - mean_squared_error: 12937932.0000

8400/36409 [=====>........................] - ETA: 0s - loss: 15215220.5238 - mean_squared_error: 15215220.5238

16400/36409 [============>.................] - ETA: 0s - loss: 15116822.9268 - mean_squared_error: 15116822.9268

24600/36409 [===================>..........] - ETA: 0s - loss: 14993875.2439 - mean_squared_error: 14993875.2439

32600/36409 [=========================>....] - ETA: 0s - loss: 14956622.0184 - mean_squared_error: 14956622.0184

36409/36409 [==============================] - 0s 7us/step - loss: 14981999.6368 - mean_squared_error: 14981999.6368 - val_loss: 15533945.2353 - val_mean_squared_error: 15533945.2353

Epoch 8/10

200/36409 [..............................] - ETA: 0s - loss: 17393156.0000 - mean_squared_error: 17393156.0000

7800/36409 [=====>........................] - ETA: 0s - loss: 15290136.5128 - mean_squared_error: 15290136.5128

16200/36409 [============>.................] - ETA: 0s - loss: 15074332.1235 - mean_squared_error: 15074332.1235

24600/36409 [===================>..........] - ETA: 0s - loss: 14987445.0488 - mean_squared_error: 14987445.0488

33000/36409 [==========================>...] - ETA: 0s - loss: 14853941.5394 - mean_squared_error: 14853941.5394

36409/36409 [==============================] - 0s 7us/step - loss: 14896132.4141 - mean_squared_error: 14896132.4141 - val_loss: 15441119.5566 - val_mean_squared_error: 15441119.5566

Epoch 9/10

200/36409 [..............................] - ETA: 0s - loss: 12659630.0000 - mean_squared_error: 12659630.0000

8600/36409 [======>.......................] - ETA: 0s - loss: 14682766.8605 - mean_squared_error: 14682766.8605

17000/36409 [=============>................] - ETA: 0s - loss: 14851612.5882 - mean_squared_error: 14851612.5882

25600/36409 [====================>.........] - ETA: 0s - loss: 14755020.0234 - mean_squared_error: 14755020.0234

34200/36409 [===========================>..] - ETA: 0s - loss: 14854599.4737 - mean_squared_error: 14854599.4737

36409/36409 [==============================] - 0s 6us/step - loss: 14802259.4853 - mean_squared_error: 14802259.4853 - val_loss: 15339340.7177 - val_mean_squared_error: 15339340.7177

Epoch 10/10

200/36409 [..............................] - ETA: 0s - loss: 14473119.0000 - mean_squared_error: 14473119.0000

8600/36409 [======>.......................] - ETA: 0s - loss: 14292346.6512 - mean_squared_error: 14292346.6512

16200/36409 [============>.................] - ETA: 0s - loss: 14621621.4938 - mean_squared_error: 14621621.4938

24600/36409 [===================>..........] - ETA: 0s - loss: 14648206.4228 - mean_squared_error: 14648206.4228

33200/36409 [==========================>...] - ETA: 0s - loss: 14746160.4398 - mean_squared_error: 14746160.4398

36409/36409 [==============================] - 0s 7us/step - loss: 14699508.8054 - mean_squared_error: 14699508.8054 - val_loss: 15226518.1542 - val_mean_squared_error: 15226518.1542

32/13485 [..............................] - ETA: 0s

4096/13485 [========>.....................] - ETA: 0s

7040/13485 [==============>...............] - ETA: 0s

9920/13485 [=====================>........] - ETA: 0s

13485/13485 [==============================] - 0s 15us/step

test set RMSE: 3800.812819

model.summary() # do you understand why the number of parameters in layer 1 is 810? 26*30+30=810

Model: "sequential_1"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense_1 (Dense) (None, 30) 810

_________________________________________________________________

dense_2 (Dense) (None, 1) 31

=================================================================

Total params: 841

Trainable params: 841

Non-trainable params: 0

_________________________________________________________________
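The parameter counts in the summary can be checked by hand: a fully connected layer has one weight per input-output pair plus one bias per output unit. A minimal sketch in plain Python follows; the helper name `dense_param_count` is ours for illustration and is not part of Keras.

```python
def dense_param_count(n_inputs, n_units):
    """Weights (n_inputs * n_units) plus one bias per unit."""
    return n_inputs * n_units + n_units

hidden = dense_param_count(26, 30)  # 26 input features -> 30 hidden units
output = dense_param_count(30, 1)   # 30 hidden units -> 1 output unit
total = hidden + output

print(hidden, output, total)
```

The three numbers should match the Param # column and the "Total params" line of the summary.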

Notes:

- We didn't have to explicitly write the "input" layer, courtesy of the Keras API. We just said input_dim=26 on the first (and only) hidden layer.

- kernel_initializer='normal' is a simple (though not always optimal) weight initialization

- Epoch: 1 pass over all of the training data

- Batch: Records processed together in a single training pass
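To make the epoch and batch vocabulary concrete: the number of weight updates in one epoch is the number of training records divided by the batch size, rounded up, since the last batch may be smaller. A small sketch, assuming the 36409 training records reported in the logs and an illustrative batch size of 100; the helper name `batches_per_epoch` is hypothetical.

```python
import math

def batches_per_epoch(n_samples, batch_size):
    """Number of batches needed to see every record once."""
    return math.ceil(n_samples / batch_size)

n_train = 36409      # training records, as reported in the training log
batch_size = 100     # illustrative choice
n_epochs = 10

steps = batches_per_epoch(n_train, batch_size)
last_batch = n_train - (steps - 1) * batch_size  # size of the final, partial batch
total_updates = steps * n_epochs

print(steps, last_batch, total_updates)
```

Smaller batches mean more updates per epoch, which is one reason the same number of epochs can give different results at different batch sizes.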

How does our RMSE compare to the std dev of the response?

y.std()
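Why compare against the standard deviation? A model that always predicts the mean of the response has an RMSE equal to the response's population standard deviation, so y.std() is the score a useful model has to beat. A sketch on synthetic data follows; the values are made up for illustration and are not the diamonds data.

```python
import numpy as np

rng = np.random.default_rng(0)
# Synthetic response, for illustration only
y_demo = rng.normal(loc=4000.0, scale=3000.0, size=1000)

# Baseline "model": always predict the mean of the response
baseline_pred = np.full_like(y_demo, y_demo.mean())
baseline_rmse = np.sqrt(np.mean((y_demo - baseline_pred) ** 2))

print(baseline_rmse, y_demo.std())
```

The two printed numbers agree because NumPy's std uses ddof=0 by default. A pandas Series uses ddof=1, which gives a slightly larger value.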

Let's look at the error ...

import matplotlib.pyplot as plt

fig, ax = plt.subplots()

plt.plot(history.history['loss'])

plt.plot(history.history['val_loss'])

plt.title('model loss')

plt.ylabel('loss')

plt.xlabel('epoch')

plt.legend(['train', 'val'], loc='upper left')

display(fig)
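Because the loss is mean squared error, the curve is in squared units of the response. Taking the square root puts it on the same scale as the reported RMSE. A sketch using the per-epoch validation losses copied from the 10-epoch log; the list here stands in for history.history['val_loss'].

```python
import numpy as np

# val_loss values copied from the 10-epoch training log
val_loss = [
    30057002.5457, 23785895.3554, 18351806.8710, 16164358.2887,
    15730755.8908, 15621212.2521, 15533945.2353, 15441119.5566,
    15339340.7177, 15226518.1542,
]

val_rmse = np.sqrt(val_loss)                 # same units as the response
best_epoch = int(np.argmin(val_loss)) + 1    # epochs are 1-indexed in the log

print(best_epoch, val_rmse[-1])
```

Validation loss is still falling at the last epoch, so the best epoch is the final one. That suggests the model has not finished converging after 10 epochs.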

Let's set up a "long-running" training. This will take a few minutes to converge to the same performance we got more or less instantly with our sklearn linear regression :)

While it's running, we can talk about the training.

from keras.models import Sequential

from keras.layers import Dense

import numpy as np

import pandas as pd

input_file = "/dbfs/databricks-datasets/Rdatasets/data-001/csv/ggplot2/diamonds.csv"

df = pd.read_csv(input_file, header = 0)

df.drop(df.columns[0], axis=1, inplace=True)

df = pd.get_dummies(df, prefix=['cut_', 'color_', 'clarity_'])

y = df.iloc[:,3:4].values.flatten() # response (column index 3) as a 1-D array

X = df.drop(df.columns[3], axis=1).values

np.shape(X)

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=42)

model = Sequential()

model.add(Dense(30, input_dim=26, kernel_initializer='normal', activation='linear'))

model.add(Dense(1, kernel_initializer='normal', activation='linear'))

model.compile(loss='mean_squared_error', optimizer='adam', metrics=['mean_squared_error'])

history = model.fit(X_train, y_train, epochs=250, batch_size=100, validation_split=0.1, verbose=2)

scores = model.evaluate(X_test, y_test)

print("\nroot %s: %f" % (model.metrics_names[1], np.sqrt(scores[1])))

Train on 36409 samples, validate on 4046 samples

Epoch 1/250

- 1s - loss: 28387336.4384 - mean_squared_error: 28387336.4384 - val_loss: 23739923.0816 - val_mean_squared_error: 23739923.0816

Epoch 2/250

- 0s - loss: 18190573.9659 - mean_squared_error: 18190573.9659 - val_loss: 16213271.2121 - val_mean_squared_error: 16213271.2121

Epoch 3/250

- 0s - loss: 15172630.1573 - mean_squared_error: 15172630.1573 - val_loss: 15625113.6841 - val_mean_squared_error: 15625113.6841

Epoch 4/250

- 0s - loss: 14945745.1874 - mean_squared_error: 14945745.1874 - val_loss: 15453493.5042 - val_mean_squared_error: 15453493.5042

Epoch 5/250

- 0s - loss: 14767640.2591 - mean_squared_error: 14767640.2591 - val_loss: 15254403.9486 - val_mean_squared_error: 15254403.9486

Epoch 6/250

- 0s - loss: 14557887.6100 - mean_squared_error: 14557887.6100 - val_loss: 15019167.1374 - val_mean_squared_error: 15019167.1374

Epoch 7/250

- 0s - loss: 14309593.5448 - mean_squared_error: 14309593.5448 - val_loss: 14737183.8052 - val_mean_squared_error: 14737183.8052

Epoch 8/250

- 0s - loss: 14013285.0941 - mean_squared_error: 14013285.0941 - val_loss: 14407560.3356 - val_mean_squared_error: 14407560.3356

Epoch 9/250

- 0s - loss: 13656069.2042 - mean_squared_error: 13656069.2042 - val_loss: 13997285.5230 - val_mean_squared_error: 13997285.5230

Epoch 10/250

- 0s - loss: 13216458.2848 - mean_squared_error: 13216458.2848 - val_loss: 13489102.3277 - val_mean_squared_error: 13489102.3277

Epoch 11/250

- 0s - loss: 12677035.7927 - mean_squared_error: 12677035.7927 - val_loss: 12879791.1409 - val_mean_squared_error: 12879791.1409

Epoch 12/250

- 0s - loss: 12026548.9956 - mean_squared_error: 12026548.9956 - val_loss: 12144681.5783 - val_mean_squared_error: 12144681.5783

Epoch 13/250

- 0s - loss: 11261201.3992 - mean_squared_error: 11261201.3992 - val_loss: 11298467.2511 - val_mean_squared_error: 11298467.2511

Epoch 14/250

- 0s - loss: 10394848.5657 - mean_squared_error: 10394848.5657 - val_loss: 10359981.5892 - val_mean_squared_error: 10359981.5892

Epoch 15/250

- 0s - loss: 9464690.1582 - mean_squared_error: 9464690.1582 - val_loss: 9377032.8176 - val_mean_squared_error: 9377032.8176

Epoch 16/250

- 0s - loss: 8522523.9198 - mean_squared_error: 8522523.9198 - val_loss: 8407744.1396 - val_mean_squared_error: 8407744.1396

Epoch 17/250

- 0s - loss: 7614509.1284 - mean_squared_error: 7614509.1284 - val_loss: 7483504.2244 - val_mean_squared_error: 7483504.2244

Epoch 18/250

- 0s - loss: 6773874.9790 - mean_squared_error: 6773874.9790 - val_loss: 6642935.4123 - val_mean_squared_error: 6642935.4123

Epoch 19/250

- 0s - loss: 6029308.8020 - mean_squared_error: 6029308.8020 - val_loss: 5907274.1873 - val_mean_squared_error: 5907274.1873

Epoch 20/250

- 0s - loss: 5389141.6630 - mean_squared_error: 5389141.6630 - val_loss: 5281166.3648 - val_mean_squared_error: 5281166.3648

Epoch 21/250

- 0s - loss: 4860038.6980 - mean_squared_error: 4860038.6980 - val_loss: 4764607.7313 - val_mean_squared_error: 4764607.7313

Epoch 22/250

- 0s - loss: 4431739.1137 - mean_squared_error: 4431739.1137 - val_loss: 4351510.2142 - val_mean_squared_error: 4351510.2142

Epoch 23/250

- 0s - loss: 4094045.5128 - mean_squared_error: 4094045.5128 - val_loss: 4021251.9317 - val_mean_squared_error: 4021251.9317

Epoch 24/250

- 0s - loss: 3829986.6989 - mean_squared_error: 3829986.6989 - val_loss: 3757295.1629 - val_mean_squared_error: 3757295.1629

Epoch 25/250

- 0s - loss: 3623823.6510 - mean_squared_error: 3623823.6510 - val_loss: 3552794.5538 - val_mean_squared_error: 3552794.5538

Epoch 26/250

- 0s - loss: 3465635.4962 - mean_squared_error: 3465635.4962 - val_loss: 3393235.2467 - val_mean_squared_error: 3393235.2467

Epoch 27/250

- 0s - loss: 3340038.8348 - mean_squared_error: 3340038.8348 - val_loss: 3269279.0370 - val_mean_squared_error: 3269279.0370

Epoch 28/250

- 0s - loss: 3236200.2134 - mean_squared_error: 3236200.2134 - val_loss: 3156617.4010 - val_mean_squared_error: 3156617.4010

Epoch 29/250

- 0s - loss: 3150650.9059 - mean_squared_error: 3150650.9059 - val_loss: 3065790.8180 - val_mean_squared_error: 3065790.8180

Epoch 30/250

- 0s - loss: 3075868.5099 - mean_squared_error: 3075868.5099 - val_loss: 2992714.7820 - val_mean_squared_error: 2992714.7820

Epoch 31/250

- 0s - loss: 3011537.2513 - mean_squared_error: 3011537.2513 - val_loss: 2916240.5889 - val_mean_squared_error: 2916240.5889

Epoch 32/250

- 0s - loss: 2950834.9374 - mean_squared_error: 2950834.9374 - val_loss: 2853476.3283 - val_mean_squared_error: 2853476.3283

Epoch 33/250

- 0s - loss: 2896146.2107 - mean_squared_error: 2896146.2107 - val_loss: 2797956.3931 - val_mean_squared_error: 2797956.3931

Epoch 34/250

- 0s - loss: 2845359.1844 - mean_squared_error: 2845359.1844 - val_loss: 2756268.6799 - val_mean_squared_error: 2756268.6799

Epoch 35/250

- 0s - loss: 2799651.4802 - mean_squared_error: 2799651.4802 - val_loss: 2696497.8046 - val_mean_squared_error: 2696497.8046

Epoch 36/250

- 0s - loss: 2756932.6045 - mean_squared_error: 2756932.6045 - val_loss: 2652229.5122 - val_mean_squared_error: 2652229.5122

Epoch 37/250

- 0s - loss: 2718146.0056 - mean_squared_error: 2718146.0056 - val_loss: 2610052.9367 - val_mean_squared_error: 2610052.9367

Epoch 38/250

- 0s - loss: 2681826.8783 - mean_squared_error: 2681826.8783 - val_loss: 2576333.5379 - val_mean_squared_error: 2576333.5379

Epoch 39/250

- 0s - loss: 2646768.1726 - mean_squared_error: 2646768.1726 - val_loss: 2535503.5141 - val_mean_squared_error: 2535503.5141

Epoch 40/250

- 0s - loss: 2614777.8154 - mean_squared_error: 2614777.8154 - val_loss: 2503085.7453 - val_mean_squared_error: 2503085.7453

Epoch 41/250

- 0s - loss: 2585534.7258 - mean_squared_error: 2585534.7258 - val_loss: 2473390.2650 - val_mean_squared_error: 2473390.2650

Epoch 42/250

- 0s - loss: 2560146.6899 - mean_squared_error: 2560146.6899 - val_loss: 2444374.3726 - val_mean_squared_error: 2444374.3726

Epoch 43/250

- 0s - loss: 2532669.1185 - mean_squared_error: 2532669.1185 - val_loss: 2418029.5618 - val_mean_squared_error: 2418029.5618

Epoch 44/250

- 0s - loss: 2509591.7315 - mean_squared_error: 2509591.7315 - val_loss: 2393902.2946 - val_mean_squared_error: 2393902.2946

Epoch 45/250

- 0s - loss: 2485903.8244 - mean_squared_error: 2485903.8244 - val_loss: 2374926.1074 - val_mean_squared_error: 2374926.1074

Epoch 46/250

- 0s - loss: 2468145.9276 - mean_squared_error: 2468145.9276 - val_loss: 2353132.2925 - val_mean_squared_error: 2353132.2925

Epoch 47/250

- 0s - loss: 2449389.6913 - mean_squared_error: 2449389.6913 - val_loss: 2330850.7967 - val_mean_squared_error: 2330850.7967

Epoch 48/250

- 0s - loss: 2430694.4924 - mean_squared_error: 2430694.4924 - val_loss: 2315977.1396 - val_mean_squared_error: 2315977.1396

Epoch 49/250

- 0s - loss: 2416348.0670 - mean_squared_error: 2416348.0670 - val_loss: 2295317.3459 - val_mean_squared_error: 2295317.3459

Epoch 50/250

- 0s - loss: 2400174.9707 - mean_squared_error: 2400174.9707 - val_loss: 2280247.3585 - val_mean_squared_error: 2280247.3585

Epoch 51/250

- 0s - loss: 2386847.7805 - mean_squared_error: 2386847.7805 - val_loss: 2269282.1988 - val_mean_squared_error: 2269282.1988

Epoch 52/250

- 0s - loss: 2373865.5490 - mean_squared_error: 2373865.5490 - val_loss: 2253546.8710 - val_mean_squared_error: 2253546.8710

Epoch 53/250

- 0s - loss: 2362687.3404 - mean_squared_error: 2362687.3404 - val_loss: 2241699.1739 - val_mean_squared_error: 2241699.1739

Epoch 54/250

- 0s - loss: 2350158.3027 - mean_squared_error: 2350158.3027 - val_loss: 2229586.8293 - val_mean_squared_error: 2229586.8293

Epoch 55/250

- 0s - loss: 2340199.4378 - mean_squared_error: 2340199.4378 - val_loss: 2223216.2266 - val_mean_squared_error: 2223216.2266

Epoch 56/250

- 0s - loss: 2328815.8881 - mean_squared_error: 2328815.8881 - val_loss: 2215892.9633 - val_mean_squared_error: 2215892.9633

Epoch 57/250

- 0s - loss: 2319769.2307 - mean_squared_error: 2319769.2307 - val_loss: 2202649.4680 - val_mean_squared_error: 2202649.4680

Epoch 58/250

- 0s - loss: 2311106.1876 - mean_squared_error: 2311106.1876 - val_loss: 2190911.9708 - val_mean_squared_error: 2190911.9708

Epoch 59/250

- 0s - loss: 2303140.6940 - mean_squared_error: 2303140.6940 - val_loss: 2190453.6531 - val_mean_squared_error: 2190453.6531

Epoch 60/250

- 0s - loss: 2295309.2408 - mean_squared_error: 2295309.2408 - val_loss: 2173813.5528 - val_mean_squared_error: 2173813.5528

Epoch 61/250

- 0s - loss: 2286456.1451 - mean_squared_error: 2286456.1451 - val_loss: 2167401.4068 - val_mean_squared_error: 2167401.4068

Epoch 62/250

- 0s - loss: 2278925.0642 - mean_squared_error: 2278925.0642 - val_loss: 2158559.3234 - val_mean_squared_error: 2158559.3234

Epoch 63/250

- 0s - loss: 2272597.3724 - mean_squared_error: 2272597.3724 - val_loss: 2151993.7547 - val_mean_squared_error: 2151993.7547

Epoch 64/250

- 0s - loss: 2265358.6924 - mean_squared_error: 2265358.6924 - val_loss: 2146581.5500 - val_mean_squared_error: 2146581.5500

Epoch 65/250

- 0s - loss: 2259204.1708 - mean_squared_error: 2259204.1708 - val_loss: 2138775.9063 - val_mean_squared_error: 2138775.9063

Epoch 66/250

- 0s - loss: 2251698.1355 - mean_squared_error: 2251698.1355 - val_loss: 2132688.4142 - val_mean_squared_error: 2132688.4142

Epoch 67/250

- 0s - loss: 2245842.9347 - mean_squared_error: 2245842.9347 - val_loss: 2127250.3298 - val_mean_squared_error: 2127250.3298

Epoch 68/250

- 0s - loss: 2239787.1670 - mean_squared_error: 2239787.1670 - val_loss: 2128124.5670 - val_mean_squared_error: 2128124.5670

Epoch 69/250

- 0s - loss: 2233091.2950 - mean_squared_error: 2233091.2950 - val_loss: 2120944.2249 - val_mean_squared_error: 2120944.2249

Epoch 70/250

- 0s - loss: 2227085.7098 - mean_squared_error: 2227085.7098 - val_loss: 2114163.3953 - val_mean_squared_error: 2114163.3953

Epoch 71/250

- 0s - loss: 2220383.1575 - mean_squared_error: 2220383.1575 - val_loss: 2119813.9272 - val_mean_squared_error: 2119813.9272

Epoch 72/250

- 0s - loss: 2215016.5886 - mean_squared_error: 2215016.5886 - val_loss: 2098265.5178 - val_mean_squared_error: 2098265.5178

Epoch 73/250

- 0s - loss: 2209031.0828 - mean_squared_error: 2209031.0828 - val_loss: 2093349.4299 - val_mean_squared_error: 2093349.4299

Epoch 74/250

- 0s - loss: 2203458.1824 - mean_squared_error: 2203458.1824 - val_loss: 2087530.1435 - val_mean_squared_error: 2087530.1435

Epoch 75/250

- 0s - loss: 2197507.4423 - mean_squared_error: 2197507.4423 - val_loss: 2084310.7636 - val_mean_squared_error: 2084310.7636

Epoch 76/250

- 0s - loss: 2191870.9516 - mean_squared_error: 2191870.9516 - val_loss: 2078751.1230 - val_mean_squared_error: 2078751.1230

Epoch 77/250

- 0s - loss: 2186590.2370 - mean_squared_error: 2186590.2370 - val_loss: 2073632.4209 - val_mean_squared_error: 2073632.4209

Epoch 78/250

- 0s - loss: 2180675.0755 - mean_squared_error: 2180675.0755 - val_loss: 2067455.0566 - val_mean_squared_error: 2067455.0566

Epoch 79/250

- 0s - loss: 2175871.8791 - mean_squared_error: 2175871.8791 - val_loss: 2062080.2746 - val_mean_squared_error: 2062080.2746

Epoch 80/250

- 0s - loss: 2170960.8270 - mean_squared_error: 2170960.8270 - val_loss: 2076134.0571 - val_mean_squared_error: 2076134.0571

Epoch 81/250

- 0s - loss: 2165095.7891 - mean_squared_error: 2165095.7891 - val_loss: 2060063.3091 - val_mean_squared_error: 2060063.3091

Epoch 82/250

- 0s - loss: 2159210.9014 - mean_squared_error: 2159210.9014 - val_loss: 2054764.6278 - val_mean_squared_error: 2054764.6278

Epoch 83/250

- 0s - loss: 2154804.6965 - mean_squared_error: 2154804.6965 - val_loss: 2042656.1980 - val_mean_squared_error: 2042656.1980

Epoch 84/250

- 0s - loss: 2151000.8027 - mean_squared_error: 2151000.8027 - val_loss: 2041542.0261 - val_mean_squared_error: 2041542.0261

Epoch 85/250

- 0s - loss: 2144402.9209 - mean_squared_error: 2144402.9209 - val_loss: 2034710.8433 - val_mean_squared_error: 2034710.8433

Epoch 86/250

- 0s - loss: 2139382.6877 - mean_squared_error: 2139382.6877 - val_loss: 2029521.7206 - val_mean_squared_error: 2029521.7206

Epoch 87/250

- 0s - loss: 2134508.9045 - mean_squared_error: 2134508.9045 - val_loss: 2025544.5526 - val_mean_squared_error: 2025544.5526

Epoch 88/250

- 0s - loss: 2130530.0560 - mean_squared_error: 2130530.0560 - val_loss: 2020708.7063 - val_mean_squared_error: 2020708.7063

Epoch 89/250

- 0s - loss: 2124692.8997 - mean_squared_error: 2124692.8997 - val_loss: 2016661.2367 - val_mean_squared_error: 2016661.2367

Epoch 90/250

- 0s - loss: 2119100.6322 - mean_squared_error: 2119100.6322 - val_loss: 2024581.7835 - val_mean_squared_error: 2024581.7835

Epoch 91/250

- 0s - loss: 2115483.7229 - mean_squared_error: 2115483.7229 - val_loss: 2008754.8749 - val_mean_squared_error: 2008754.8749

Epoch 92/250

- 0s - loss: 2110360.7427 - mean_squared_error: 2110360.7427 - val_loss: 2007724.7695 - val_mean_squared_error: 2007724.7695

Epoch 93/250

- 0s - loss: 2104714.5825 - mean_squared_error: 2104714.5825 - val_loss: 2008926.0319 - val_mean_squared_error: 2008926.0319

Epoch 94/250

- 0s - loss: 2100296.9009 - mean_squared_error: 2100296.9009 - val_loss: 1995537.1630 - val_mean_squared_error: 1995537.1630

Epoch 95/250

- 0s - loss: 2095775.2807 - mean_squared_error: 2095775.2807 - val_loss: 1998770.4627 - val_mean_squared_error: 1998770.4627

Epoch 96/250

- 0s - loss: 2090610.8210 - mean_squared_error: 2090610.8210 - val_loss: 1991205.4927 - val_mean_squared_error: 1991205.4927

Epoch 97/250

- 0s - loss: 2085764.6586 - mean_squared_error: 2085764.6586 - val_loss: 1982456.1479 - val_mean_squared_error: 1982456.1479

Epoch 98/250

- 0s - loss: 2081778.4795 - mean_squared_error: 2081778.4795 - val_loss: 1984038.7297 - val_mean_squared_error: 1984038.7297

Epoch 99/250

- 0s - loss: 2076921.0596 - mean_squared_error: 2076921.0596 - val_loss: 1974410.2433 - val_mean_squared_error: 1974410.2433

Epoch 100/250

- 0s - loss: 2071642.4116 - mean_squared_error: 2071642.4116 - val_loss: 1970844.3068 - val_mean_squared_error: 1970844.3068

Epoch 101/250

- 0s - loss: 2067992.1074 - mean_squared_error: 2067992.1074 - val_loss: 1966067.1146 - val_mean_squared_error: 1966067.1146

Epoch 102/250

- 0s - loss: 2062705.6920 - mean_squared_error: 2062705.6920 - val_loss: 1964978.2044 - val_mean_squared_error: 1964978.2044

Epoch 103/250

- 1s - loss: 2058635.8663 - mean_squared_error: 2058635.8663 - val_loss: 1960653.1206 - val_mean_squared_error: 1960653.1206

Epoch 104/250

- 1s - loss: 2053453.2750 - mean_squared_error: 2053453.2750 - val_loss: 1956940.2522 - val_mean_squared_error: 1956940.2522

Epoch 105/250

- 0s - loss: 2049969.1384 - mean_squared_error: 2049969.1384 - val_loss: 1950447.4171 - val_mean_squared_error: 1950447.4171

Epoch 106/250

- 0s - loss: 2044646.2613 - mean_squared_error: 2044646.2613 - val_loss: 1946335.1700 - val_mean_squared_error: 1946335.1700

Epoch 107/250

- 0s - loss: 2040847.5135 - mean_squared_error: 2040847.5135 - val_loss: 1950765.8945 - val_mean_squared_error: 1950765.8945

Epoch 108/250

- 0s - loss: 2035333.6843 - mean_squared_error: 2035333.6843 - val_loss: 1943112.7308 - val_mean_squared_error: 1943112.7308

Epoch 109/250

- 0s - loss: 2031570.0148 - mean_squared_error: 2031570.0148 - val_loss: 1937313.6635 - val_mean_squared_error: 1937313.6635

Epoch 110/250

- 0s - loss: 2026515.8787 - mean_squared_error: 2026515.8787 - val_loss: 1930995.4182 - val_mean_squared_error: 1930995.4182

Epoch 111/250

- 0s - loss: 2023262.6958 - mean_squared_error: 2023262.6958 - val_loss: 1926765.3571 - val_mean_squared_error: 1926765.3571

Epoch 112/250

- 0s - loss: 2018275.2594 - mean_squared_error: 2018275.2594 - val_loss: 1923056.6220 - val_mean_squared_error: 1923056.6220

Epoch 113/250

- 0s - loss: 2013793.3882 - mean_squared_error: 2013793.3882 - val_loss: 1920843.6845 - val_mean_squared_error: 1920843.6845

Epoch 114/250

- 0s - loss: 2009802.3657 - mean_squared_error: 2009802.3657 - val_loss: 1916405.0942 - val_mean_squared_error: 1916405.0942

Epoch 115/250

- 0s - loss: 2005557.3843 - mean_squared_error: 2005557.3843 - val_loss: 1920216.2247 - val_mean_squared_error: 1920216.2247

Epoch 116/250

- 0s - loss: 2000834.9872 - mean_squared_error: 2000834.9872 - val_loss: 1913231.6625 - val_mean_squared_error: 1913231.6625

Epoch 117/250

- 0s - loss: 1996924.4391 - mean_squared_error: 1996924.4391 - val_loss: 1905361.8010 - val_mean_squared_error: 1905361.8010

Epoch 118/250

- 0s - loss: 1991738.4791 - mean_squared_error: 1991738.4791 - val_loss: 1901166.0414 - val_mean_squared_error: 1901166.0414

Epoch 119/250

- 0s - loss: 1987464.5905 - mean_squared_error: 1987464.5905 - val_loss: 1900646.9869 - val_mean_squared_error: 1900646.9869

Epoch 120/250

- 0s - loss: 1982866.4694 - mean_squared_error: 1982866.4694 - val_loss: 1896726.6348 - val_mean_squared_error: 1896726.6348

Epoch 121/250

- 0s - loss: 1979190.1764 - mean_squared_error: 1979190.1764 - val_loss: 1889626.5674 - val_mean_squared_error: 1889626.5674

Epoch 122/250

- 0s - loss: 1974747.3787 - mean_squared_error: 1974747.3787 - val_loss: 1889790.7669 - val_mean_squared_error: 1889790.7669

Epoch 123/250

- 0s - loss: 1970600.7402 - mean_squared_error: 1970600.7402 - val_loss: 1881613.5393 - val_mean_squared_error: 1881613.5393

Epoch 124/250

- 0s - loss: 1966440.0516 - mean_squared_error: 1966440.0516 - val_loss: 1881952.2009 - val_mean_squared_error: 1881952.2009

Epoch 125/250

- 0s - loss: 1963144.5010 - mean_squared_error: 1963144.5010 - val_loss: 1874578.3827 - val_mean_squared_error: 1874578.3827

Epoch 126/250

- 0s - loss: 1958002.3114 - mean_squared_error: 1958002.3114 - val_loss: 1881907.8777 - val_mean_squared_error: 1881907.8777

Epoch 127/250

- 0s - loss: 1953781.4717 - mean_squared_error: 1953781.4717 - val_loss: 1866496.5014 - val_mean_squared_error: 1866496.5014

Epoch 128/250

- 0s - loss: 1949579.8435 - mean_squared_error: 1949579.8435 - val_loss: 1862999.9314 - val_mean_squared_error: 1862999.9314

Epoch 129/250

- 0s - loss: 1944837.7633 - mean_squared_error: 1944837.7633 - val_loss: 1859692.0708 - val_mean_squared_error: 1859692.0708

Epoch 130/250

- 0s - loss: 1941181.8801 - mean_squared_error: 1941181.8801 - val_loss: 1870401.3512 - val_mean_squared_error: 1870401.3512

Epoch 131/250

- 0s - loss: 1937206.9816 - mean_squared_error: 1937206.9816 - val_loss: 1855640.7789 - val_mean_squared_error: 1855640.7789

Epoch 132/250

- 1s - loss: 1932482.7280 - mean_squared_error: 1932482.7280 - val_loss: 1853098.5790 - val_mean_squared_error: 1853098.5790

Epoch 133/250

- 1s - loss: 1928831.6393 - mean_squared_error: 1928831.6393 - val_loss: 1845656.8632 - val_mean_squared_error: 1845656.8632

Epoch 134/250

- 0s - loss: 1923718.3025 - mean_squared_error: 1923718.3025 - val_loss: 1841296.4944 - val_mean_squared_error: 1841296.4944

Epoch 135/250

- 0s - loss: 1919285.1301 - mean_squared_error: 1919285.1301 - val_loss: 1839304.6138 - val_mean_squared_error: 1839304.6138

Epoch 136/250

- 0s - loss: 1915512.9725 - mean_squared_error: 1915512.9725 - val_loss: 1833941.8848 - val_mean_squared_error: 1833941.8848

Epoch 137/250

- 0s - loss: 1910338.2096 - mean_squared_error: 1910338.2096 - val_loss: 1829266.4789 - val_mean_squared_error: 1829266.4789

Epoch 138/250

- 0s - loss: 1906807.1133 - mean_squared_error: 1906807.1133 - val_loss: 1826667.6707 - val_mean_squared_error: 1826667.6707

Epoch 139/250

- 0s - loss: 1901958.9682 - mean_squared_error: 1901958.9682 - val_loss: 1822769.3903 - val_mean_squared_error: 1822769.3903

Epoch 140/250

- 0s - loss: 1898180.0895 - mean_squared_error: 1898180.0895 - val_loss: 1818848.6565 - val_mean_squared_error: 1818848.6565

Epoch 141/250

- 0s - loss: 1893384.4182 - mean_squared_error: 1893384.4182 - val_loss: 1825904.4577 - val_mean_squared_error: 1825904.4577

Epoch 142/250

- 0s - loss: 1888857.0500 - mean_squared_error: 1888857.0500 - val_loss: 1812047.4093 - val_mean_squared_error: 1812047.4093

Epoch 143/250

- 0s - loss: 1885649.5577 - mean_squared_error: 1885649.5577 - val_loss: 1815988.5720 - val_mean_squared_error: 1815988.5720

Epoch 144/250

- 0s - loss: 1882728.8948 - mean_squared_error: 1882728.8948 - val_loss: 1804221.2971 - val_mean_squared_error: 1804221.2971

Epoch 145/250

- 0s - loss: 1878070.7728 - mean_squared_error: 1878070.7728 - val_loss: 1800390.6373 - val_mean_squared_error: 1800390.6373

Epoch 146/250

- 0s - loss: 1873359.5868 - mean_squared_error: 1873359.5868 - val_loss: 1796718.0096 - val_mean_squared_error: 1796718.0096

Epoch 147/250

- 0s - loss: 1870450.4402 - mean_squared_error: 1870450.4402 - val_loss: 1793502.3480 - val_mean_squared_error: 1793502.3480

Epoch 148/250

- 0s - loss: 1864935.4132 - mean_squared_error: 1864935.4132 - val_loss: 1790719.0737 - val_mean_squared_error: 1790719.0737

Epoch 149/250

- 0s - loss: 1860335.5980 - mean_squared_error: 1860335.5980 - val_loss: 1787109.7601 - val_mean_squared_error: 1787109.7601

Epoch 150/250

- 0s - loss: 1857528.6761 - mean_squared_error: 1857528.6761 - val_loss: 1782251.8564 - val_mean_squared_error: 1782251.8564

Epoch 151/250

- 0s - loss: 1853086.1135 - mean_squared_error: 1853086.1135 - val_loss: 1779972.3222 - val_mean_squared_error: 1779972.3222

Epoch 152/250

- 0s - loss: 1849172.5880 - mean_squared_error: 1849172.5880 - val_loss: 1775980.5159 - val_mean_squared_error: 1775980.5159

Epoch 153/250

- 0s - loss: 1844933.4104 - mean_squared_error: 1844933.4104 - val_loss: 1771529.7060 - val_mean_squared_error: 1771529.7060

Epoch 154/250

- 0s - loss: 1839683.2720 - mean_squared_error: 1839683.2720 - val_loss: 1767939.3851 - val_mean_squared_error: 1767939.3851

Epoch 155/250

- 0s - loss: 1836064.3526 - mean_squared_error: 1836064.3526 - val_loss: 1764414.1924 - val_mean_squared_error: 1764414.1924

Epoch 156/250

- 0s - loss: 1832377.5910 - mean_squared_error: 1832377.5910 - val_loss: 1761295.6983 - val_mean_squared_error: 1761295.6983

Epoch 157/250

- 0s - loss: 1828378.2116 - mean_squared_error: 1828378.2116 - val_loss: 1756511.1158 - val_mean_squared_error: 1756511.1158

Epoch 158/250

- 0s - loss: 1824890.7548 - mean_squared_error: 1824890.7548 - val_loss: 1754338.1330 - val_mean_squared_error: 1754338.1330

Epoch 159/250

- 0s - loss: 1820081.3972 - mean_squared_error: 1820081.3972 - val_loss: 1751247.4256 - val_mean_squared_error: 1751247.4256

Epoch 160/250

- 0s - loss: 1816636.5487 - mean_squared_error: 1816636.5487 - val_loss: 1756630.1609 - val_mean_squared_error: 1756630.1609

Epoch 161/250

- 0s - loss: 1811579.5376 - mean_squared_error: 1811579.5376 - val_loss: 1743509.7337 - val_mean_squared_error: 1743509.7337

Epoch 162/250

- 0s - loss: 1807536.9920 - mean_squared_error: 1807536.9920 - val_loss: 1742150.8448 - val_mean_squared_error: 1742150.8448

Epoch 163/250

- 0s - loss: 1803971.9994 - mean_squared_error: 1803971.9994 - val_loss: 1736175.7619 - val_mean_squared_error: 1736175.7619

Epoch 164/250

- 0s - loss: 1800349.7850 - mean_squared_error: 1800349.7850 - val_loss: 1743091.8461 - val_mean_squared_error: 1743091.8461

Epoch 165/250

- 0s - loss: 1794217.3592 - mean_squared_error: 1794217.3592 - val_loss: 1727758.6505 - val_mean_squared_error: 1727758.6505

Epoch 166/250

- 0s - loss: 1791519.7027 - mean_squared_error: 1791519.7027 - val_loss: 1749954.5221 - val_mean_squared_error: 1749954.5221

Epoch 167/250

- 0s - loss: 1789118.2473 - mean_squared_error: 1789118.2473 - val_loss: 1721773.4305 - val_mean_squared_error: 1721773.4305

Epoch 168/250

- 0s - loss: 1784228.6996 - mean_squared_error: 1784228.6996 - val_loss: 1717498.3095 - val_mean_squared_error: 1717498.3095

Epoch 169/250

- 0s - loss: 1779127.8237 - mean_squared_error: 1779127.8237 - val_loss: 1713949.0157 - val_mean_squared_error: 1713949.0157

Epoch 170/250

- 0s - loss: 1775922.7718 - mean_squared_error: 1775922.7718 - val_loss: 1711449.6809 - val_mean_squared_error: 1711449.6809

Epoch 171/250

- 0s - loss: 1771560.6863 - mean_squared_error: 1771560.6863 - val_loss: 1709218.2926 - val_mean_squared_error: 1709218.2926

Epoch 172/250

- 0s - loss: 1768529.1427 - mean_squared_error: 1768529.1427 - val_loss: 1706059.7845 - val_mean_squared_error: 1706059.7845

Epoch 173/250

- 0s - loss: 1764611.6279 - mean_squared_error: 1764611.6279 - val_loss: 1704987.3673 - val_mean_squared_error: 1704987.3673

Epoch 174/250

- 0s - loss: 1759797.3488 - mean_squared_error: 1759797.3488 - val_loss: 1696676.8063 - val_mean_squared_error: 1696676.8063

Epoch 175/250

- 0s - loss: 1756353.1683 - mean_squared_error: 1756353.1683 - val_loss: 1693596.5106 - val_mean_squared_error: 1693596.5106

Epoch 176/250

- 0s - loss: 1752005.8658 - mean_squared_error: 1752005.8658 - val_loss: 1689543.5374 - val_mean_squared_error: 1689543.5374

Epoch 177/250

- 0s - loss: 1747951.0195 - mean_squared_error: 1747951.0195 - val_loss: 1686419.0251 - val_mean_squared_error: 1686419.0251

Epoch 178/250

- 0s - loss: 1744804.0021 - mean_squared_error: 1744804.0021 - val_loss: 1682881.5861 - val_mean_squared_error: 1682881.5861

Epoch 179/250

- 0s - loss: 1741434.6224 - mean_squared_error: 1741434.6224 - val_loss: 1691390.7072 - val_mean_squared_error: 1691390.7072

Epoch 180/250

- 0s - loss: 1737770.1080 - mean_squared_error: 1737770.1080 - val_loss: 1675420.7468 - val_mean_squared_error: 1675420.7468

Epoch 181/250

- 0s - loss: 1732393.8311 - mean_squared_error: 1732393.8311 - val_loss: 1675718.3392 - val_mean_squared_error: 1675718.3392

Epoch 182/250

- 0s - loss: 1728406.2045 - mean_squared_error: 1728406.2045 - val_loss: 1668087.5327 - val_mean_squared_error: 1668087.5327

Epoch 183/250

- 0s - loss: 1724420.8879 - mean_squared_error: 1724420.8879 - val_loss: 1664868.2181 - val_mean_squared_error: 1664868.2181

Epoch 184/250

- 0s - loss: 1720156.1015 - mean_squared_error: 1720156.1015 - val_loss: 1669234.7821 - val_mean_squared_error: 1669234.7821

Epoch 185/250

- 0s - loss: 1716196.7695 - mean_squared_error: 1716196.7695 - val_loss: 1669442.6978 - val_mean_squared_error: 1669442.6978

Epoch 186/250

- 0s - loss: 1712488.4788 - mean_squared_error: 1712488.4788 - val_loss: 1653888.1396 - val_mean_squared_error: 1653888.1396

Epoch 187/250

- 0s - loss: 1708032.9664 - mean_squared_error: 1708032.9664 - val_loss: 1650641.3101 - val_mean_squared_error: 1650641.3101

Epoch 188/250

- 0s - loss: 1704076.8909 - mean_squared_error: 1704076.8909 - val_loss: 1647999.1042 - val_mean_squared_error: 1647999.1042

Epoch 189/250

- 0s - loss: 1701110.1930 - mean_squared_error: 1701110.1930 - val_loss: 1646898.5643 - val_mean_squared_error: 1646898.5643

Epoch 190/250

- 0s - loss: 1697091.7084 - mean_squared_error: 1697091.7084 - val_loss: 1642208.3946 - val_mean_squared_error: 1642208.3946

Epoch 191/250

- 0s - loss: 1693360.1219 - mean_squared_error: 1693360.1219 - val_loss: 1636948.2817 - val_mean_squared_error: 1636948.2817

Epoch 192/250

- 0s - loss: 1689616.1710 - mean_squared_error: 1689616.1710 - val_loss: 1634705.0452 - val_mean_squared_error: 1634705.0452

Epoch 193/250

- 0s - loss: 1685067.2261 - mean_squared_error: 1685067.2261 - val_loss: 1630226.0061 - val_mean_squared_error: 1630226.0061

Epoch 194/250

- 0s - loss: 1681237.7659 - mean_squared_error: 1681237.7659 - val_loss: 1639004.3018 - val_mean_squared_error: 1639004.3018

Epoch 195/250

- 0s - loss: 1678298.5193 - mean_squared_error: 1678298.5193 - val_loss: 1623961.1689 - val_mean_squared_error: 1623961.1689

Epoch 196/250

- 0s - loss: 1673192.0288 - mean_squared_error: 1673192.0288 - val_loss: 1619567.3824 - val_mean_squared_error: 1619567.3824

Epoch 197/250

- 0s - loss: 1669092.1021 - mean_squared_error: 1669092.1021 - val_loss: 1619046.1713 - val_mean_squared_error: 1619046.1713

Epoch 198/250

- 0s - loss: 1664606.5096 - mean_squared_error: 1664606.5096 - val_loss: 1615387.4159 - val_mean_squared_error: 1615387.4159

Epoch 199/250

- 0s - loss: 1662564.2851 - mean_squared_error: 1662564.2851 - val_loss: 1609142.0948 - val_mean_squared_error: 1609142.0948

Epoch 200/250

- 0s - loss: 1657685.3866 - mean_squared_error: 1657685.3866 - val_loss: 1605894.4098 - val_mean_squared_error: 1605894.4098

Epoch 201/250

- 0s - loss: 1654749.7125 - mean_squared_error: 1654749.7125 - val_loss: 1606032.8303 - val_mean_squared_error: 1606032.8303

Epoch 202/250

- 0s - loss: 1649906.3164 - mean_squared_error: 1649906.3164 - val_loss: 1598597.9039 - val_mean_squared_error: 1598597.9039

Epoch 203/250

- 0s - loss: 1646614.9474 - mean_squared_error: 1646614.9474 - val_loss: 1600799.1936 - val_mean_squared_error: 1600799.1936

Epoch 204/250

- 0s - loss: 1643058.2099 - mean_squared_error: 1643058.2099 - val_loss: 1596763.3237 - val_mean_squared_error: 1596763.3237

Epoch 205/250

- 0s - loss: 1638300.3648 - mean_squared_error: 1638300.3648 - val_loss: 1593418.9744 - val_mean_squared_error: 1593418.9744

Epoch 206/250

- 0s - loss: 1634605.7919 - mean_squared_error: 1634605.7919 - val_loss: 1591944.4761 - val_mean_squared_error: 1591944.4761

Epoch 207/250

- 0s - loss: 1632396.7522 - mean_squared_error: 1632396.7522 - val_loss: 1594654.7668 - val_mean_squared_error: 1594654.7668

Epoch 208/250

- 0s - loss: 1626509.9061 - mean_squared_error: 1626509.9061 - val_loss: 1581620.4115 - val_mean_squared_error: 1581620.4115

Epoch 209/250

- 0s - loss: 1623937.1295 - mean_squared_error: 1623937.1295 - val_loss: 1579898.4873 - val_mean_squared_error: 1579898.4873

Epoch 210/250

- 0s - loss: 1621066.5514 - mean_squared_error: 1621066.5514 - val_loss: 1571717.8550 - val_mean_squared_error: 1571717.8550

Epoch 211/250

- 0s - loss: 1616139.5395 - mean_squared_error: 1616139.5395 - val_loss: 1569710.2550 - val_mean_squared_error: 1569710.2550

Epoch 212/250

- 0s - loss: 1612243.5690 - mean_squared_error: 1612243.5690 - val_loss: 1567843.0187 - val_mean_squared_error: 1567843.0187

Epoch 213/250

- 0s - loss: 1609260.8194 - mean_squared_error: 1609260.8194 - val_loss: 1572308.2530 - val_mean_squared_error: 1572308.2530

Epoch 214/250

- 0s - loss: 1605613.5753 - mean_squared_error: 1605613.5753 - val_loss: 1557996.3463 - val_mean_squared_error: 1557996.3463

Epoch 215/250

- 0s - loss: 1600952.7402 - mean_squared_error: 1600952.7402 - val_loss: 1555764.3567 - val_mean_squared_error: 1555764.3567

Epoch 216/250

- 0s - loss: 1597516.2842 - mean_squared_error: 1597516.2842 - val_loss: 1552335.2397 - val_mean_squared_error: 1552335.2397

Epoch 217/250

- 0s - loss: 1595406.1265 - mean_squared_error: 1595406.1265 - val_loss: 1548897.1456 - val_mean_squared_error: 1548897.1456

Epoch 218/250

- 0s - loss: 1591035.0155 - mean_squared_error: 1591035.0155 - val_loss: 1546232.4290 - val_mean_squared_error: 1546232.4290

Epoch 219/250

- 0s - loss: 1587376.0179 - mean_squared_error: 1587376.0179 - val_loss: 1541615.9383 - val_mean_squared_error: 1541615.9383

Epoch 220/250

- 0s - loss: 1583196.2946 - mean_squared_error: 1583196.2946 - val_loss: 1539573.1838 - val_mean_squared_error: 1539573.1838

Epoch 221/250

- 0s - loss: 1580048.1778 - mean_squared_error: 1580048.1778 - val_loss: 1539254.7977 - val_mean_squared_error: 1539254.7977

Epoch 222/250

- 0s - loss: 1576428.4779 - mean_squared_error: 1576428.4779 - val_loss: 1537425.3814 - val_mean_squared_error: 1537425.3814

Epoch 223/250

- 0s - loss: 1571698.2321 - mean_squared_error: 1571698.2321 - val_loss: 1533045.4937 - val_mean_squared_error: 1533045.4937

Epoch 224/250

- 1s - loss: 1569309.8116 - mean_squared_error: 1569309.8116 - val_loss: 1524448.8505 - val_mean_squared_error: 1524448.8505

Epoch 225/250

- 0s - loss: 1565261.5082 - mean_squared_error: 1565261.5082 - val_loss: 1521244.5449 - val_mean_squared_error: 1521244.5449

Epoch 226/250

- 0s - loss: 1561696.2988 - mean_squared_error: 1561696.2988 - val_loss: 1521136.5315 - val_mean_squared_error: 1521136.5315

Epoch 227/250

- 0s - loss: 1557760.5976 - mean_squared_error: 1557760.5976 - val_loss: 1514913.8519 - val_mean_squared_error: 1514913.8519

Epoch 228/250

- 0s - loss: 1554509.0372 - mean_squared_error: 1554509.0372 - val_loss: 1511611.5119 - val_mean_squared_error: 1511611.5119

Epoch 229/250

- 0s - loss: 1550976.7215 - mean_squared_error: 1550976.7215 - val_loss: 1509094.8388 - val_mean_squared_error: 1509094.8388

Epoch 230/250

- 0s - loss: 1546977.6634 - mean_squared_error: 1546977.6634 - val_loss: 1505029.9383 - val_mean_squared_error: 1505029.9383

Epoch 231/250

- 0s - loss: 1543970.9746 - mean_squared_error: 1543970.9746 - val_loss: 1502910.6582 - val_mean_squared_error: 1502910.6582

Epoch 232/250

- 0s - loss: 1540305.0970 - mean_squared_error: 1540305.0970 - val_loss: 1498495.6655 - val_mean_squared_error: 1498495.6655

Epoch 233/250

- 0s - loss: 1537501.6374 - mean_squared_error: 1537501.6374 - val_loss: 1495304.1388 - val_mean_squared_error: 1495304.1388

Epoch 234/250

- 0s - loss: 1533388.2508 - mean_squared_error: 1533388.2508 - val_loss: 1492806.1005 - val_mean_squared_error: 1492806.1005

Epoch 235/250

- 0s - loss: 1530941.4702 - mean_squared_error: 1530941.4702 - val_loss: 1499393.1403 - val_mean_squared_error: 1499393.1403

Epoch 236/250

- 0s - loss: 1526883.5424 - mean_squared_error: 1526883.5424 - val_loss: 1490247.0621 - val_mean_squared_error: 1490247.0621

Epoch 237/250

- 0s - loss: 1524231.6881 - mean_squared_error: 1524231.6881 - val_loss: 1493653.0241 - val_mean_squared_error: 1493653.0241

Epoch 238/250

- 0s - loss: 1519628.2648 - mean_squared_error: 1519628.2648 - val_loss: 1482725.0214 - val_mean_squared_error: 1482725.0214

Epoch 239/250

- 0s - loss: 1517536.3750 - mean_squared_error: 1517536.3750 - val_loss: 1476558.6559 - val_mean_squared_error: 1476558.6559

Epoch 240/250

- 0s - loss: 1514165.7144 - mean_squared_error: 1514165.7144 - val_loss: 1475985.6456 - val_mean_squared_error: 1475985.6456

Epoch 241/250

- 0s - loss: 1510455.2794 - mean_squared_error: 1510455.2794 - val_loss: 1470677.5349 - val_mean_squared_error: 1470677.5349

Epoch 242/250

- 0s - loss: 1508992.9663 - mean_squared_error: 1508992.9663 - val_loss: 1474781.7924 - val_mean_squared_error: 1474781.7924

Epoch 243/250

- 0s - loss: 1503767.5517 - mean_squared_error: 1503767.5517 - val_loss: 1465611.5074 - val_mean_squared_error: 1465611.5074

Epoch 244/250

- 0s - loss: 1501195.4531 - mean_squared_error: 1501195.4531 - val_loss: 1464832.4664 - val_mean_squared_error: 1464832.4664

Epoch 245/250

- 0s - loss: 1497824.0992 - mean_squared_error: 1497824.0992 - val_loss: 1462425.5150 - val_mean_squared_error: 1462425.5150

Epoch 246/250

- 0s - loss: 1495264.5258 - mean_squared_error: 1495264.5258 - val_loss: 1459882.0771 - val_mean_squared_error: 1459882.0771

Epoch 247/250

- 0s - loss: 1493924.6338 - mean_squared_error: 1493924.6338 - val_loss: 1454529.6073 - val_mean_squared_error: 1454529.6073

Epoch 248/250

- 0s - loss: 1489410.9016 - mean_squared_error: 1489410.9016 - val_loss: 1451906.8467 - val_mean_squared_error: 1451906.8467

Epoch 249/250

- 0s - loss: 1486580.2059 - mean_squared_error: 1486580.2059 - val_loss: 1449524.4469 - val_mean_squared_error: 1449524.4469

Epoch 250/250

- 0s - loss: 1482848.0573 - mean_squared_error: 1482848.0573 - val_loss: 1445007.3935 - val_mean_squared_error: 1445007.3935
