Utilities Needed for Financial Data

Johannes Graner (LinkedIn), Albert Nilsson (LinkedIn) and Raazesh Sainudiin (LinkedIn)

2020, Uppsala, Sweden

This project was supported by Combient Mix AB through summer internships at:

Combient Competence Centre for Data Engineering Sciences, Department of Mathematics, Uppsala University, Uppsala, Sweden

Here, certain delta.io tables are loaded. These tables have been prepared already.

You will not be able to load them directly, but the libraries used in the process are all open source.

object TrendUtils {

private val fx1mPath = "s3a://XXXXX/findata/com/histdata/free/FX-1-Minute-Data/"

private val trendCalculusCheckpointPath = "s3a://XXXXX/summerinterns2020/trend-calculus-blog/public/"

private val streamableTrendCalculusPath = "s3a://XXXXX/summerinterns2020/johannes/streamable-trend-calculus/"

private val yfinancePath = "s3a://XXXXX/summerinterns2020/yfinance/"

def getFx1mPath = fx1mPath

def getTrendCalculusCheckpointPath = trendCalculusCheckpointPath

def getStreamableTrendCalculusPath = streamableTrendCalculusPath

def getYfinancePath = yfinancePath

}

defined object TrendUtils

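class TrendUtils:

    # Static accessors, so that they can be called as TrendUtils.getYfinancePath()
    @staticmethod
    def getTrendCalculusCheckpointPath():

        return "s3a://XXXXX/summerinterns2020/trend-calculus-blog/public/"

    @staticmethod
    def getYfinancePath():

        return "s3a://XXXXX/summerinterns2020/yfinance/"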

Utilities Needed for Mass Media Data


Here, certain delta.io tables are loaded. These tables have been prepared already.

You will not be able to load them directly, but the libraries used in the process are all open source.

object GdeltUtils {

private val gdeltV1Path = "s3a://XXXXXX/GDELT/delta/bronze/v1/"

private val eoiCheckpointPath = "s3a://XXXXXX/.../texata/"

private val poiCheckpointPath = "s3a://XXXXXX/.../person_graph/"

def getGdeltV1Path = gdeltV1Path

def getEOICheckpointPath = eoiCheckpointPath

def getPOICheckpointPath = poiCheckpointPath

}

defined object GdeltUtils

class GdeltUtils:

    # Static accessor, so that it can be called as GdeltUtils.getEOICheckpointPath()
    @staticmethod
    def getEOICheckpointPath():

        return "s3a://XXXXXX/.../texata/"

Trends in Financial Stocks and News Events


According to the Merriam-Webster Dictionary, the definition of trend is as follows:

a prevailing tendency or inclination : drift.

Since people invest in financial stocks of publicly traded companies and make these decisions based on their understanding of current events reported in mass media, a natural question is:

How can one try to represent and understand this interplay?

The following material first goes through the ETL process to ingest:

- financial data and then

- mass-media data

in a structured manner so that one can begin scalable data science processes upon them.

In the sequel two libraries are used to take advantage of SparkSQL and delta.io tables ("Spark on ACID"):

- for encoding and interpreting trends (so-called trend calculus) in any time series, for instance financial stock prices.

- for structured representation of the world's largest open-sourced mass media data:

- The GDELT Project: https://www.gdeltproject.org/.

The last few notebooks show some simple data analytics to help extract and identify events that may be related to trends of interest.

We note that the sequel here is mainly focused on the data engineering science of ETL and basic ML Pipelines. We hope it will inspire others to do more sophisticated research, including scalable causal inference and various forms of distributed deep/reinforcement learning for more sophisticated decision problems.

Historical FX-1-M Financial Data


Resources

This notebook builds on the following repository in order to obtain SparkSQL Datasets and DataFrames of freely available FX-1-M data so that they can be ingested into delta.io tables:

"./000a_finance_utils"

The Trend Calculus library is needed for case classes and parsers for the data.

import org.lamastex.spark.trendcalculus._


defined object TrendUtils

val filePathRoot = TrendUtils.getFx1mPath

There are many pairs of currencies and/or commodities available.

dbutils.fs.ls(filePathRoot).foreach(fi => println("exchange pair: " + fi.name))

exchange pair: audcad/

exchange pair: audchf/

exchange pair: audjpy/

exchange pair: audnzd/

exchange pair: audusd/

exchange pair: auxaud/

exchange pair: bcousd/

exchange pair: cadchf/

exchange pair: cadjpy/

exchange pair: chfjpy/

exchange pair: etxeur/

exchange pair: euraud/

exchange pair: eurcad/

exchange pair: eurchf/

exchange pair: eurczk/

exchange pair: eurdkk/

exchange pair: eurgbp/

exchange pair: eurhuf/

exchange pair: eurjpy/

exchange pair: eurnok/

exchange pair: eurnzd/

exchange pair: eurpln/

exchange pair: eursek/

exchange pair: eurtry/

exchange pair: eurusd/

exchange pair: frxeur/

exchange pair: gbpaud/

exchange pair: gbpcad/

exchange pair: gbpchf/

exchange pair: gbpjpy/

exchange pair: gbpnzd/

exchange pair: gbpusd/

exchange pair: grxeur/

exchange pair: hkxhkd/

exchange pair: jpxjpy/

exchange pair: nsxusd/

exchange pair: nzdcad/

exchange pair: nzdchf/

exchange pair: nzdjpy/

exchange pair: nzdusd/

exchange pair: sgdjpy/

exchange pair: spxusd/

exchange pair: udxusd/

exchange pair: ukxgbp/

exchange pair: usdcad/

exchange pair: usdchf/

exchange pair: usdczk/

exchange pair: usddkk/

exchange pair: usdhkd/

exchange pair: usdhuf/

exchange pair: usdjpy/

exchange pair: usdmxn/

exchange pair: usdnok/

exchange pair: usdpln/

exchange pair: usdsek/

exchange pair: usdsgd/

exchange pair: usdtry/

exchange pair: usdzar/

exchange pair: wtiusd/

exchange pair: xagusd/

exchange pair: xauaud/

exchange pair: xauchf/

exchange pair: xaueur/

exchange pair: xaugbp/

exchange pair: xauusd/

exchange pair: zarjpy/

Let's look at the Brent oil price in USD.

dbutils.fs.ls(filePathRoot + "bcousd/").foreach(fi => println("name: " + fi.name + ", size: " + fi.size))

name: DAT_ASCII_BCOUSD_M1_2010.csv.gz, size: 284384

name: DAT_ASCII_BCOUSD_M1_2010.txt.gz, size: 41157

name: DAT_ASCII_BCOUSD_M1_2011.csv.gz, size: 2479115

name: DAT_ASCII_BCOUSD_M1_2011.txt.gz, size: 216327

name: DAT_ASCII_BCOUSD_M1_2012.csv.gz, size: 2327511

name: DAT_ASCII_BCOUSD_M1_2012.txt.gz, size: 321867

name: DAT_ASCII_BCOUSD_M1_2013.csv.gz, size: 2109500

name: DAT_ASCII_BCOUSD_M1_2013.txt.gz, size: 417973

name: DAT_ASCII_BCOUSD_M1_2014.csv.gz, size: 1961172

name: DAT_ASCII_BCOUSD_M1_2014.txt.gz, size: 431591

name: DAT_ASCII_BCOUSD_M1_2015.csv.gz, size: 2205678

name: DAT_ASCII_BCOUSD_M1_2015.txt.gz, size: 333277

name: DAT_ASCII_BCOUSD_M1_2016.csv.gz, size: 2131659

name: DAT_ASCII_BCOUSD_M1_2016.txt.gz, size: 342616

name: DAT_ASCII_BCOUSD_M1_2017.csv.gz, size: 1854793

name: DAT_ASCII_BCOUSD_M1_2017.txt.gz, size: 434781

name: DAT_ASCII_BCOUSD_M1_2018.csv.gz, size: 2251250

name: DAT_ASCII_BCOUSD_M1_2018.txt.gz, size: 306810

name: DAT_ASCII_BCOUSD_M1_2019.csv.gz, size: 2701059

name: DAT_ASCII_BCOUSD_M1_2019.txt.gz, size: 102290

name: DAT_ASCII_BCOUSD_M1_202001.csv.gz, size: 233757

name: DAT_ASCII_BCOUSD_M1_202001.txt.gz, size: 8391

name: DAT_ASCII_BCOUSD_M1_202002.csv.gz, size: 222628

name: DAT_ASCII_BCOUSD_M1_202002.txt.gz, size: 4379

name: DAT_ASCII_BCOUSD_M1_202003.csv.gz, size: 265471

name: DAT_ASCII_BCOUSD_M1_202003.txt.gz, size: 834

name: DAT_ASCII_BCOUSD_M1_202004.csv.gz, size: 245819

name: DAT_ASCII_BCOUSD_M1_202004.txt.gz, size: 953

name: DAT_ASCII_BCOUSD_M1_202005.csv.gz, size: 233828

name: DAT_ASCII_BCOUSD_M1_202005.txt.gz, size: 850

name: DAT_ASCII_BCOUSD_M1_202006.csv.gz, size: 244557

name: DAT_ASCII_BCOUSD_M1_202006.txt.gz, size: 1394

name: DAT_ASCII_BCOUSD_M1_202007.csv.gz, size: 74976

name: DAT_ASCII_BCOUSD_M1_202007.txt.gz, size: 1637

We use the parser available from Trend Calculus to read the csv files into a Spark Dataset.

val oilPath = filePathRoot + "bcousd/*.csv.gz"

val oilDS = spark.read.fx1m(oilPath).orderBy($"time")

oilDS.show(20, false)

+-------------------+-----+-----+-----+-----+------+

|time |open |high |low |close|volume|

+-------------------+-----+-----+-----+-----+------+

|2010-11-14 20:15:00|86.73|86.74|86.73|86.74|0 |

|2010-11-14 20:17:00|86.75|86.75|86.75|86.75|0 |

|2010-11-14 20:18:00|86.76|86.78|86.76|86.76|0 |

|2010-11-14 20:19:00|86.74|86.74|86.74|86.74|0 |

|2010-11-14 20:21:00|86.75|86.75|86.74|86.74|0 |

|2010-11-14 20:24:00|86.75|86.75|86.75|86.75|0 |

|2010-11-14 20:26:00|86.76|86.77|86.74|86.77|0 |

|2010-11-14 20:27:00|86.79|86.79|86.75|86.75|0 |

|2010-11-14 20:28:00|86.77|86.79|86.75|86.79|0 |

|2010-11-14 20:32:00|86.8 |86.81|86.79|86.81|0 |

|2010-11-14 20:33:00|86.81|86.81|86.81|86.81|0 |

|2010-11-14 20:34:00|86.81|86.81|86.81|86.81|0 |

|2010-11-14 20:35:00|86.81|86.81|86.79|86.79|0 |

|2010-11-14 20:36:00|86.79|86.8 |86.78|86.8 |0 |

|2010-11-14 20:37:00|86.78|86.8 |86.78|86.79|0 |

|2010-11-14 20:38:00|86.79|86.79|86.79|86.79|0 |

|2010-11-14 20:39:00|86.79|86.8 |86.79|86.79|0 |

|2010-11-14 20:40:00|86.79|86.8 |86.79|86.8 |0 |

|2010-11-14 20:41:00|86.8 |86.8 |86.8 |86.8 |0 |

|2010-11-14 20:42:00|86.8 |86.8 |86.8 |86.8 |0 |

+-------------------+-----+-----+-----+-----+------+

only showing top 20 rows

yfinance Stock Data


This notebook builds on the following repositories in order to obtain SparkSQL DataSets and DataFrames of freely available Yahoo! Finance Data so that they can be ingested into delta.io Tables for trend analysis and more:

Resources:

yfinance is a Python library that makes it easy to download various financial data from Yahoo! Finance.

pip install yfinance

"./000a_finance_utils"

To illustrate the library, we use two stocks (SEB-A.ST and SEB-C.ST) of the Swedish bank SEB. The (default) data resolution is one day, so we use roughly 19 years of data (July 2001 to July 2020) to get a lot of observations.

import yfinance as yf

dataSEBAST = yf.download("SEB-A.ST", start="2001-07-01", end="2020-07-12")

dataSEBCST = yf.download("SEB-C.ST", start="2001-07-01", end="2020-07-12")

dataSEBAST.size

dataSEBAST

We can also download several tickers at once.

Note that the result is in a pandas dataframe so we are not doing any distributed computing.

This means that some care has to be taken to not overwhelm the local machine.

defined object TrendUtils

dataSEBAandCSTBST = yf.download("SEB-A.ST SEB-C.ST", start="2020-07-01", end="2020-07-12", group_by="ticker")

dataSEBAandCSTBST

type(dataSEBAST)

Loading the data into a Spark DataFrame.

dataSEBAST_sp = spark.createDataFrame(dataSEBAandCSTBST)

dataSEBAST_sp.printSchema()

The conversion to Spark DataFrame works but is quite messy. Just imagine if there were more tickers!

dataSEBAST_sp.show(20, False)

When selecting a column with a dot in the name (as in ('SEB-A.ST', 'High')) using PySpark, we have to enclose the column name in backticks `.

dataSEBAST_sp.select("`('SEB-A.ST', 'High')`")
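The flattened column names seen above are the string form of the pandas (ticker, field) pairs, so the quoted name can be built programmatically instead of typed by hand. The following is a minimal sketch in plain Python; `quoted_column` is a hypothetical helper introduced here for illustration and is not part of PySpark or yfinance.

```python
def quoted_column(ticker, field):
    """Backtick-quote the flattened (ticker, field) column name for use in select()."""
    # The flattened name is the string form of the tuple, e.g. ('SEB-A.ST', 'High').
    raw_name = str((ticker, field))
    # Backticks make the name be read literally, dot included.
    return "`" + raw_name + "`"


high_column = quoted_column("SEB-A.ST", "High")
print(high_column)
```

Under these assumptions, `dataSEBAST_sp.select(quoted_column("SEB-A.ST", "High"))` would select the same column as the hand-written call above.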

We can also get information about individual tickers.

msft = yf.Ticker("MSFT")

print(msft)

msft.info

We write a function to transform data downloaded by yfinance and write a better formatted Spark DataFrame.

import pandas as pd

import yfinance as yf

import sys, getopt

# example:

# python3 yfin_to_csv.py -i "60m" "SEB-A.ST INVE-A.ST" "2019-07-01" "2019-07-06" "/root/GIT/yfin_test.csv"

def ingest(interval, tickers, start, end, csv_path):

    # Download all tickers at once; the columns are grouped as (ticker, field).
    df = yf.download(tickers, start=start, end=end, interval=interval, group_by='ticker')

    # Reshape from wide to long: one row per (time, ticker), one column per field.
    findf = df.unstack().unstack(1).sort_index(level=1)

    findf.reset_index(level=0, inplace=True)

    findf = findf.loc[start:end]

    findf.rename(columns={'level_0':'Ticker'}, inplace=True)

    findf.index.name='Time'

    findf['Volume'] = pd.to_numeric(findf['Volume'], downcast='integer')

    findf = findf.reset_index(drop=False)

    # Convert the timestamps to strings before handing the data to Spark.
    findf['Time'] = findf['Time'].map(lambda x: str(x))

    # Write the long-format table as csv via Spark and return the pandas DataFrame.
    spark.createDataFrame(findf).write.mode('overwrite').save(csv_path, format='csv')

    return findf
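The reshaping done by this function, from one column per (ticker, field) pair to one row per (time, ticker) pair, can be illustrated without pandas or Spark. The sketch below is only an illustration of the idea in plain Python; `wide_to_long` is a hypothetical helper and the two rows of prices are toy values.

```python
def wide_to_long(wide_rows, fields):
    """Turn {time: {(ticker, field): value}} into one dict per (time, ticker)."""
    long_rows = []
    for time, cells in wide_rows.items():
        tickers = sorted({ticker for ticker, _ in cells})
        for ticker in tickers:
            row = {"Time": time, "Ticker": ticker}
            for field in fields:
                # A missing observation becomes None (a null in Spark).
                row[field] = cells.get((ticker, field))
            long_rows.append(row)
    # Order by time first and ticker second.
    long_rows.sort(key=lambda r: (r["Time"], r["Ticker"]))
    return long_rows


# Toy input: one timestamp, two tickers, two fields (illustrative values only).
wide = {
    "2021-01-04 14:45:00": {
        ("MSFT", "Open"): 220.25, ("MSFT", "Volume"): 96255,
        ("FB", "Open"): 271.30, ("FB", "Volume"): 28330,
    },
}

long_rows = wide_to_long(wide, fields=("Open", "Volume"))
for row in long_rows:
    print(row)
```

The number of columns in the long format stays fixed (time, ticker and one column per field) no matter how many tickers are downloaded.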

Let's look at some top value companies in the world as well as an assortment of Swedish Companies.

The number of tickers is now much larger; using the previous method would result in over 100 columns.

This would make it quite difficult to see what's going on, not to mention trying to analyze the data!

The data resolution is now one minute, meaning that 7 days gives a lot of observations.

Yfinance only allows downloading 1-minute data one week at a time and only for dates within the last 30 days.
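Because of the one-week limit, a longer period has to be requested in pieces. The following sketch splits a date range into consecutive windows of at most seven days using only the standard library. `weekly_chunks` is a hypothetical helper, and the sketch assumes that the end date of each request is treated as exclusive; it does not call yfinance itself.

```python
from datetime import date, timedelta


def weekly_chunks(start, end, max_days=7):
    """Split [start, end) into consecutive windows of at most max_days days."""
    chunks = []
    cursor = start
    while cursor < end:
        stop = min(cursor + timedelta(days=max_days), end)
        chunks.append((cursor.isoformat(), stop.isoformat()))
        cursor = stop
    return chunks


chunks = weekly_chunks(date(2021, 1, 1), date(2021, 1, 20))
for chunk_start, chunk_end in chunks:
    print(chunk_start, chunk_end)
```

Each (start, end) pair could then be passed to a function such as `ingest`, writing to a separate csv path per window.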

topValueCompanies = 'MSFT AAPL AMZN GOOG BABA FB BRK-B BRK-A TSLA'

swedishCompanies = 'ASSA-B.ST ATCO-A.ST ALIV-SDB.ST ELUX-A.ST ELUX-B.ST EPI-A.ST EPI-B.ST ERIC-A.ST ERIC-B.ST FORTUM.HE HUSQ-A.ST HUSQ-B.ST INVE-A.ST INVE-B.ST KESKOA.HE KESKOB.HE KNEBV.HE KCR.HE MTRS.ST SAAB-B.ST SAS.ST SEB-A.ST SEB-C.ST SKF-A.ST SKF-B.ST STE-R.ST STERV.HE WRT1V.HE'

tickers = topValueCompanies + ' ' + swedishCompanies

interval = '1m'

start = '2021-01-01'

end = '2021-01-07'

csv_path = TrendUtils.getYfinancePath() + 'stocks_' + interval + '_' + start + '_' + end + '.csv'

dbutils.fs.rm(csv_path, recurse=True)

df = ingest(interval, tickers, start, end, csv_path)

Having written the result to a csv file, we can use the parser in the Trend Calculus library https://github.com/lamastex/spark-trend-calculus to read the data into a Dataset in Scala Spark.

import org.lamastex.spark.trendcalculus._


val rootPath = TrendUtils.getYfinancePath

val csv_path = rootPath + "stocks_1m_2021-01-01_2021-01-07.csv"

val yfinDF = spark.read.yfin(csv_path)

yfinDF.count

res1: Long = 71965

yfinDF.show(20, false)

+-------------------+---------+------------------+------------------+------------------+------------------+------------------+------+

|time |ticker |open |high |low |close |adjClose |volume|

+-------------------+---------+------------------+------------------+------------------+------------------+------------------+------+

|2021-01-04 14:45:00|BRK-B |230.7899932861328 |230.99000549316406|230.72999572753906|230.97000122070312|230.97000122070312|13367 |

|2021-01-04 14:45:00|ELUX-A.ST|null |null |null |null |null |null |

|2021-01-04 14:45:00|ELUX-B.ST|190.14999389648438|190.14999389648438|190.14999389648438|190.14999389648438|190.14999389648438|2770 |

|2021-01-04 14:45:00|EPI-A.ST |null |null |null |null |null |null |

|2021-01-04 14:45:00|EPI-B.ST |null |null |null |null |null |null |

|2021-01-04 14:45:00|ERIC-A.ST|null |null |null |null |null |null |

|2021-01-04 14:45:00|ERIC-B.ST|null |null |null |null |null |null |

|2021-01-04 14:45:00|FB |271.30499267578125|271.42498779296875|271.30499267578125|271.42498779296875|271.42498779296875|28330 |

|2021-01-04 14:45:00|FORTUM.HE|20.31999969482422 |20.31999969482422 |20.31999969482422 |20.31999969482422 |20.31999969482422 |49824 |

|2021-01-04 14:45:00|GOOG |1749.4150390625 |1751.260009765625 |1749.4150390625 |1750.18994140625 |1750.18994140625 |4109 |

|2021-01-04 14:45:00|HUSQ-A.ST|null |null |null |null |null |null |

|2021-01-04 14:45:00|HUSQ-B.ST|108.9000015258789 |108.9000015258789 |108.9000015258789 |108.9000015258789 |108.9000015258789 |7170 |

|2021-01-04 14:45:00|INVE-A.ST|null |null |null |null |null |null |

|2021-01-04 14:45:00|INVE-B.ST|612.5999755859375 |612.5999755859375 |612.4000244140625 |612.4000244140625 |612.4000244140625 |1628 |

|2021-01-04 14:45:00|KCR.HE |29.040000915527344|29.040000915527344|29.040000915527344|29.040000915527344|29.040000915527344|0 |

|2021-01-04 14:45:00|KESKOA.HE|null |null |null |null |null |null |

|2021-01-04 14:45:00|KESKOB.HE|null |null |null |null |null |null |

|2021-01-04 14:45:00|KNEBV.HE |null |null |null |null |null |null |

|2021-01-04 14:45:00|MSFT |220.25 |220.32000732421875|220.1699981689453 |220.1699981689453 |220.1699981689453 |96255 |

|2021-01-04 14:45:00|MTRS.ST |null |null |null |null |null |null |

+-------------------+---------+------------------+------------------+------------------+------------------+------------------+------+

only showing top 20 rows

Finding Trends in Oil Price Data


Resources

This builds on the following library and its antecedents therein to find trends in historical oil prices:

This work was inspired by:

- Antoine Amend's texata-2017

- Andrew Morgan's Trend Calculus Library

"./000a_finance_utils"

When dealing with time series, it can be difficult to find and analyze trends in the data.

One approach is by using the Trend Calculus algorithm invented by Andrew Morgan. More information about Trend Calculus can be found at https://lamastex.github.io/spark-trend-calculus-examples/.

defined object TrendUtils

import org.lamastex.spark.trendcalculus._

import spark.implicits._

import org.apache.spark.sql._

import org.apache.spark.sql.functions._

import java.sql.Timestamp


The input to the algorithm is data in the format (ticker, time, value). In this example, ticker is "BCOUSD" (Brent Crude Oil), time has a resolution of one minute and value is the closing price for Brent Crude Oil during that minute.

This data is historical data from 2010 to 2019 taken from https://www.histdata.com/ using methods from FX-1-Minute-Data by Philippe Remy. In this notebook, everything is done on static dataframes. We will soon see examples on streaming dataframes.

There are gaps in the data, notably during weekends when no trading takes place, but this does not affect the algorithm, as it makes no assumptions about the data other than that time is monotonically increasing.
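The requirement on the time column can be checked directly. The sketch below uses the first few timestamps of the oil data shown earlier and verifies that they are increasing even though several minutes are missing. It checks strict increase, which is an assumption made here, and the helper names are hypothetical.

```python
from datetime import datetime, timedelta

# First six timestamps of the BCOUSD data (minutes 15, 17, 18, 19, 21 and 24).
times = [datetime(2010, 11, 14, 20, minute) for minute in (15, 17, 18, 19, 21, 24)]


def is_strictly_increasing(timestamps):
    """True if every timestamp is later than the one before it."""
    return all(a < b for a, b in zip(timestamps, timestamps[1:]))


def find_gaps(timestamps, step=timedelta(minutes=1)):
    """Return the consecutive pairs that are more than one step apart."""
    return [(a, b) for a, b in zip(timestamps, timestamps[1:]) if b - a > step]


print(is_strictly_increasing(times))
for gap_start, gap_end in find_gaps(times):
    print("gap between", gap_start, "and", gap_end)
```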

The window size is set to 2, which is minimal, because we want to retain as much information as possible.

val windowSize = 2

val dataRootPath = TrendUtils.getFx1mPath

val oilDS = spark.read.fx1m(dataRootPath + "bcousd/*.csv.gz").toDF.withColumn("ticker", lit("BCOUSD")).select($"ticker", $"time" as "x", $"close" as "y").as[TickerPoint].orderBy("x")

If we want to look at long term trends, we can use the output time series as input for another iteration. The output contains the points of the input where the trend changes (reversals). This can be repeated several times, resulting in longer term trends.

Here, we look at (up to) 15 iterations of the algorithm. It is no problem if the output of some iteration is too small to find a reversal in the next iteration, since the output will just be an empty dataframe in that case.

val numReversals = 15

val dfWithReversals = new TrendCalculus2(oilDS, windowSize, spark).nReversalsJoinedWithMaxRev(numReversals)

numReversals: Int = 15

dfWithReversals: org.apache.spark.sql.DataFrame = [ticker: string, x: timestamp ... 17 more fields]

dfWithReversals.show(20, false)

+------+-------------------+-----+---------+---------+---------+---------+---------+---------+---------+---------+---------+----------+----------+----------+----------+----------+----------+------+

|ticker|x |y |reversal1|reversal2|reversal3|reversal4|reversal5|reversal6|reversal7|reversal8|reversal9|reversal10|reversal11|reversal12|reversal13|reversal14|reversal15|maxRev|

+------+-------------------+-----+---------+---------+---------+---------+---------+---------+---------+---------+---------+----------+----------+----------+----------+----------+----------+------+

|BCOUSD|2010-11-14 20:15:00|86.74|null |null |null |null |null |null |null |null |null |null |null |null |null |null |null |0 |

|BCOUSD|2010-11-14 20:17:00|86.75|null |null |null |null |null |null |null |null |null |null |null |null |null |null |null |0 |

|BCOUSD|2010-11-14 20:18:00|86.76|-1 |null |null |null |null |null |null |null |null |null |null |null |null |null |null |1 |

|BCOUSD|2010-11-14 20:19:00|86.74|null |null |null |null |null |null |null |null |null |null |null |null |null |null |null |0 |

|BCOUSD|2010-11-14 20:21:00|86.74|1 |null |null |null |null |null |null |null |null |null |null |null |null |null |null |1 |

|BCOUSD|2010-11-14 20:24:00|86.75|null |null |null |null |null |null |null |null |null |null |null |null |null |null |null |0 |

|BCOUSD|2010-11-14 20:26:00|86.77|null |null |null |null |null |null |null |null |null |null |null |null |null |null |null |0 |

|BCOUSD|2010-11-14 20:27:00|86.75|null |null |null |null |null |null |null |null |null |null |null |null |null |null |null |0 |

|BCOUSD|2010-11-14 20:28:00|86.79|null |null |null |null |null |null |null |null |null |null |null |null |null |null |null |0 |

|BCOUSD|2010-11-14 20:32:00|86.81|null |null |null |null |null |null |null |null |null |null |null |null |null |null |null |0 |

|BCOUSD|2010-11-14 20:33:00|86.81|-1 |null |null |null |null |null |null |null |null |null |null |null |null |null |null |1 |

|BCOUSD|2010-11-14 20:34:00|86.81|null |null |null |null |null |null |null |null |null |null |null |null |null |null |null |0 |

|BCOUSD|2010-11-14 20:35:00|86.79|null |null |null |null |null |null |null |null |null |null |null |null |null |null |null |0 |

|BCOUSD|2010-11-14 20:36:00|86.8 |null |null |null |null |null |null |null |null |null |null |null |null |null |null |null |0 |

|BCOUSD|2010-11-14 20:37:00|86.79|null |null |null |null |null |null |null |null |null |null |null |null |null |null |null |0 |

|BCOUSD|2010-11-14 20:38:00|86.79|1 |null |null |null |null |null |null |null |null |null |null |null |null |null |null |1 |

|BCOUSD|2010-11-14 20:39:00|86.79|null |null |null |null |null |null |null |null |null |null |null |null |null |null |null |0 |

|BCOUSD|2010-11-14 20:40:00|86.8 |null |null |null |null |null |null |null |null |null |null |null |null |null |null |null |0 |

|BCOUSD|2010-11-14 20:41:00|86.8 |null |null |null |null |null |null |null |null |null |null |null |null |null |null |null |0 |

|BCOUSD|2010-11-14 20:42:00|86.8 |null |null |null |null |null |null |null |null |null |null |null |null |null |null |null |0 |

+------+-------------------+-----+---------+---------+---------+---------+---------+---------+---------+---------+---------+----------+----------+----------+----------+----------+----------+------+

only showing top 20 rows

The number of reversals decreases rapidly as more iterations are done.

dfWithReversals.cache.count

res3: Long = 2859310

(1 to numReversals).foreach( i => println(dfWithReversals.filter(s"reversal$i is not null").count))

775283

253258

93804

37068

15397

6595

2858

1240

530

230

96

45

25

11

6
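To quantify how quickly the counts shrink, one can compute the ratio between consecutive orders from the numbers printed above. This is a small arithmetic sketch in plain Python; the counts are copied from the output and nothing else is assumed.

```python
# Number of rows with a non-null reversal of order 1, 2, ..., 15 (from the output above).
counts = [775283, 253258, 93804, 37068, 15397, 6595, 2858,
          1240, 530, 230, 96, 45, 25, 11, 6]

# Ratio between the counts of consecutive orders.
ratios = [lower / higher for lower, higher in zip(counts, counts[1:])]

for order, ratio in enumerate(ratios, start=1):
    print(f"order {order} -> {order + 1}: {ratio:.2f} times fewer reversals")
```

Each additional iteration reduces the number of reversals by a factor of roughly two to three, which is why 15 iterations are enough to go from minute-level reversals to a handful of points.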

We write the resulting dataframe to parquet in order to produce visualizations using Python.

val checkPointPath = TrendUtils.getTrendCalculusCheckpointPath

dfWithReversals.write.mode(SaveMode.Overwrite).parquet(checkPointPath + "joinedDSWithMaxRev_new")

dfWithReversals.unpersist

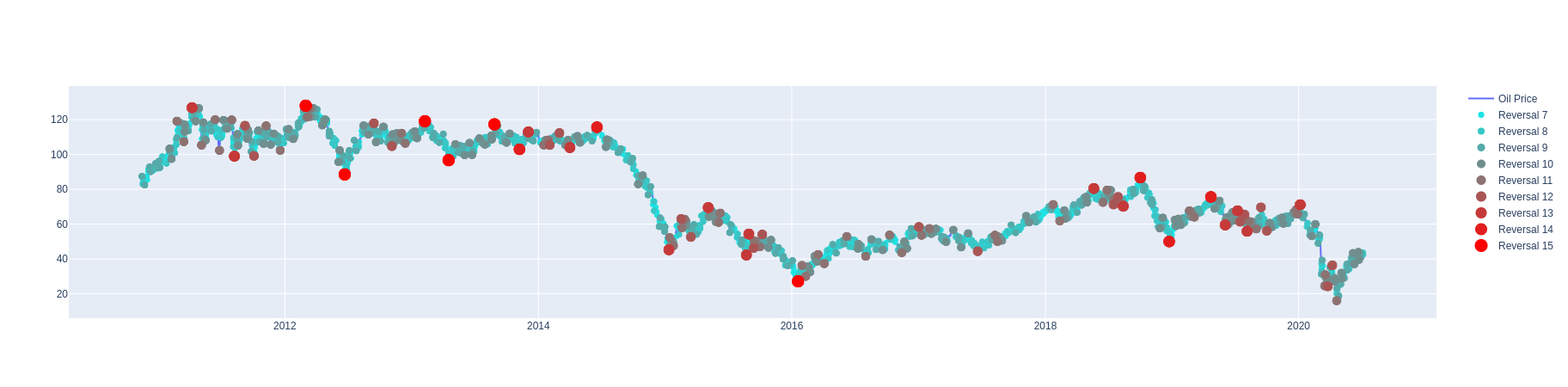

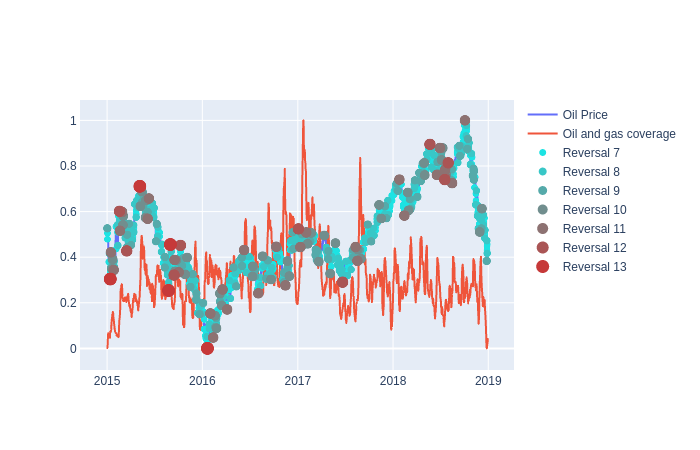

Visualization

The Python library plotly is used to make interactive visualizations.

from plotly.offline import plot

from plotly.graph_objs import *

from datetime import *

checkPointPath = TrendUtils.getTrendCalculusCheckpointPath()

joinedDS = spark.read.parquet(checkPointPath + "joinedDSWithMaxRev_new").orderBy("x")

We check the size of the dataframe to see if it is possible to handle it locally, since plotly is not available for distributed data.

joinedDS.count()

Almost 3 million rows might be too much for the driver! The timeseries has to be thinned out in order to display locally.

No information about higher order trend reversals is lost, since every higher order reversal is also a lower order reversal. The lowest orders of reversal are on the scale of minutes (maybe hours), which is probably not very interesting considering that the data stretches over roughly 10 years!

joinedDS.filter("maxRev > 2").count()

Just shy of 100k rows is no problem for the driver.
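The size of the thinned series can be related to the reversal counts from the Scala part. Since every higher order reversal is also a lower order reversal, the rows with maxRev > 2 are exactly the rows that have a third order reversal. The sketch below assumes that the parquet file read here contains the same data as the counts printed in the Scala cells; `rows_with_max_rev_above` is a hypothetical helper.

```python
total_rows = 2859310  # size of the joined dataframe reported in the Scala part

# Rows with a non-null reversal of order 1, 2, 3, ... (from the Scala output).
reversal_counts = [775283, 253258, 93804, 37068, 15397, 6595, 2858,
                   1240, 530, 230, 96, 45, 25, 11, 6]


def rows_with_max_rev_above(threshold):
    """Rows with maxRev > threshold are those with a reversal of order threshold + 1."""
    return reversal_counts[threshold]  # index 0 holds order 1


kept = rows_with_max_rev_above(2)
print(kept, "rows kept,", f"{100 * kept / total_rows:.2f}% of all rows")
```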

We select the relevant information in the dataframe for visualization.

fullTS = joinedDS.filter("maxRev > 2").select("x","y","maxRev").collect()

Picking an interval to focus on.

Start and end dates as (year, month, day, hour, minute, second). Only year, month and day are required. The interval from years 1800 to 2200 ensures all data is selected.

startDate = datetime(1800,1,1)

endDate= datetime(2200,12,31)

TS = [row for row in fullTS if startDate <= row['x'] and row['x'] <= endDate]

Setting up the visualization.

numReversals = 15

startReversal = 7

allData = {'x': [row['x'] for row in TS], 'y': [row['y'] for row in TS], 'maxRev': [row['maxRev'] for row in TS]}

revTS = [row for row in TS if row[2] >= startReversal]

colorList = ['rgba(' + str(tmp) + ',' + str(255-tmp) + ',' + str(255-tmp) + ',1)' for tmp in [int(i*255/(numReversals-startReversal+1)) for i in range(1,numReversals-startReversal+2)]]

def getRevTS(tsWithRevMax, revMax):

    x = [row[0] for row in tsWithRevMax if row[2] >= revMax]

    y = [row[1] for row in tsWithRevMax if row[2] >= revMax]

    return x,y,revMax

reducedData = [getRevTS(revTS, i) for i in range(startReversal, numReversals+1)]

markerPlots = [Scattergl(x=x, y=y, mode='markers', marker=dict(color=colorList[i-startReversal], size=i), name='Reversal ' + str(i)) for (x,y,i) in [getRevTS(revTS, i) for i in range(startReversal, numReversals+1)]]

Plotting result as plotly graph

The graph is interactive: one can drag to zoom in on an area (double-click to get back) and click on the legend to hide or show different series.

Note that we have left out many of the lower order reversals in order to not make the graph too cluttered. The seventh order reversal (the lowest order shown) is still on the scale of hours to a few days.

p = plot(

[Scattergl(x=allData['x'], y=allData['y'], mode='lines', name='Oil Price')] + markerPlots

,

output_type='div'

)

displayHTML(p)

Streaming Trend Calculus with Maximum Necessary Reversals


Resources

This builds on the following library and its antecedents therein:

This work was inspired by:

- Antoine Amend's texata-2017

- Andrew Morgan's Trend Calculus Library

"./000a_finance_utils"

We use the spark-trend-calculus library and Spark structured streams over delta.io files to obtain a representation of the complete time series of trends with their k-th order reversal.

This representation is a sufficient statistic for a Markov model of trends that we show in the next notebook.

defined object TrendUtils

import java.sql.Timestamp

import io.delta.tables._

import org.apache.spark.sql._

import org.apache.spark.sql.functions._

import org.apache.spark.sql.streaming.{GroupState, GroupStateTimeout, OutputMode, Trigger}

import org.apache.spark.sql.types._

import org.apache.spark.sql.expressions.{Window, WindowSpec}

import org.lamastex.spark.trendcalculus._


Input data in s3. The data contains oil price data from 2010 to last month and gold price data from 2009 to last month.

val rootPath = "s3a://XXXXX/summerinterns2020/johannes/streamable-trend-calculus/"

val oilGoldPath = rootPath + "oilGoldDelta"

spark.read.format("delta").load(oilGoldPath).orderBy("x").show(20,false)

+------+-------------------+------+

|ticker|x |y |

+------+-------------------+------+

|XAUUSD|2009-03-15 17:00:00|929.6 |

|XAUUSD|2009-03-15 18:00:00|926.05|

|XAUUSD|2009-03-15 18:01:00|925.9 |

|XAUUSD|2009-03-15 18:02:00|925.9 |

|XAUUSD|2009-03-15 18:03:00|926.95|

|XAUUSD|2009-03-15 18:04:00|925.8 |

|XAUUSD|2009-03-15 18:05:00|926.35|

|XAUUSD|2009-03-15 18:06:00|925.8 |

|XAUUSD|2009-03-15 18:07:00|925.6 |

|XAUUSD|2009-03-15 18:08:00|925.7 |

|XAUUSD|2009-03-15 18:09:00|925.4 |

|XAUUSD|2009-03-15 18:10:00|925.75|

|XAUUSD|2009-03-15 18:11:00|925.7 |

|XAUUSD|2009-03-15 18:12:00|925.65|

|XAUUSD|2009-03-15 18:13:00|925.65|

|XAUUSD|2009-03-15 18:14:00|925.75|

|XAUUSD|2009-03-15 18:15:00|925.75|

|XAUUSD|2009-03-15 18:16:00|925.65|

|XAUUSD|2009-03-15 18:17:00|925.6 |

|XAUUSD|2009-03-15 18:18:00|925.85|

+------+-------------------+------+

only showing top 20 rows

Reading the data from s3 as a Structured Stream to simulate streaming.

val input = spark

.readStream

.format("delta")

.load(oilGoldPath)

.as[TickerPoint]

input: org.apache.spark.sql.Dataset[org.lamastex.spark.trendcalculus.TickerPoint] = [ticker: string, x: timestamp ... 1 more field]

Using the trendcalculus library to

1. Apply Trend Calculus to the streaming dataset.

2. Save the result as a delta table.

3. Read the result as a stream.

4. Repeat from 1. using the latest result as input. Stop when the result is empty.
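The control flow of this loop can be sketched in plain Python, with a dictionary standing in for the delta sinks. Note that `keep_every_third` is only a placeholder that shrinks the series; it is not the Trend Calculus algorithm, and `run_until_empty` is a hypothetical name used for illustration.

```python
def run_until_empty(initial, step):
    """Apply step repeatedly, storing every result, until a result is empty."""
    sinks = {}  # stands in for the delta tables reversal1, reversal2, ...
    current = initial
    i = 1
    while True:
        result = step(current)           # 1. apply the transformation
        sinks[f"reversal{i}"] = result   # 2. save the result
        if not result:                   # stop when the result is empty
            break
        current = sinks[f"reversal{i}"]  # 3. read the saved result back
        i += 1                           # 4. repeat with it as input
    return sinks


def keep_every_third(points):
    """Placeholder for the real algorithm: keeps every third point."""
    return points[2::3]


sinks = run_until_empty(list(range(20)), keep_every_third)
for name, points in sinks.items():
    print(name, len(points))
```

As in the Scala loop, the last sink written is empty, so the highest order with any content is the number of sinks minus 1.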

val windowSize = 2

// Initializing variables for while loop.

var i = 1

var prevSinkPath = ""

var sinkPath = rootPath + "multiSinks/reversal" + (i)

var chkptPath = rootPath + "multiSinks/checkpoint/" + (i)

// The first order reversal.

var stream = new TrendCalculus2(input, windowSize, spark)

.reversals

.select("tickerPoint.ticker", "tickerPoint.x", "tickerPoint.y", "reversal")

.as[FlatReversal]

.writeStream

.format("delta")

.option("path", sinkPath)

.option("checkpointLocation", chkptPath)

.trigger(Trigger.Once())

.start

stream.processAllAvailable

i += 1

var lastReversalSeries = spark.emptyDataset[TickerPoint]

while (!spark.read.format("delta").load(sinkPath).isEmpty) {

prevSinkPath = rootPath + "multiSinks/reversal" + (i-1)

sinkPath = rootPath + "multiSinks/reversal" + (i)

chkptPath = rootPath + "multiSinks/checkpoint/" + (i)

// Reading last result as stream

lastReversalSeries = spark

.readStream

.format("delta")

.load(prevSinkPath)

.drop("reversal")

.as[TickerPoint]

// Writing next result

stream = new TrendCalculus2(lastReversalSeries, windowSize, spark)

.reversals

.select("tickerPoint.ticker", "tickerPoint.x", "tickerPoint.y", "reversal")

.as[FlatReversal]

.map( rev => rev.copy(reversal=i*rev.reversal))

.writeStream

.format("delta")

.option("path", sinkPath)

.option("checkpointLocation", chkptPath)

.partitionBy("ticker")

.trigger(Trigger.Once())

.start

stream.processAllAvailable()

i += 1

}

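Checking the total number of reversals written. The multiSinks directory contains one folder per sink plus the checkpoint folder, so the number of sinks is the number of entries minus 1. The last sink is empty, so the highest reversal order is (number of sinks) - 1.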

val i = dbutils.fs.ls(rootPath + "multiSinks").length - 1

i: Int = 18

The written delta tables can be read as streams but for now we read them as static datasets to be able to join them together.

val sinkPaths = (1 to i-1).map(rootPath + "multiSinks/reversal" + _)

val maxRevPath = rootPath + "maxRev"

val revTables = sinkPaths.map(DeltaTable.forPath(_).toDF.as[FlatReversal])

val oilGoldTable = DeltaTable.forPath(oilGoldPath).toDF.as[TickerPoint]

The number of reversals decreases rapidly as the reversal order increases.

revTables.map(_.cache.count)

res6: scala.collection.immutable.IndexedSeq[Long] = Vector(1954849, 677939, 262799, 108202, 46992, 21154, 9703, 4427, 1992, 890, 404, 183, 83, 35, 15, 7, 2)
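To quantify how fast the counts shrink, one can look at the ratio between consecutive orders. A small sketch using the counts printed above (reversalCounts and survivalRatios are illustrative names, not part of the notebook):

```scala
// Counts copied from the output above, ordered by reversal order 1 to 17.
val reversalCounts = Vector[Long](
  1954849, 677939, 262799, 108202, 46992, 21154, 9703, 4427, 1992,
  890, 404, 183, 83, 35, 15, 7, 2
)

// Fraction of order-k reversals that are also reversals of order k + 1.
val survivalRatios: Vector[Double] =
  reversalCounts.zip(reversalCounts.tail).map { case (lower, higher) =>
    higher.toDouble / lower
  }
```

Every one of the 16 ratios is below one half, so each additional order removes more than half of the remaining reversal points in this dataset.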

Joining all results to get a dataset with all reversals in a single column.

def maxByAbs(a: Int, b: Int): Int = {

Seq(a,b).maxBy(math.abs)

}

val maxByAbsUDF = udf((a: Int, b: Int) => maxByAbs(a,b))

val maxRevDS = revTables.foldLeft(oilGoldTable.toDF.withColumn("reversal", lit(0)).as[FlatReversal]){ (acc: Dataset[FlatReversal], ds: Dataset[FlatReversal]) =>

acc

.toDF

.withColumnRenamed("reversal", "oldMaxRev")

.join(ds.select($"ticker" as "tmpt", $"x" as "tmpx", $"reversal" as "newRev"), $"ticker" === $"tmpt" && $"x" === $"tmpx", "left")

.drop("tmpt", "tmpx")

.na.fill(0,Seq("newRev"))

.withColumn("reversal", maxByAbsUDF($"oldMaxRev", $"newRev"))

.select("ticker", "x", "y", "reversal")

.as[FlatReversal]

}

maxByAbs: (a: Int, b: Int)Int

maxByAbsUDF: org.apache.spark.sql.expressions.UserDefinedFunction = SparkUserDefinedFunction($Lambda$9089/155868360@d163ce5,IntegerType,List(Some(class[value[0]: int]), Some(class[value[0]: int])),None,false,true)

maxRevDS: org.apache.spark.sql.Dataset[org.lamastex.spark.trendcalculus.FlatReversal] = [ticker: string, x: timestamp ... 2 more fields]
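The fold above can be illustrated without Spark. This is a minimal sketch, assuming each order's reversals are held in a Map keyed by (ticker, x); Key, allPoints, reversalsByOrder and the toy values are hypothetical.

```scala
// Value of largest magnitude; on a tie the first argument is kept.
def maxByAbs(a: Int, b: Int): Int = if (math.abs(b) > math.abs(a)) b else a

// Hypothetical in-memory stand-ins for the delta tables.
type Key = (String, Int) // (ticker, x)
val allPoints: Vector[Key] = Vector(("BCOUSD", 1), ("BCOUSD", 2), ("BCOUSD", 3))
val reversalsByOrder: Vector[Map[Key, Int]] = Vector(
  Map(("BCOUSD", 1) -> 1, ("BCOUSD", 3) -> -1), // order 1
  Map(("BCOUSD", 3) -> -2)                      // order 2, already scaled by its order
)

// Start with reversal = 0 everywhere, then fold in one order at a time.
// A missing key plays the role of the null filled with 0 after the left join.
val start: Map[Key, Int] = allPoints.map(_ -> 0).toMap
val maxRev: Map[Key, Int] = reversalsByOrder.foldLeft(start) { (acc, order) =>
  acc.map { case (key, oldMaxRev) => key -> maxByAbs(oldMaxRev, order.getOrElse(key, 0)) }
}
```

Because higher orders are multiplied by their order before being written, taking the value of largest magnitude keeps the highest order reached at each point together with its sign.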

Writing result as delta table.

maxRevDS.write.format("delta").partitionBy("ticker").save(maxRevPath)

The reversal column in the joined dataset contains the information of all orders of reversals.

0 indicates that no reversal happens while a non-zero value indicates that this is a reversal point for that order and every lower order.

For example, row 33 contains the value -4, meaning that this point is a downward trend reversal for orders 1, 2, 3 and 4.

DeltaTable.forPath(maxRevPath).toDF.as[FlatReversal].filter("ticker == 'BCOUSD'").orderBy("x").show(35, false)

+------+-------------------+-----+--------+

|ticker|x |y |reversal|

+------+-------------------+-----+--------+

|BCOUSD|2010-11-14 20:15:00|86.74|0 |

|BCOUSD|2010-11-14 20:17:00|86.75|0 |

|BCOUSD|2010-11-14 20:18:00|86.76|-1 |

|BCOUSD|2010-11-14 20:19:00|86.74|0 |

|BCOUSD|2010-11-14 20:21:00|86.74|1 |

|BCOUSD|2010-11-14 20:24:00|86.75|0 |

|BCOUSD|2010-11-14 20:26:00|86.77|0 |

|BCOUSD|2010-11-14 20:27:00|86.75|0 |

|BCOUSD|2010-11-14 20:28:00|86.79|0 |

|BCOUSD|2010-11-14 20:32:00|86.81|0 |

|BCOUSD|2010-11-14 20:33:00|86.81|-1 |

|BCOUSD|2010-11-14 20:34:00|86.81|0 |

|BCOUSD|2010-11-14 20:35:00|86.79|0 |

|BCOUSD|2010-11-14 20:36:00|86.8 |0 |

|BCOUSD|2010-11-14 20:37:00|86.79|0 |

|BCOUSD|2010-11-14 20:38:00|86.79|1 |

|BCOUSD|2010-11-14 20:39:00|86.79|0 |

|BCOUSD|2010-11-14 20:40:00|86.8 |0 |

|BCOUSD|2010-11-14 20:41:00|86.8 |0 |

|BCOUSD|2010-11-14 20:42:00|86.8 |0 |

|BCOUSD|2010-11-14 20:43:00|86.82|0 |

|BCOUSD|2010-11-14 20:44:00|86.81|0 |

|BCOUSD|2010-11-14 20:47:00|86.84|0 |

|BCOUSD|2010-11-14 20:48:00|86.82|0 |

|BCOUSD|2010-11-14 20:49:00|86.84|-1 |

|BCOUSD|2010-11-14 20:50:00|86.82|0 |

|BCOUSD|2010-11-14 20:51:00|86.83|0 |

|BCOUSD|2010-11-14 20:52:00|86.82|1 |

|BCOUSD|2010-11-14 20:53:00|86.83|0 |

|BCOUSD|2010-11-14 20:54:00|86.84|0 |

|BCOUSD|2010-11-14 20:58:00|86.88|0 |

|BCOUSD|2010-11-14 20:59:00|86.89|0 |

|BCOUSD|2010-11-14 21:00:00|86.9 |-4 |

|BCOUSD|2010-11-14 21:03:00|86.87|0 |

|BCOUSD|2010-11-14 21:04:00|86.87|0 |

+------+-------------------+-----+--------+

only showing top 35 rows
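Reading the reversal column can be made explicit with a small decoder. This is a sketch under the assumption that positive values mark upward reversals (the text only states that negative values are downward, although the +1 rows in the table sit at local lows); Direction and decodeReversal are hypothetical names.

```scala
sealed trait Direction
case object Up extends Direction
case object Down extends Direction

// None when the point is not a reversal; otherwise the direction and the
// highest order reached (the point is also a reversal for every lower order).
def decodeReversal(reversal: Int): Option[(Direction, Int)] =
  if (reversal == 0) None
  else Some((if (reversal > 0) Up else Down, math.abs(reversal)))
```

For row 33 of the table, decodeReversal(-4) gives Some((Down, 4)).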

Detecting Events of Interest to OIL/GAS Price Trends

Johannes Graner (LinkedIn), Albert Nilsson (LinkedIn) and Raazesh Sainudiin (LinkedIn)

2020, Uppsala, Sweden

This project was supported by Combient Mix AB through summer internships at:

Combient Competence Centre for Data Engineering Sciences, Department of Mathematics, Uppsala University, Uppsala, Sweden

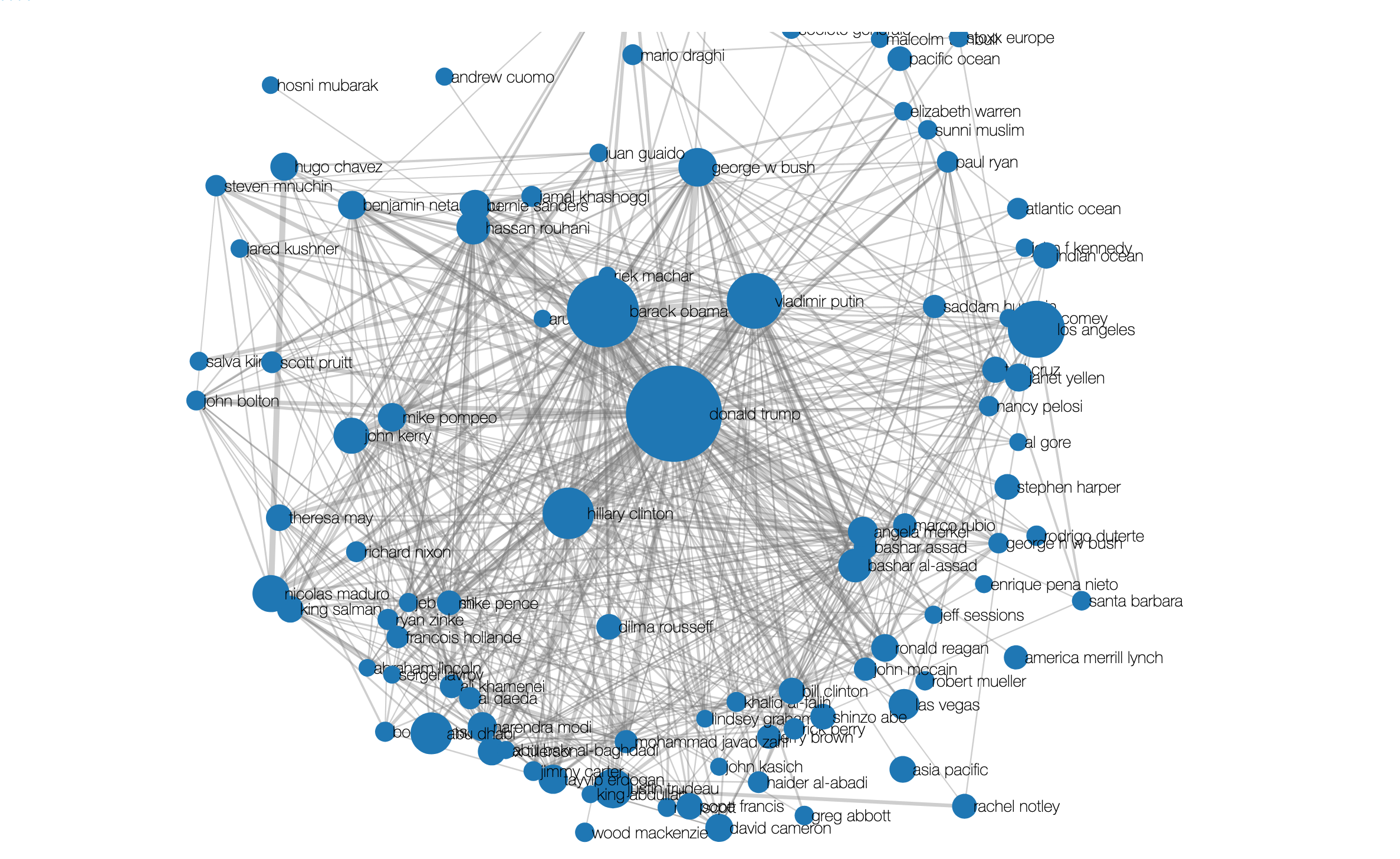

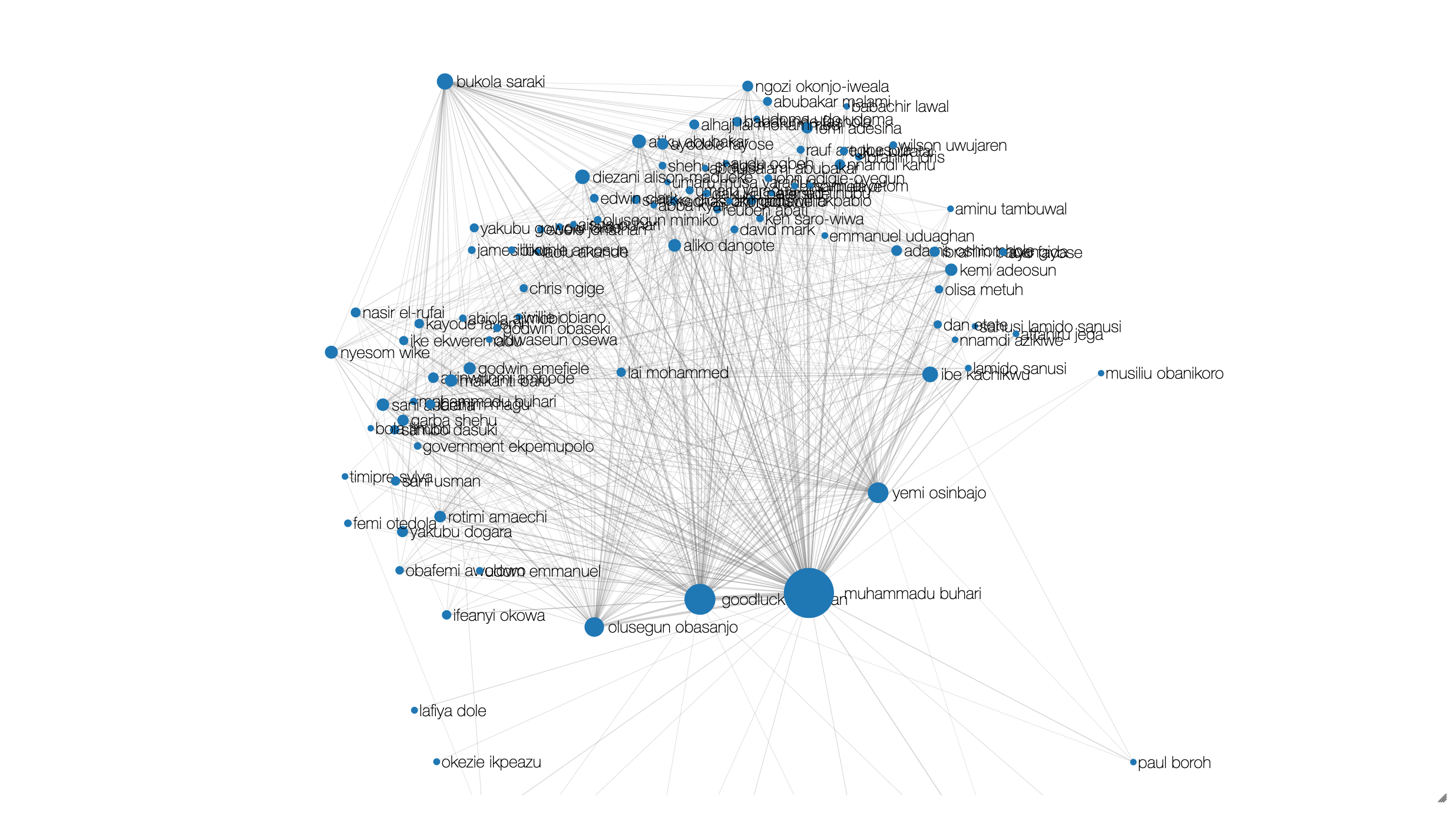

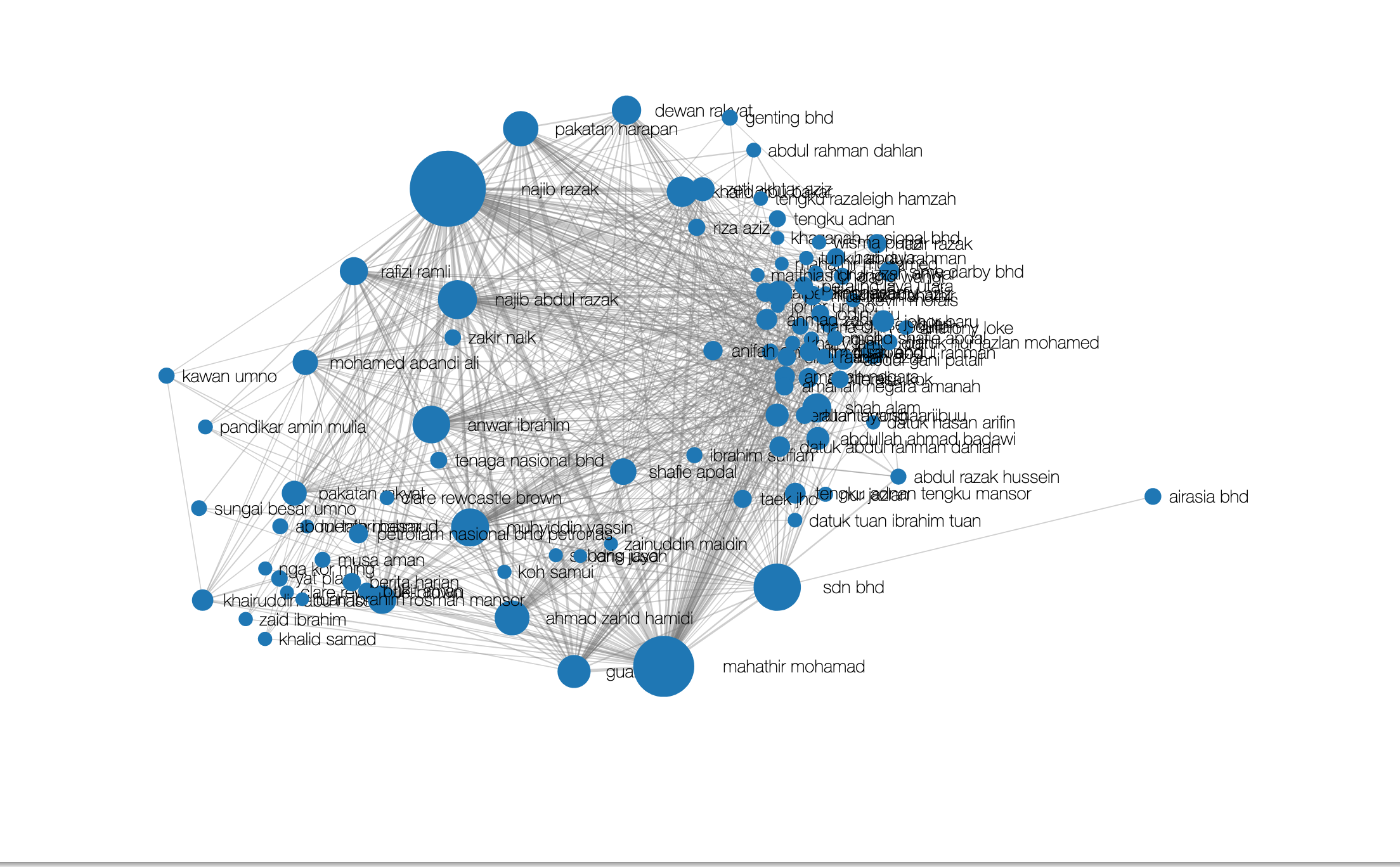

Here we will build a pipeline to investigate possible events related to oil and gas that are reported in mass media around the world and their possible co-occurrence with certain trends and trend-reversals in oil price.

Steps:

- Step 0. Setting up and loading GDELT delta.io tables

- Step 1. Extracting coverage around gas and oil from each country

- Step 2. Extracting the news around dates with high coverage (big events)

- Step 3. Enriching oil price with trend calculus and comparing it to the coverage

Resources:

This builds on the following libraries and their antecedents therein:

This work was inspired by:

- Antoine Amend's texata-2017

- Andrew Morgan's Trend Calculus Library

import spark.implicits._

import io.delta.tables._

import com.aamend.spark.gdelt._

import org.apache.spark.sql.{Dataset,DataFrame,SaveMode}

import org.apache.spark.sql.functions._

import org.apache.spark.sql.expressions._

import org.lamastex.spark.trendcalculus._

import java.sql.{Date,Timestamp}

import java.text.SimpleDateFormat

Loading in the data from our live-updating delta.io tables

"./000a_finance_utils"

defined object TrendUtils

"./000b_gdelt_utils"

defined object GdeltUtils

val rootPath = GdeltUtils.getGdeltV1Path

val rootCheckpointPath = GdeltUtils.getEOICheckpointPath

val gkgPath = rootPath+"gkg"

val eventPath = rootPath+"events"

val gkg_v1 = spark.read.format("delta").load(gkgPath).as[GKGEventV1]

val eve_v1 = spark.read.format("delta").load(eventPath).as[EventV1]

Step 1. Extracting coverage around gas and oil from each country

Limits the data to publish dates between 2013-04-01 and 2019-12-31 and extracts the events tagged with the oil or gas themes (ENV_OIL, ENV_GAS).

val gkg_v1_filt = gkg_v1.filter($"publishDate">"2013-04-01 00:00:00" && $"publishDate"<"2019-12-31 00:00:00")

val oil_gas_themeGKG = gkg_v1_filt.filter(c =>c.themes.contains("ENV_GAS") || c.themes.contains("ENV_OIL"))

.select(explode($"eventIds"))

.toDF("eventId")

.groupBy($"eventId")

.agg(count($"eventId"))

.toDF("eventId","count")

val oil_gas_eventDF = eve_v1.toDF()

.join( oil_gas_themeGKG, "eventId")
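The filter, explode and count steps above amount to counting how often each event ID appears in oil or gas themed records. A minimal in-memory sketch, assuming themes and event IDs are plain sequences; GkgRecord is a hypothetical stand-in, not the GKGEventV1 class from the library.

```scala
// Hypothetical, simplified record: only the two fields used here.
final case class GkgRecord(themes: Seq[String], eventIds: Seq[Long])

val oilGasThemes = Set("ENV_GAS", "ENV_OIL")

// Keep records carrying at least one of the themes, flatten their event IDs
// (the explode step) and count the occurrences of each ID (the groupBy/count step).
def mentionsPerEvent(records: Seq[GkgRecord]): Map[Long, Int] =
  records
    .filter(_.themes.exists(oilGasThemes.contains))
    .flatMap(_.eventIds)
    .groupBy(identity)
    .map { case (eventId, hits) => eventId -> hits.size }

// Toy records for illustration only.
val demoRecords = Seq(
  GkgRecord(Seq("ENV_OIL", "TAX"), Seq(1L, 2L)),
  GkgRecord(Seq("ENV_GAS"), Seq(2L)),
  GkgRecord(Seq("SPORT"), Seq(3L))
)
val demoCounts = mentionsPerEvent(demoRecords)
```

Here demoCounts is Map(1 -> 1, 2 -> 2); event 3 is dropped because its record carries neither theme, just as the inner join on eventId drops events without a matching count.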

Checkpoint

oil_gas_eventDF.write.parquet(rootCheckpointPath + "oil_gas_event_v1")

val oil_gas_eventDF = spark.read.parquet(rootCheckpointPath + "oil_gas_event_v1")

Extracting coverage for each country

// Counting number of articles for each country, each day and applying a moving average on each country's coverage

def movingAverage(df:DataFrame,size:Int,avgOn:String):DataFrame = {

val windowSpec = Window.partitionBy($"country").orderBy($"date").rowsBetween(-size/2, size/2)

return df.withColumn("coverage",avg(avgOn).over(windowSpec))

}

val oilEventTemp = oil_gas_eventDF

.filter(length(col("eventGeo.countryCode")) > 0)

.groupBy(

col("eventGeo.countryCode").as("country"),

col("eventDay").as("date")

)

.agg(

sum(col("numArticles")).as("articles")

)

// Global mean and standard deviation of the daily article counts (needs oilEventTemp, so it is computed after it).

val (mean_articles, std_articles) = oilEventTemp.select(mean("articles"), stddev("articles"))

.as[(Double, Double)]

.first()

// Applying a 7-row (weekly) moving average to the normalized number of articles.

val oilEventWeeklyCoverage = movingAverage(oilEventTemp.withColumn("normArticles",

($"articles"-mean_articles) / std_articles),

7,

"normArticles")

.drop("normArticles")

movingAverage: (df: org.apache.spark.sql.DataFrame, size: Int, avgOn: String)org.apache.spark.sql.DataFrame

oilEventTemp: org.apache.spark.sql.DataFrame = [country: string, date: date ... 1 more field]

mean_articles: Double = 1593.3213115310818

std_articles: Double = 10276.10388376719

oilEventWeeklyCoverage: org.apache.spark.sql.DataFrame = [country: string, date: date ... 2 more fields]
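The two numerical steps in this cell, normalization and the centered moving average, can be sketched on a plain vector. This is an illustration under stated assumptions: meanAndStd uses the sample standard deviation (n - 1 in the denominator), and the window is shortened at the edges of the series, averaging only the rows that exist. The function names and toy data are hypothetical.

```scala
// Sample mean and sample standard deviation (n - 1 in the denominator).
def meanAndStd(xs: Vector[Double]): (Double, Double) = {
  val mean = xs.sum / xs.length
  val variance = xs.map(x => (x - mean) * (x - mean)).sum / (xs.length - 1)
  (mean, math.sqrt(variance))
}

// Average over the rows i - size/2 .. i + size/2, truncated at the ends of the series.
def centeredMovingAverage(xs: Vector[Double], size: Int): Vector[Double] = {
  val half = size / 2
  xs.indices.map { i =>
    val window = xs.slice(math.max(0, i - half), math.min(xs.length, i + half + 1))
    window.sum / window.length
  }.toVector
}

// Toy daily article counts for a single country.
val demoArticles = Vector(1.0, 2.0, 3.0, 4.0, 5.0)
val demoStats = meanAndStd(demoArticles)
val demoNormalised = demoArticles.map(a => (a - demoStats._1) / demoStats._2)
val demoCoverage = centeredMovingAverage(demoNormalised, 3)
```

With the values printed above, a country-day with 10000 articles would get a normalized score of about (10000 - 1593.32) / 10276.10 ≈ 0.82 before smoothing. Note that the window in the notebook is defined over rows, not calendar days, so for a country with missing days the 7 rows can span more than a week.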

Checkpoint

oilEventWeeklyCoverage.write.parquet(rootCheckpointPath +"oil_gas_cov_norm")

val oil_gas_cov_norm = spark.read.parquet(rootCheckpointPath +"oil_gas_cov_norm")

Enrich the event data with the extracted coverage

val oilEventWeeklyCoverageC = oil_gas_cov_norm.drop($"articles").toDF("country","tempDate","coverage")

val oilEventCoverageDF = oilEventWeeklyCoverageC.join(oil_gas_eventDF,oil_gas_eventDF("eventDay") === oilEventWeeklyCoverageC("tempDate") && oil_gas_eventDF("eventGeo.countryCode")

=== oilEventWeeklyCoverageC("country"))
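The join above attaches to every event the coverage value of its country and day. A minimal sketch of the same inner join on in-memory collections, assuming one coverage row per (country, date) pair; Event, Coverage and attachCoverage are hypothetical names.

```scala
import java.time.LocalDate

// Hypothetical, simplified rows: only the join keys and the coverage value.
final case class Event(eventId: Long, countryCode: String, eventDay: LocalDate)
final case class Coverage(country: String, date: LocalDate, coverage: Double)

// Inner join on (country, date): events without a coverage row are dropped.
def attachCoverage(events: Seq[Event], coverage: Seq[Coverage]): Seq[(Event, Double)] = {
  val byKey = coverage.map(c => (c.country, c.date) -> c.coverage).toMap
  events.flatMap(e => byKey.get((e.countryCode, e.eventDay)).map(cov => e -> cov))
}
```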

Checkpoint

oilEventCoverageDF.write.parquet(rootCheckpointPath+"oil_gas_eve_cov")

val oilEventCoverageDF = spark.read.parquet(rootCheckpointPath+"oil_gas_eve_cov")

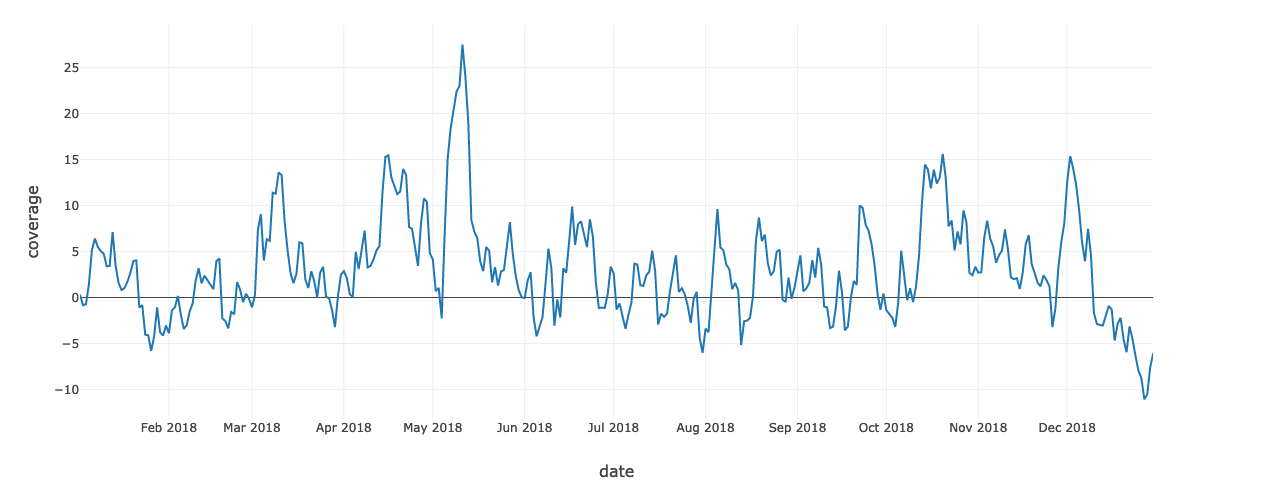

Let us look at 2018

val tot_cov_2018 = oil_gas_cov_norm.filter($"date" >"2018-01-01" && $"date"<"2018-12-31").groupBy($"date").agg(sum($"coverage").as("coverage")).orderBy(desc("coverage"))
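The aggregation above sums the per-country coverage for each day and sorts the days by that total. A small in-memory sketch of the same logic; CountryCoverage and totalCoverage are hypothetical names. Both date bounds are strict, as in the filter above, so 2018-01-01 and 2018-12-31 themselves are excluded.

```scala
import java.time.LocalDate

// Hypothetical, simplified row of the coverage table.
final case class CountryCoverage(country: String, date: LocalDate, coverage: Double)

// Sum coverage over all countries per day, strictly between the two bounds,
// and order the days from highest to lowest total.
def totalCoverage(
    rows: Seq[CountryCoverage],
    after: LocalDate,
    before: LocalDate
): Seq[(LocalDate, Double)] =
  rows
    .filter(r => r.date.isAfter(after) && r.date.isBefore(before))
    .groupBy(_.date)
    .map { case (date, rs) => date -> rs.map(_.coverage).sum }
    .toSeq
    .sortBy { case (_, total) => -total }
```

Since the coverage is a smoothed z-score, totals can be negative on days when most countries are below the global average, which is what the lower part of the table shows.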

Total Coverage

display(tot_cov_2018)

| date | coverage |

|---|---|

| 2018-05-11 | 27.486972103755587 |

| 2018-05-12 | 23.96793050873498 |

| 2018-05-10 | 22.996724752589337 |

| 2018-05-09 | 22.386286223280464 |

| 2018-05-08 | 20.1775002337218 |

| 2018-05-13 | 18.849262389568942 |

| 2018-05-07 | 18.356561615113378 |

| 2018-10-20 | 15.61132729731101 |

| 2018-04-16 | 15.462361791574757 |

| 2018-12-02 | 15.35916815092816 |

| 2018-04-15 | 15.283319413257985 |

| 2018-05-06 | 15.074567688377858 |

| 2018-10-14 | 14.456182009909384 |

| 2018-12-03 | 14.029036688423556 |

| 2018-04-21 | 13.984623515510002 |

| 2018-10-15 | 13.913223469927237 |

| 2018-10-17 | 13.899999502069956 |

| 2018-03-10 | 13.596583766909582 |

| 2018-03-11 | 13.328385180680641 |

| 2018-04-22 | 13.313579680574394 |

| 2018-04-17 | 13.06945600192825 |

| 2018-10-21 | 13.05918925998796 |

| 2018-10-19 | 13.042145921984297 |

| 2018-12-01 | 12.686288838799623 |

| 2018-10-18 | 12.395778148350379 |

| 2018-12-04 | 12.259894218727634 |

| 2018-04-18 | 12.19829841568054 |

| 2018-10-16 | 11.886229012014399 |

| 2018-04-20 | 11.528415547014669 |

| 2018-03-08 | 11.404094561394555 |

| 2018-04-14 | 11.330716620584942 |

| 2018-03-09 | 11.287064996907633 |

| 2018-04-19 | 11.254357433090025 |

| 2018-04-28 | 10.78023000863521 |

| 2018-04-29 | 10.393243293127174 |

| 2018-10-13 | 10.336221435744559 |

| 2018-09-22 | 10.000035737769323 |

| 2018-06-17 | 9.89640767482373 |

| 2018-09-23 | 9.743320330587773 |

| 2018-08-05 | 9.629453375016213 |

| 2018-12-05 | 9.51893722763343 |

| 2018-10-27 | 9.478760481207281 |

| 2018-03-04 | 9.062493219829184 |

| 2018-08-19 | 8.696936272089726 |

| 2018-06-23 | 8.508148813945867 |

| 2018-05-14 | 8.433635112149537 |

| 2018-10-23 | 8.378055403769487 |

| 2018-11-04 | 8.361164257492085 |

| 2018-03-12 | 8.332151356942868 |

| 2018-06-20 | 8.288965286725801 |

| 2018-05-27 | 8.195992626048099 |

| 2018-10-28 | 8.172456609861717 |

| 2018-11-30 | 8.076037057921956 |

| 2018-04-27 | 7.992062718099141 |

| 2018-06-19 | 7.982720769955921 |

| 2018-09-24 | 7.928409505440399 |

| 2018-10-22 | 7.7411860313545 |

| 2018-04-23 | 7.671714791621996 |

| 2018-12-08 | 7.477213251581839 |

| 2018-04-24 | 7.470870941142693 |

| 2018-11-10 | 7.411391505403686 |

| 2018-03-03 | 7.325979536642015 |

| 2018-04-08 | 7.268136025226444 |

| 2018-09-25 | 7.248527813504637 |

| 2018-10-25 | 7.192913236533858 |

| 2018-05-15 | 7.168859614571561 |

| 2018-01-13 | 7.126823571685313 |

| 2018-05-05 | 6.906878404112484 |

| 2018-08-21 | 6.841289525780963 |

| 2018-11-18 | 6.796834601675052 |

| 2018-06-21 | 6.764683340770092 |

| 2018-06-24 | 6.596574538956665 |

| 2018-11-03 | 6.522762585872373 |

| 2018-05-16 | 6.48615923713745 |

| 2018-01-07 | 6.420844453924965 |

| 2018-11-05 | 6.417688909844269 |

| 2018-03-06 | 6.349945149300961 |

| 2018-08-18 | 6.187501294449016 |

| 2018-03-07 | 6.172352349287472 |

| 2018-08-20 | 6.13211999534919 |

| 2018-06-16 | 6.118537632718658 |

| 2018-11-29 | 6.065241569765681 |

| 2018-03-17 | 6.006603083752083 |

| 2018-12-06 | 5.996538534079764 |

| 2018-03-18 | 5.910026828166202 |

| 2018-11-17 | 5.808400635657502 |

| 2018-09-26 | 5.801206664345313 |

| 2018-10-26 | 5.779325153878007 |

| 2018-06-18 | 5.734887914637875 |

| 2018-05-26 | 5.602190842490095 |

| 2018-04-13 | 5.596783148609628 |

| 2018-11-06 | 5.576395930577082 |

| 2018-01-08 | 5.517962782003598 |

| 2018-06-22 | 5.497506327930426 |

| 2018-05-19 | 5.490496043971789 |

| 2018-04-25 | 5.466491283594766 |

| 2018-08-06 | 5.445391605702541 |

| 2018-09-08 | 5.4006446510414 |

| 2018-04-07 | 5.382023244868721 |

| 2018-06-09 | 5.3258559201817555 |

| 2018-08-04 | 5.245437247626126 |

| 2018-11-11 | 5.241172880707882 |

| 2018-08-26 | 5.204499498662823 |

| 2018-03-13 | 5.185461263940412 |

| 2018-08-07 | 5.135946238463144 |

| 2018-10-24 | 5.134761205866823 |

| 2018-01-06 | 5.128352030068818 |

| 2018-04-12 | 5.125693671750433 |

| 2018-11-09 | 5.1252556486967205 |

| 2018-10-06 | 5.09820222957644 |

| 2018-05-20 | 5.095244730309911 |

| 2018-07-14 | 5.0886378741637985 |

| 2018-01-09 | 5.049410466223003 |

| 2018-08-25 | 4.980661941915239 |

| 2018-04-05 | 4.9786741555894025 |

| 2018-04-30 | 4.819035426044705 |

| 2018-01-10 | 4.76593736234763 |

| 2018-05-28 | 4.68026580074614 |

| 2018-12-09 | 4.625354233483328 |

| 2018-11-08 | 4.6069033872765415 |

| 2018-09-02 | 4.595944833281466 |

| 2018-07-22 | 4.593393861441349 |

| 2018-10-12 | 4.524520721372113 |

| 2018-02-18 | 4.255421749359614 |

| 2018-04-11 | 4.15326769550994 |

| 2018-05-01 | 4.148203765592582 |

| 2018-09-06 | 4.074638491116442 |

| 2018-01-21 | 4.040933228369039 |

| 2018-03-05 | 4.028081329371769 |

| 2018-01-20 | 3.983184963690456 |

| 2018-02-17 | 3.9592179437267587 |

| 2018-12-07 | 3.928415210549354 |

| 2018-05-17 | 3.900997379661076 |

| 2018-11-07 | 3.781090171979379 |

| 2018-08-22 | 3.7540960066842066 |

| 2018-07-08 | 3.691214257139836 |

| 2018-11-19 | 3.6381439060823175 |

| 2018-07-09 | 3.587919930383572 |

| 2018-08-08 | 3.582098553125515 |

| 2018-09-09 | 3.5759640874830154 |

| 2018-09-27 | 3.569020349975437 |

| 2018-01-14 | 3.4918510250085406 |

| 2018-04-26 | 3.4455823158435064 |

| 2018-01-12 | 3.4339601863531612 |

| 2018-04-10 | 3.4318089048960854 |

| 2018-01-11 | 3.4028826063454725 |

| 2018-06-30 | 3.355114219075089 |

| 2018-10-31 | 3.3540417080572116 |

| 2018-03-25 | 3.3523733004600995 |

| 2018-05-22 | 3.3116791760493247 |

| 2018-04-09 | 3.284132718620538 |

| 2018-11-28 | 3.2337105614328596 |

| 2018-02-11 | 3.1992058723611114 |

| 2018-06-14 | 3.1851964256157874 |

| 2018-06-10 | 3.161260627929845 |

| 2018-04-06 | 3.110227198132983 |

| 2018-08-09 | 3.0843001500412646 |

| 2018-05-25 | 3.0016326095327823 |

| 2018-09-01 | 2.9837093513174255 |

| 2018-09-15 | 2.9179880634576563 |

| 2018-07-21 | 2.8933506992926987 |

| 2018-07-15 | 2.8759383920429187 |

| 2018-05-18 | 2.8735511886835856 |

| 2018-04-01 | 2.8681500129386905 |

| 2018-03-21 | 2.859885597017876 |

| 2018-08-24 | 2.8589575441530255 |

| 2018-05-24 | 2.83382654942222 |

| 2018-07-13 | 2.787470623222106 |

| 2018-11-16 | 2.7626001178609734 |

| 2018-06-03 | 2.75461670243995 |

| 2018-11-02 | 2.74905636125065 |

| 2018-11-01 | 2.712890648324257 |

| 2018-03-24 | 2.705494621418867 |

| 2018-01-19 | 2.6930317927735197 |

| 2018-06-15 | 2.6915907378300803 |

| 2018-10-29 | 2.686991403988603 |

| 2018-11-20 | 2.668421959015086 |

| 2018-03-14 | 2.6656798705206937 |

| 2018-07-01 | 2.639377107255994 |

| 2018-03-16 | 2.567052974029147 |

| 2018-03-31 | 2.4768260481588094 |

| 2018-07-12 | 2.4322463716166536 |

| 2018-10-30 | 2.4248784673751347 |

| 2018-11-23 | 2.4229459696536297 |

| 2018-08-23 | 2.40527330970895 |

| 2018-02-13 | 2.3758812754650664 |

| 2018-05-29 | 2.316169222449841 |

| 2018-10-07 | 2.3156859613446947 |

| 2018-08-29 | 2.1799793376147036 |

| 2018-11-12 | 2.1693098056774884 |

| 2018-09-07 | 2.1595089361052366 |

| 2018-04-02 | 2.1315521682622944 |

| 2018-11-14 | 2.1134659160565357 |

| 2018-11-13 | 2.0047810335864957 |

| 2018-03-19 | 1.9628808187501918 |

| 2018-02-14 | 1.9227148290299392 |

| 2018-11-24 | 1.906880362157891 |

| 2018-06-02 | 1.8526803126666398 |

| 2018-02-10 | 1.8259332815621994 |

| 2018-03-22 | 1.8111175258908978 |

| 2018-09-20 | 1.8086046133719123 |

| 2018-01-18 | 1.7316578551283315 |

| 2018-02-24 | 1.679820897111581 |

| 2018-05-21 | 1.666972371077739 |

| 2018-06-25 | 1.648684473303101 |

| 2018-11-21 | 1.6143364878774755 |

| 2018-08-11 | 1.6057134493921184 |

| 2018-09-05 | 1.6002086245984404 |

| 2018-01-15 | 1.5938763419330733 |

| 2018-03-15 | 1.5571475346089114 |

| 2018-02-12 | 1.5543185252081144 |

| 2018-02-15 | 1.4696386764646396 |

| 2018-01-05 | 1.456344971425907 |

| 2018-06-08 | 1.4293196749694965 |

| 2018-09-21 | 1.382626912966169 |

| 2018-07-10 | 1.3382347067653138 |

| 2018-05-23 | 1.306861677680712 |

| 2018-08-03 | 1.2780186081652762 |

| 2018-07-11 | 1.2738690114677595 |

| 2018-10-11 | 1.2464405605483622 |

| 2018-11-25 | 1.2250799832621464 |

| 2018-11-22 | 1.214306483368349 |

| 2018-08-31 | 1.0760382222088678 |

| 2018-05-03 | 1.0552549601062509 |

| 2018-03-20 | 1.0422082525834733 |

| 2018-01-17 | 1.0252444061854975 |

| 2018-07-24 | 1.0143208574559224 |

| 2018-10-09 | 1.0071266127941847 |

| 2018-09-04 | 1.0005788282401595 |

| 2018-11-15 | 0.9223148101050318 |

| 2018-02-16 | 0.9074606306465425 |

| 2018-08-10 | 0.9036341501072411 |

| 2018-02-25 | 0.8639406420071154 |

| 2018-01-16 | 0.826683836667204 |

| 2018-08-12 | 0.7744852475885409 |

| 2018-05-30 | 0.7712897297484758 |

| 2018-07-20 | 0.7028110841076054 |

| 2018-05-02 | 0.6991097660288634 |

| 2018-09-03 | 0.683775948999076 |

| 2018-07-29 | 0.6335306876798337 |

| 2018-07-23 | 0.6152813959254133 |

| 2018-09-16 | 0.6123061206765863 |

| 2018-09-28 | 0.4523549405630516 |

| 2018-09-30 | 0.4515656786872553 |

| 2018-02-27 | 0.41566505849738844 |

| 2018-06-29 | 0.3820426144303619 |

| 2018-04-03 | 0.370570192088862 |

| 2018-07-25 | 0.3567793924043352 |

| 2018-01-02 | 0.3212670677455316 |

| 2018-03-02 | 0.25908410397409654 |

| 2018-03-30 | 0.17316334177262105 |

| 2018-02-04 | 0.16616025932341172 |

| 2018-08-17 | 0.1629558992156963 |

| 2018-03-26 | 6.727633754213702e-2 |

| 2018-04-04 | 6.601144887406996e-2 |

| 2018-03-23 | 3.179181663940911e-2 |

| 2018-05-31 | 3.136427694204036e-2 |

| 2018-06-01 | -4.39999461099605e-2 |

| 2018-03-27 | -4.958289477016642e-2 |

| 2018-09-19 | -5.8010942496990126e-2 |

| 2018-07-28 | -0.11868598530518337 |

| 2018-02-28 | -0.1254175938875366 |

| 2018-08-30 | -0.13668850733493443 |

| 2018-06-12 | -0.2045192799011617 |

| 2018-08-27 | -0.23392175194313714 |

| 2018-10-08 | -0.2850772445866747 |

| 2018-08-28 | -0.47637045929834043 |

| 2018-10-05 | -0.4849237606022401 |

| 2018-10-10 | -0.4941231461582305 |

| 2018-02-26 | -0.5065333868479032 |

| 2018-07-07 | -0.5303029545852589 |

| 2018-07-03 | -0.6413684311625434 |

| 2018-02-09 | -0.6442009502015447 |

| 2018-01-04 | -0.7244459631773861 |

| 2018-01-23 | -0.8192604176824385 |

| 2018-01-03 | -0.8225724376179675 |

| 2018-07-26 | -0.9098762772299227 |

| 2018-12-15 | -0.9122642095213793 |

| 2018-09-14 | -0.9531946203004753 |

| 2018-09-10 | -0.9810538927641539 |

| 2018-03-01 | -1.0602110951733992 |

| 2018-01-28 | -1.0608057763149794 |

| 2018-09-11 | -1.0616430797274217 |

| 2018-02-03 | -1.0717219413020924 |

| 2018-01-22 | -1.0789334608052936 |

| 2018-06-27 | -1.0891339752023268 |

| 2018-06-28 | -1.130141051322766 |

| 2018-06-26 | -1.137654948062775 |

| 2018-11-27 | -1.1812408908959169 |

| 2018-07-02 | -1.3086446749179 |

| 2018-12-16 | -1.309809287500618 |

| 2018-09-29 | -1.3248856236581052 |

| 2018-03-28 | -1.3552367963698195 |

| 2018-10-01 | -1.3870131165921822 |

| 2018-02-02 | -1.3895744806721257 |

| 2018-02-08 | -1.4776949758916373 |

| 2018-02-22 | -1.5599837016132585 |

| 2018-12-10 | -1.6813019271362846 |

| 2018-07-17 | -1.74591448327502 |

| 2018-07-19 | -1.7573347621843314 |

| 2018-10-02 | -1.8101306308776306 |

| 2018-02-23 | -1.8113157090322092 |

| 2018-02-05 | -1.8461329397893045 |

| 2018-07-06 | -1.8676357265862635 |

| 2018-07-04 | -1.9752014850954325 |

| 2018-12-14 | -1.9804298977904016 |

| 2018-07-18 | -2.090632333608432 |

| 2018-06-07 | -2.142892866101778 |

| 2018-06-04 | -2.1431848510987486 |

| 2018-06-13 | -2.1665641636390136 |

| 2018-12-19 | -2.1964153243240783 |

| 2018-10-03 | -2.200248596348493 |

| 2018-08-16 | -2.2183974753427886 |

| 2018-05-04 | -2.2567422734823293 |

| 2018-02-19 | -2.260530603583992 |

| 2018-08-15 | -2.504414714014903 |

| 2018-02-20 | -2.5476634565787677 |

| 2018-08-14 | -2.582734658569964 |

| 2018-07-27 | -2.748020923559909 |

| 2018-12-18 | -2.8451915413795694 |

| 2018-12-11 | -2.8570741374604642 |

| 2018-07-16 | -2.930993932605596 |

| 2018-12-12 | -2.9849609775754398 |

| 2018-02-07 | -2.9921828893184763 |

| 2018-12-13 | -3.035720198357624 |

| 2018-01-31 | -3.0422540354753624 |

| 2018-06-11 | -3.0482803401492116 |

| 2018-06-06 | -3.1153431364601376 |

| 2018-12-22 | -3.128129764637562 |

| 2018-09-13 | -3.14691361777108 |

| 2018-09-18 | -3.1473488148909317 |

| 2018-10-04 | -3.1826525202627054 |

| 2018-11-26 | -3.206987780540401 |

| 2018-03-29 | -3.2177095470263226 |

| 2018-09-12 | -3.3188840423945587 |

| 2018-02-21 | -3.3415718617878376 |

| 2018-08-01 | -3.374807833645513 |

| 2018-07-05 | -3.401297748343711 |

| 2018-02-06 | -3.41801131537882 |

| 2018-09-17 | -3.5595776490375344 |

| 2018-01-29 | -3.75670988031478 |

| 2018-08-02 | -3.766465397939953 |

| 2018-02-01 | -3.8767280752294666 |

| 2018-01-24 | -4.0353868362684135 |

| 2018-01-25 | -4.099231233918816 |

| 2018-01-30 | -4.132258540931211 |

| 2018-06-05 | -4.204603868662037 |

| 2018-01-27 | -4.225338770320211 |

| 2018-07-30 | -4.340032591506121 |

| 2018-12-23 | -4.496748804270663 |

| 2018-12-20 | -4.530356321068381 |

| 2018-12-17 | -4.685629860910394 |

| 2018-08-13 | -5.158738574164472 |

| 2018-01-26 | -5.786964396963983 |

| 2018-12-21 | -5.938428867782927 |

| 2018-07-31 | -6.001366042606405 |

| 2018-12-30 | -6.048890091027438 |

| 2018-12-24 | -6.303273492337473 |

| 2018-12-29 | -7.5290821169025826 |

| 2018-12-25 | -7.942541335592582 |

| 2018-12-26 | -8.732956905335165 |

| 2018-12-28 | -10.492764195593848 |

| 2018-12-27 | -11.056655636490078 |

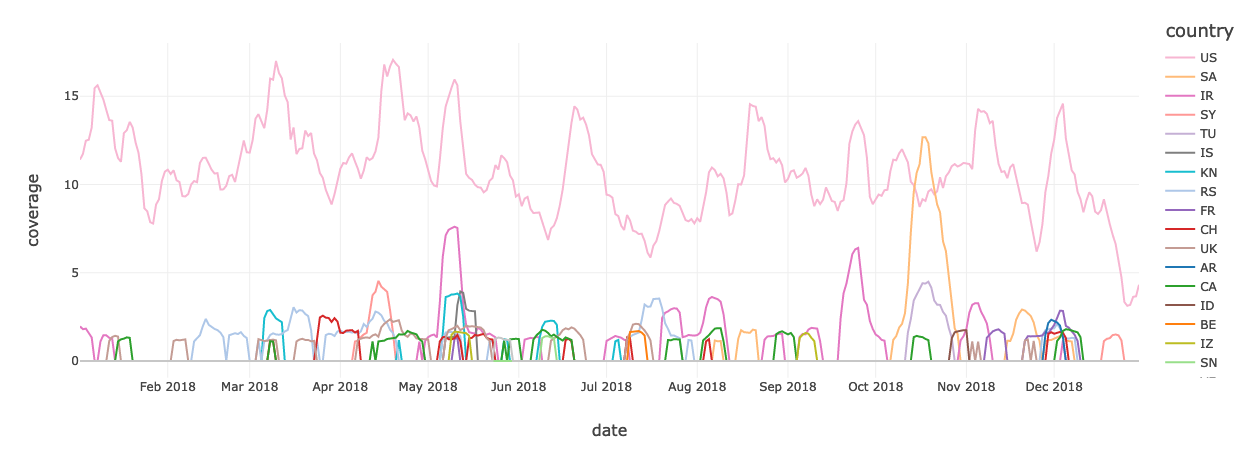

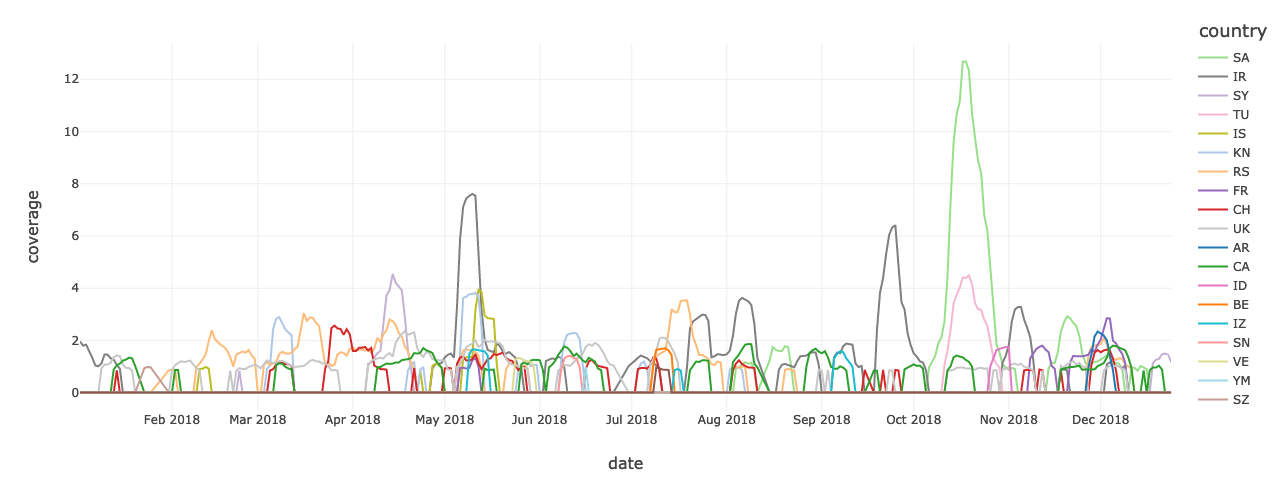

Coverage Grouped by Country

display(oil_gas_cov_norm.filter($"date" >"2018-01-01" && $"date"<"2018-12-31").orderBy(desc("coverage")).limit(1000))

| country | date | articles | coverage |

|---|---|---|---|

| US | 2018-04-19 | 293978.0 | 17.06571958044547 |

| US | 2018-03-10 | 121473.0 | 16.997419653664288 |

| US | 2018-04-20 | 128613.0 | 16.8411625445618 |

| US | 2018-04-16 | 121972.0 | 16.804795231624784 |

| US | 2018-04-18 | 325093.0 | 16.69630497544722 |

| US | 2018-04-21 | 94415.0 | 16.66300997755572 |

| US | 2018-03-11 | 192305.0 | 16.321746675576378 |

| US | 2018-04-17 | 198695.0 | 16.118084162377855 |

| US | 2018-03-12 | 121061.0 | 16.02109075933443 |

| US | 2018-03-08 | 167306.0 | 16.003087827280364 |

| US | 2018-05-10 | 239649.0 | 15.9504831207996 |

| US | 2018-03-09 | 172436.0 | 15.937985332447004 |

| US | 2018-05-11 | 156365.0 | 15.620396928759616 |

| US | 2018-01-08 | 125330.0 | 15.618951133444076 |

| US | 2018-05-09 | 250456.0 | 15.48443656158681 |

| US | 2018-01-07 | 75339.0 | 15.46596096567959 |

| US | 2018-04-15 | 49398.0 | 15.288016926844017 |

| US | 2018-01-09 | 135528.0 | 15.24454575423161 |

| US | 2018-04-22 | 75971.0 | 15.150165904258586 |

| US | 2018-03-13 | 254054.0 | 15.04659691290507 |

| US | 2018-05-08 | 249174.0 | 15.008311140799353 |

| US | 2018-01-10 | 269866.0 | 14.808527251572325 |

| US | 2018-03-14 | 156586.0 | 14.661737321411012 |

| US | 2018-12-04 | 143569.0 | 14.583942411932586 |

| US | 2018-08-19 | 39816.0 | 14.553163653965246 |

| US | 2018-08-20 | 147857.0 | 14.451221182341712 |

| US | 2018-06-20 | 191796.0 | 14.431702945581934 |

| US | 2018-05-07 | 122164.0 | 14.423876188248778 |

| US | 2018-08-21 | 249440.0 | 14.406957602681363 |

| US | 2018-11-05 | 120204.0 | 14.290807411707052 |

| US | 2018-06-21 | 184814.0 | 14.249073973848898 |

| US | 2018-03-07 | 205189.0 | 14.224467649728664 |

| US | 2018-01-11 | 200361.0 | 14.220547320123071 |

| US | 2018-12-03 | 152099.0 | 14.201279317167904 |

| US | 2018-11-07 | 309670.0 | 14.157238167556102 |

| US | 2018-11-06 | 122098.0 | 14.120787443350878 |

| US | 2018-04-24 | 185880.0 | 14.027617056766815 |

| US | 2018-11-08 | 194910.0 | 13.999187716187226 |

| US | 2018-03-04 | 64364.0 | 13.985730698250288 |

| US | 2018-04-25 | 216270.0 | 13.917486378981236 |

| US | 2018-04-27 | 156431.0 | 13.857416363996196 |

| US | 2018-08-23 | 137032.0 | 13.819353021939026 |

| US | 2018-06-23 | 120879.0 | 13.788852301532364 |

| US | 2018-12-02 | 91072.0 | 13.773893880767753 |

| US | 2018-03-03 | 47130.0 | 13.73437084151701 |

| US | 2018-06-22 | 218166.0 | 13.678457488064144 |

| US | 2018-01-12 | 203985.0 | 13.651376629653857 |

| US | 2018-04-23 | 105819.0 | 13.640894613616197 |

| US | 2018-01-13 | 65962.0 | 13.617164107812112 |

| US | 2018-11-10 | 64706.0 | 13.606612582384283 |

| US | 2018-09-25 | 270785.0 | 13.592029512326587 |

| US | 2018-08-22 | 224768.0 | 13.588415024037738 |

| US | 2018-04-26 | 185412.0 | 13.5723444530304 |

| US | 2018-05-12 | 74624.0 | 13.565184985842878 |

| US | 2018-01-19 | 188615.0 | 13.551477734101324 |

| US | 2018-03-05 | 125744.0 | 13.548697358494518 |

| US | 2018-06-19 | 137218.0 | 13.493937860918477 |

| US | 2018-11-09 | 144998.0 | 13.474364016646565 |

| US | 2018-09-24 | 184067.0 | 13.394525531097136 |

| US | 2018-06-24 | 51756.0 | 13.392064898685112 |

| US | 2018-08-24 | 143121.0 | 13.323584247489487 |

| US | 2018-01-06 | 97326.0 | 13.23302741397582 |

| US | 2018-01-20 | 182911.0 | 13.232971806463684 |

| US | 2018-03-16 | 102338.0 | 13.232457436976429 |

| US | 2018-04-28 | 86493.0 | 13.21701245048062 |

| US | 2018-03-06 | 177846.0 | 13.19096033104485 |

| US | 2018-05-06 | 32559.0 | 13.183856471369461 |

| US | 2018-09-26 | 159017.0 | 13.145681914288017 |

| US | 2018-11-04 | 72936.0 | 13.084555356572393 |

| US | 2018-01-18 | 160609.0 | 13.077229066848458 |

| US | 2018-03-20 | 145334.0 | 13.059212232916357 |

| US | 2018-09-23 | 65296.0 | 13.002534276171621 |

| US | 2018-03-22 | 148019.0 | 12.917079431896443 |

| US | 2018-01-17 | 157335.0 | 12.912283283974704 |

| US | 2018-09-27 | 122838.0 | 12.795479704732795 |

| US | 2018-06-25 | 90453.0 | 12.78376042155011 |

| US | 2018-03-21 | 177917.0 | 12.748171613782992 |

| SA | 2018-10-18 | 101417.0 | 12.684765148069788 |

| SA | 2018-10-17 | 194570.0 | 12.679829981367707 |

| US | 2018-04-14 | 52822.0 | 12.663425765287553 |

| US | 2018-12-05 | 175971.0 | 12.61896755933473 |

| US | 2018-08-18 | 105451.0 | 12.58164101681336 |

| US | 2018-03-15 | 145679.0 | 12.549889127383638 |

| US | 2018-01-05 | 230917.0 | 12.53889274185872 |

| US | 2018-12-01 | 198620.0 | 12.532331055426658 |

| US | 2018-03-02 | 198169.0 | 12.500885007313688 |

| US | 2018-02-27 | 89118.0 | 12.491028575787562 |

| US | 2018-01-04 | 189356.0 | 12.48233990201629 |

| US | 2018-01-21 | 44909.0 | 12.383150002243495 |

| SA | 2018-10-19 | 73494.0 | 12.331977189200233 |

| US | 2018-09-22 | 85630.0 | 12.31143021346594 |

| US | 2018-11-11 | 61567.0 | 12.179709919093515 |

| US | 2018-06-18 | 131499.0 | 12.142897746059406 |

| US | 2018-01-14 | 33044.0 | 12.052771870764795 |

| US | 2018-03-19 | 170160.0 | 12.050116612060297 |

| US | 2018-05-13 | 66083.0 | 12.02158995833447 |

| US | 2018-03-18 | 40394.0 | 12.01758621746067 |

| US | 2018-10-10 | 176220.0 | 12.002155132842898 |

| US | 2018-08-25 | 46571.0 | 11.99847113516388 |

| US | 2018-04-29 | 51144.0 | 11.917756831298309 |

| US | 2018-04-13 | 178010.0 | 11.870893600445594 |

| US | 2018-02-28 | 187108.0 | 11.82981355085504 |

| US | 2018-03-01 | 198743.0 | 11.80548526429549 |

| US | 2018-01-22 | 118502.0 | 11.7943359581122 |

| US | 2018-11-30 | 131668.0 | 11.764530331607242 |

| US | 2018-04-05 | 151628.0 | 11.763904747095708 |

| US | 2018-10-09 | 187842.0 | 11.761305095903344 |

| US | 2018-12-06 | 139691.0 | 11.756884298688522 |

| US | 2018-03-23 | 174925.0 | 11.754715605715216 |

| US | 2018-01-03 | 109245.0 | 11.73426594312716 |

| US | 2018-03-17 | 93789.0 | 11.721045257116797 |

| US | 2018-06-26 | 145159.0 | 11.720266751946893 |

| US | 2018-02-26 | 75722.0 | 11.688403647492896 |

| US | 2018-05-26 | 77976.0 | 11.6531901904327 |

| US | 2018-10-11 | 140387.0 | 11.62099344090589 |

| US | 2018-04-04 | 176372.0 | 11.548161501885613 |

| US | 2018-04-10 | 141686.0 | 11.541516404185346 |

| US | 2018-02-14 | 127538.0 | 11.518022230307839 |

| US | 2018-09-28 | 101232.0 | 11.507498508636077 |

| US | 2018-02-13 | 133936.0 | 11.506761709100273 |

| US | 2018-01-15 | 84388.0 | 11.500144415156077 |

| US | 2018-04-12 | 184872.0 | 11.498267661621483 |

| US | 2018-05-27 | 99428.0 | 11.497072100110556 |

| US | 2018-08-27 | 112195.0 | 11.488355622583217 |

| US | 2018-08-29 | 184301.0 | 11.448095783796672 |

| US | 2018-06-27 | 163254.0 | 11.447164357968393 |

| US | 2018-08-26 | 56428.0 | 11.435903836760827 |

| US | 2018-04-30 | 126325.0 | 11.431733273350618 |

| US | 2018-01-02 | 85597.0 | 11.41303524739485 |

| US | 2018-05-05 | 40375.0 | 11.409351249715835 |

| US | 2018-10-07 | 62963.0 | 11.407961061912431 |

| US | 2018-04-11 | 136299.0 | 11.38833161012838 |

| US | 2018-03-24 | 71415.0 | 11.37479118092324 |

| US | 2018-10-08 | 92961.0 | 11.371663258365583 |

| US | 2018-04-06 | 123941.0 | 11.30307139214568 |

| US | 2018-01-16 | 133067.0 | 11.28647254977305 |

| US | 2018-05-28 | 69840.0 | 11.266676275452593 |

| US | 2018-10-12 | 148567.0 | 11.254359211514444 |

| US | 2018-08-28 | 154121.0 | 11.245170070133952 |

| US | 2018-02-12 | 95901.0 | 11.223844589229751 |

| US | 2018-10-31 | 150322.0 | 11.222843654011301 |

| US | 2018-04-02 | 128317.0 | 11.218923324405706 |

| US | 2018-02-15 | 211996.0 | 11.193886042066419 |

| US | 2018-04-03 | 171774.0 | 11.180095379056663 |

| US | 2018-11-01 | 108141.0 | 11.171921074772655 |

| US | 2018-11-12 | 82452.0 | 11.163816279878814 |

| US | 2018-11-02 | 157228.0 | 11.159993263419455 |

| US | 2018-10-28 | 76599.0 | 11.156031228179758 |

| US | 2018-11-17 | 67482.0 | 11.151429706550493 |

| SA | 2018-10-16 | 232742.0 | 11.147328652530456 |

| US | 2018-06-28 | 141057.0 | 11.13551205620153 |

| US | 2018-06-29 | 141666.0 | 11.115076295491507 |

| US | 2018-05-24 | 146696.0 | 11.10189731511525 |

| US | 2018-10-30 | 143007.0 | 11.096322662023605 |

| US | 2018-08-30 | 140805.0 | 11.092749879368858 |

| US | 2018-10-27 | 52983.0 | 11.036808722159924 |

| US | 2018-10-29 | 121062.0 | 11.020446211713873 |

| US | 2018-06-17 | 64893.0 | 11.008448890970508 |

| US | 2018-11-16 | 112630.0 | 10.980547821756213 |

| US | 2018-08-06 | 129756.0 | 10.961307622557113 |

| US | 2018-02-25 | 66114.0 | 10.947350137010949 |

| US | 2018-09-07 | 95556.0 | 10.937368588582514 |

| US | 2018-04-01 | 25971.0 | 10.898387722575098 |

| US | 2018-04-07 | 63841.0 | 10.88479168585782 |

| US | 2018-11-03 | 62084.0 | 10.869318895605945 |

| US | 2018-05-25 | 177910.0 | 10.849327994993013 |

| US | 2018-05-01 | 139814.0 | 10.846380796849797 |

| US | 2018-02-01 | 140101.0 | 10.839874717929872 |

| US | 2018-02-16 | 139981.0 | 10.837261164859473 |

| US | 2018-08-07 | 158735.0 | 10.81875776519618 |

| US | 2018-02-03 | 80910.0 | 10.807830889061433 |

| US | 2018-12-07 | 159194.0 | 10.804369321430963 |

| US | 2018-09-03 | 41958.0 | 10.80388275569977 |

| US | 2018-04-09 | 95168.0 | 10.789855760763434 |

| US | 2018-11-14 | 207029.0 | 10.752431905095829 |

| US | 2018-10-06 | 48236.0 | 10.747343817735375 |

| US | 2018-09-02 | 30867.0 | 10.738543928939833 |

| US | 2018-01-31 | 173319.0 | 10.726921958903386 |

| US | 2018-06-30 | 101234.0 | 10.718636439595103 |

| US | 2018-11-13 | 131611.0 | 10.713840291673364 |

| SA | 2018-10-20 | 128817.0 | 10.709919962067769 |

| US | 2018-08-05 | 31112.0 | 10.692181165696349 |

| US | 2018-10-26 | 151770.0 | 10.69042952906406 |

| SA | 2018-10-15 | 141823.0 | 10.667699958478423 |

| US | 2018-03-25 | 52544.0 | 10.650322610935886 |

| US | 2018-02-18 | 26847.0 | 10.644177980844844 |

| US | 2018-09-06 | 145505.0 | 10.623491986330208 |

| US | 2018-02-17 | 80161.0 | 10.621587429039549 |

| US | 2018-08-09 | 137140.0 | 10.604668843472135 |

| US | 2018-02-02 | 177181.0 | 10.596633557968469 |

| US | 2018-05-14 | 98420.0 | 10.596522342944194 |

| US | 2018-01-23 | 110156.0 | 10.596007973456935 |

| US | 2018-12-08 | 57274.0 | 10.56952489580211 |

| US | 2018-02-23 | 140434.0 | 10.549186448238325 |

| US | 2018-08-17 | 146305.0 | 10.511957218863193 |

| US | 2018-05-29 | 157439.0 | 10.508398338086483 |

| US | 2018-09-08 | 67881.0 | 10.495636414051246 |

| US | 2018-11-29 | 108948.0 | 10.482360120528751 |

| US | 2018-09-05 | 214833.0 | 10.479148786702885 |

| US | 2018-02-22 | 145437.0 | 10.473087567880048 |

| US | 2018-10-25 | 117894.0 | 10.464134758426136 |

| US | 2018-08-08 | 174538.0 | 10.464093052792034 |

| US | 2018-11-18 | 33272.0 | 10.4360946704315 |

| US | 2018-10-23 | 118091.0 | 10.407623624217809 |

| US | 2018-09-04 | 168347.0 | 10.38582547946045 |

| US | 2018-03-26 | 86548.0 | 10.379958886930089 |

| US | 2018-05-15 | 101337.0 | 10.376942179396707 |

| US | 2018-05-23 | 120110.0 | 10.36708574787058 |

| US | 2018-11-15 | 121834.0 | 10.35907826612298 |

| US | 2018-08-10 | 94133.0 | 10.342326503091973 |

| US | 2018-04-08 | 41490.0 | 10.327701727400177 |

| US | 2018-09-01 | 61168.0 | 10.31409178880486 |

| US | 2018-05-16 | 139421.0 | 10.262515821298615 |

| US | 2018-02-04 | 40178.0 | 10.239786250712976 |

| US | 2018-05-30 | 108880.0 | 10.23472596710859 |

| US | 2018-05-22 | 99615.0 | 10.206060294602423 |

| US | 2018-05-02 | 122811.0 | 10.205253985676448 |

| US | 2018-01-30 | 104797.0 | 10.204836929335428 |

| US | 2018-02-10 | 79351.0 | 10.195953629271683 |

| US | 2018-03-31 | 37365.0 | 10.195397554150322 |

| US | 2018-10-13 | 65561.0 | 10.157973698482717 |

| US | 2018-02-05 | 56912.0 | 10.142973572084 |

| US | 2018-02-24 | 94693.0 | 10.14180581432914 |

| US | 2018-02-11 | 50163.0 | 10.12755638934426 |

| US | 2018-08-31 | 125628.0 | 10.116323671892767 |

| US | 2018-09-21 | 87025.0 | 10.073464181913854 |

| US | 2018-08-15 | 82951.0 | 10.017550828460989 |

| US | 2018-02-09 | 119630.0 | 10.00206413633108 |

| US | 2018-08-16 | 144365.0 | 9.997976984189078 |

| US | 2018-05-17 | 137140.0 | 9.99126237709864 |

| US | 2018-10-14 | 35545.0 | 9.96765698819686 |

| US | 2018-05-03 | 150451.0 | 9.94688758241402 |

| US | 2018-02-21 | 129163.0 | 9.927202523117833 |

| US | 2018-05-04 | 114325.0 | 9.889041867914427 |

| US | 2018-05-18 | 140570.0 | 9.84651602300833 |

| US | 2018-09-14 | 123342.0 | 9.836381553921525 |

| US | 2018-05-19 | 66393.0 | 9.822576989033735 |

| US | 2018-10-24 | 141746.0 | 9.798165291205978 |

| SA | 2018-10-21 | 50740.0 | 9.754541197935195 |

| US | 2018-11-19 | 127156.0 | 9.733215717030996 |

| US | 2018-10-20 | 96823.0 | 9.728767116060103 |

| US | 2018-02-20 | 118422.0 | 9.725180431527326 |

| US | 2018-02-19 | 70248.0 | 9.71888288077791 |

| US | 2018-06-16 | 53423.0 | 9.718146081242109 |

| US | 2018-03-27 | 118005.0 | 9.7100134825922 |

| US | 2018-10-05 | 120539.0 | 9.6986556482384 |

| US | 2018-05-21 | 88008.0 | 9.68696416881178 |

| US | 2018-10-04 | 142998.0 | 9.677218952309927 |

| US | 2018-10-22 | 104784.0 | 9.618970083347346 |

| US | 2018-10-19 | 95040.0 | 9.60205149777993 |

| US | 2018-12-09 | 29060.0 | 9.587315507063863 |

| US | 2018-11-28 | 86662.0 | 9.577403468025599 |

| SA | 2018-10-14 | 50385.0 | 9.574067017297429 |

| US | 2018-12-13 | 109585.0 | 9.55767670309531 |

| US | 2018-05-20 | 46571.0 | 9.554117822318602 |

| US | 2018-08-04 | 63962.0 | 9.5381445644575 |

| US | 2018-08-11 | 38450.0 | 9.53361255221841 |

| US | 2018-10-15 | 66588.0 | 9.47582244523095 |

| US | 2018-09-15 | 48527.0 | 9.46422827895057 |

| US | 2018-02-08 | 133137.0 | 9.460043813662326 |

| US | 2018-03-30 | 126734.0 | 9.447907474138619 |

| US | 2018-06-01 | 123365.0 | 9.444543219654383 |

| US | 2018-10-21 | 28694.0 | 9.439830483000849 |

| US | 2018-10-02 | 112407.0 | 9.432949053374003 |

| US | 2018-09-09 | 41250.0 | 9.423885028895816 |

| US | 2018-07-01 | 29338.0 | 9.423551383822998 |

| US | 2018-07-02 | 88983.0 | 9.381150655819212 |

| US | 2018-10-03 | 128700.0 | 9.359449824208093 |

| US | 2018-02-06 | 119989.0 | 9.342906589347596 |

| US | 2018-12-14 | 105697.0 | 9.339903783692248 |

| US | 2018-02-07 | 132458.0 | 9.321233561492546 |

| US | 2018-05-31 | 130123.0 | 9.318536597153946 |

| US | 2018-09-29 | 53523.0 | 9.305746869362638 |

| US | 2018-06-04 | 78904.0 | 9.298045228931786 |

| US | 2018-07-03 | 116642.0 | 9.256464711732006 |

| US | 2018-03-28 | 125804.0 | 9.236654535533516 |

| US | 2018-07-23 | 107114.0 | 9.206348441419332 |

| US | 2018-06-03 | 33524.0 | 9.203484654544322 |

| US | 2018-12-18 | 63736.0 | 9.169313838336679 |

| US | 2018-12-10 | 83582.0 | 9.168785566971385 |

| US | 2018-10-17 | 162530.0 | 9.166297130803295 |

| US | 2018-01-29 | 74409.0 | 9.165463018121253 |

| US | 2018-10-01 | 91419.0 | 9.164545494171007 |

| US | 2018-09-11 | 136572.0 | 9.15999958005388 |

| US | 2018-09-13 | 98737.0 | 9.135031807104763 |

| US | 2018-09-20 | 94641.0 | 9.1131085454451 |

| US | 2018-08-14 | 100562.0 | 9.086111098303014 |

| US | 2018-12-12 | 105318.0 | 9.07701927006876 |

| US | 2018-10-18 | 105008.0 | 9.07105536439216 |

| US | 2018-09-16 | 58808.0 | 9.068928377052954 |

| US | 2018-07-22 | 53621.0 | 9.03136550260501 |

| US | 2018-09-19 | 109304.0 | 9.02291316076032 |

| US | 2018-09-17 | 114986.0 | 9.011986284625573 |

| US | 2018-11-21 | 155573.0 | 8.964136020432443 |

| US | 2018-07-24 | 120368.0 | 8.961021999752822 |

| US | 2018-11-20 | 143903.0 | 8.948148860693312 |

| US | 2018-07-25 | 96753.0 | 8.903259696521433 |

| US | 2018-09-12 | 137739.0 | 8.89094263258328 |

| US | 2018-09-30 | 40105.0 | 8.884283633004982 |

| SA | 2018-10-22 | 116446.0 | 8.871146358262825 |

| US | 2018-03-29 | 128571.0 | 8.867239930535263 |

| US | 2018-11-22 | 71274.0 | 8.862707918296168 |

| US | 2018-01-28 | 32053.0 | 8.861748688711822 |

| US | 2018-07-21 | 47326.0 | 8.843829167925959 |

| US | 2018-06-15 | 120982.0 | 8.803902974212228 |

| US | 2018-07-26 | 108648.0 | 8.789778666129655 |

| US | 2018-09-10 | 64536.0 | 8.773721997000349 |

| US | 2018-06-02 | 58290.0 | 8.771469892758835 |

| US | 2018-10-16 | 108976.0 | 8.73169661970348 |

| US | 2018-08-03 | 104387.0 | 8.658030568001163 |

| US | 2018-01-24 | 96205.0 | 8.655917482539987 |

| US | 2018-06-05 | 109023.0 | 8.612918973780738 |

| US | 2018-12-17 | 67917.0 | 8.544869280804166 |

| US | 2018-09-18 | 109802.0 | 8.507111780063742 |

| US | 2018-01-25 | 118254.0 | 8.477194938534513 |

| US | 2018-12-15 | 104170.0 | 8.464919580230465 |

| US | 2018-12-11 | 126676.0 | 8.425076797784937 |

| SA | 2018-10-23 | 116063.0 | 8.419627261595597 |

| US | 2018-06-08 | 74082.0 | 8.414149921650191 |

| US | 2018-06-07 | 136925.0 | 8.39927491215378 |

| US | 2018-06-06 | 139956.0 | 8.382578756634912 |

| US | 2018-12-19 | 95718.0 | 8.377184827957707 |

| US | 2018-08-13 | 110885.0 | 8.360822317511655 |

| US | 2018-07-27 | 121914.0 | 8.333560734686925 |

| US | 2018-12-16 | 63635.0 | 8.331461551103787 |

| US | 2018-07-04 | 70095.0 | 8.32111855384647 |

| US | 2018-07-08 | 21576.0 | 8.270515717802605 |

| US | 2018-08-12 | 41224.0 | 8.260381248715797 |

| US | 2018-07-05 | 138007.0 | 8.213212176546335 |

| US | 2018-07-20 | 139561.0 | 8.141047527671692 |

| US | 2018-06-14 | 103210.0 | 8.087608708508885 |

| US | 2018-08-01 | 91525.0 | 8.086482656388128 |

| US | 2018-07-30 | 74297.0 | 8.041106926485059 |

| US | 2018-07-28 | 43171.0 | 7.986820092762175 |

| US | 2018-07-09 | 49654.0 | 7.96962346963408 |

| US | 2018-11-23 | 56158.0 | 7.927292251020463 |

| US | 2018-07-29 | 45458.0 | 7.914141074400271 |

| US | 2018-06-09 | 41721.0 | 7.891870265789757 |

| US | 2018-08-02 | 117781.0 | 7.887046314111948 |